🚨 🆕 Preprint 🚨

How does the brain represent natural images?

Using MEG + multivariate analysis, we disentangle contributions of retinotopy, spatial frequency, shape, and texture

Together, our results reveal how visual features jointly and dynamically support human object recognition.

link 👇

13.12.2025 17:38 — 👍 39 🔁 12 💬 1 📌 0

🧠✨ Exciting new research alert! ✨🧠

Did you know that catecholamines can reduce choice history biases in perceptual decision-making? 🧐🔍

Paper: journals.plos.org/plosbiology/...

With @donnerlab.bsky.social and @swammerdamuva.bsky.social

05.09.2025 08:05 — 👍 22 🔁 8 💬 1 📌 0

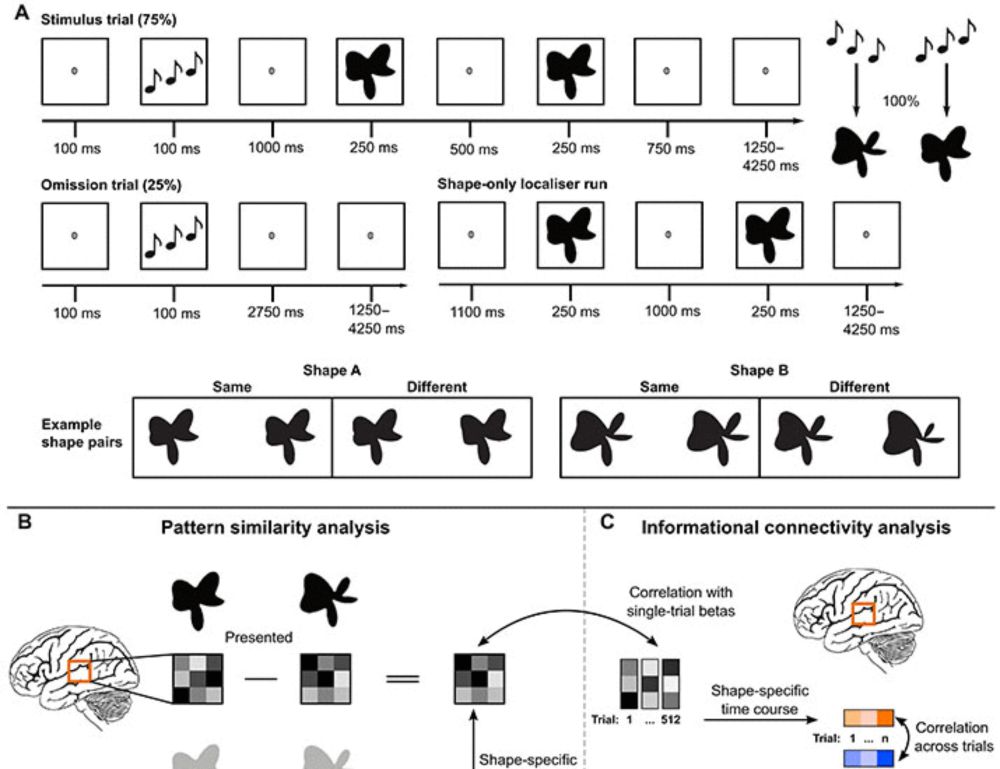

Contents of visual predictions oscillate at alpha frequencies

Predictions of future events have a major impact on how we process sensory signals. However, it remains unclear how the brain keeps predictions online in anticipation of future inputs. Here, we combin...

@dotproduct.bsky.social's first first author paper is finally out in @sfnjournals.bsky.social! Her findings show that content-specific predictions fluctuate with alpha frequencies, suggesting a more specific role for alpha oscillations than we may have thought. With @jhaarsma.bsky.social. 🧠🟦 🧠🤖

21.10.2025 11:05 — 👍 112 🔁 44 💬 7 📌 3

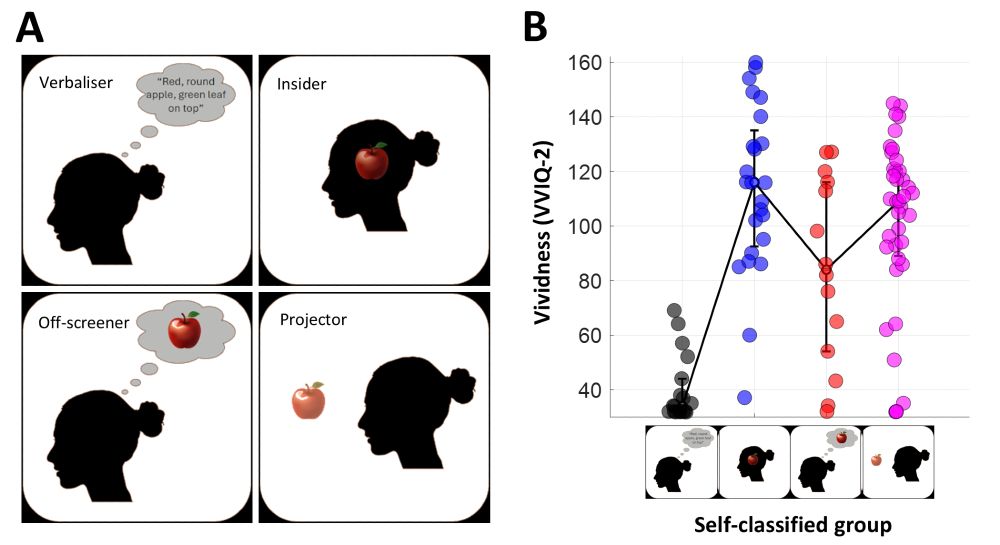

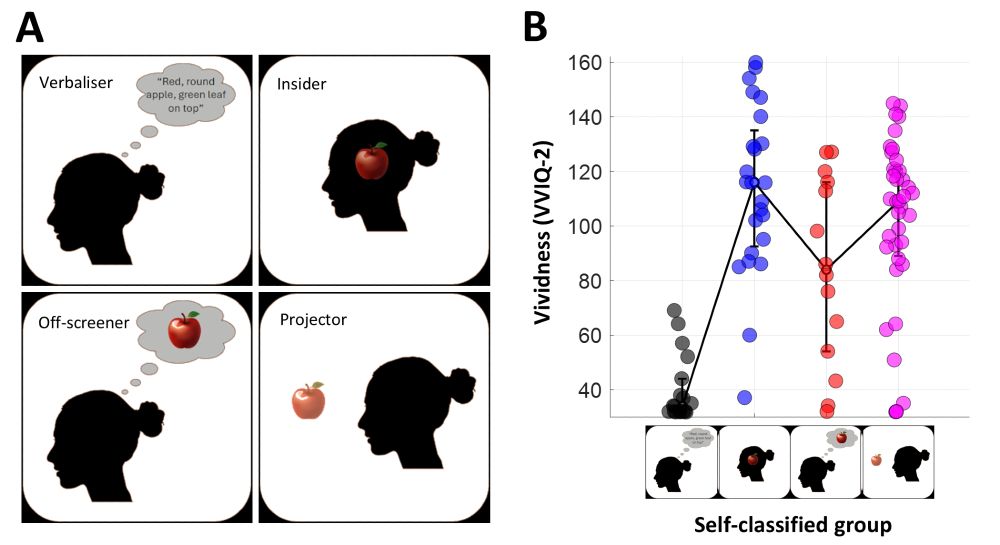

Long time in the making: our preprint of a survey study on the diversity in how people seem to experience #mentalimagery. Suggests #aphantasia should be redefined as absence of depictive thought, not merely "not seeing". Some more take home msg:

#psychskysci #neuroscience

doi.org/10.1101/2025...

02.10.2025 18:10 — 👍 112 🔁 35 💬 11 📌 2

Really enjoyed my weekend read on 𝐚𝐜𝐭𝐢𝐯𝐞 𝐟𝐢𝐥𝐭𝐞𝐫𝐢𝐧𝐠: local recurrence amplifies natural input patterns and suppresses stray activity. This review beautifully argues that sensory cortex itself is a site of memory and prediction. Food for thought on hallucinations!

#neuroskyence #neuroscience

27.09.2025 15:12 — 👍 46 🔁 14 💬 0 📌 1

✨ Meet our speakers! ✨

Among our speakers this year at #SNS2025 we have Marlene Cohen (@marlenecohen.bsky.social), from University of Chicago

Read the abstract here 💬 👇

meg.medizin.uni-tuebingen.de/sns_2025/abs...

#neuroskyence

29.09.2025 11:16 — 👍 3 🔁 3 💬 0 📌 0

✨ Meet our speakers! ✨

Among our speakers this year at #SNS2025 we have Floris de Lange (@predictivebrain.bsky.social)

Read the abstract here 💬 👇

meg.medizin.uni-tuebingen.de/sns_2025/abs...

#neuroskyence

26.09.2025 08:24 — 👍 13 🔁 6 💬 0 📌 0

✨ Meet our speakers! ✨

Among our speakers this year at #SNS2025 we have Tim Kietzmann (@timkietzmann.bsky.social)

Read the abstract here 💬 👇

meg.medizin.uni-tuebingen.de/sns_2025/abs...

#neuroskyence #compneurosky #NeuroAI

25.09.2025 16:12 — 👍 12 🔁 4 💬 0 📌 0

An array of 9 purple discs on a blue background. Figure from Hinnerk Schulz-Hildebrandt.

A nice shift in perceived colour between central and peripheral vision. The fixated disc looks purple while the others look blue.

The effect presumably comes from the absence of S-cones in the fovea.

From Hinnerk Schulz-Hildebrandt:

arxiv.org/pdf/2509.115...

24.09.2025 10:16 — 👍 703 🔁 263 💬 31 📌 40

✨ Meet our speakers! ✨

Among our speakers this year at #SNS2025 we also have Sylvia Schröder (@sylviaschroeder.bsky.social), from University of Sussex

Read the abstract here 💬 👇

meg.medizin.uni-tuebingen.de/sns_2025/abs...

#neuroskyscience

22.09.2025 12:19 — 👍 3 🔁 3 💬 0 📌 0

a woman in front of a white board with the words take your time written on it

📢 Deadline extended! 📢

The registration deadline for #SNS2025 has been extended to Sunday, September 28th!

Register here 👉 meg.medizin.uni-tuebingen.de/sns_2025/reg...

PS: Students of the GTC (Graduate Training Center for Neuroscience) in Tübingen can earn 1 CP for presenting a poster! 👀

17.09.2025 13:11 — 👍 6 🔁 6 💬 0 📌 0

✨ Meet our speakers! ✨

Among our speakers this year at #SNS2025 we have Simone Ebert (@simoneebert.bsky.social) & Jan Lause (@janlause.bsky.social), from Hertie AI Institute, University of Tübingen

Read the abstract here 💬 👇

meg.medizin.uni-tuebingen.de/sns_2025/abs...

#neuroskyscience

16.09.2025 18:06 — 👍 10 🔁 5 💬 0 📌 0

✨ Meet our speakers! ✨

Next speaker to present is Arthur Lefevre, from University of Lyon

Read the abstract here 💬 👇

meg.medizin.uni-tuebingen.de/sns_2025/abs...

#neuroscience #neuroskyscience

15.09.2025 10:20 — 👍 5 🔁 3 💬 0 📌 0

✨ Meet our speakers! ✨

Next speaker to present is Mara Wolter, PhD student at the University of Tübingen in the Yulia Oganian lab

Read the abstract here 💬 👇

meg.medizin.uni-tuebingen.de/sns_2025/abs...

12.09.2025 09:39 — 👍 5 🔁 3 💬 0 📌 0

🔵 Tübingen SNS2025 🔵

Over the next few days, we’ll be introducing you to the brilliant scientists who will be delivering their talks at #SNS2025!

✨ Get ready to meet our speakers! ✨

We are starting with Liina Pylkkänen, from New York University

💬 meg.medizin.uni-tuebingen.de/sns_2025/abs...

11.09.2025 08:30 — 👍 9 🔁 6 💬 1 📌 0

Great work from a great team. Congrats guys!🎉

09.09.2025 10:42 — 👍 5 🔁 0 💬 0 📌 0

Not long to go now! For those of you who enjoy a more intimate conference with a chance to get to know your favourite speakers I would highly recommend this right here. Reach out if you have any questions :)

29.08.2025 08:07 — 👍 3 🔁 1 💬 0 📌 0

Can't wait to see this fantastic line-up 🤩

09.07.2025 09:48 — 👍 3 🔁 0 💬 0 📌 0

Can humans use artificial limbs for body augmentation as flexibly as their own hands?

🚨 Our new interdisciplinary study put this question to the test with the Third Thumb (@daniclode.bsky.social), a robotic extra digit you control with your toes!

www.biorxiv.org/content/10.1...

🧵1/10

07.07.2025 15:46 — 👍 18 🔁 8 💬 1 📌 2

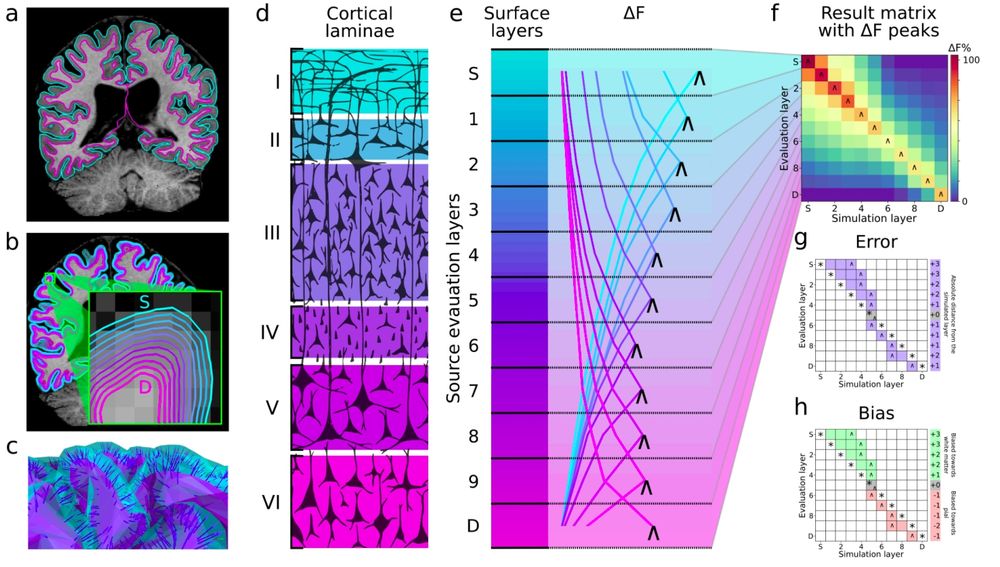

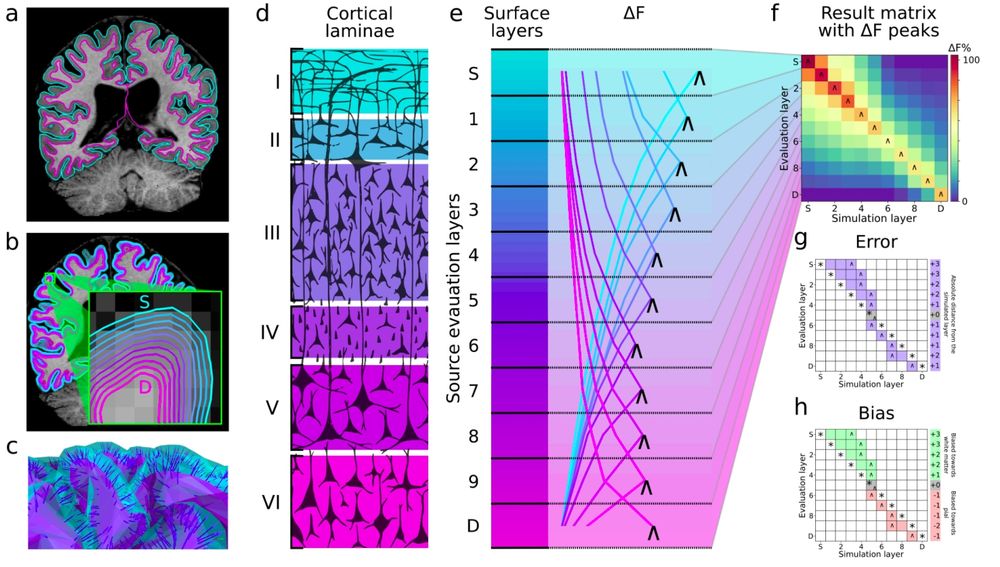

Overview of the simulation strategy and analysis. a) Pial and white matter boundaries surfaces are extracted from anatomical MRI volumes. b) Intermediate equidistant surfaces are generated between the pial and white matter surfaces (labeled as superficial (S) and deep (D) respectively). c) Surfaces are downsampled together, maintaining vertex correspondence across layers. Dipole orientations are constrained using vectors linking corresponding vertices (link vectors). d) The thickness of cortical laminae varies across the cortical depth (70–72), which is evenly sampled by the equidistant source surface layers. e) Each colored line represents the model evidence (relative to the worst model, ΔF) over source layer models, for a signal simulated at a particular layer (the simulated layer is indicated by the line color). The source layer model with the maximal ΔF is indicated by “˄”. f) Result matrix summarizing ΔF across simulated source locations, with peak relative model evidence marked with “˄”. g) Error is calculated from the result matrix as the absolute distance in mm or layers from the simulated source (*) to the peak ΔF (˄). h) Bias is calculated as the relative position of a peak ΔF (˄) to a simulated source (*) in layers or mm.
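The error and bias measures in panels g) and h) can be illustrated with a small sketch. This is not the authors' code: the function name `peak_error_and_bias`, the toy ΔF matrix, the optional `layer_spacing_mm` argument, and the sign convention (index 0 is the most superficial layer, so a positive bias means the peak lies deeper than the simulated source) are all assumptions made for illustration. It also assumes the simulated layers and the source layer models share the same indexing.

```python
import numpy as np

def peak_error_and_bias(delta_f, layer_spacing_mm=None):
    """Hypothetical helper: error and bias per simulated layer.

    delta_f[i, j] is the relative model evidence of source layer model j
    for a signal simulated at layer i (rows and columns share one indexing).
    """
    delta_f = np.asarray(delta_f, dtype=float)
    simulated = np.arange(delta_f.shape[0])   # simulated source layer (*)
    peak = np.argmax(delta_f, axis=1)         # layer model with maximal ΔF (˄)
    bias = peak - simulated                   # signed: relative position of the peak
    error = np.abs(bias)                      # unsigned: absolute distance to the peak
    if layer_spacing_mm is not None:          # assumed uniform spacing, converts layers to mm
        bias = bias * layer_spacing_mm
        error = error * layer_spacing_mm
    return error, bias

# Toy 3-layer result matrix (made-up numbers, not from the preprint).
toy_delta_f = [[9, 3, 0],
               [0, 4, 7],
               [0, 2, 8]]
error_layers, bias_layers = peak_error_and_bias(toy_delta_f)
```

In this toy case only the middle simulated layer is mislocalised, by one layer towards the deep side under the assumed sign convention.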

🚨🚨🚨PREPRINT ALERT🚨🚨🚨

Neural dynamics across cortical layers are key to brain computations - but non-invasively, we’ve been limited to rough "deep vs. superficial" distinctions. What if we told you that it is possible to achieve full (TRUE!) laminar (I, II, III, IV, V, VI) precision with MEG!

02.06.2025 11:54 — 👍 112 🔁 45 💬 4 📌 8

It's gotta be a Zelda playlist for me - those games trained me to problem-solve to that music 😆

28.05.2025 12:19 — 👍 1 🔁 0 💬 1 📌 0

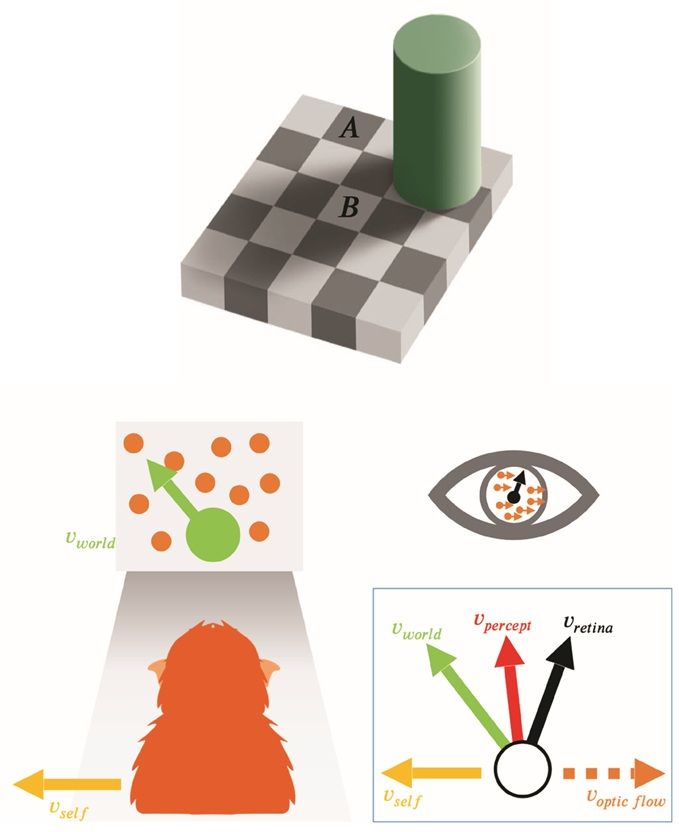

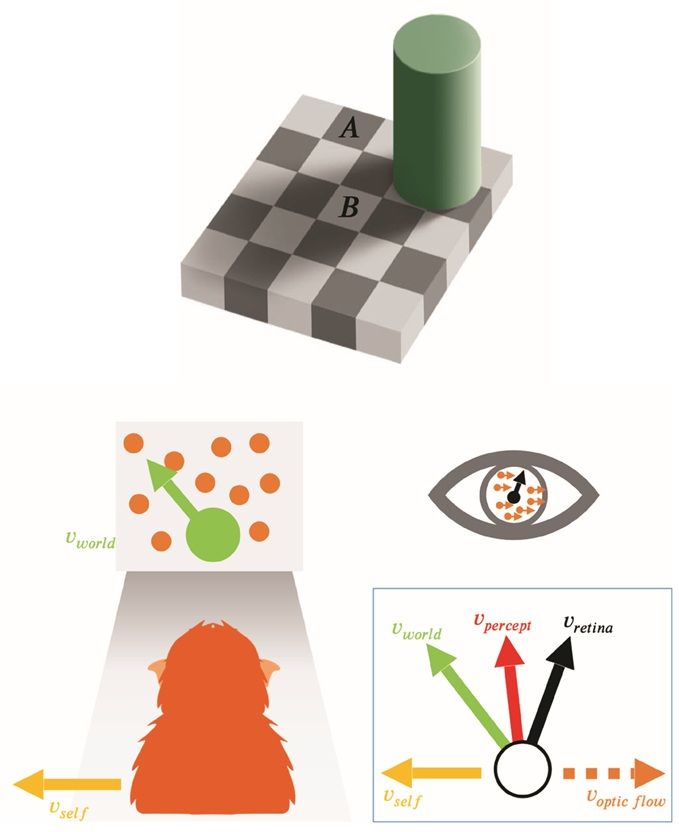

Two examples of how contextual information can bias visual perception. Top: Luminance illusion created by shadows (source: https://persci.mit.edu/gallery/checkershadow). Square B looks brighter than square A but has the same luminance, i.e., they have identical grayscale values in the picture. Bottom: Perception of object motion is biased by self-motion. The combination of leftward self-motion and up-left object motion in the world produces retinal motion that is up-right. If the animal partially subtracts the optic flow vector (orange dashed arrow) generated by self-motion (yellow arrow) from the image motion on the retina (black arrow), they may have a biased perception of object motion (red arrow) that lies between retinal and world coordinates (green arrow).

Rewarding animals to accurately report their subjective #percept is challenging. This study formalizes this problem and overcomes it with a #Bayesian method for estimating an animal’s subjective percept in real time during the experiment @plosbiology.org 🧪 plos.io/3HaxiuB

27.05.2025 18:07 — 👍 12 🔁 2 💬 0 📌 0

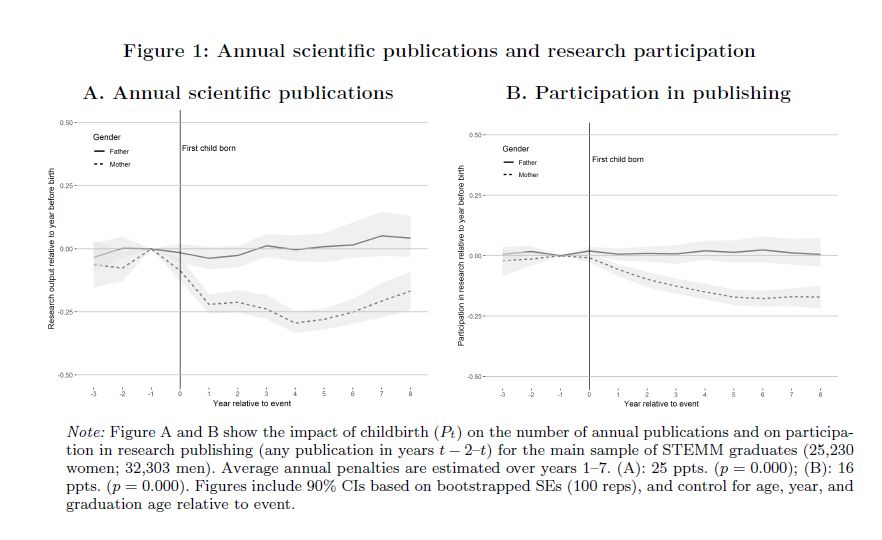

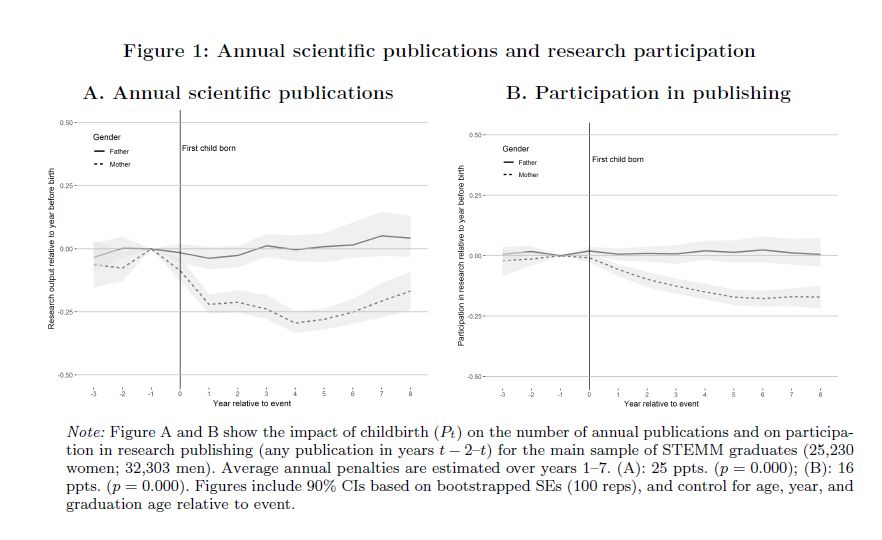

🚨 New WP! 📄 "Publish or Procreate: The Effect of Motherhood on Research Performance" (w/ @valentinatartari.bsky.social)

👩‍🔬👨‍🔬 We investigate how parenthood affects scientific productivity and impact, and find that the impact is far from equal for mothers and fathers.

22.05.2025 08:03 — 👍 203 🔁 99 💬 2 📌 7

Press release on our new paper from @hih-tuebingen.bsky.social 🧠🥳

Link: www.nature.com/articles/s42...

Thread: bsky.app/profile/migh...

#neuroskyence #compneurosky #magnetoencephalography

26.05.2025 11:58 — 👍 17 🔁 3 💬 0 📌 0

The members of the Cluster of Excellence "Machine Learning: New Perspectives for Science" raise their glasses and celebrate securing another funding period.

We're super happy: Our Cluster of Excellence will continue to receive funding from the German Research Foundation @dfg.de ! Here’s to 7 more years of exciting research at the intersection of #machinelearning and science! Find out more: uni-tuebingen.de/en/research/... #ExcellenceStrategy

22.05.2025 16:23 — 👍 74 🔁 20 💬 4 📌 5

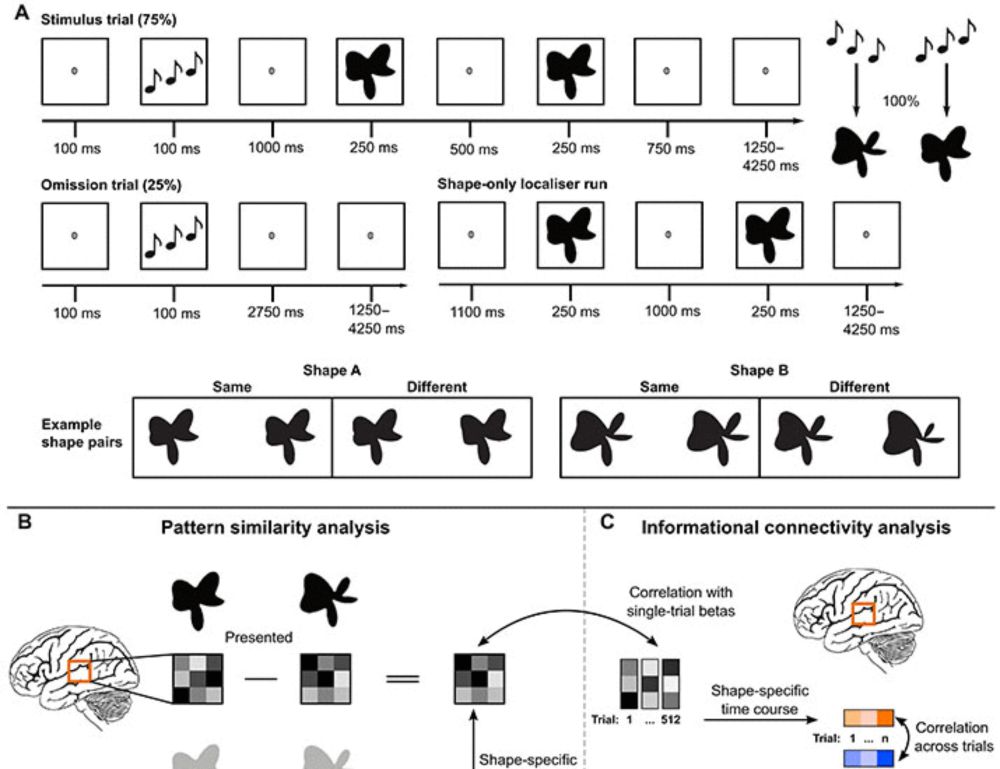

Communication of perceptual predictions from the hippocampus to the deep layers of the parahippocampal cortex

High-resolution neuroimaging reveals stimulus-specific predictions sent from hippocampus to the neocortex during perception.

Our study using layer fMRI to study the direction of communication between the hippocampus and cortex during perceptual predictions is finally out in Science Advances! Predicted-but-omitted shapes are represented in CA2/3 and correlate specifically with deep layers of PHC, suggesting feedback. 🧠🟦

22.05.2025 01:55 — 👍 166 🔁 53 💬 4 📌 1

The goal of our research is to understand how brain states shape decision-making, and how this process goes awry in certain neurological & psychiatric disorders

| tobiasdonner.net | University Medical Center Hamburg-Eppendorf, Germany

Can't read minds but learning to read brain scans! | Psychology & Cognitive Neuroscience | PhD candidate | multisensory perception & perceptual neurodiversity | synesthesiologist | views my own | she/her | cdfine.github.io

At Cerca, we offer the world’s most advanced functional brain scanner – an integrated lightweight, ergonomic and wearable device to revolutionise neuroimaging.

University of Nottingham spin-out company.

https://direct.mit.edu/imag

PhD student at University of Tübingen. Trying to understand how the brain integrates multiple sources of uncertainty to form a decision. 🧠

gabrielaiwama.github.io

neuroscientist in Korea (co-director of IBS-CNIR) interested in how neuroimaging (e.g. fMRI or widefield optical imaging) can facilitate closed-loop causal interventions (e.g. neurofeedback, patterned stimulations). https://tinyurl.com/hakwan

Assistant professor in cognitive (neuro)science at Utrecht University (The Netherlands), interested in consciousness, working memory, attention, and perception.

Head of the CAP-Lab: http://www.cap-lab.net

ORCID: 0000-0001-9728-1272

searching for principles, adaptive behaviors, empirical approach, integration | doing comp neuroscience & psychiatry | PI @cmc-lab.bsky.social | previously @maxplanckcampus.bsky.social (Peter Dayan & Nikos Logothetis)

I am a PhD student working at the intersection of neuroscience and machine learning.

My work focuses on learning dynamics in biologically plausible neural networks. #NeuroAI

Official account of the Perception and i-Perception journals.

https://journals.sagepub.com/home/pec

https://journals.sagepub.com/home/ipe

Visual (and) circadian neuroscience (Max Planck / Technical University of Munich)

ML meets Neuroscience #NeuroAI, Full Professor at the Institute of Cognitive Science (Uni Osnabrück), prev. @ Donders Inst., Cambridge University

Neuroscientist, Henry Dale Fellow/Group Leader at University of Sussex, UK. Researching why and how behaviour influences visual processing.

Professor of Linguistics and Psychology, New York University

Max Planck group leader at ESI Frankfurt | human cognition, fMRI, MEG, computation | sciences with the coolest (phd) students et al. | she/her

A project highlighting the stories and careers of women in neuroscience 👩🏾‍🔬👩‍🔬👩🏿‍🔬👩🏻‍🔬

Podcast here http://linktr.ee/storiesofwin

Environmental advocate. Check out my podcast >>> @ministryoffilmpod

PhD student in the Object Vision Group at CIMeC, University of Trento. Interested in neuroimaging and object perception. He/him 🏳️‍🌈

https://davidecortinovis-droid.github.io/