🎉 Excited to share: "𝐌𝐢𝐱𝐭𝐮𝐫𝐞-𝐨𝐟-𝐓𝐫𝐚𝐧𝐬𝐟𝐨𝐫𝐦𝐞𝐫𝐬 (𝐌𝐨𝐓)" has been officially accepted to TMLR (March 2025) and the code is now open-sourced!

📌 GitHub repo: github.com/facebookrese...

📄 Paper: arxiv.org/abs/2411.04996

@liang-weixin.bsky.social

🚨 Our new study reveals widespread LLM adoption across society:

📊 By late 2024, LLMs assist in writing:

- 18% of financial consumer complaints

- 24% of corporate press releases

- Up to 15% of job postings (esp. in small/young firms)

- 14% of UN press releases

arxiv.org/abs/2502.09747

Joint work with Junhong Shen, Genghan Zhang (@zhang677.bsky.social), Ning Dong, Luke Zettlemoyer, Lili Yu

#LLM #MultiModal #pretraining

✅ Mamba w/ Chameleon setting (discrete tokens): Dense-level image and text quality at 42.5% and 65.4% of the FLOPs, respectively

✅ Mamba w/ three modalities (image, text, speech): Dense-level speech quality at 24.8% of the FLOPs

Takeaway:

Modality-aware sparsity isn’t just for Transformers; it thrives in state-space models (SSMs) like Mamba too! 🐍

🚀 Want 2x faster pretraining for your multi-modal LLM?

🧵 Following up on Mixture-of-Transformers (MoT), we're excited to share Mixture-of-Mamba (MoM)!

✅ Mamba w/ Transfusion setting (image + text): Dense-level performance with just 34.76% of the FLOPs

Full paper: 📚 arxiv.org/abs/2501.16295

w/ Genghan Zhang (zhang677.github.io), Olivia Hsu (weiya711.github.io), and Prof. Kunle Olukotun (arsenalfc.stanford.edu/kunle/)

06.02.2025 04:42

2/ Our system learns through curriculum-based experience, programming complex ML operators like attention and MoE from scratch.

3/ Bonus: We're releasing a clean benchmark with STeP, a new programming language never seen in training data. A true test of reasoning ability! 🎯

📢 Can LLMs program themselves to run faster? 🏃⏱️

LLM self-taught to code for next-gen AI hardware!

arxiv.org/abs/2502.02534

1/ Programming AI accelerators is a major bottleneck in ML. Our self-improving LLM agent learns to write optimized code for new hardware, achieving 3.9x better results.

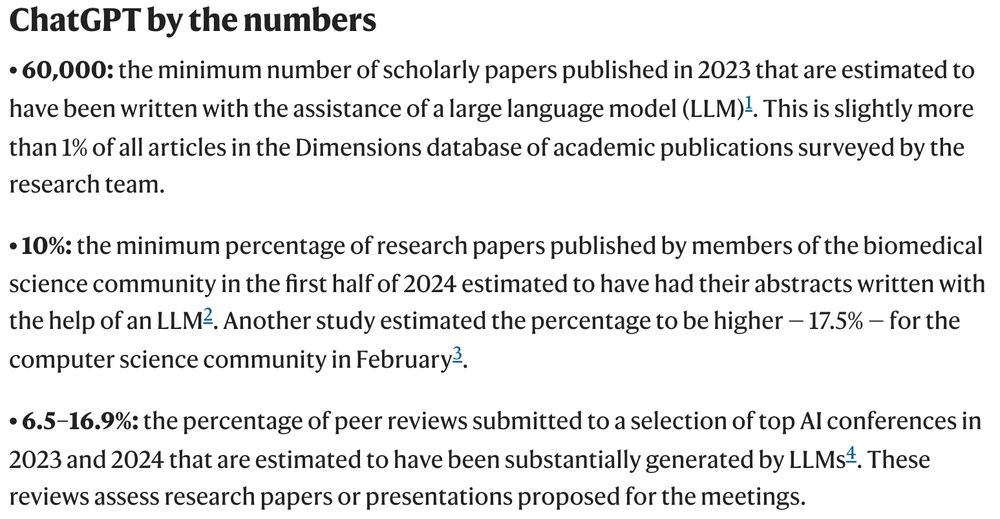

Honored that #Nature has highlighted our work again in their latest piece examining #ChatGPT's transformative impact on scientific research and academia over the past two years. h/t @natureportfolio.bsky.social

www.nature.com/articles/d41...

*Our survey was opt-in and could have selection bias.

**Reviewers should still engage w/ papers independently w/o relying on LLMs.

(4/n)

Findings (cont.):

👎 GPT-4 can struggle to critique study methods in depth; its feedback is sometimes more generic.

Takeaway: high-quality human feedback is still necessary; #LLM feedback could help authors improve early drafts before official peer review**.

Collaborators: Yuhui, Hancheng, and many others 👏

(3/n)

Findings:

👍 Most authors found GPT-4-generated feedback helpful*

👍 >50% of the points raised by GPT-4 were also raised by ≥1 human reviewer.

👍 The overlap between GPT-4 and human feedback is similar to the overlap between two human reviewers.

(2/n)
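A rough sketch of how overlap numbers like these can be computed, assuming each review has already been reduced to a set of matched comment labels. The comment extraction and matching step is not shown, and the labels and the `hit_rate` helper are hypothetical. "Hit rate" here means the fraction of one reviewer's points that at least one other reviewer also raised:

```python
def hit_rate(points, other_reviews):
    """Fraction of `points` raised by at least one review in `other_reviews`."""
    if not points:
        return 0.0
    hits = sum(1 for p in points if any(p in review for review in other_reviews))
    return hits / len(points)


# Hypothetical, already-matched comment labels for a single paper.
gpt4 = {"novelty", "ablations", "baselines", "writing"}
human_a = {"ablations", "baselines", "reproducibility"}
human_b = {"novelty", "baselines", "statistics"}

# GPT-4 vs. humans: share of GPT-4 points that >= 1 human reviewer also raised.
gpt4_vs_humans = hit_rate(gpt4, [human_a, human_b])
# Human vs. human: share of reviewer A's points that reviewer B also raised.
human_vs_human = hit_rate(human_a, [human_b])

print(gpt4_vs_humans)            # 0.75
print(round(human_vs_human, 3))  # 0.333
```

Averaging per-paper rates like these over many papers yields aggregate figures of the kind reported above; the study's actual matching and aggregation procedure is described in the paper and is not reproduced here.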

How well can #GPT4 provide scientific feedback on research projects? We study this in: arxiv.org/abs/2310.01783

We created a pipeline that uses GPT-4 to read thousands of papers (from #Nature, #ICLR, etc.) and generate feedback (e.g., suggestions for improvement). We then compare this feedback with human expert reviews.

(1/n)

Our new study in #COLM2024 estimates that ~17% of recent CS arXiv papers used #LLMs substantially in their writing.

Around 8% for bioRxiv papers.

Paper: arxiv.org/abs/2404.01268 🧵

Excited that our paper quantifying the use of #LLMs in paper reviews was selected as an #ICML2024 oral (top 1.5% of submissions)! 🚀

Main results👇

proceedings.mlr.press/v235/liang24...

Media Coverage: The New York Times

nyti.ms/3vwQhdi

Excited to share that our recent work on LLM in peer review and responsible LLM use is featured in #Nature!

Many thanks to my collaborators for their insights and dedication to advancing fair and ethical AI practices in scientific publishing. #AI #PeerReview

www.nature.com/articles/d41...

Takeaway:

Modality-aware sparsity in MoT offers a scalable path to efficient, multi-modal AI with reduced pretraining costs.

Work of a great team with Lili Yu, Liang Luo, Srini Iyer, Ning Dong, Chunting Zhou, Gargi Ghosh, Mike Lewis, Scott Wen-tau Yih, Luke Zettlemoyer, Victoria Lin.

(6/n)

✅ System profiling shows MoT achieves dense-level image quality in 47% and text quality in 75.6% of the dense baseline's wall-clock time**

**Measured on AWS p4de.24xlarge instances with NVIDIA A100 GPUs.

(5/n)

✅ Transfusion setting (text autoregressive + image diffusion): MoT matches dense model quality using one-third of the FLOPs.

(4/n)

✅ Chameleon setting (text + image generation): Our 7B MoT matches dense baseline quality using just 55.8% of the FLOPs.

Extended to speech as a third modality, MoT achieves dense-level speech quality with only 37.2% of the FLOPs.

(3/n)
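The FLOPs and wall-clock figures in this thread are all reported as a fraction of the dense baseline's cost. A quick conversion into "% fewer" and an equivalent speedup factor shows, for example, that one-third of the FLOPs is about 66.7% fewer, in line with the "up to 66% fewer FLOPs" headline. This sketch assumes "speedup" simply means baseline cost divided by MoT cost to reach dense-level quality (not per-step throughput); the `savings` helper is illustrative only.

```python
def savings(fraction_of_baseline):
    """Convert a cost reported as a fraction of the dense baseline into
    (percent saved, equivalent speedup factor)."""
    if not 0.0 < fraction_of_baseline <= 1.0:
        raise ValueError("expected a fraction in (0, 1]")
    return (1.0 - fraction_of_baseline) * 100.0, 1.0 / fraction_of_baseline


# Fractions of the dense baseline's cost as reported in this thread.
reported = {
    "Chameleon 7B (FLOPs)": 0.558,
    "Chameleon + speech, speech quality (FLOPs)": 0.372,
    "Transfusion (FLOPs)": 1 / 3,
    "Image quality (wall-clock)": 0.47,
    "Text quality (wall-clock)": 0.756,
}

for name, frac in reported.items():
    saved, speedup = savings(frac)
    print(f"{name}: {saved:.1f}% fewer, {speedup:.2f}x")
```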

At Meta, we introduce Mixture-of-Transformers (MoT), a sparse architecture with modality-aware sparsity for every non-embedding transformer parameter (e.g., feed-forward networks, attention matrices, and layer normalization).

MoT achieves dense-level performance with up to 66% fewer FLOPs!

(2/n)
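A minimal pure-Python sketch of what modality-aware sparsity can look like in one transformer layer. It assumes (this thread does not say so) that tokens from all modalities still share one global causal self-attention, while every non-embedding weight (LayerNorm gains, Q/K/V/output projections, FFN) is duplicated per modality and selected by each token's modality label. Names such as `mot_layer` and `init_modality_params` are hypothetical; this is not the released MoT code, and it omits biases, multi-head attention, and Transfusion's bidirectional attention within images.

```python
import math
import random


def matvec(W, x):
    # W is a list of rows (out_dim x in_dim); returns W @ x as a list.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def layer_norm(x, gain, eps=1e-5):
    # LayerNorm without bias; `gain` is the (modality-specific) scale vector.
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [g * (v - mean) / math.sqrt(var + eps) for v, g in zip(x, gain)]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def init_modality_params(d, d_ff, rng):
    # Hypothetical parameter set for ONE modality: every non-embedding weight.
    return {
        "ln1": [1.0] * d, "ln2": [1.0] * d,
        "wq": rand_matrix(d, d, rng), "wk": rand_matrix(d, d, rng),
        "wv": rand_matrix(d, d, rng), "wo": rand_matrix(d, d, rng),
        "w1": rand_matrix(d_ff, d, rng), "w2": rand_matrix(d, d_ff, rng),
    }


def mot_layer(tokens, modalities, params):
    """tokens: list of d-dim vectors; modalities: one label per token;
    params: dict mapping modality label -> that modality's own weights."""
    d = len(tokens[0])
    # 1) Modality-specific pre-attention LayerNorm and Q/K/V projections.
    q, k, v = [], [], []
    for x, m in zip(tokens, modalities):
        p = params[m]
        h = layer_norm(x, p["ln1"])
        q.append(matvec(p["wq"], h))
        k.append(matvec(p["wk"], h))
        v.append(matvec(p["wv"], h))
    out = []
    for i, (x, m) in enumerate(zip(tokens, modalities)):
        p = params[m]
        # 2) Global causal attention: token i attends to ALL earlier tokens,
        #    regardless of their modality.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        ctx = [sum(w * v[j][t] for j, w in enumerate(weights)) for t in range(d)]
        x = [a + b for a, b in zip(x, matvec(p["wo"], ctx))]  # residual
        # 3) Modality-specific LayerNorm + ReLU feed-forward network.
        h = layer_norm(x, p["ln2"])
        ff = matvec(p["w2"], [max(0.0, z) for z in matvec(p["w1"], h)])
        out.append([a + b for a, b in zip(x, ff)])  # residual
    return out


# Usage: a 5-token sequence interleaving text and image tokens.
rng = random.Random(0)
d, d_ff = 4, 8
params = {m: init_modality_params(d, d_ff, rng) for m in ("text", "image")}
tokens = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(5)]
modalities = ["text", "text", "image", "image", "text"]
out = mot_layer(tokens, modalities, params)
print(len(out), len(out[0]))  # 5 4
```

Each token touches only its own modality's weights, so per-token compute matches a dense layer of the same width while total parameters grow with the number of modalities. In this sketch, re-initializing the image weights leaves the outputs of the first two text tokens unchanged, since they attend only to text tokens.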

How can we reduce pretraining costs for multi-modal models without sacrificing quality? We study this question in our new work: arxiv.org/abs/2411.04996

✅ MoT achieves dense-level 7B performance with up to 66% fewer FLOPs!