Hi, we will have three NeuroAI postdoc openings (3 years each, fully funded) to work with Sebastian Musslick (@musslick.bsky.social), Pascal Nieters and myself on task-switching, replay, and visual information routing.

Reach out if you are interested in any of the above. I'll be at CCN next week!

09.08.2025 08:13 — 👍 57 🔁 31 💬 0 📌 1

Do come and talk to us about any of the above and whatever #NeuroAI is on your mind. Excited for this upcoming #CCN2025, and looking forward to exchanging ideas with all of you.

All posters can be found here: www.kietzmannlab.org/ccn2025/

08.08.2025 14:20 — 👍 4 🔁 0 💬 0 📌 0

And last but not least, Fraser Smith's work on understanding how occluded objects are represented in visual cortex.

Time: Tuesday, August 12, 1:30 – 4:30 pm

Location: A66, de Brug & E‑Hall

08.08.2025 14:20 — 👍 6 🔁 0 💬 1 📌 0

Please also check out Songyun Bai's poster on further AVS findings that we were involved in: Neural oscillations encode context-based informativeness during naturalistic free viewing.

Time: Tuesday, August 12, 1:30 – 4:30 pm

Location: A165, de Brug & E‑Hall

08.08.2025 14:20 — 👍 4 🔁 0 💬 1 📌 0

Friday keeps on giving. Interested in representational drift in macaques? Then come check out Dan's (@anthesdaniel.bsky.social) work providing first evidence for a sequence of three different, yet comparatively stable clusters in V4.

Time: August 15, 2-5pm

Location: Poster C142, de Brug & E‑Hall

08.08.2025 14:20 — 👍 9 🔁 0 💬 1 📌 0

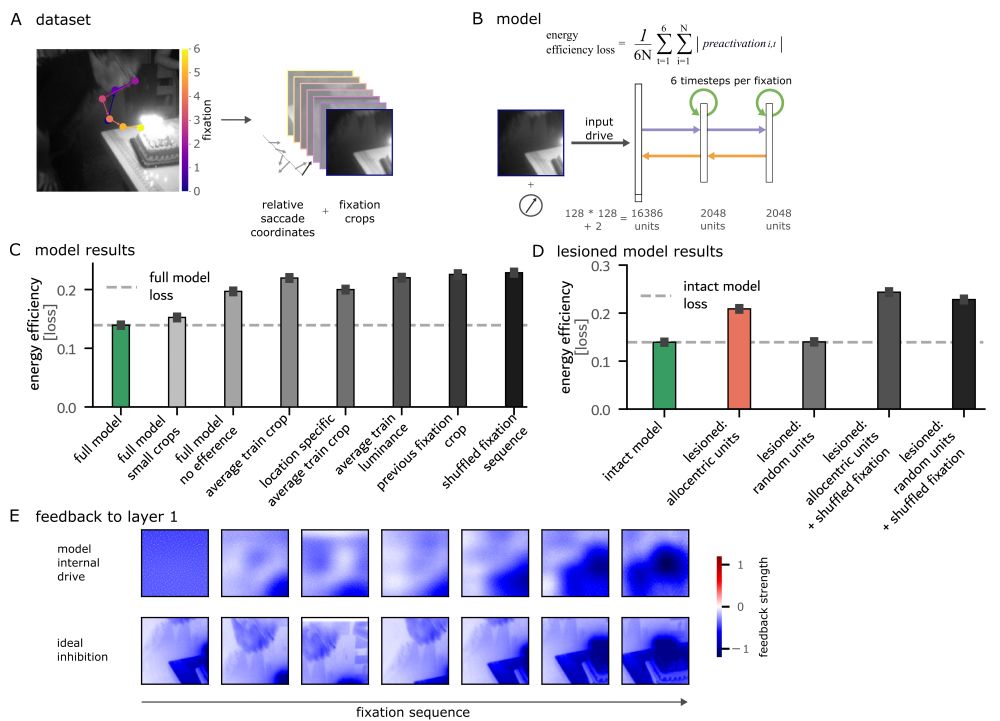

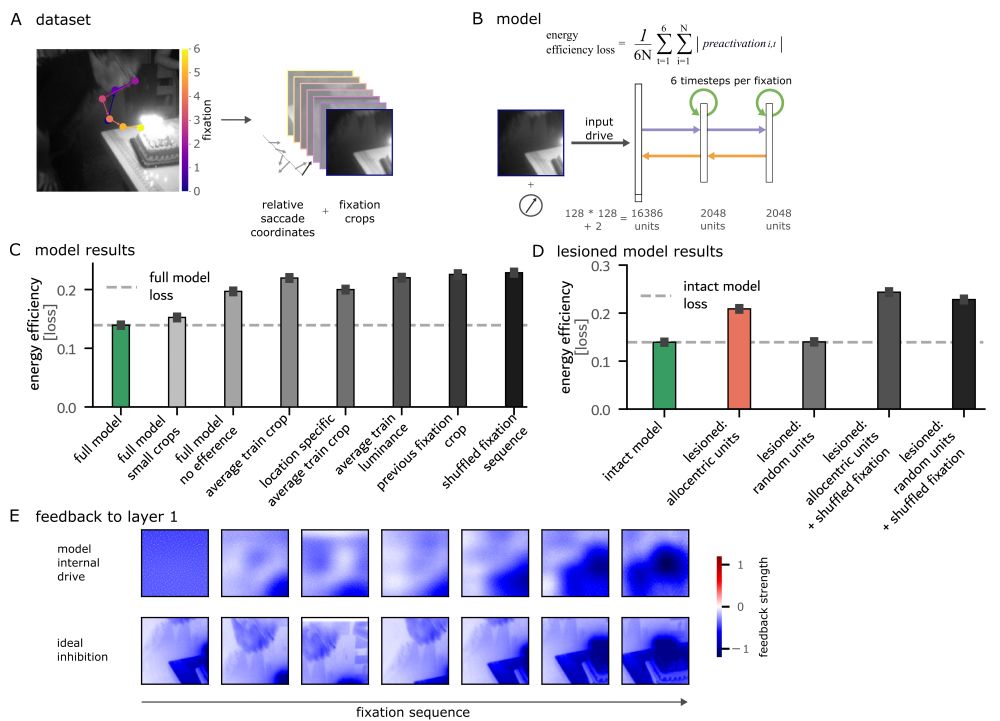

Another Friday feat: Philip Sulewski's (@psulewski.bsky.social) and @thonor.bsky.social's modelling work. Predictive remapping and allocentric coding as consequences of energy efficiency in RNN models of active vision

Time: Friday, August 15, 2:00 – 5:00 pm

Location: Poster C112, de Brug & E‑Hall

08.08.2025 14:20 — 👍 11 🔁 2 💬 1 📌 0

Also on Friday, Victoria Bosch (@initself.bsky.social) presents her superb work on fusing brain scans with LLMs.

CorText-AMA: brain-language fusion as a new tool for probing visually evoked brain responses

Time: 2 – 5 pm

Location: Poster C119, de Brug & E‑Hall

2025.ccneuro.org/poster/?id=n...

08.08.2025 14:20 — 👍 7 🔁 0 💬 1 📌 0

On Friday, Carmen @carmenamme.bsky.social has a talk & poster on exciting AVS analyses. Encoding of Fixation-Specific Visual Information: No Evidence of Information Carry-Over between Fixations

Talk: 12:00 – 1:00 pm, Room C1.04

Poster: C153, 2:00 – 5:00 pm, de Brug & E‑Hall

www.kietzmannlab.org/avs/

08.08.2025 14:20 — 👍 6 🔁 0 💬 1 📌 0

Also on Tuesday, Rowan Sommers will present our new WiNN architecture. Title: Sparks of cognitive flexibility: self-guided context inference for flexible stimulus-response mapping by attentional routing

Time: August 12, 1:30 – 4:30 pm

Location: A136, de Brug & E‑Hall

08.08.2025 14:20 — 👍 7 🔁 0 💬 1 📌 0

On Tuesday, Sushrut's (@sushrutthorat.bsky.social) Glimpse Prediction Networks will make their debut: a self-supervised deep learning approach for scene representations that align extremely well with the human ventral stream.

Time: August 12, 1:30 – 4:30 pm

Location: A55, de Brug & E‑Hall

08.08.2025 14:20 — 👍 12 🔁 1 💬 1 📌 2

In the "Modeling the Physical Brain" event, I will be speaking about our work on topographic neural networks.

Time: Monday, August 11, 11:30 am – 6:00 pm

Location: Room A2.07

Paper: www.nature.com/articles/s41...

08.08.2025 14:20 — 👍 10 🔁 0 💬 1 📌 0

First, @zejinlu.bsky.social will talk about how adopting a human developmental visual diet yields robust, shape-based AI vision. Biological inspiration for the win!

Talk Time/Location: Monday, 3-6 pm, Room A2.11

Poster Time/Location: Friday, 2-5 pm, C116 at de Brug & E‑Hall

08.08.2025 14:20 — 👍 10 🔁 0 💬 1 📌 1

OK, time for a CCN runup thread. Let me tell you about all the lab’s projects present at CCN this year. #CCN2025

08.08.2025 14:20 — 👍 35 🔁 9 💬 1 📌 0

A long time coming, now out in @natmachintell.nature.com: Visual representations in the human brain are aligned with large language models.

Check it out (and come chat with us about it at CCN).

07.08.2025 14:16 — 👍 18 🔁 0 💬 0 📌 0

For completeness' sake: we know the other team and cite both of their papers in the preprint.

26.07.2025 18:46 — 👍 1 🔁 0 💬 1 📌 0

Devil is in the details as usual.

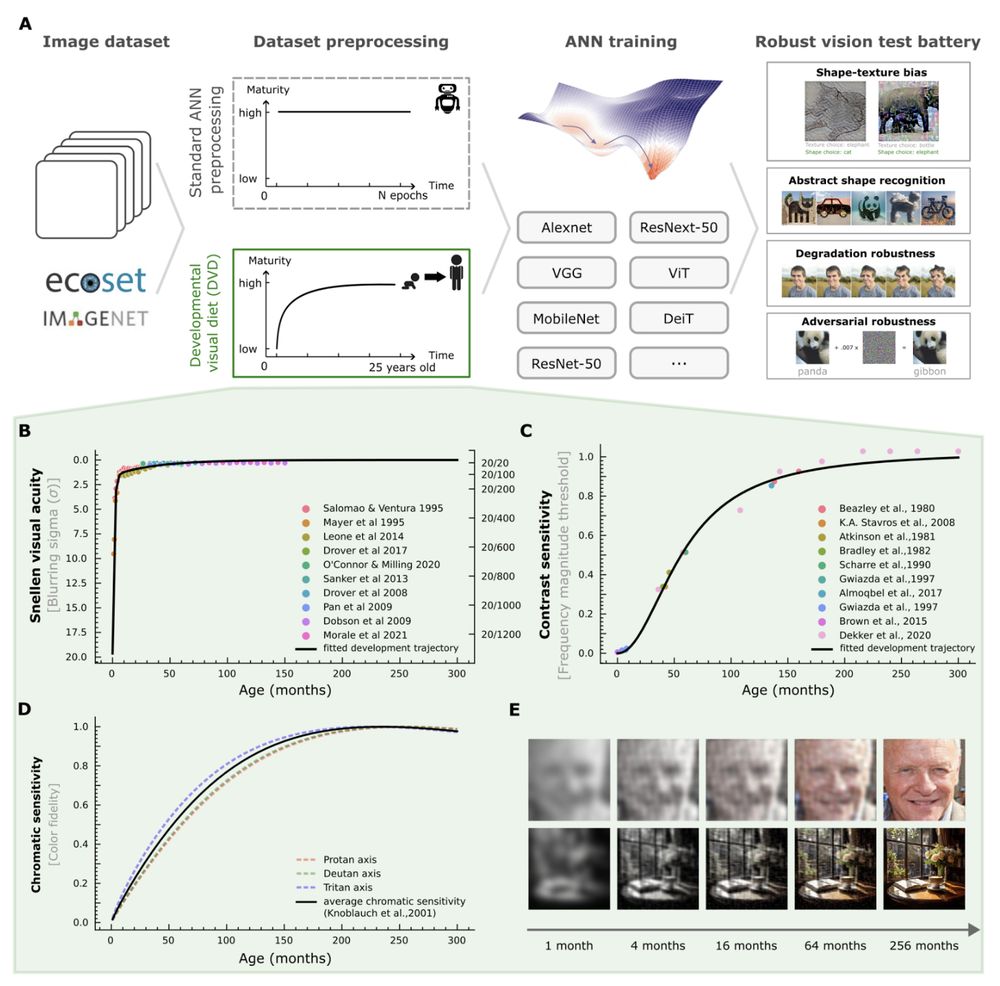

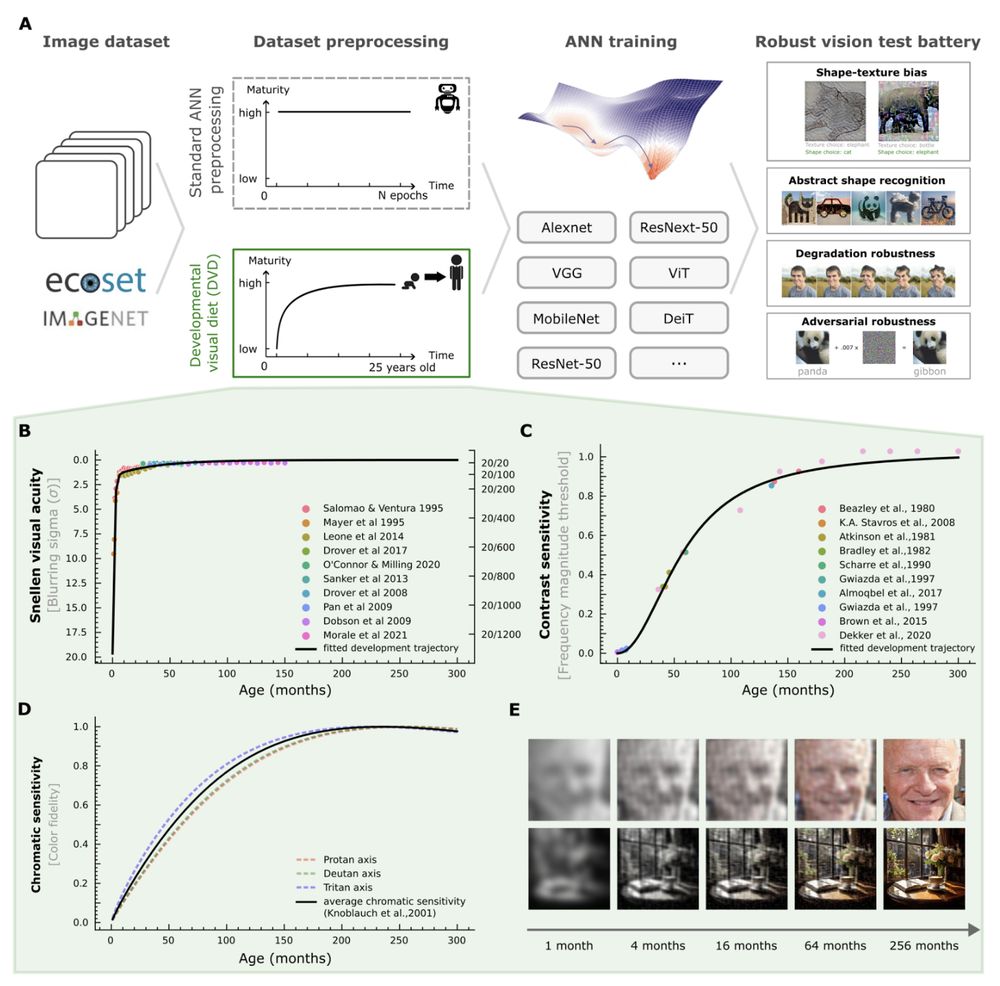

They (and others) focused on acuity, while we show that the actual gains do not come from acuity but the development of contrast sensitivity.

26.07.2025 18:44 — 👍 1 🔁 0 💬 2 📌 0

To be honest, so far it has exceeded our expectations across the board.

A big surprise was that visual acuity (i.e. initial blurring) had so little impact. This is what others had focused on in the past. Instead, the development of contrast sensitivity gets you most of the way there.

08.07.2025 21:00 — 👍 8 🔁 0 💬 1 📌 0

Exciting new preprint from the lab: “Adopting a human developmental visual diet yields robust, shape-based AI vision”. A most wonderful case where brain inspiration massively improved AI solutions.

Work with @zejinlu.bsky.social @sushrutthorat.bsky.social and Radek Cichy

arxiv.org/abs/2507.03168

08.07.2025 13:03 — 👍 139 🔁 59 💬 3 📌 10

Thank you!

08.07.2025 16:20 — 👍 1 🔁 0 💬 0 📌 0

We are incredibly excited about this because DVD may offer a resource-efficient path towards safer, more human-like AI vision — and suggests that biology, neuroscience, and psychology have much to offer in guiding the next generation of artificial intelligence. #NeuroAI #AI /fin

08.07.2025 13:03 — 👍 10 🔁 1 💬 2 📌 0

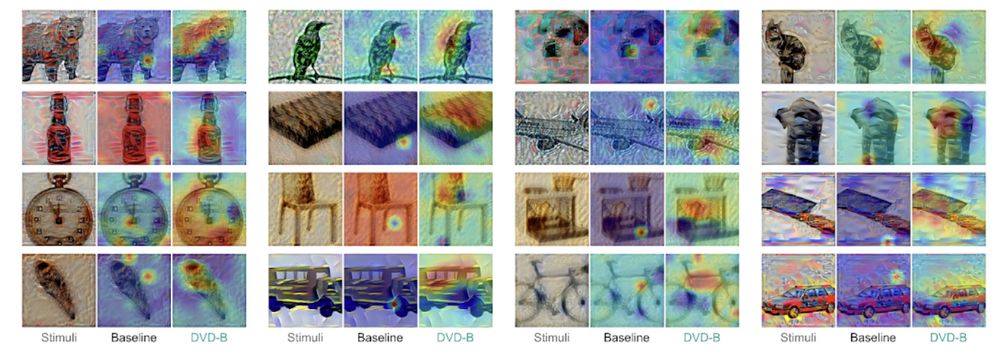

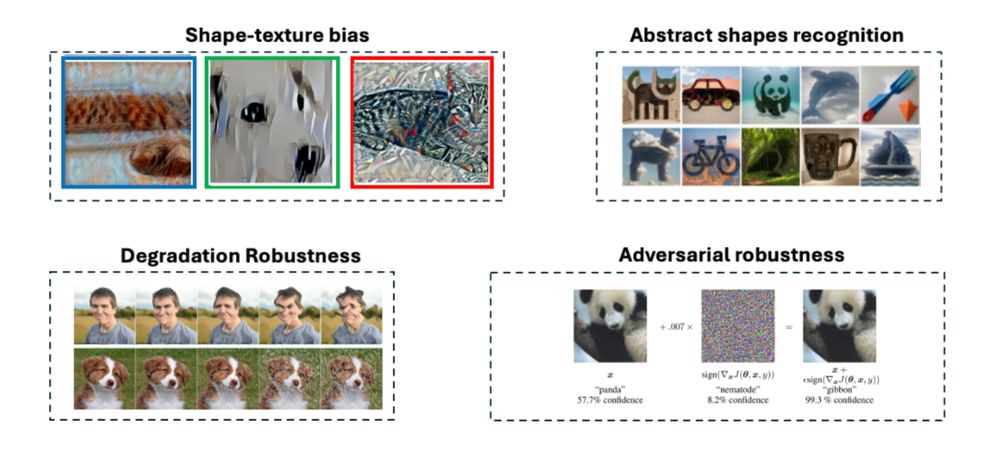

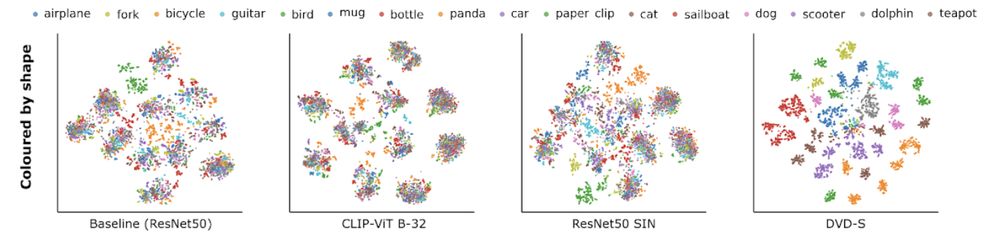

In summary, DVD-training yields models that rely on a fundamentally different feature set, shifting from distributed local textures to integrative, shape-based features as the foundation for their decisions. 9/

08.07.2025 13:03 — 👍 7 🔁 1 💬 1 📌 0

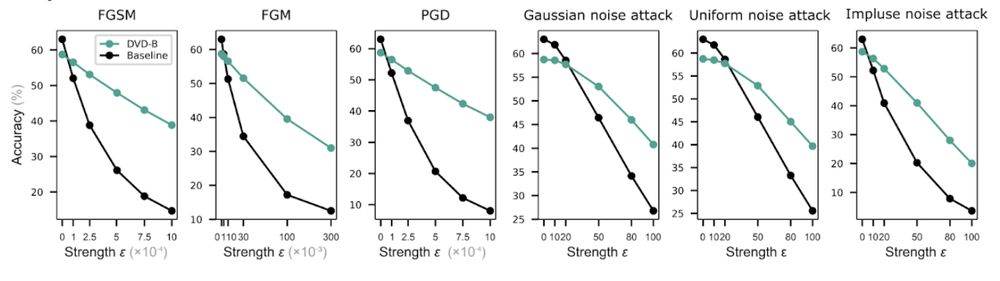

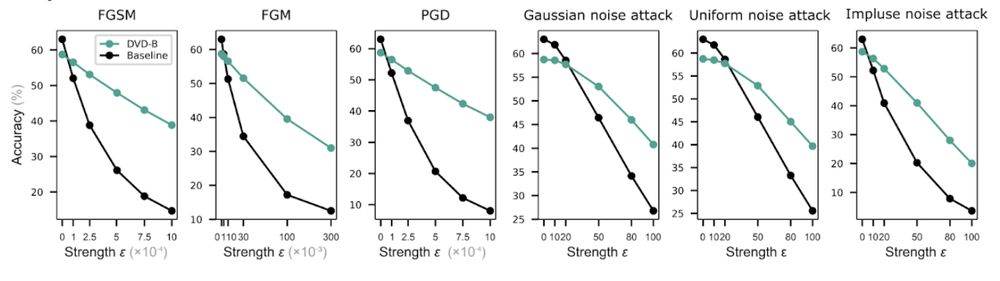

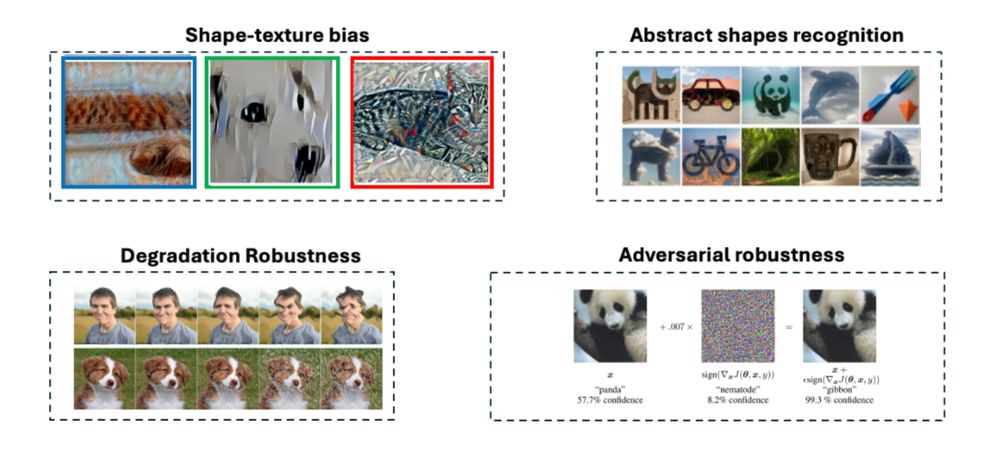

Result 4: How about adversarial robustness? DVD-trained models also showed greater resilience to all black- and white-box attacks tested, performing 3–5 times better than baselines under high-strength perturbations. 8/

08.07.2025 13:03 — 👍 3 🔁 0 💬 1 📌 0

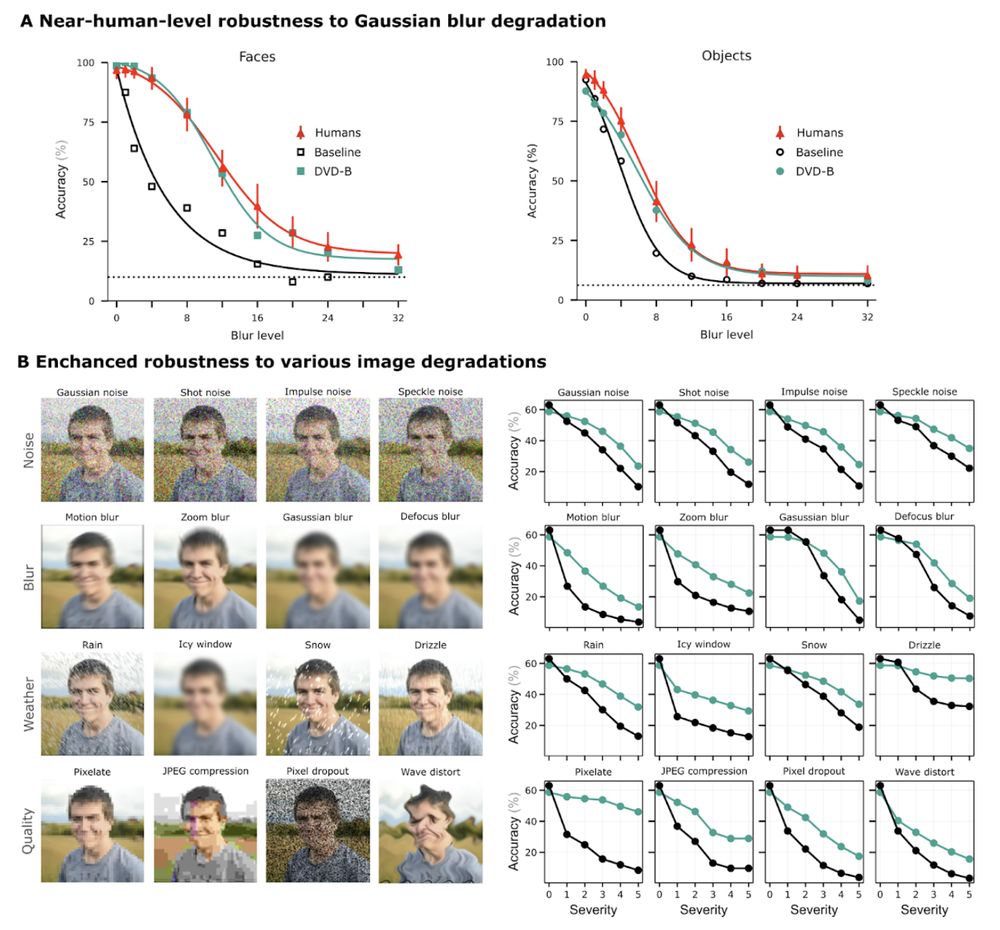

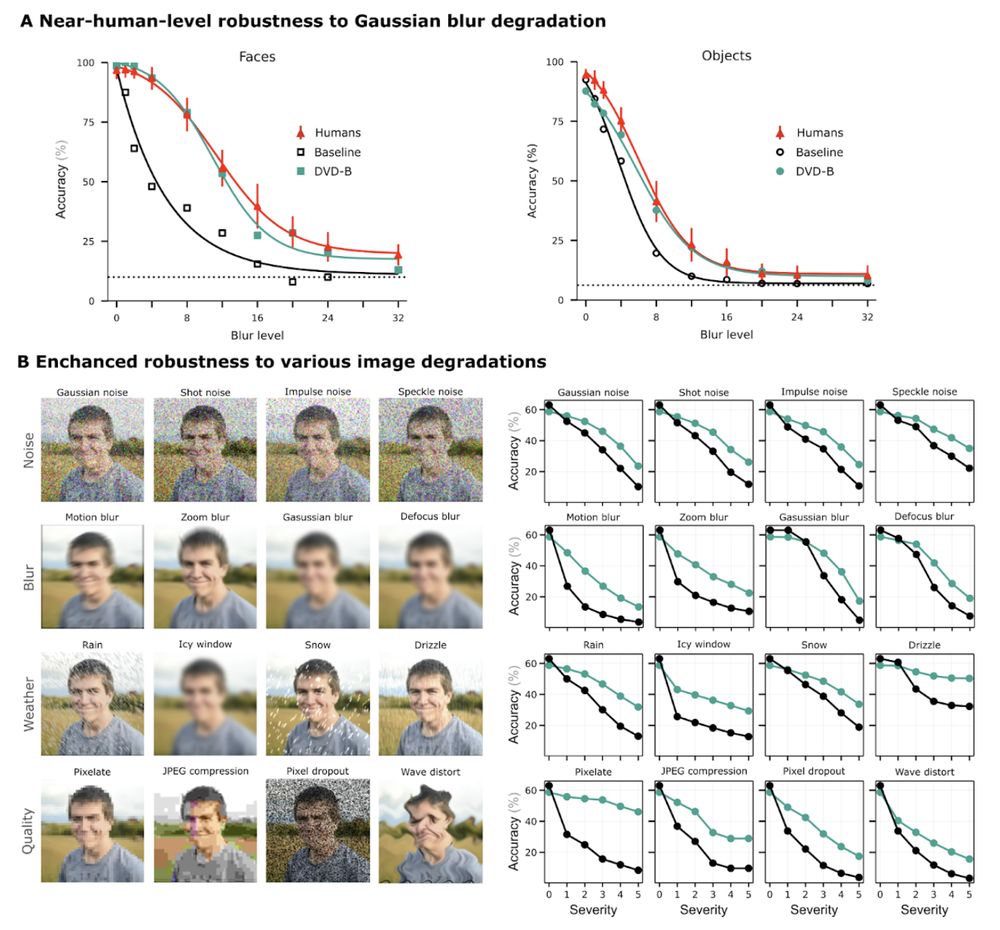

Result 3: DVD-trained models exhibit more human-like robustness to Gaussian blur compared to baselines, plus an overall improved robustness to all image perturbations tested. 7/

08.07.2025 13:03 — 👍 3 🔁 0 💬 1 📌 0

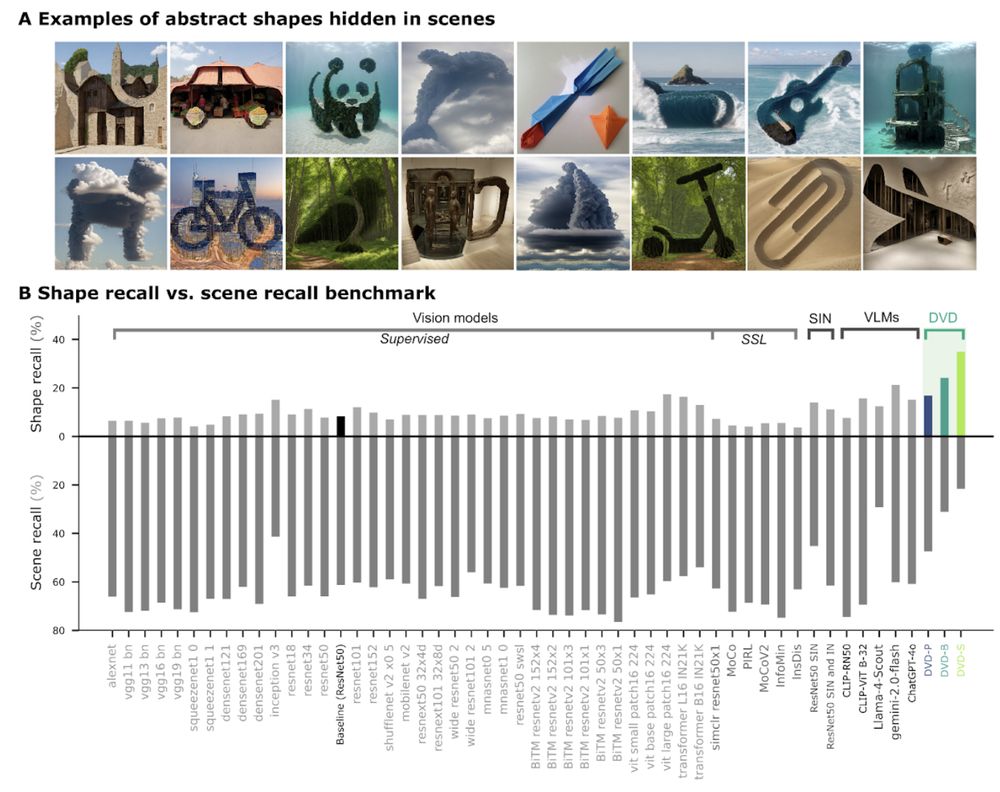

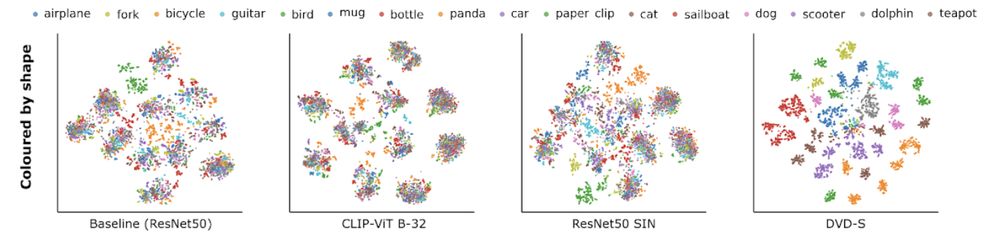

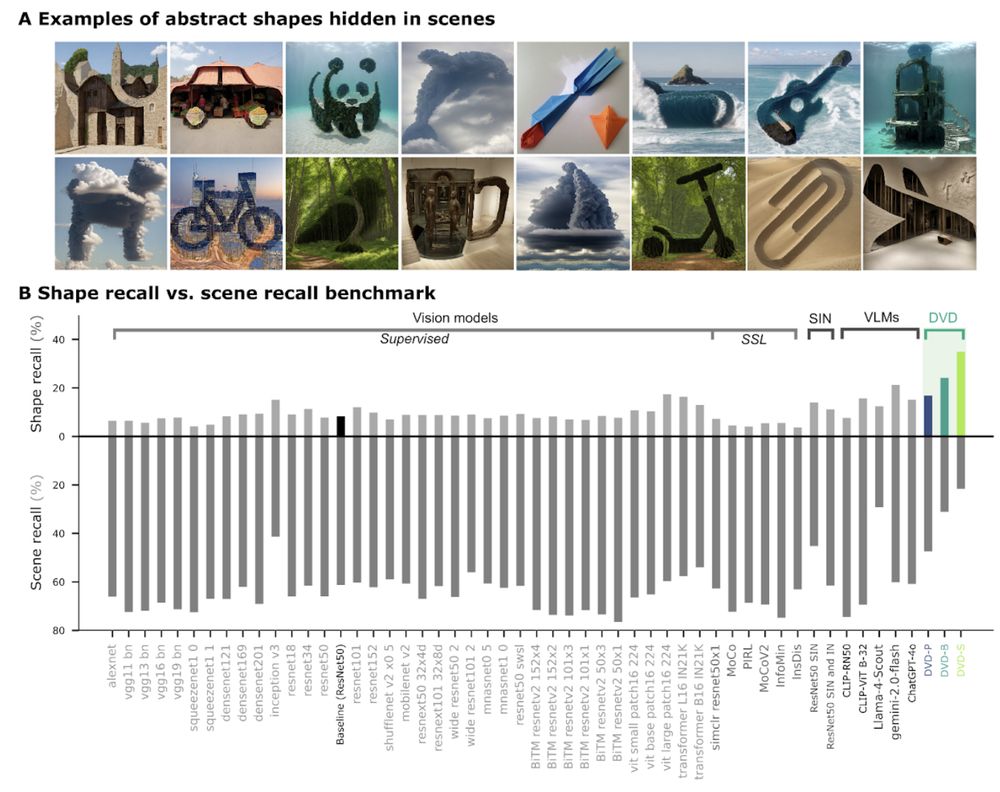

Result 2: DVD-training enabled abstract shape recognition in cases where AI frontier models, despite being explicitly prompted, fail spectacularly.

t-SNE nicely visualises the fundamentally different approach of DVD-trained models. 6/

08.07.2025 13:03 — 👍 4 🔁 0 💬 1 📌 1

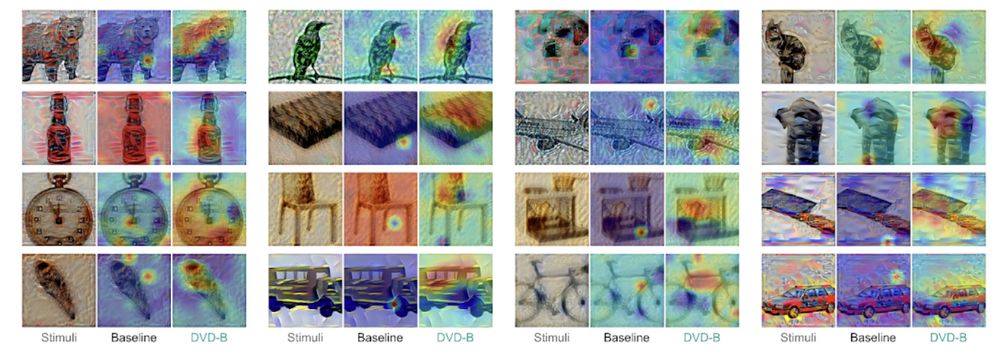

Layerwise relevance propagation revealed that DVD-training resulted in a different recognition strategy than baseline controls: DVD-training puts emphasis on large parts of the objects, rather than highly localised or highly distributed features. 5/

08.07.2025 13:03 — 👍 5 🔁 0 💬 1 📌 0

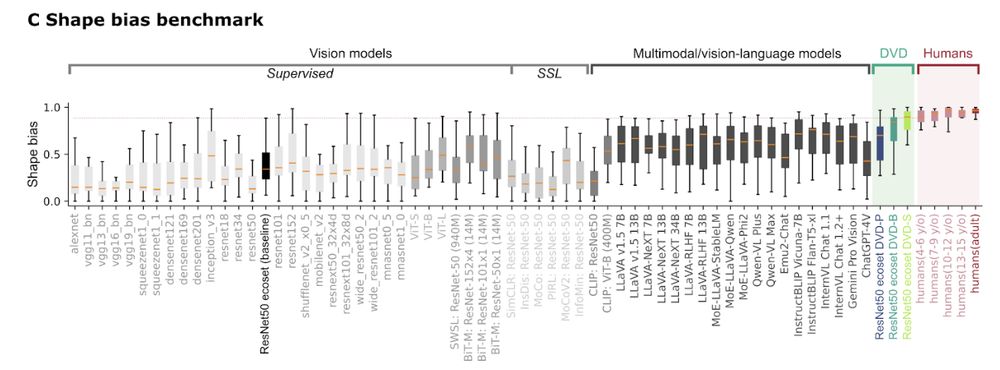

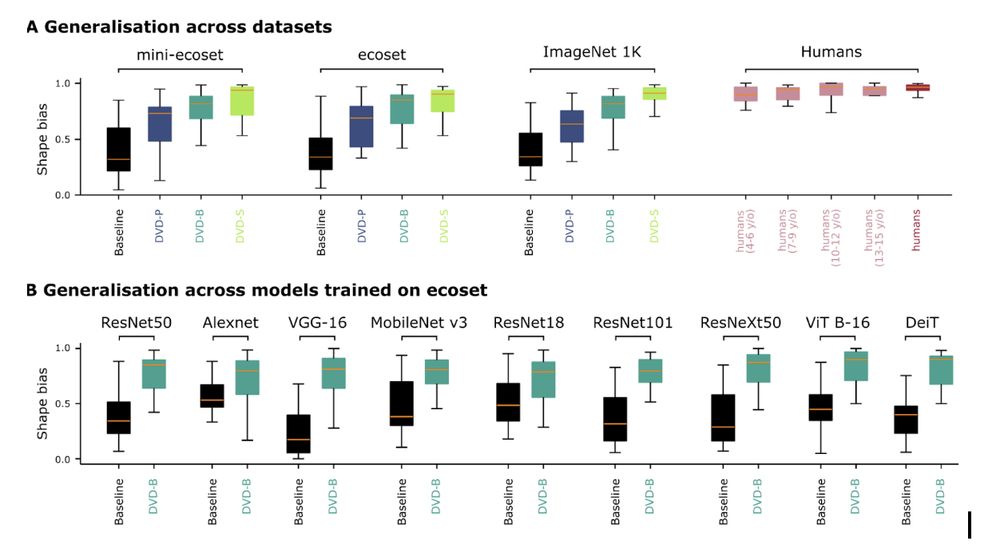

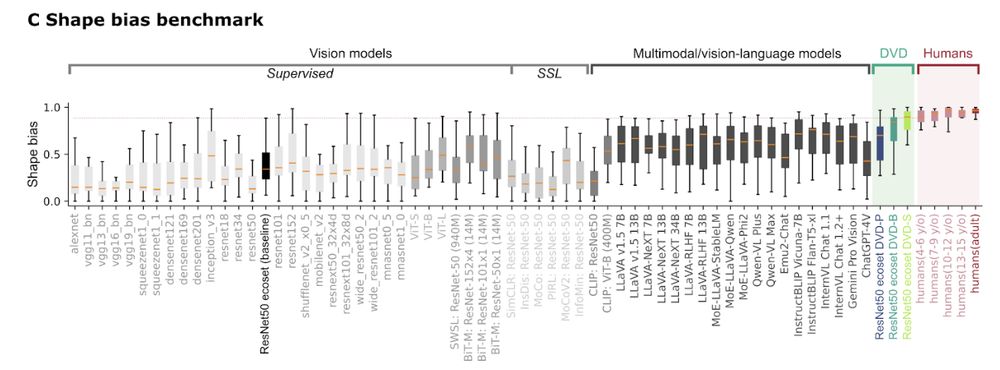

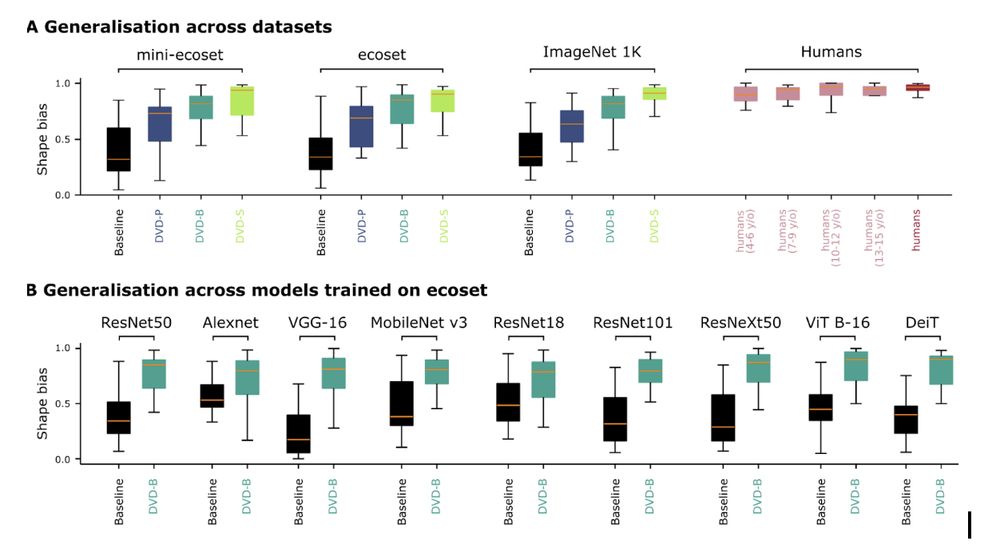

Result 1: DVD training massively improves shape-reliance in ANNs.

We report a new state of the art, reaching human-level shape-bias (even though the model uses orders of magnitude less data and fewer parameters). This was true for all datasets and architectures tested. 4/

08.07.2025 13:03 — 👍 7 🔁 1 💬 1 📌 0

We then test the resulting DNNs across a range of conditions, each selected because it is challenging for AI: (i) shape-texture bias, (ii) recognising abstract shapes embedded in complex backgrounds, (iii) robustness to image perturbations, and (iv) adversarial robustness. 3/

08.07.2025 13:03 — 👍 3 🔁 0 💬 1 📌 0

The idea: instead of high-fidelity training from the get-go (the gold standard), we simulate the visual development from newborns to 25 years of age by synthesising decades of developmental vision research into an AI preprocessing pipeline (Developmental Visual Diet - DVD) 2/

08.07.2025 13:03 — 👍 7 🔁 0 💬 1 📌 1
