🚨 #CCN2026 Proceedings submissions are open!

CCN 2026 again features an 8-page Proceedings track (alongside extended abstracts). Accepted papers will appear in CCN-Proceedings (CCN‑P) with DOIs on OpenReview.

28.01.2026 16:16 — 👍 33 🔁 22 💬 1 📌 6

Elizabeth Lee smiles at the camera.

Elizabeth Lee, a first-year Ph.D. student in Neural Computation, has been awarded CMU’s 2025 Sutherland-Merlino Fellowship. Her work bridges neuroscience and machine learning, and she’s passionate about advancing STEM access for underrepresented groups.

www.cmu.edu/mcs/news-eve...

30.09.2025 20:58 — 👍 7 🔁 3 💬 0 📌 0

YouTube video by PronunciationManual

How to Pronounce Chipotle

This remains my personal fave:

www.youtube.com/watch?v=3ADu...

23.09.2025 02:14 — 👍 1 🔁 0 💬 1 📌 0

Data on the Brain & Mind

📢 10 days left to submit to the Data on the Brain & Mind Workshop at #NeurIPS2025!

📝 Call for:

• Findings (4 or 8 pages)

• Tutorials

If you’re submitting to ICLR or NeurIPS, consider submitting here too—and highlight how to use a cog neuro dataset in our tutorial track!

🔗 data-brain-mind.github.io

25.08.2025 15:43 — 👍 8 🔁 5 💬 0 📌 0

So excited for CCN2026!!! 🧠🤔🤖🗽

15.08.2025 18:06 — 👍 8 🔁 0 💬 0 📌 0

arguably the most important component of AI for neuroscience:

data, and its usability

11.08.2025 11:28 — 👍 20 🔁 2 💬 1 📌 0

Join us at #NeurIPS2025 for our Data on the Brain & Mind workshop! We aim to connect machine learning researchers and neuroscientists/cognitive scientists, with a focus on emerging datasets.

More info: data-brain-mind.github.io

05.08.2025 00:21 — 👍 17 🔁 7 💬 0 📌 0

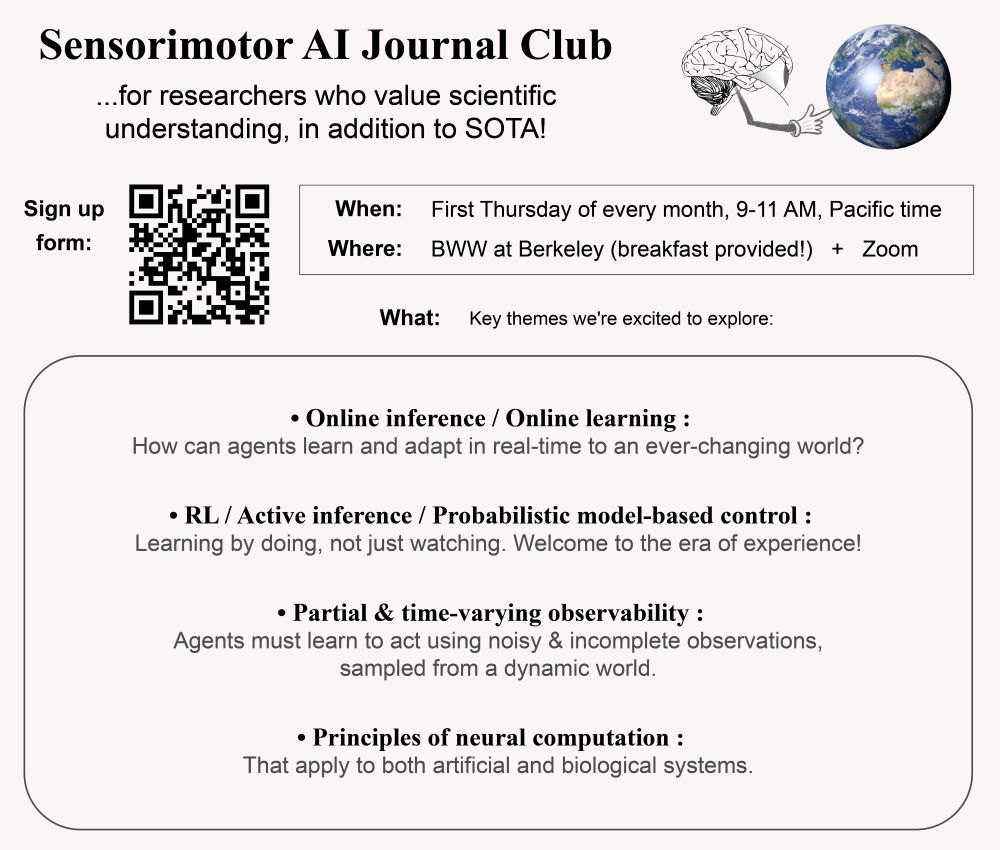

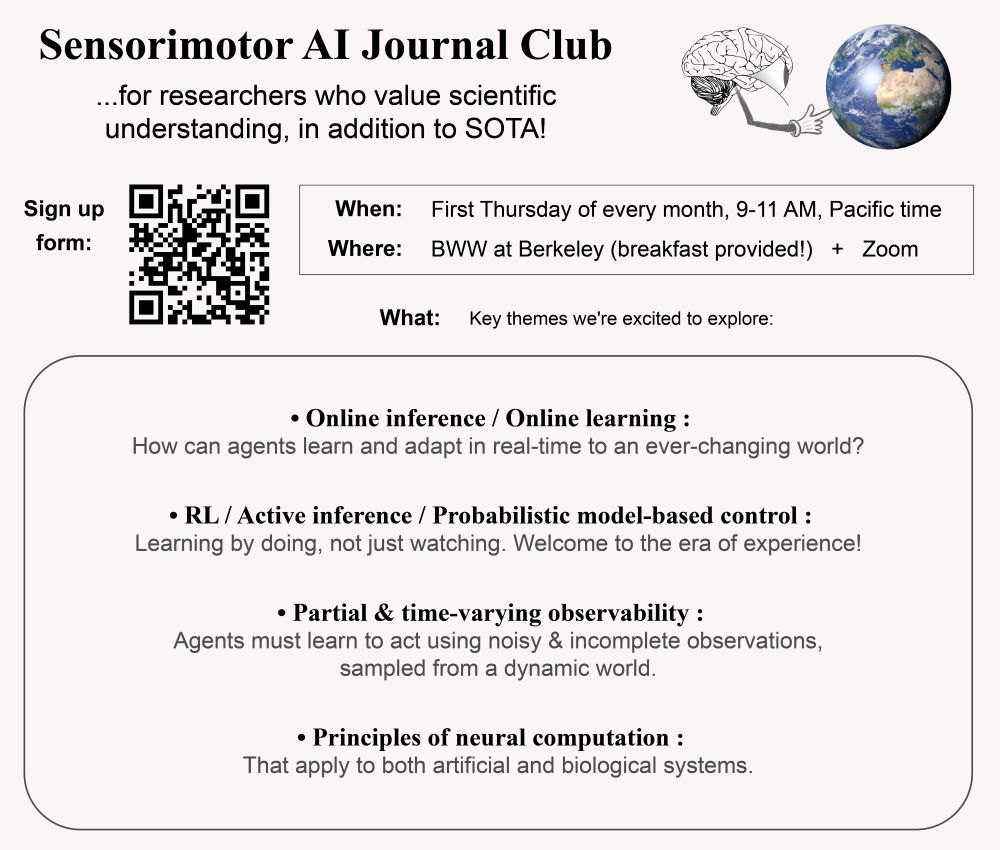

Announcing the new "Sensorimotor AI" Journal Club — please share/repost!

w/ Kaylene Stocking, Tommaso Salvatori, and @elisennesh.bsky.social

Sign up link: forms.gle/o5DXD4WMdhTg...

More details below 🧵[1/5]

🧠🤖🧠📈

09.07.2025 22:31 — 👍 24 🔁 12 💬 1 📌 0

Topics include but are not limited to:

• Optimal and adaptive stimulus selection for fitting, developing, testing or validating models

• Stimulus ensembles for model comparison

• Methods to generate stimuli with “naturalistic” properties

• Experimental paradigms and results using model-optimized stimuli

18.06.2025 20:52 — 👍 0 🔁 0 💬 0 📌 0

Consider submitting your recent work on stimulus synthesis and selection to our special issue at JOV!

18.06.2025 20:52 — 👍 2 🔁 1 💬 1 📌 0

What is the probability of an image? What do the highest and lowest probability images look like? Do natural images lie on a low-dimensional manifold?

In a new preprint with Zahra Kadkhodaie and @eerosim.bsky.social, we develop a novel energy-based model in order to answer these questions: 🧵

06.06.2025 22:11 — 👍 72 🔁 23 💬 1 📌 2

Just a few months until Cognitive Computational Neuroscience comes to Amsterdam! Check out our now-complete schedule for #CCN2025, with descriptions of each of the Generative Adversarial Collaborations (GACs), Keynotes-and-Tutorials (K&Ts), Community Events, Keynote Speakers, and social activities!

19.05.2025 11:08 — 👍 25 🔁 11 💬 0 📌 0

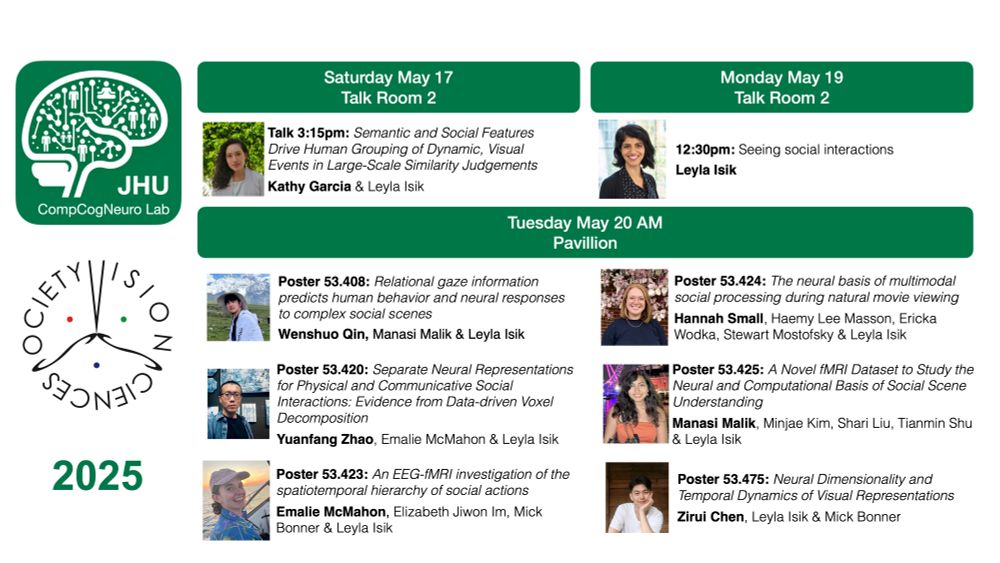

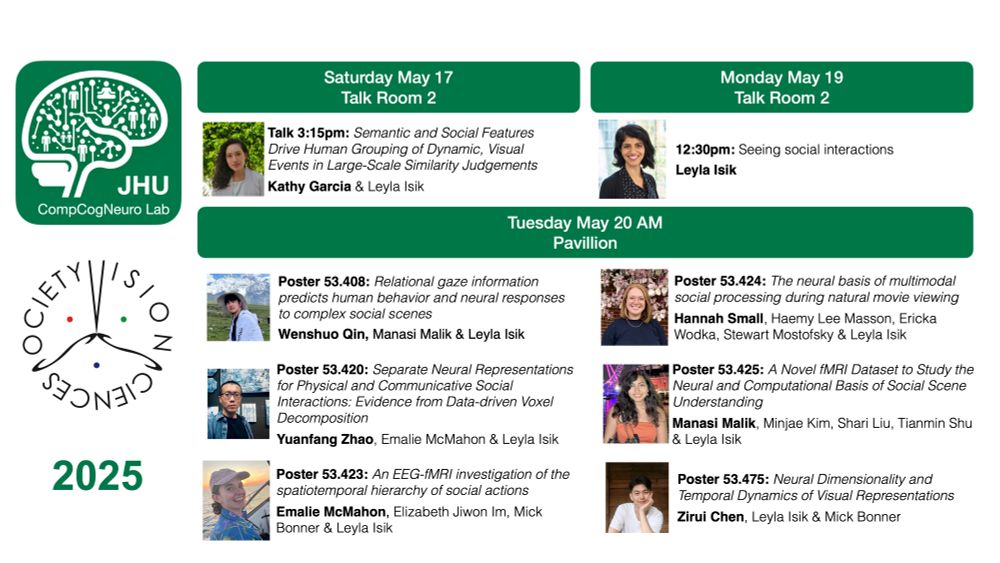

I’m happy to be at #VSS2025 and share what our lab has been up to this year!

I’m also honored to receive this year’s young investigator award and will give a short talk at the awards ceremony on Monday.

16.05.2025 18:12 — 👍 52 🔁 14 💬 3 📌 0

JOV Special Issue - Choose your stimuli wisely: Advances in stimulus synthesis and selection | JOV | ARVO Journals

The symposium also serves to kick off a special issue of JOV!

"Choose your stimuli wisely: Advances in stimulus synthesis and selection"

jov.arvojournals.org/ss/synthetic...

Paper Deadline: Dec 12th

For those not able to attend tomorrow, I will strive to post some of the highlights here 👀 👀 👀

15.05.2025 20:31 — 👍 4 🔁 0 💬 0 📌 0

VSS Symposia – Vision Sciences Society

Super excited for our #VSS2025 symposium tomorrow, "Model-optimized stimuli: more than just pretty pictures".

Join us to talk about designing and using synthetic stimuli for testing properties of visual perception!

May 16th @ 1-3PM in Talk Room #2

More info: www.visionsciences.org/symposia/?sy...

15.05.2025 20:31 — 👍 25 🔁 6 💬 1 📌 0

This is joint work with fantastic co-authors from @flatironinstitute.org Center for Computational Neuroscience: @lipshutz.bsky.social (co-first) @sarah-harvey.bsky.social @itsneuronal.bsky.social @eerosim.bsky.social

24.04.2025 05:12 — 👍 2 🔁 0 💬 0 📌 0

These examples demonstrate how our framework can be used to probe for informative differences in local sensitivities between complex models, and suggest how it could be used to compare model representations with human perception.

24.04.2025 05:12 — 👍 2 🔁 0 💬 1 📌 0

In a second example, we apply our method to a set of deep neural network models and reveal differences in the local geometry that arise due to architecture and training types, illustrating the method's potential for revealing interpretable differences between computational models.

24.04.2025 05:12 — 👍 0 🔁 0 💬 1 📌 0

As an example, we use this framework to compare a set of simple models of the early visual system, identifying a novel set of image distortions that allow immediate comparison of the models by visual inspection.

24.04.2025 05:12 — 👍 0 🔁 0 💬 1 📌 0

This provides an efficient method to generate stimulus distortions that discriminate image representations. These distortions can be used to test which model is closest to human perception.

24.04.2025 05:12 — 👍 0 🔁 0 💬 1 📌 0

We then extend this work to show that the metric may be used to optimally differentiate a set of *many* models, by finding a pair of “principal distortions” that maximize the variance of the models under this metric.

24.04.2025 05:12 — 👍 1 🔁 0 💬 1 📌 0
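One way to read the "principal distortions" objective, offered as a hedged sketch rather than the authors' code. Assume we already have FIMs I_1, ..., I_M for M models at the same base image. We then search for unit vectors e1, e2 that maximize the across-model variance of log(e1ᵀ I_m e1) - log(e2ᵀ I_m e2), which is the log ratio of each model's sensitivity to the two distortions. The log-ratio form, the plain projected gradient ascent, and the function names are all assumptions made for illustration. See the paper for the exact objective and optimizer.

```python
import numpy as np


def log_ratios(fims, e1, e2):
    """Per-model log ratio of sensitivities, log(e1^T I_m e1) - log(e2^T I_m e2).
    Assumes every FIM is positive definite (add a small ridge if it is not)."""
    return np.array([np.log(e1 @ I @ e1) - np.log(e2 @ I @ e2) for I in fims])


def principal_distortions(fims, n_steps=2000, lr=0.01, seed=0):
    """Hypothetical helper: projected gradient ascent on the across-model
    variance of the log ratios. Returns unit vectors e1, e2 and the variance."""
    rng = np.random.default_rng(seed)
    d = fims[0].shape[0]
    m = len(fims)
    e1 = rng.standard_normal(d)
    e2 = rng.standard_normal(d)
    e1 /= np.linalg.norm(e1)
    e2 /= np.linalg.norm(e2)
    for _ in range(n_steps):
        c = log_ratios(fims, e1, e2)
        c = c - c.mean()  # centered log ratios
        # d/de log(e^T I e) = 2 I e / (e^T I e); variance gradient = (2/m) * sum_m c_m * that
        g1 = sum(ci * 2 * (I @ e1) / (e1 @ I @ e1) for ci, I in zip(c, fims)) * 2 / m
        g2 = sum(-ci * 2 * (I @ e2) / (e2 @ I @ e2) for ci, I in zip(c, fims)) * 2 / m
        # Ascent step, then project back onto the unit sphere
        e1 = e1 + lr * g1
        e2 = e2 + lr * g2
        e1 /= np.linalg.norm(e1)
        e2 /= np.linalg.norm(e2)
    return e1, e2, float(np.var(log_ratios(fims, e1, e2)))


# Two hypothetical toy models that disagree about which axis matters more
fims = [np.diag([10.0, 1.0]), np.diag([1.0, 10.0])]
e1, e2, v = principal_distortions(fims)
print(np.round(e1, 3), np.round(e2, 3), round(v, 3))
```

In this toy case the two distortions should settle onto the two coordinate axes. Each axis is the direction that one model finds far more salient than the other does. The step size is a tuning choice, and a value that is too large makes the iterates oscillate around the optimum instead of converging.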

We use the FIM to define a metric on the local geometry of an image representation near a base image. This metric can be related to previous work investigating the sensitivities of one or two models.

24.04.2025 05:12 — 👍 1 🔁 0 💬 1 📌 0

We propose a framework for comparing a set of image representations in terms of their local geometries. We quantify the local geometry of a representation using the Fisher information matrix (FIM), a standard statistical tool for characterizing the sensitivity to local stimulus distortions.

24.04.2025 05:12 — 👍 1 🔁 0 💬 1 📌 0
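This is a minimal sketch of the FIM described in this post. It relies on an assumption the thread does not state: the representation is a deterministic function f of a flattened image x, corrupted by additive isotropic Gaussian noise of variance σ². Under that assumption, I(x) = J(x)ᵀ J(x) / σ², where J is the Jacobian of f at x. The sensitivity to a small distortion along a unit direction e is then eᵀ I(x) e. The helper names are hypothetical, and the Jacobian uses central finite differences rather than autodiff. This is not the authors' implementation.

```python
import numpy as np


def jacobian(f, x, eps=1e-6):
    """Central finite-difference Jacobian of f at a flattened input x (out_dim x in_dim)."""
    x = np.asarray(x, dtype=float).ravel()
    f0 = np.asarray(f(x), dtype=float).ravel()
    J = np.zeros((f0.size, x.size))
    for i in range(x.size):
        dx = np.zeros_like(x)
        dx[i] = eps
        f_plus = np.asarray(f(x + dx), dtype=float).ravel()
        f_minus = np.asarray(f(x - dx), dtype=float).ravel()
        J[:, i] = (f_plus - f_minus) / (2 * eps)
    return J


def fisher_information(f, x, noise_var=1.0):
    """FIM of f at x, assuming response = f(x) + isotropic Gaussian noise (variance noise_var)."""
    J = jacobian(f, x)
    return J.T @ J / noise_var


def sensitivity(fim, e):
    """Local sensitivity e^T I(x) e along the unit-normalized distortion direction e."""
    e = np.asarray(e, dtype=float).ravel()
    e = e / np.linalg.norm(e)
    return float(e @ fim @ e)


# Hypothetical linear "model" for illustration: f(x) = A x, so the FIM should be A^T A.
A = np.array([[2.0, 0.0], [0.0, 1.0]])
fim = fisher_information(lambda x: A @ x, np.zeros(2))
print(np.round(fim, 6))
print(round(sensitivity(fim, [1.0, 0.0]), 6))
```

For a linear model f(x) = Ax with σ² = 1, the FIM reduces to AᵀA. A distortion along a direction that A stretches by a factor of 2 therefore has sensitivity 4. For a nonlinear image model, the FIM depends on the base image x, which is what makes this a *local* geometry.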

Recent work suggests that many models are converging to representations that are similar to each other and (maybe) to human perception. However, similarity measures often focus on stimuli that are far apart in stimulus space. Even when the global geometry is similar, the local geometry can be quite different.

24.04.2025 05:12 — 👍 0 🔁 0 💬 1 📌 0

Discriminating image representations with principal distortions

Image representations (artificial or biological) are often compared in terms of their global geometric structure; however, representations with similar global structure can have strikingly...

We are presenting our work “Discriminating image representations with principal distortions” at #ICLR2025 today (4/24) at 3pm! If you are interested in comparing model representations with other models or human perception, stop by poster #63. Highlights in 🧵

openreview.net/forum?id=ugX...

24.04.2025 05:12 — 👍 39 🔁 13 💬 1 📌 0

Applications close TODAY (April 14) for the 2025 Flatiron Institute Junior Theoretical Neuroscience Workshop.

All you need to apply is a CV and a 1 page abstract. 🧠🗽

14.04.2025 13:56 — 👍 4 🔁 3 💬 0 📌 0

📣 Grad students and postdocs in computational and theoretical neuroscience: please consider applying for the 2025 Flatiron Institute Junior Theoretical Neuroscience Workshop! All expenses are covered. Apply by April 14. jtnworkshop2025.flatironinstitute.org

09.04.2025 16:11 — 👍 21 🔁 16 💬 0 📌 0

Postdoc in the Hayden lab at Baylor College of Medicine studying neural computations of natural language & communication in humans. Sister to someone with autism. F32 NIDCD Fellow | Autism Research Institute funded | she/her. melissafranch.com

Cognitive science journal published by MIT Press.

https://direct.mit.edu/opmi

neuroscience and behavior in parrots and songbirds

Simons junior fellow and post-doc at NYU Langone studying vocal communication, PhD MIT brain and cognitive sciences

Workshop at #NeurIPS2025 aiming to connect machine learning researchers with neuroscientists and cognitive scientists by focusing on concrete, open problems grounded in emerging neural and behavioral datasets.

🔗 https://data-brain-mind.github.io

Associate Research Scientist at Center for Theoretical Neuroscience Zuckerman Mind Brain Behavior Institute

Kavli Institute for Brain Science

Columbia University Irving Medical Center

K99-R00 scholar @NIH @NatEyeInstitute

https://toosi.github.io/

Neuroscientist at University College London (www.ucl.ac.uk/cortexlab). Opinions my own.

Flatiron Research Fellow at @CCN and CCM

Cognitive scientist studying play & problem solving

jchu10.github.io

San Diego Dec 2-7, 2025 and Mexico City Nov 30-Dec 5, 2025. Comments to this account are not monitored. Please send feedback to townhall@neurips.cc.

Scripps Research Professor. HHMI Investigator. Nobel Prize Physiology or Medicine 2021. Opinions are my own and do not reflect those of my employer.

Stanford Professor | NeuroAI Scientist | Entrepreneur working at the intersection of neuroscience, AI, and neurotechnology to decode intelligence @ enigmaproject.ai

Ph.D. Student @mila-quebec.bsky.social and @umontreal.ca, AI Researcher

🧠 Scientist | UW-Madison Asst. Prof 🦡 |

| Former NIH Postdoc |

Organizer & Advocate for Science, Democracy, and Labor 🧪🇺🇸✊

(opinions are my own)

Illuminating math and science. Supported by the Simons Foundation. 2022 Pulitzer Prize in Explanatory Reporting. www.quantamagazine.org

Assistant Professor at UCSD Cognitive Science and CSE (affiliate) | Past: Postdoc @MIT, PhD @Cornell, B. Tech @IITKanpur | Interested in Biological and Artificial Intelligence

Assistant Professor, McGill University | Associate Academic Member, Mila - Quebec AI Institute | Neuroscience and AI, learning and inference, dopamine and cognition

https://massetlab.org/