Interesting. I think OpenAI may do something similar. Last night I was watching Codex try to run a `ps` and it was failing. Ultimately, I'm skeptical of these shim approaches; you want them to have tons of horsepower. Running the tool in the sandbox seems better.

21.10.2025 13:36 — 👍 0 🔁 0 💬 0 📌 0

Love this detail. reuters on the ruling: www.reuters.com/legal/litiga...

25.06.2025 00:41 — 👍 0 🔁 0 💬 0 📌 0

So not surprised. When the “is ChatGPT‘s behavior changing over time“ paper came out I published a critique, partially because it’s not that the paper was technically wrong, but it was written with ambiguous conclusions misconstrued by the press. The world loves to see AI fail right now. 🤷🏼♂️

15.06.2025 08:22 — 👍 0 🔁 0 💬 1 📌 0

ChatGPT - AI Assistant Demo Analysis

Shared via ChatGPT

This conversation with ChatGPT goes to show: garbage in, garbage out chatgpt.com/share/6841e2...

It knows a lot but it won’t second guess you unless you ask/demand it

05.06.2025 18:30 — 👍 0 🔁 0 💬 0 📌 0

as an AI founder who is ridiculously all in, when I’m like flabbergasted by the temerity of your product I just can’t even…

05.06.2025 18:18 — 👍 0 🔁 0 💬 1 📌 0

This fits really well with the “putting our national security conversation on telegram is a non-story” line

30.05.2025 10:46 — 👍 1 🔁 1 💬 0 📌 0

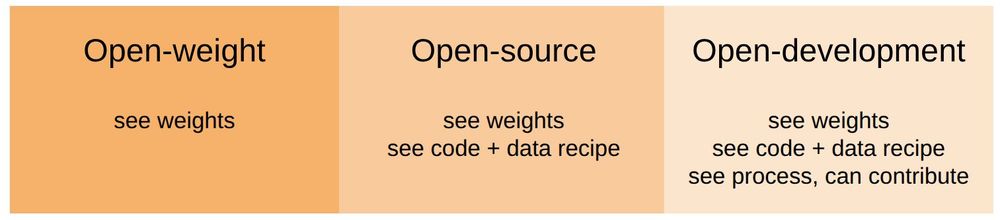

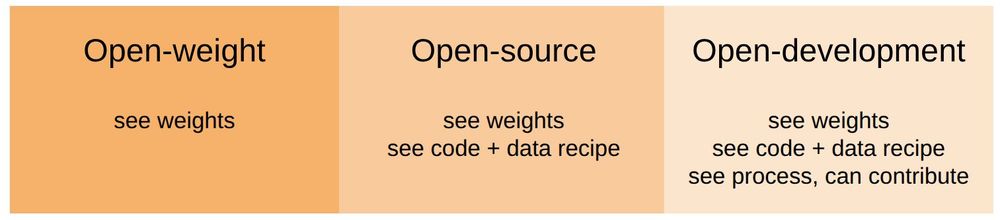

How much faster would the science of large-scale AI advance if we could open-source the *process* of building a frontier model?

Not just the final models/code/data, but also negative results, toy experiments, and even spontaneous discussions.

That's what we're trying @ marin.community

19.05.2025 19:05 — 👍 9 🔁 4 💬 1 📌 0

Suno is so fucking good. Wow.

12.05.2025 16:47 — 👍 0 🔁 0 💬 0 📌 0

The year is 2030. nVidia announces new DGX with attached Fusion reactor.

07.05.2025 15:48 — 👍 0 🔁 0 💬 0 📌 0

Claude did not understand the mission and tried to write his system prompt to Notion. haha!

06.05.2025 16:50 — 👍 0 🔁 0 💬 0 📌 0

This was using 5 as spec tokens (which is the example in the docs but for llamacpp I've found 5-8 to be a sweet spot).

06.05.2025 13:37 — 👍 0 🔁 0 💬 0 📌 0

Home setup: rtx3090+4090. testing AWQ Qwen3; both 32B and 32B w/ 4B speculative decoding

TL;DR: ⚠️ on spec in vanilla vllm. w/ a 75% token acceptance generation in batch-4 went from 150t/s (no speculative) -> 50t/s (w/ speculative)

I'd get 400t/s+ max tput w/out speculative, larger batch

06.05.2025 13:36 — 👍 0 🔁 0 💬 1 📌 0

I thought we’d agreed on paperclips

13.04.2025 00:35 — 👍 1 🔁 0 💬 0 📌 0

Yes

12.04.2025 03:54 — 👍 0 🔁 0 💬 0 📌 0

Sorry, what? This seems very specific and I’ve been wondering how to make sense of different reports on quality; lmsys model is literally labeled Maverick though. Are you saying there was a different unreleased version of Maverick?

06.04.2025 22:13 — 👍 0 🔁 0 💬 1 📌 0

Wild and wonderful watching a keynote demo 100ft wide and being able to literally picture the code in your head. 😁

01.04.2025 17:04 — 👍 0 🔁 0 💬 0 📌 0

I haven’t used it enough to say but it’s interesting to think that going from 70% success to 85% success is “twice as good”. Asymptotic improvements are still significant.

29.03.2025 06:44 — 👍 0 🔁 0 💬 0 📌 0

Every schema for content should have a human vs ai flag (or perhaps an enum with human, ai, and then some hybrid roles).

26.03.2025 15:53 — 👍 0 🔁 0 💬 0 📌 0

Well you’re not going to make a lot of friends with the lawyers but the rest of us are pretty happy.

23.03.2025 00:31 — 👍 0 🔁 0 💬 0 📌 0

Jensen: "I'd never buy a hopper!"

Azure: "We don't have any Ampere GPUs to turn up even."

Me: 😠

19.03.2025 18:45 — 👍 0 🔁 0 💬 0 📌 0

640KB ought to be enough for anybody.

15.03.2025 06:55 — 👍 0 🔁 0 💬 0 📌 0

semianalysis iirc thinks deepseek actually had 1.3B in hardware so not a box of scraps :) But I agree there’s no need or gain long term from an artificial moat. A surgical ban on deepseek api (no gvmt use, requiring contractors/employees to disclose use) would be fine imo

14.03.2025 14:31 — 👍 0 🔁 0 💬 0 📌 0

💯 to be fair, china has a deserved (I think) reputation for engineering economic success with state policies. But in this case the answer is to optimize more domestically. AI is not some consumable like a car; it is creating a flywheel. We can’t afford to be crippled.

14.03.2025 14:28 — 👍 1 🔁 0 💬 1 📌 0

Even banning API access here is super sketchy, and if this is meant to say to ban the weights... it's not just a horrible take, it's just anti-competitive lobbying. Not quite as dumb as Hawley's bill - which arguably banned the US from using China's published algos/math - but terrible still.

14.03.2025 14:11 — 👍 2 🔁 0 💬 1 📌 0

Side note - when I was chiming in on github and actually, I think, triggered gg to start merging this back in, I remember I was doing 32B_Q8 w/ 7B 4KL draft but I think I still had mine set to --draft 5; I will say 7B>>>>3B so far. I may have to play around with using some even smaller drafts

11.03.2025 13:10 — 👍 0 🔁 0 💬 0 📌 0

It's interesting because the dials of draft model, draft model quant, --draft length, they all play into the sweet spot. and it's clearly not super consistent. like I was playing with 32B-Q8 w/ 3B vs 7B 4KL drafts; with those the default --draft 16 seems like the sweet spot. (>12, >32 informal test)

11.03.2025 13:01 — 👍 0 🔁 0 💬 1 📌 0

32B Coder-Q8 w/ and w/out 7B-Q4_K_L draft - PSA speculative decoding is in llamacpp and works. (depending on your hardware, experiment w/ diff model sizes - ymmv vary wildly)

11.03.2025 12:50 — 👍 0 🔁 0 💬 0 📌 1

YouTube video by Tech Field Day

Kamiwaza Model Context Protocol for Private Models – Inferencing and Data Within the Enterprise

its at the start of this www.youtube.com/watch?v=2MnB...

11.03.2025 12:46 — 👍 1 🔁 0 💬 1 📌 0

seems plausible. I do a demo with Qwen-72B-Instruct at times with ~20 tools where based on a gentle nudge it will read instructions, check out code, bug fix it, commit a patch, write and run tests and report on those (1 message). That's with ZERO planner. just tool-returns-as-msg behavior

10.03.2025 03:55 — 👍 1 🔁 0 💬 1 📌 0

Reward function engineer

28.02.2025 14:56 — 👍 0 🔁 0 💬 0 📌 0

Founder @ Figure ($750M Backed), Cover (Weapon Detection), Archer Aviation (NYSE: ACHR), Vettery ($100M Exit)

Creating ideas, science, technology, books, companies, ...

Managing Director, Equity Capital Markets @ D.A. Davidson

#Cloud I #Security I #Data I #Innovation #CancerWarrior #SuperMom

My content; my opinions.

Engineer who helps clients scope, source and vet solutions in #Cloud #cloudsecurity #aisecurity| #ai|Tech analyst| VP Cloud and Security|USAF vet| 📚Learning from CIOs and CISOs on the daily| 💕 of NY Times Spelling 🐝

Linked In: LinkedIn.com|in|JoPeterson1

(He/him/his) Denver Metro USA #tech #goldies #dad #cycling #inclusion

Semi-retired technologist. Did k8s, GCE, gtalk and IE. Figuring shit out. Protect Trans kids 🏳️⚧️. Seattle. Signal: jbeda.99 he/him

Build games at the speed of thought, with AI. www.Rosebud.ai

Researching planning, reasoning, and RL in LLMs @ Reflection AI. Previously: Google DeepMind, UC Berkeley, MIT. I post about: AI 🤖, flowers 🌷, parenting 👶, public transit 🚆. She/her.

http://www.jesshamrick.com

I train models @ OpenAI.

Previously Research at DeepMind.

Hae sententiae verbaque mihi soli sunt.

machine learning, science & society @anthropic.com | recently: Clio, Anthropic Economic Index, Claude Artifacts | prev: phd, stanford nlp. alextamkin.com

Twitter: https://twitter.com/Teknium1

Github: http://github.com/teknium1

HuggingFace: http://huggingface.co/teknium

CEO of Quora, working on @poe.com

I build & teach AI stuff. Building @TakeoffAI. Learn to code & build apps with AI in our new Cursor & app courses on http://JoinTakeoff.com/courses.

Cofounder CEO, Perplexity.ai

how shall we live together?

societal impacts researcher at Anthropic

saffronhuang.com