The journey to DH2026 in Daejeon has begun! 🇰🇷

Here is a first glimpse of our host city and the conference theme of 'Engagement'.

For all official information, including future calls for proposals and registration, our website is your main hub.

🌐 Visit us:

dh2026.adho.org

#DH2026 #Daejeon

22.07.2025 12:23 — 👍 14 🔁 8 💬 0 📌 0

Logo of the COMUTE project.

Just now at #DH2025: Sandra Balck (@traed.bsky.social) and Sascha Heße are presenting the COMUTE project (Collation of Multilingual Text), run jointly by colleagues at @unihalle.bsky.social, @unipotsdam.bsky.social, and @freieuniversitaet.bsky.social.

www.comute-project.de/en/index.html

17.07.2025 08:17 — 👍 18 🔁 6 💬 1 📌 1

Collective Biographies of Women

Find the 1271 English-language books that collect chapter-length biographies of women of all types, famous and obscure, from queens to travelers, from writers to activists. CBW studies versions of wom...

"Fabulation and Care: What AI, Wikidata, and an XML Schema Can Recognize in Women's Biographies" -- @alisonbooth.bsky.social promises to be as cheekily adversarial to contemporary AI as possible. Interrogating (caringly) metaphors of text "mining," "distant" reading, &c.

#DH2025

16.07.2025 15:45 — 👍 14 🔁 4 💬 1 📌 0

Gephi Lite is great, it allows for a direct import of character network data from #DraCor, included in our "Tools" tab. Try the German "Hamlet" translation (Schlegel/Tieck edition 1843/44): dracor.org/id/gersh0000...

(Especially love the "Guess settings" option for ForceAtlas2 layouting!)

#DH2025

17.07.2025 15:32 — 👍 19 🔁 9 💬 1 📌 0

First slide of our presentation showing the title, authors and their affiliation.

Slides from our #DH2025 presentation:

"Wikipedia as an Echo Chamber of Canonicity: ›1001 Books You Must Read Before You Die‹"

bit.ly/1001echo

#DigitalHumanities @viktor.im @temporal-communities.de @freieuniversitaet.bsky.social

16.07.2025 08:31 — 👍 46 🔁 17 💬 2 📌 1

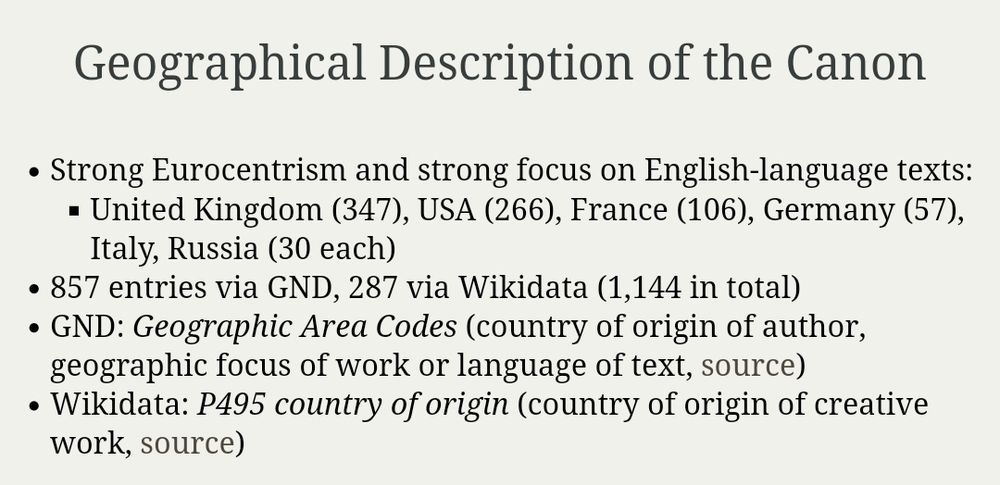

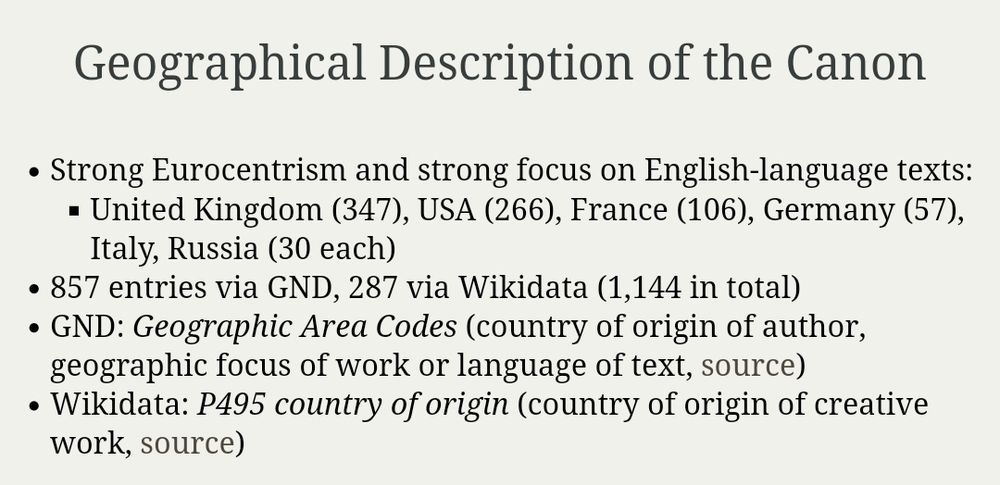

Slide showing the geographic distribution of canonical literary works retrieved from Wikidata.

As expected: we need more diverse sources to study canonicity and world literature.

Great work!

#dh2025

16.07.2025 08:47 — 👍 10 🔁 6 💬 0 📌 0

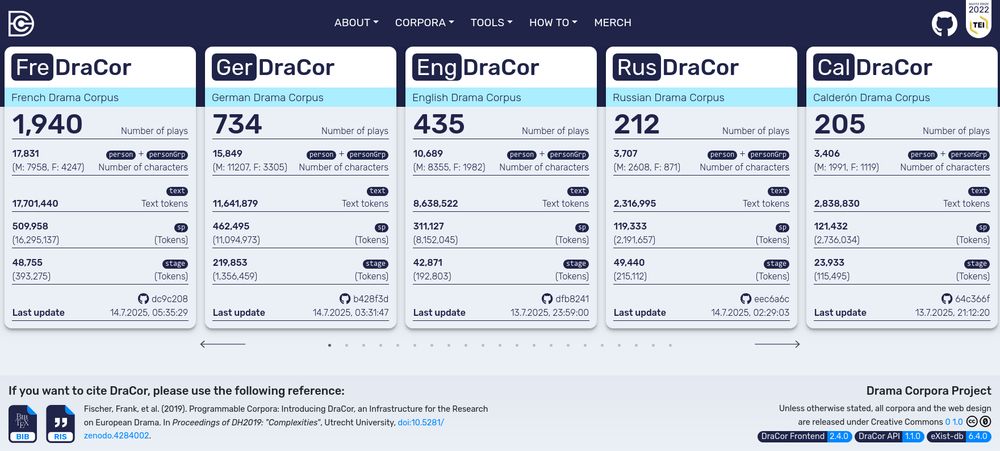

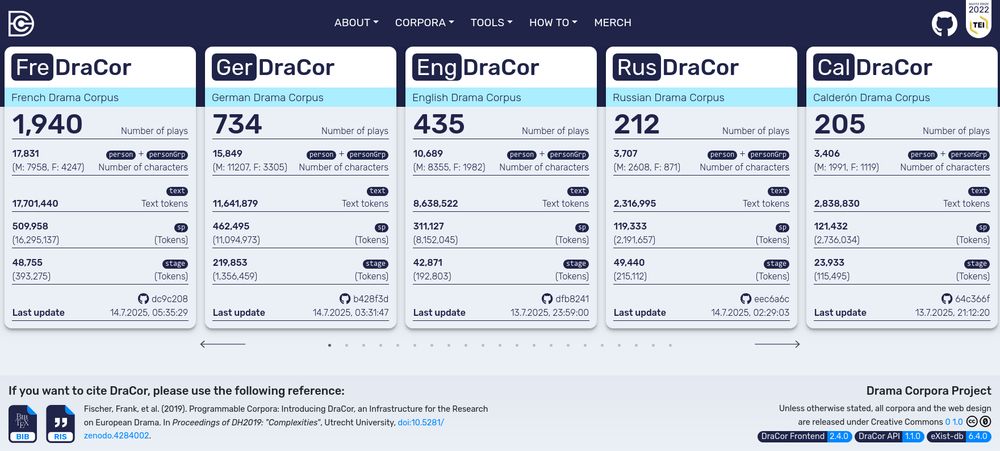

Screenshot of the dracor.org frontpage from 14 July 2025.

💫 We just rolled out a new #DraCor release featuring significant improvements to the API, Frontend, and Schema.

Full update here:

weltliteratur.net/dracor-platf...

#DigitalHumanities #DH2025

14.07.2025 09:50 — 👍 23 🔁 12 💬 0 📌 1

I picked up a book and found myself a brain rot.

02.12.2024 07:08 — 👍 1 🔁 0 💬 0 📌 0

Konrad, a wanderer from Thuringia, has a mystical encounter. On a stormy autumn day, he meets the mermaid Selina on the Baltic coast.

You can read the AI-generated short fairy tale here:

derdigitaledichter.d...

#literatur #lesen #LitWiss #KI-Literatur

10.09.2024 08:00 — 👍 1 🔁 1 💬 0 📌 0

Img credit N. Muennighoff, taken from the linked paper.

This image has two sections:

Performance vs. Cost Graph:

The y-axis shows performance (% MMLU) and the x-axis shows cost (billion active parameters).

Blue circles represent Dense LMs, and dark blue diamonds represent Mixture-of-Experts (MoE) models.

OLMoE-1B-7B (marked by a pink star) stands out in the top-left for having a good performance/cost ratio.

Table: "How open are open MoEs?":

The table compares models based on their openness in categories like "Model, Data, Code, Logs," and the number of checkpoints.

OLMoE-1B-7B is highlighted and is open in all categories.

Allen AI has released a fully open (weights, data, and code) mixture-of-experts model. It's super small (1B active parameters out of 7B total) but has good performance for its size. Paper: arxiv.org/abs/2409.02060 #machinelearning #AI 🤖 🧪

04.09.2024 03:22 — 👍 33 🔁 8 💬 2 📌 0

Heads-up that I'll be speaking in this series on Oct 23, with the title "Why AI Needs the Humanities as a Partner."

30.08.2024 15:13 — 👍 12 🔁 4 💬 1 📌 1

Large Language Models and Literature | American Comparative Literature Association

What can literature and literary studies bring to LLMs and vice versa?

Our seminar on LLMs and Literature is up on the ACLA2025 website! @arhanlon.bsky.social and I are excited to read your paper proposals. Please submit them via the portal between September 13 and October 14.

www.acla.org/large-langua...

15.08.2024 17:41 — 👍 20 🔁 14 💬 0 📌 1

How about using AI to recreate a lost romantic world?

15.08.2024 16:55 — 👍 2 🔁 0 💬 0 📌 0

Created by Flux Dev.

"Il meglio è l'inimico del bene".

15.08.2024 16:42 — 👍 1 🔁 0 💬 0 📌 0

The Forschungsbibliothek Gotha of the Universität Erfurt is one of the largest German libraries holding historical manuscripts and books, and it preserves two items of UNESCO Memory of the World documentary heritage.

#Medieval #Historian #DigitalHumanities Friedrich-Schiller-Universität Jena

Project Manager DFG 3D-Viewer & Jena4D (http://4dcity.org/intro)

Managing Editor JHNR (https://jhnr.net/about)

12th annual conference of the association »Digital Humanities im deutschsprachigen Raum«, 23–27 February 2026 in Vienna @univie.ac.at

https://dhd2026.digitalhumanities.de

#DHd2026

Digital Humanities | Kunstgeschichte | Colorimetry | Art History | history of concepts | @RaDiHum20 | rotrechnen | kuwiki | wikiconf

This is my second Bluesky account. The first I deleted. With this new account I need to find you guys all over again

digital humanities & art history (M.A. 👩‍🎓)

cultural heritage. digitization. copies & reproductions. exhibiting digital art.

🧑‍💻 SODa Team (sammlungen.io) 🧡

#DigitalHumanities & #DigitalScholarlyEdition of prints & manuscripts, especially:

* A. v. #Humboldt @BBAW 🌐 ➡️ https://edition-humboldt.de/

* J. W. v. #Goethe […]

🌉 bridged from ⁂ https://fedihum.org/@dta_cthomas, follow @ap.brid.gy to interact

Computational Linguistics and Literary Studies Postdoc at iSchool, UC Berkeley | Founding member of @forTEXT | Visiting Scholar at Stanford LitLab | Editorial Ass. @jcls […]

🌉 bridged from ⁂ https://fedihum.org/@SvenjaGuhr, follow @ap.brid.gy to interact

Digital Historian | nerd | escapist

Bielefeld University

Initiated @SafeguardingResearch on Jan 15th, 2025.

"No Musk can shut us down."

Pronouns: he/him

🏳️‍🌈 🩷💜💙 #SmashFascism #Histodon

#BijiRojava

(Digital) […]

[bridged from https://fedihum.org/@lavaeolus on the fediverse by https://fed.brid.gy/ ]

Now on bsky too. Digital Humanities. Computational Linguistics. Blogging. Working on the journey to the moon. Over there: @spinfocl@fedihum.org Managing director @idhcologne@bsky.social

#DH, #LIS, #Linguistik #Annotationsnerd & more | Staff member at the DKZ QUADRIGA at the IBI of HU Berlin | Doctoral candidate at the IBI | Editor & co-convenor @publicDH.bsky.social | Host @radihum20.bsky.social #Podcast

#DigitalHumanities and #ReligiousStudies with a focus on #TextAsData 📝, #Networks 🕸️ and #CulturalHeritage 🗿. Private account. Searchable via Tootfinder.

My […]

[bridged from https://fedihum.org/@felwert on the fediverse by https://fed.brid.gy/ ]

Acamom. Comparative literature with computers @Uni Halle. https://jakamen.github.io/

Social and media historian of the late Ottoman Eastern Mediterranean. Vested in global and multilingual #DigitalHumanities, #UrbanHistory […]

🌉 bridged from https://digitalcourage.social/@tillgrallert on the fediverse by https://fed.brid.gy/

#dh #histphil Between jobs.

[bridged from https://fedihum.org/@stefan_hessbrueggen on the fediverse by https://fed.brid.gy/ ]

CONNECTING THE GLOBAL AND THE LOCAL.

Working on Hokusai publication. Postdoctoral Fellow, East Asian Art History, University of Zurich. Japanese arts, prints and digital humanities specialist.

Asst Prof @ University of Washington Information School // PhD in English from WashU in St. Louis

I’m interested in books, data, social media, and digital humanities.

They call me "Eyre Jordan" on the bball court 🏀

https://melaniewalsh.org/

Deputy Director at @dhiparis. Digital History, French-German Relations 19th c. and Science Communication - Personal account (she/her).

[bridged from https://fedihum.org/@Mareike2405 on the fediverse by https://fed.brid.gy/ ]

Professor of Computational Philology and Medieval Studies @TUDarmstadt | President of @adwmainz | Photo Katrin Binner, Background Image Ruth Reiche CC BY-NC