Mailer that reads: Molly, Seasons may change, but the need for blood is constant.

slightly ominous mailer from the Red Cross

01.10.2025 19:24 — 👍 7008 🔁 1245 💬 176 📌 164

@jamesmzd.bsky.social

PhD Candidate at the University of Michigan School of Information :: Knowledge/Cultural Production, Science of Science :: he/him/his https://jamesmzd.github.io/

Analysing submissions to 60 Institute of Physics journals, @jamesmzd.bsky.social & Misha Teplitskiy find that #PeerReviewers tend to favour submissions from their own countries, meaning authors from countries with few reviewers face a major structural barrier.

@lseimpactblog.bsky.social

🗃️ From the archive: "When implemented without democratic oversight, global performance metrics risk becoming tools of centralisation"

#Bibliometrics #ResearchAssessment

Austerity measures all over the place but the university has $50 million for… whatever this is

18.09.2025 23:29 — 👍 63 🔁 19 💬 4 📌 8

There’s a Drake and Josh version that students will also not understand

18.09.2025 23:04 — 👍 1 🔁 0 💬 0 📌 0

Miyagawa Shuntei's 1898 painting, "Playing Go (Japanese Chess)"

How to quantify the impact of AI on long-run cultural evolution? Published today, I give it a go!

400+ years of strategic dynamics in the game of Go (Baduk/Weiqi), from feudalism to AlphaGo!

💥New: Lack of geographic diversity in peer review skews “publishable” research

✍️ @jamesmzd.bsky.social & Misha Teplitskiy

#PeerReview #PeerReviewWeek #PRW25 #AcademicSky

Abstract: Under the banner of progress, products have been uncritically adopted or even imposed on users — in past centuries with tobacco and combustion engines, and in the 21st with social media. For these collective blunders, we now regret our involvement or apathy as scientists, and society struggles to put the genie back in the bottle. Currently, we are similarly entangled with artificial intelligence (AI) technology. For example, software updates are rolled out seamlessly and non-consensually, Microsoft Office is bundled with chatbots, and we, our students, and our employers have had no say, as it is not considered a valid position to reject AI technologies in our teaching and research. This is why in June 2025, we co-authored an Open Letter calling on our employers to reverse and rethink their stance on uncritically adopting AI technologies. In this position piece, we expound on why universities must take their role seriously to a) counter the technology industry’s marketing, hype, and harm; and to b) safeguard higher education, critical thinking, expertise, academic freedom, and scientific integrity. We include pointers to relevant work to further inform our colleagues.

Figure 1. A cartoon set theoretic view on various terms (see Table 1) used when discussing the superset AI (black outline, hatched background): LLMs are in orange; ANNs are in magenta; generative models are in blue; and finally, chatbots are in green. Where these intersect, the colours reflect that, e.g. generative adversarial network (GAN) and Boltzmann machine (BM) models are in the purple subset because they are both generative and ANNs. In the case of proprietary closed source models, e.g. OpenAI’s ChatGPT and Apple’s Siri, we cannot verify their implementation and so academics can only make educated guesses (cf. Dingemanse 2025). Undefined terms used above: BERT (Devlin et al. 2019); AlexNet (Krizhevsky et al. 2017); A.L.I.C.E. (Wallace 2009); ELIZA (Weizenbaum 1966); Jabberwacky (Twist 2003); linear discriminant analysis (LDA); quadratic discriminant analysis (QDA).

Table 1. Below some of the typical terminological disarray is untangled. Importantly, none of these terms are orthogonal nor do they exclusively pick out the types of products we may wish to critique or proscribe.

Protecting the Ecosystem of Human Knowledge: Five Principles

Finally! 🤩 Our position piece: Against the Uncritical Adoption of 'AI' Technologies in Academia:

doi.org/10.5281/zeno...

We unpick the tech industry’s marketing, hype, & harm; and we argue for safeguarding higher education, critical thinking, expertise, academic freedom, & scientific integrity.

1/n

Speaker and opening slide with red background

After the lunch break, the next session will be 'Open Science and Data Sharing'

The first of three talks will be by Vincent Yuan, with 'Researcher Adherence to Journal Data Sharing Policies: A Meta-Research Study'

VY: Science runs on trust - but we need evidence.

#PRC10

Proud of my union coworkers ( @geo3550.bsky.social ) for pushing to bring graduate research back under contractual protections. All grad workers were in unit when we started 50 years ago, it’s time we get back to that. www.michigandaily.com/news/adminis...

05.09.2025 15:40 — 👍 0 🔁 0 💬 0 📌 0

Nothing like good ol’ Michigan football straight to the face

31.08.2025 00:01 — 👍 1 🔁 1 💬 0 📌 0

Qualitative researchers are increasingly utilising reflexive practices to ensure transparency and assure quality. Current researcher reflexivity, we show, often hinges upon a set of assumptions about reflexivity itself. Through a critical literature review combined with reflexive vignettes, we offer an epistemological critique of reflexivity. We advocate for robust reflexivity, a practice that critically reflects on reflexivity itself. We thus encourage qualitative researchers to critique their reflexive practices in four ways: (1) we challenge the idea that reflexivity leads to revelation, and show the ways this idea reintroduces positivist notions of Truth under a constructionist guise; (2) we challenge simple binaries within many positionality statements, nuancing ideas of insider and outsider status, affinity and difference, and the dynamism of identity over time; (3) we show how reflexivity is a socially and culturally embedded practice, rather than a neutral and universal cognitive practice; and (4) we foreground the power dynamics of reflexivity, cautioning against the co-option of reflexivity in ways that perpetuate social inequities and mask hierarchies within research. To support robust reflexive practices, we offer a toolkit of questions that can act as prompts for critical engagements with reflexivity, and argue for the creation of more robustly reflexive methodological resources.

Reflexive Reflexivity

“We advocate for *robust reflexivity,* a practice that critically reflects on reflexivity itself.”

By @catherinetrundle.bsky.social and colleagues

Open Access: journals.sagepub.com/doi/full/10....

Text: superhuman intelligence already exists, and how it has affected the game of chess tells us something

The future of AI superintelligence is already here, it is just not evenly distributed yet

#genAI #LLMs #cogsci #chess

https://tomstafford.substack.com/p/superhuman-intelligence-already-exists

Congratulations @jamesmzd.bsky.social , USF MS-IDEC class of 2020!! Excellent and timely research on the structural forces working against international researchers with science of science superstar @innovation.bsky.social .

#econsky #polisky #socsky

POLICY IMPLICATIONS 🛠️

We demonstrate how a lack of reviewer diversity can structurally advantage certain authors and ideas over others.

Anonymizing author identity may not be effective, supporting calls for diversification policies. However, this may require long-term investments in training.

Private information? Many countries had lockdowns and travel restrictions in 2020 and 2021 due to the COVID-19 pandemic. We use this period as a negative shock to reviewers' information from seminars and conferences. The same-country preference was only slightly weaker during this period.

14.08.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

Identity-based bias? A quasi-random policy rollout across journals of voluntary double-anonymization enabled an instrumental variables analysis. Hiding author identity did not reduce reviewer country homophily.

14.08.2025 17:55 — 👍 0 🔁 0 💬 1 📌 0

MECHANISMS ⚙️

We consider 3 mechanisms behind reviewers' country homophily: identity-based bias, private information, and localized tastes

We conduct 2 exploratory analyses and provide suggestive evidence that localized tastes are the primary driver of reviewers' same-country preference.

Comparing reviewers of the same manuscript (using fixed effects) allows us to control for common confounders (e.g., quality, topic, team size).
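The within-manuscript comparison can be sketched as follows. This is a minimal illustration, assuming a linear probability model with manuscript fixed effects estimated by demeaning within each manuscript. The function name `within_manuscript_effect`, the tuple layout, and the toy reviews are hypothetical and are not the paper's data or estimation code.

```python
from collections import defaultdict


def within_manuscript_effect(reviews):
    """Slope of recommend on same_country after removing manuscript means.

    reviews: iterable of (manuscript_id, same_country, recommend) tuples,
    where same_country and recommend are 0/1 (hypothetical layout).
    """
    by_manuscript = defaultdict(list)
    for manuscript_id, same_country, recommend in reviews:
        by_manuscript[manuscript_id].append((same_country, recommend))

    numerator = 0.0
    denominator = 0.0
    for rows in by_manuscript.values():
        # Demeaning within a manuscript removes anything shared by all of
        # its reviewers (quality, topic, team size).
        x_mean = sum(x for x, _ in rows) / len(rows)
        y_mean = sum(y for _, y in rows) / len(rows)
        for x, y in rows:
            numerator += (x - x_mean) * (y - y_mean)
            denominator += (x - x_mean) ** 2

    if denominator == 0:
        raise ValueError("no within-manuscript variation in same_country")
    return numerator / denominator


# Toy data, invented for illustration only.
toy_reviews = [
    ("m1", 1, 1), ("m1", 0, 0),
    ("m2", 1, 1), ("m2", 0, 1),
    ("m3", 0, 0), ("m3", 0, 1),  # no same-country reviewer: adds nothing
]
effect = within_manuscript_effect(toy_reviews)
print(f"same-country effect: {effect * 100:.0f}pp")
```

Manuscripts whose reviewers all share the same `same_country` value drop out of the estimate, which is why only manuscripts with a mix of same-country and other reviewers identify the effect in this sketch.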

Together, these conditions structurally advantage authors from relatively wealthier countries.

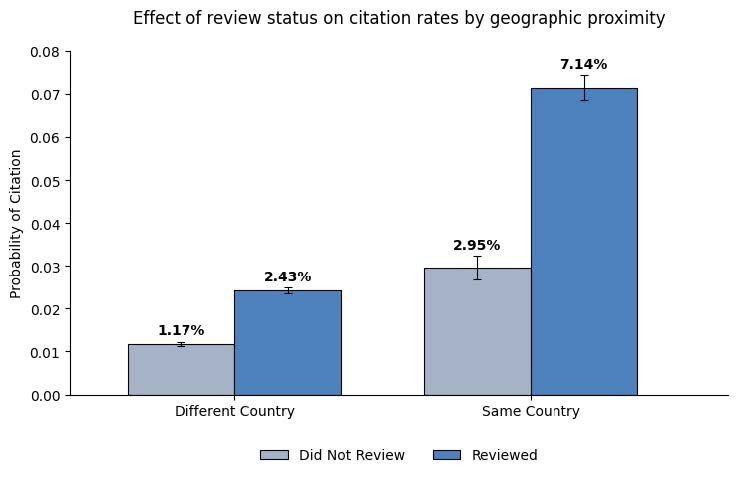

MAIN FINDINGS 🔎

1) Peer reviewers from the corresponding author's country were ~5pp more likely to recommend publication than other reviewers for the same manuscript.

2) Corr. authors' likelihood of having a same-country reviewer was highest for those in countries well-represented among reviewers.

Many have pointed out mismatches between reviewer and author populations, but evidence that this shapes what gets published has been inconclusive due to lack of granular peer review data.

Using review-level metadata on ~205k submissions from 2018-2022, we demonstrate two conditions for this bias...

Title and author info of PNAS paper linked

Now published at PNAS ‼️ w/ @innovation.bsky.social

How does peer reviewer diversity affect fairness in peer review and the direction of published science? We find a "geographical representation bias" in 60 STEM journals published by @ioppublishing.bsky.social.

www.pnas.org/doi/10.1073/...

Stack of papers and books

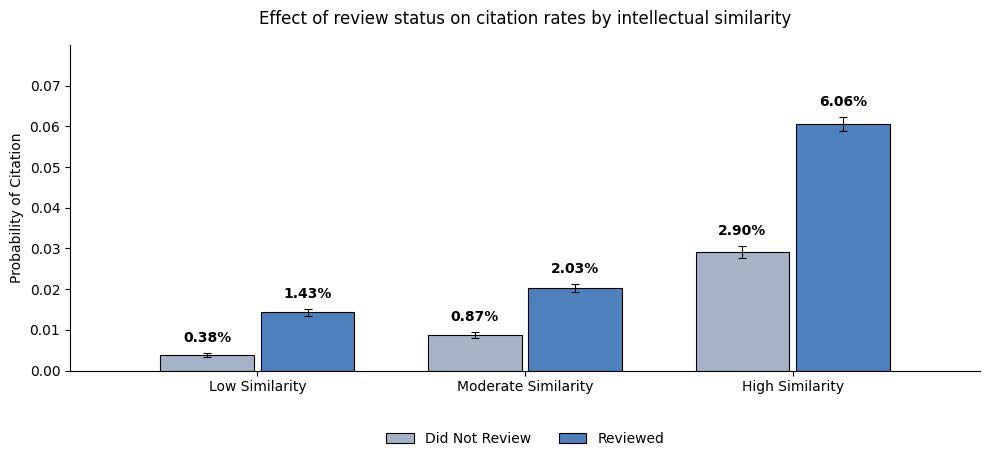

This paper explores academic peer review as a source of knowledge transfer and learning for the reviewers themselves.

spkl.io/63328A1crC

@innovation.bsky.social @jamesmzd.bsky.social

I think that would be an ideal experiment (just need $$ to run it)!! Thinking about how to do this in an ecologically valid way… especially since some folks are chiming in that they only read when reviewing 🤔

07.07.2025 03:06 — 👍 1 🔁 0 💬 0 📌 0

Learning vs. familiarity/awareness looks functionally similar under the definition of learning we’re using from the Org Behavior lit. One suggestion from @mariomalicki.bsky.social is to look at *how* reviewers cite (e.g., substantive vs. rhetorical)

07.07.2025 02:49 — 👍 0 🔁 0 💬 1 📌 0

One explanation could be the 3yr citation window we set. Full paper has similar result with infinite citation window, but these papers were *reviewed* in 2018-19 (maybe published later). It’s possible this effect would be stronger with longer windows as time goes on…

07.07.2025 02:46 — 👍 1 🔁 0 💬 0 📌 0

We were partially motivated by a 2018 Publons survey where ~1/3 of respondents mentioned keeping up w their field as a reason for reviewing. To our knowledge, we’re the first to observe this behaviorally!!

06.07.2025 18:34 — 👍 1 🔁 0 💬 0 📌 0

Had a great time presenting these findings at #metascience2025 and looking forward to getting even more eyes on it at the #NBERSummerInstitute2025 session on Science of Science Funding (July 17-18)!

Catch it online July 17, 3:30pm at www.youtube.com/nbervideos

Implications

1. Highlighting personal learning benefits may help with reviewer recruitment

2. Peer review is an overlooked site of knowledge dissemination. Don't outsource evaluation labor to #LLMs?

3. Editors may take geographic/intellectual proximity into account when assigning reviewers

Additional Findings

1. "Learning effect" from peer reviewing is stronger for reviewers closer in geographic and intellectual distance (same-country and abstract cosine similarity)

2. Reviewing from a greater distance brings citation likelihood close to non-reviewers of a closer distance
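For readers unfamiliar with abstract cosine similarity as a measure of intellectual distance, here is a minimal sketch. It assumes plain word-count vectors; the thread does not say how abstracts were actually represented, so the tokenizer, the function name `cosine_similarity`, and the example strings are all hypothetical.

```python
import math
import re
from collections import Counter


def cosine_similarity(text_a, text_b):
    """Cosine similarity between two texts using raw word counts.

    Assumption: bag-of-words vectors; the paper may use another embedding.
    """
    counts_a = Counter(re.findall(r"[a-z0-9]+", text_a.lower()))
    counts_b = Counter(re.findall(r"[a-z0-9]+", text_b.lower()))

    shared_words = counts_a.keys() & counts_b.keys()
    dot = sum(counts_a[w] * counts_b[w] for w in shared_words)
    norm_a = math.sqrt(sum(c * c for c in counts_a.values()))
    norm_b = math.sqrt(sum(c * c for c in counts_b.values()))

    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has no direction to compare
    return dot / (norm_a * norm_b)


# Invented example strings, not real abstracts.
close = cosine_similarity("quantum dots", "quantum wells")
far = cosine_similarity("quantum dots", "peer review")
print(round(close, 3), round(far, 3))
```

Values near 1 indicate abstracts with heavily overlapping vocabulary (intellectually closer under this assumption), and values near 0 indicate little overlap.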