Congrats and welcome to the DMV area!!!

17.06.2025 02:45 — 👍 0 🔁 0 💬 1 📌 0

🛠️ Interested in how your LLM behaves under this circumstance? We released the code to generate the diagnostic data for your own LLM.

@mdredze @loadingfan

8/8

16.06.2025 12:02 — 👍 1 🔁 0 💬 0 📌 0

🔗 Takeaways for practitioners

1. Check for knowledge conflict before prompting.

2. Add further explanation to guide the model in following the context.

3. Monitor hallucinations even when context is supplied.

7/8

16.06.2025 12:02 — 👍 1 🔁 0 💬 1 📌 0

📏 Implications:

⚡When using an LLM as a judge, its parametric knowledge could lead to incorrect judgment :(

⚡ Retrieval systems need mechanisms to detect and resolve contradictions, not just shove text into the prompt. 6/8

16.06.2025 12:02 — 👍 1 🔁 0 💬 1 📌 0

🧠 Key finding #3:

“Just give them more explanation?” Providing rationales helps—it pushes models to lean more on the context—but it still can’t fully silence the stubborn parametric knowledge. 5/8

16.06.2025 12:02 — 👍 1 🔁 0 💬 1 📌 0

⚖️ Key finding #2:

Unsurprisingly, LLMs prefer their own memories. Even when we explicitly instruct them to rely on the provided document, traces of the “wrong” internal belief keep leaking into answers. 4/8

16.06.2025 12:02 — 👍 1 🔁 0 💬 1 📌 0

⚠️ Key finding #1:

If the task doesn’t require external knowledge (e.g., pure copy), conflict barely matters. However, as soon as knowledge is needed, accuracy tanks when context and memory disagree.

3/8

16.06.2025 12:02 — 👍 1 🔁 0 💬 1 📌 0

🛠️ We create diagnostic data that…

- Agrees/Contradicts with the model’s knowledge

- Contradictions with different levels of plausibility

- Tasks requiring different levels of knowledge

2/8

16.06.2025 12:02 — 👍 1 🔁 0 💬 1 📌 0

What Is Seen Cannot Be Unseen: The Disruptive Effect of Knowledge Conflict on Large Language Models

Large language models frequently rely on both contextual input and parametric knowledge to perform tasks. However, these sources can come into conflict, especially when retrieved documents contradict…

What happens when an LLM is asked to use information that contradicts its knowledge? We explore knowledge conflict in a new preprint📑

TLDR: Performance drops, and this could affect the overall performance of LLMs in model-based evaluation.📑🧵⬇️ 1/8

#NLProc #LLM #AIResearch

16.06.2025 12:02 — 👍 3 🔁 1 💬 1 📌 1

Paper Link: aclanthology.org/2025.repl4nl...

06.05.2025 23:27 — 👍 0 🔁 0 💬 0 📌 0

It was quite encouraging to find that many friends share my concern of "minor details" obstructing us from gaining reliable conclusions. Really hope that we all can provide well-documented experimentsl details and value the so-called "engineering contributions" more.

06.05.2025 23:25 — 👍 0 🔁 0 💬 0 📌 0

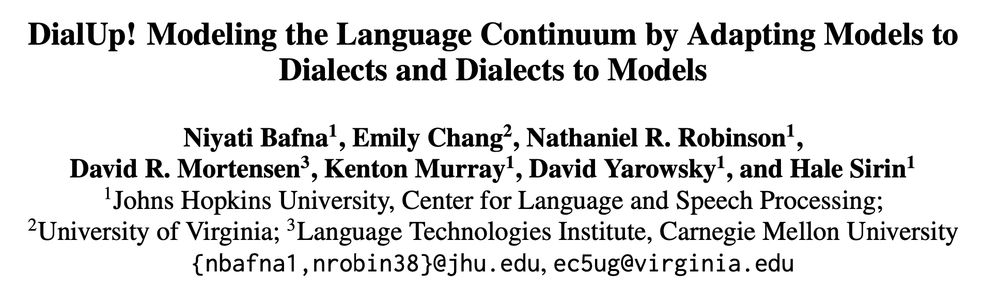

Dialects lie on continua of (structured) linguistic variation, right? And we can’t collect data for every point on the continuum...🤔

📢 Check out DialUp, a technique to make your MT model robust to the dialect continua of its training languages, including unseen dialects.

arxiv.org/abs/2501.16581

27.02.2025 02:44 — 👍 13 🔁 5 💬 1 📌 1

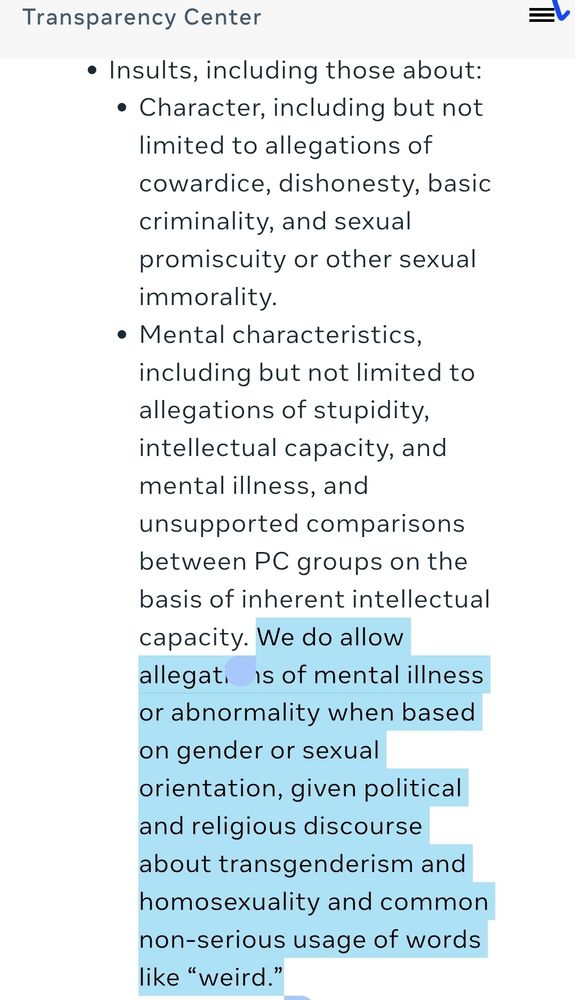

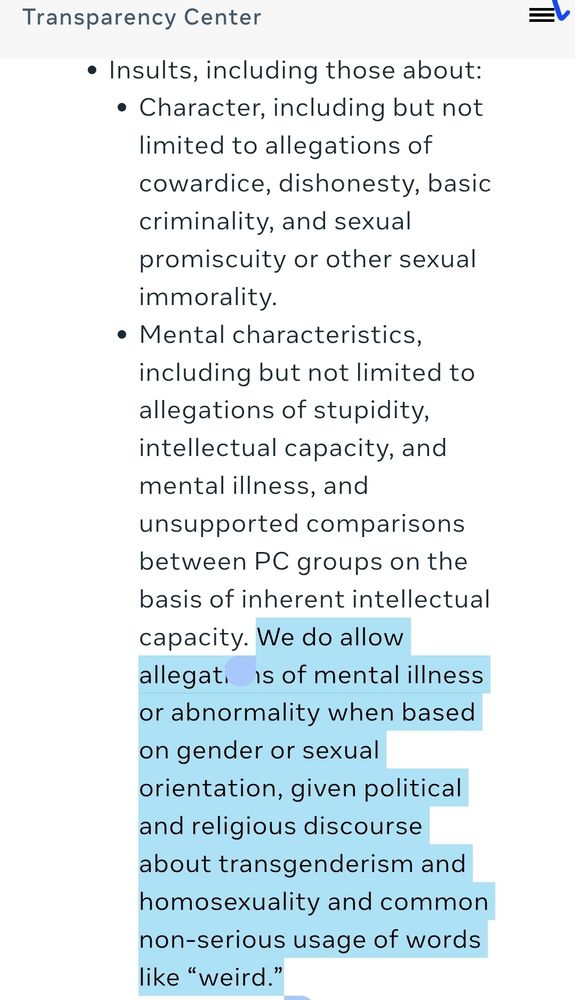

The image is from a "Transparency Center" document and lists guidelines regarding acceptable and prohibited content for insults. It mentions:

1. Insults about:

Character, such as cowardice, dishonesty, criminality, and sexual promiscuity or immorality.

Mental characteristics, including but not limited to accusations of stupidity, intellectual capacity, and mental illness, as well as unsupported comparisons among politically correct (PC) groups based on inherent intellectual traits.

2. Highlighted section:

The document allows allegations of mental illness or abnormality when tied to gender or sexual orientation, referencing political and religious discourse about transgenderism and homosexuality. It also acknowledges the non-serious use of terms like "weird."

Meta literally created a LGBTQ exception for calling someone mentally ill as an insult. You can't do it for any other group except LGBTQ people.

08.01.2025 01:51 — 👍 15386 🔁 6337 💬 646 📌 1601

with reasonable freedom, depending on the scale/focus of the business.

Case in point, we are looking to expand the research/foundation models team at Orby AI and are looking for highly motivated researchers and ML/Research engineers. Please reach out if you're interested in learning more!

/fin

08.01.2025 19:39 — 👍 2 🔁 2 💬 0 📌 0

ARR Dashboard

Excited to start my #ARR #NLP reviews!

I'll try my best and see if I can get 100% of my reviews to be 'great' this round.

If you didn't see it already, ARR publishes how many of your reviews are considered to be 'great': stats.aclrollingreview.org

Join me for the challenge :)

07.01.2025 14:54 — 👍 12 🔁 2 💬 1 📌 1

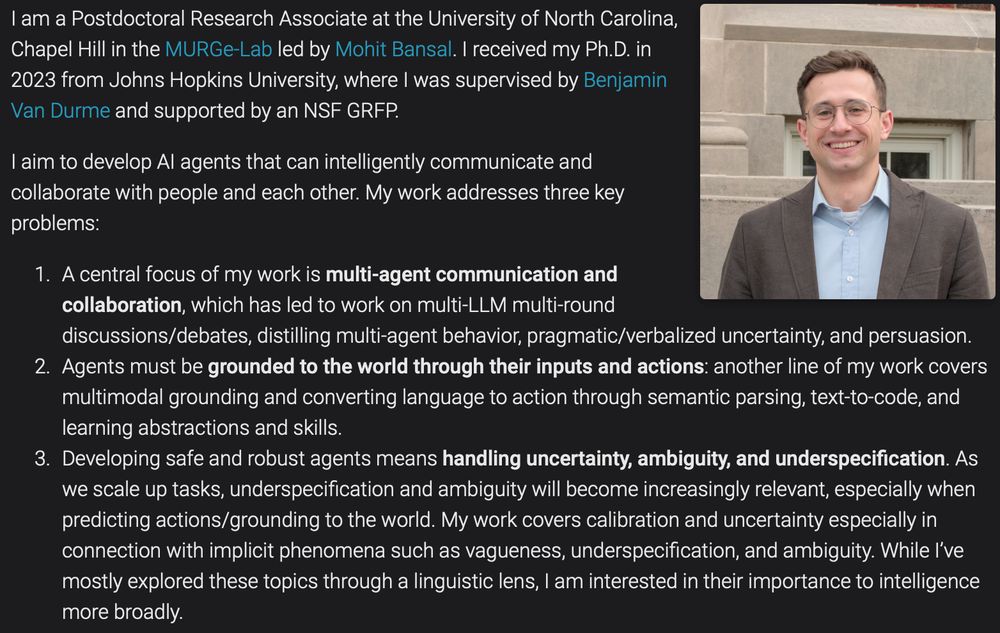

🚨 I am on the faculty job market this year 🚨

I will be presenting at #NeurIPS2024 and am happy to chat in-person or digitally!

I work on developing AI agents that can collaborate and communicate robustly with us and each other.

More at: esteng.github.io and in thread below

🧵👇

05.12.2024 19:00 — 👍 47 🔁 14 💬 2 📌 6

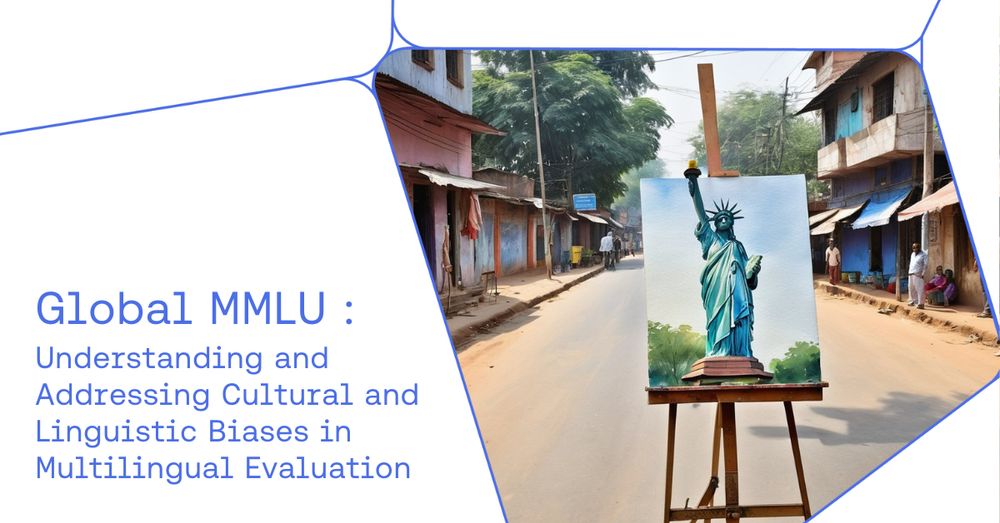

Is MMLU Western-centric? 🤔

As part of a massive cross-institutional collaboration:

🗽Find MMLU is heavily overfit to western culture

🔍 Professional annotation of cultural sensitivity data

🌍 Release improved Global-MMLU 42 languages

📜 Paper: arxiv.org/pdf/2412.03304

📂 Data: hf.co/datasets/Coh...

05.12.2024 16:31 — 👍 59 🔁 12 💬 7 📌 6

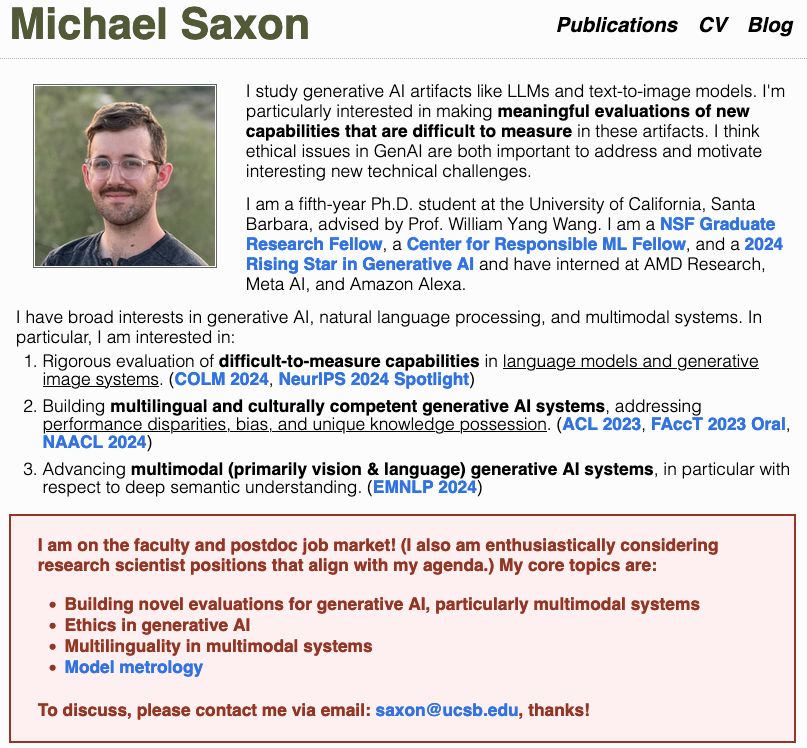

🚨I too am on the job market‼️🤯

I'm searching for faculty positions/postdocs in multilingual/multicultural NLP, vision+language models, and eval for genAI!

I'll be at #NeurIPS2024 presenting our work on meta-evaluation for text-to-image faithfulness! Let's chat there!

Papers in🧵, see more: saxon.me

06.12.2024 01:44 — 👍 49 🔁 9 💬 1 📌 2

A scatter plot comparing language models by performance (y-axis, measured in average performance on 10 benchmarks) versus training computational cost (x-axis, in approximate FLOPs). The plot shows OLMo 2 models (marked with stars) achieving Pareto-optimal efficiency among open models, with OLMo-2-13B and OLMo-2-7B sitting at the performance frontier relative to other open models like DCLM, Llama 3.1, StableLM 2, and Qwen 2.5. The x-axis ranges from 4x10^22 to 2x10^24 FLOPs, while the y-axis ranges from 35 to 70 benchmark points.

Excited to share OLMo 2!

🐟 7B and 13B weights, trained up to 4-5T tokens, fully open data, code, etc

🐠 better architecture and recipe for training stability

🐡 staged training, with new data mix Dolmino🍕 added during annealing

🦈 state-of-the-art OLMo 2 Instruct models

#nlp #mlsky

links below👇

26.11.2024 20:59 — 👍 68 🔁 12 💬 1 📌 1

Agree. Oth it might be helpful as a way to receive report and doubt. There is one user reported that the authors of a paper I was reviewing violate the anonymity policy by posting their submissions in public.

20.11.2024 22:48 — 👍 0 🔁 0 💬 1 📌 0

🙋

20.11.2024 06:57 — 👍 1 🔁 0 💬 0 📌 0

CLSP

Join the conversation

Putting together a JHU Center for Language and Speech Processing starter pack!

Please reply or DM me if you're doing research at CLSP and would like to be added - I'm still trying to find out which of us are on here so far.

go.bsky.app/JtWKca2

19.11.2024 15:37 — 👍 22 🔁 10 💬 2 📌 1

A starter pack for #NLP #NLProc researchers! 🎉

go.bsky.app/SngwGeS

04.11.2024 10:01 — 👍 253 🔁 100 💬 45 📌 13

Finally, use your app password (https://buff.ly/3WkpGuu ) when using these tools for better security. I have no opinions about social media, the maintenance of which sometimes annoys me while I still want to keep connected with friends. Hope this can help save time.

18.11.2024 17:48 — 👍 0 🔁 0 💬 0 📌 0

Import previous tweets from X to 🦋: https://buff.ly/40SlAMZ

Cross-Posting X, 🦋, and other social media:

1. Buffer (https://buffer.com/)

2. TamperMonkey Script (https://buff.ly/48ZBrLU)

18.11.2024 17:48 — 👍 0 🔁 0 💬 1 📌 0

Transfer follow list from X to 🦋.

1. Sky Follower Bridge (Browser Plugin): https://buff.ly/40g5FaU

(Code): https://buff.ly/4eE1fOP

2. Starter Packs: QueerinAI @jasmijnbastings.bsky.social: go.bsky.app/RkBEqxz

NLP Researchers @mariaa.bsky.social: https://buff.ly/4fQvdQD

18.11.2024 17:48 — 👍 1 🔁 0 💬 1 📌 0

Dealing with a new social media account can be vexatious. Here I compiled a thread of resources that might be helpful to transition to Bluesky 🦋 . 🧵⬇️ Thread below

18.11.2024 17:48 — 👍 6 🔁 1 💬 1 📌 0

✨ Comprehensive evaluation of the INTERPLAY between model internals and behavior

✨ https://interplay-workshop.github.io/

✨ Submission due June 23rd

✨ October 10th, @colmweb.org

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

NLP Graduate Researcher at The University of Tehran #NLProc

PhD student @jhuclsp. Previously @AIatMeta, @InriaParisNLP, @EM_LCT| #NLProc

Ph.D. Candidate in NLP focusing at @ds-hamburg.bsky.social on Multilinguality and Multiculturality #NLProc

associate prof at UMD CS researching NLP & LLMs

Computational Linguists—Natural Language—Machine Learning

CS PhD JohnsHopkins | Ex NLProc @ Genentech | Information Seeking | Disinformation Agents | Copilots for Social Good | PhD

@JHUCLSP @JHUMCEH

#NLProc

🎓 PhD student @uwcse @uwnlp. 🛩 Private pilot. Previously: 🧑💻 @oculus, 🎓 @IllinoisCS. 📖 🥾 🚴♂️ 🎵 ♠️

NLP/ML @usc, @uwcse. she/her.🏹🤖👩🏻🍳. velocitycavalry.github.io. Multilinguality, retrieval, refining LLMs…

Stanford CS PhD. Prev: undergrad at @jhuclsp.bsky.social

Professor at UW; Researcher at Meta. LMs, NLP, ML. PNW life.

PhD student at Johns Hopkins CLSP (@jhuclsp.bsky.social).

Researching natural and formal language processing.

williamjurayj.com

PhD student at USC Annenberg; LLMs / CSS / SNA; @ HUMANS Lab (http://www.emilio.ferrara.name/)

PhD student at JHU. @Databricks MosaicML, Microsoft Semantic Machines/Translate, Georgia Tech. I like datasets!

https://marcmarone.com/