As a Highlight ✨ in the main conference, we will present the results of a new investigation into object tracking in first-person vision, compared against third-person videos. Work done at the MLP lab of the University of Udine, led by Christian Micheloni.

📆 Oct 21st, 15:00 - 17:00

📍Poster #542

3/3

18.10.2025 15:59

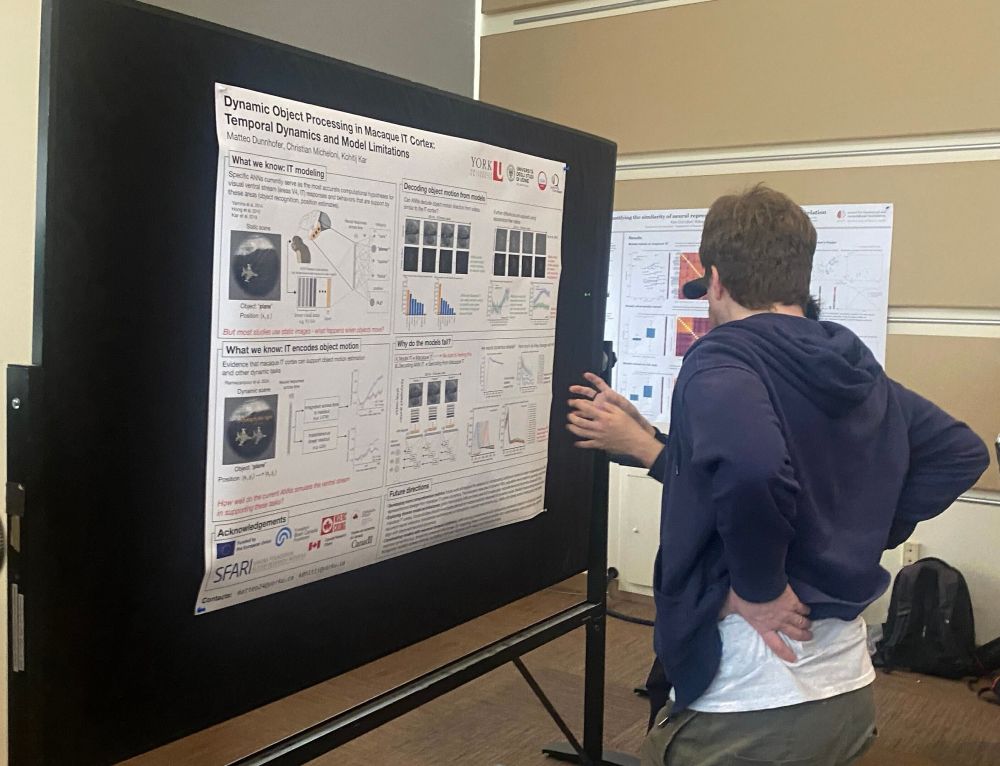

At the Human-inspired Computer Vision #HiCV2025 workshop, I will present a poster with recent results comparing video-based ANNs with the primate visual system. Ongoing project at the ViTA lab @yorkuniversity.bsky.social, led by @kohitij.bsky.social.

📆 Oct 20th, 8:30 - 12:30

📍Room 309

2/3

18.10.2025 15:59

On my way to #ICCV2025 in Honolulu 🏝️

@iccv.bsky.social

I will share results from ongoing projects I am working on at

@yorkuniversity.bsky.social

and at the University of Udine

Looking forward to discussions!

1/3

18.10.2025 15:59

Our #ICCV2025 paper will be presented as a Highlight ✨

24.07.2025 17:40

Joint work with @zairamanigrasso.bsky.social and Christian Micheloni

Funded by PRIN 2022 PNRR, MSCA Actions

(7/7)

23.07.2025 14:51

These FPV-specific challenges include:

- Frequent object disappearances

- Continuous camera motion altering object appearance

- Object distractors

- Wide field-of-view distortions near frame edges

(5/7)

23.07.2025 14:51

- Trackers learn viewpoint biases and perform best on the viewpoint used during training.

- FPV tracking presents its own specific challenges.

(4/7)

23.07.2025 14:51

Key takeaways from our study:

- FPV is challenging for state-of-the-art generalist trackers.

- Tracking objects in human-object interaction videos is difficult across both first- and third-person viewpoints.

(3/7)

23.07.2025 14:51

We specifically examined whether these drops are due to FPV itself or to the complexity of human-object interaction scenarios.

To do this, we designed VISTA, a benchmark using synchronized first- and third-person recordings of the same activities.

(2/7)

23.07.2025 14:51

Is Tracking really more challenging in First Person Egocentric Vision?

Our new #ICCV2025 paper follows up on our IJCV 2023 study (bit.ly/4nVRJw9).

We further investigate the causes of performance drops in object and segmentation tracking under egocentric FPV settings.

🧵 (1/7)

23.07.2025 14:51

The teams behind the workshops on Computer Vision for Winter Sports at @wacvconference.bsky.social and on Computer Vision in Sports at @cvprconference.bsky.social have joined forces in the organisation of a special issue in CVIU on topics related to computer vision applications in sports.

1/2

29.05.2025 15:50

Honored to be on the list this year!

10.05.2025 23:27

We are now live with the 3rd Workshop on Computer Vision for Winter Sports @wacvconference.bsky.social #WACV2025.

Make sure to attend if you are around!

04.03.2025 15:37

This paper contributes to our projects PRIN 2022 EXTRA EYE and PRIN 2022 PNRR TEAM, funded by the European Union - NextGenerationEU.

6/6

03.03.2025 17:49

This work was led by Moritz Nottebaum (stop by his poster!) at the Machine Learning and Perception Lab of the University of Udine.

5/6

03.03.2025 17:49

LowFormer achieves significant speedups in image throughput and latency on various hardware platforms, while maintaining or surpassing the accuracy of current state-of-the-art models across image recognition, object detection, and semantic segmentation.

4/6

03.03.2025 17:49

We used insights from such an analysis to enhance the hardware efficiency of backbones at the macro level, and introduced a slimmed-down version of multi-head self-attention to improve efficiency in the micro design.

3/6

03.03.2025 17:49

We found empirically that MACs alone do not accurately predict inference speed.

2/6

03.03.2025 17:49

Is attendance open to YorkU researchers (e.g., postdocs)? I would love to learn from your teaching style!

23.12.2024 01:25

Did the same a few weeks ago in Toronto. I think this is the best pizza flavor you can get in Canada 😂

15.12.2024 12:40

CV4WS@WACV2025

UPDATE (11/19/24): DEADLINE EXTENDED

The top-performing teams will be invited to present their solution at the 3rd Workshop on Computer Vision for Winter Sports at #WACV2025!

📄 sites.google.com/unitn.it/cv4...

3/3

01.12.2024 14:50

CodaLab - Competition

The challenge platform is hosted on CodaLab and you can find all the submission instructions there.

The submission deadline is January 31st, 2025.

🏆 codalab.lisn.upsaclay.fr/competitions...

2/3

01.12.2024 14:50

YouTube video by Matteo Dunnhofer

[WACV 2024] Tracking Skiers from the Top to the Bottom - qualitative examples

The #SkiTB Visual Tracking Challenge at #WACV2025 is open for submissions!

The goal is to track a skier in a video capturing his/her full performance across multiple video cameras, and it is based on our recently released SkiTB dataset.

🎥 youtu.be/Aos5iKrYM5o

1/3

01.12.2024 14:50

Would be happy to be added as well :) I am working on visual tracking, currently at YorkU ;)

24.11.2024 17:12

The outgoing phase of my MSCA project PRINNEVOT started a few days ago. I am now at the #CentreforVisionResearch of #YorkUniversity.

I am looking forward to the next two years of research at the intersection of computer vision and visual neuroscience! 🤖🧠

18.11.2024 13:30

