The result is a fair, end‑to‑end comparison that isolates what actually drives performance for radiology foundation models.

#AI #MedicalImaging #FoundationModels #ScalingLaws #Radiology

23.09.2025 08:34 — 👍 2 🔁 0 💬 0 📌 0

including not just findings but also lines & tubes classification/segmentation and report generation. We also test the effect of adding structured labels alongside reports during CLIP‑style pretraining, and study scaling laws under these controlled conditions.

23.09.2025 08:34 — 👍 0 🔁 0 💬 1 📌 0

That makes it hard to tell whether wins come from the model design or just from more data/compute or favorable benchmarks. We fix this by holding the pretraining dataset and compute constant and standardizing evaluation across tasks,

23.09.2025 08:34 — 👍 0 🔁 0 💬 1 📌 0

Why this matters: Prior comparisons of radiology encoders have often been apples‑to‑oranges: models trained on different datasets, with different compute budgets, and evaluated mostly on small datasets of finding‑only tasks.

23.09.2025 08:34 — 👍 0 🔁 0 💬 1 📌 0

✅ Pretrained on 3.5M CXRs to study scaling laws for radiology models

✅ Compared MedImageInsight (CLIP-based) vs RAD-DINO (DINOv2-based)

✅ Found that structured labels + text can significantly boost performance

✅ Showed that as few as 30k in-domain samples can outperform public foundation models

23.09.2025 08:34 — 👍 0 🔁 0 💬 1 📌 0

Data Scaling Laws for Radiology Foundation Models

Foundation vision encoders such as CLIP and DINOv2, trained on web-scale data, exhibit strong transfer performance across tasks and datasets. However, medical imaging foundation models remain constrai...

🩻Excited to share our latest preprint: “Data Scaling Laws for Radiology Foundation Models”

Foundation vision encoders like CLIP and DINOv2 have transformed general computer vision, but what happens when we scale them for medical imaging?

📄 Read the full preprint here: arxiv.org/abs/2509.12818

23.09.2025 08:34 — 👍 5 🔁 2 💬 1 📌 0

What a damning abstract

30.04.2025 08:29 — 👍 5 🔁 0 💬 0 📌 0

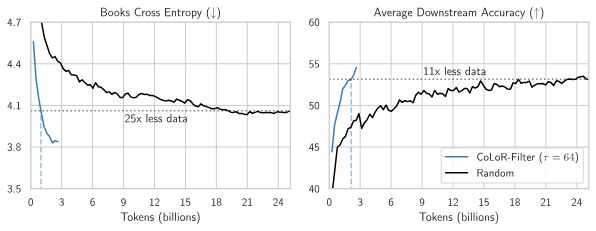

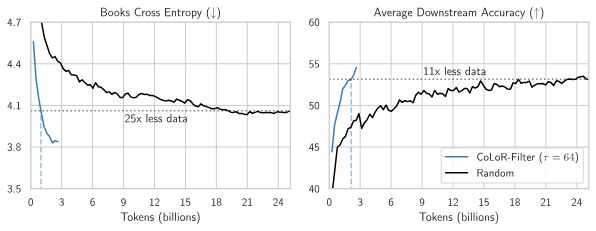

I want to reshare @brandfonbrener.bsky.social's @NeurIPSConf 2024 paper on CoLoR-Filter: A simple yet powerful method for selecting high-quality data for language model pre-training!

With @hlzhang109.bsky.social @schwarzjn.bsky.social @shamkakade.bsky.social

05.04.2025 12:04 — 👍 18 🔁 8 💬 2 📌 1

Screenshot of 'SHADES: Towards a Multilingual Assessment of Stereotypes in Large Language Models.'

SHADES is in multiple grey colors (shades).

⚫⚪ It's coming...SHADES. ⚪⚫

The first ever resource of multilingual, multicultural, and multigeographical stereotypes, built to support nuanced LLM evaluation and bias mitigation. We have been working on this around the world for almost **4 years** and I am thrilled to share it with you all soon.

10.02.2025 08:28 — 👍 128 🔁 23 💬 6 📌 3

Econometrics and Data Science

We’re looking for a motivated researcher to apply for a Marie Skłodowska-Curie postdoc with our Econometrics & Data Science group at SDU!

Focus: Causal Inference, Machine Learning, Big Data

Full support for promising projects

More info & apply:

www.sdu.dk/en/om-sdu/in...

30.01.2025 07:43 — 👍 18 🔁 12 💬 0 📌 1

ALT: a cartoon pikachu says join us in white letters

Apply!

Assistant Professor (L/SL) in AI, including computer vision [DL 5 Mar] @BristolUni - awarded AI University of the Year in 2024.

DM me or @_SethBullock_ with inquiries (please don't send us your CV; apply directly)

www.bristol.ac.uk/jobs/find/de...

28.01.2025 18:53 — 👍 20 🔁 9 💬 0 📌 0

🎓 💫 We are opening post-doc positions at the intersection of AI, data science, and medicine:

• Large Language Models for French medical texts

• Evaluating digital medical devices: statistics and causal inference

29.01.2025 08:19 — 👍 27 🔁 16 💬 1 📌 0

Happy to announce a collaboration with the Mayo Clinic to advance our research in radiology report generation!

newsnetwork.mayoclinic.org/discussion/m...

Tagging some of the core team: @valesalvatelli.bsky.social @fepegar.com @maxilse.bsky.social @sambondtaylor.bsky.social @anton-sc.bsky.social

15.01.2025 10:56 — 👍 7 🔁 2 💬 1 📌 0

The video of our talk "From Augustus to #Trump – Why #Disinformation Remains a Problem and What We Can Do About It Anyway" at #38c3, Europe's largest hacker conference, has been published, including the German original and English and Spanish translations:

media.ccc.de/v/38c3-von-a...

10.01.2025 08:49 — 👍 8 🔁 2 💬 1 📌 0

Internship in our group at Mila in reinforcement learning + graphs for reducing energy use in buildings.

More info and submit an application by Jan 13 here:

forms.gle/TCChXnvSAHqz...

Questions? Email donna.vakalis@mila.quebec with [intern!] in the subject line.

10.01.2025 01:14 — 👍 13 🔁 4 💬 0 📌 0

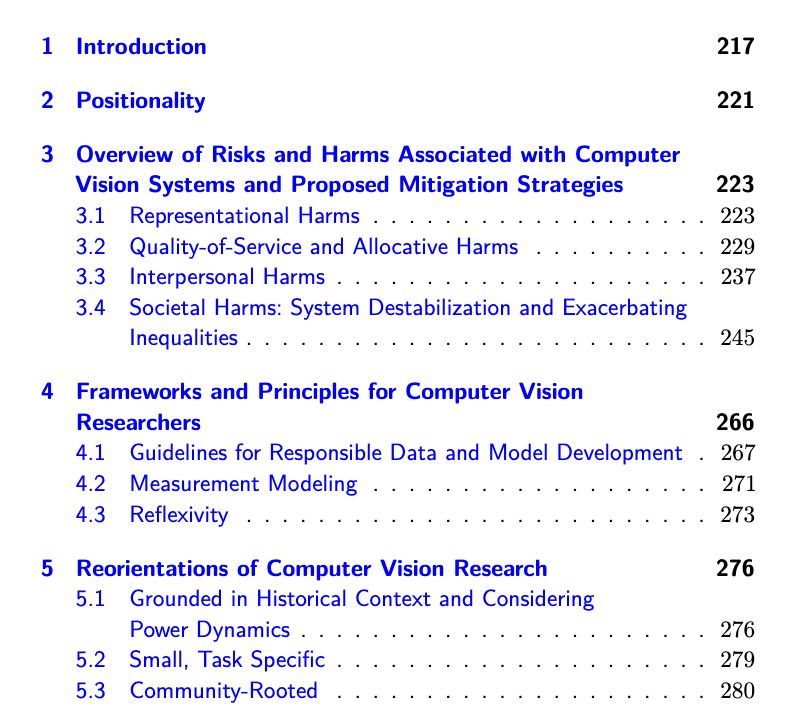

Screenshot of Table of Contents (Part 1)

Contents

1 Introduction

2 Positionality

3 Overview of Risks and Harms Associated with Computer Vision Systems and Proposed Mitigation Strategies

3.1 Representational Harms

3.2 Quality-of-Service and Allocative Harms

3.3 Interpersonal Harms

3.4 Societal Harms: System Destabilization and Exacerbating Inequalities

4 Frameworks and Principles for Computer Vision Researchers

4.1 Guidelines for Responsible Data and Model Development

4.2 Measurement Modeling

4.3 Reflexivity

5 Reorientations of Computer Vision Research

5.1 Grounded in Historical Context and Considering Power Dynamics

5.2 Small, Task Specific

5.3 Community-Rooted

Screenshot of Table of Contents (Part 2)

6 Systemic Change

6.1 Collective Action and Whistleblowing

6.2 Refusal/The Right not to Build Something

6.3 Independent Funding Outside of Military and Multinational Corporations

7 Conclusion

References

Dear computer vision researchers, students & practitioners🔇🔇🔇

Remi Denton & I have written what I consider to be a comprehensive paper on the harms of computer vision systems reported to date & how people have proposed addressing them, from different angles.

PDF: cdn.sanity.io/files/wc2kmx...

16.12.2024 16:52 — 👍 387 🔁 165 💬 8 📌 10

Posts, barbed wire fences and the main gate of the former Auschwitz II-Birkenau camp.

Help us commemorate victims, preserve memory & educate the world. Amplify our voice.

Your interaction here is more than just a click. It is an act of remembrance against forgetting. Like, share, or quote our posts.

Let people know that @auschwitzmemorial.bsky.social is present here.

29.11.2024 16:41 — 👍 1713 🔁 756 💬 48 📌 62

New timeline, same problems, same solution

13.12.2024 14:22 — 👍 250 🔁 49 💬 10 📌 3

Kudos to @blackhc.bsky.social for calling out this NeurIPS oral: openreview.net/forum?id=0NM... for not giving the rho-loss paper (arxiv.org/abs/2206.07137) the recognition it deserves!

12.12.2024 10:14 — 👍 8 🔁 0 💬 1 📌 1

In all of the reposts I see of articles criticising generative AI, I still don't see enough mention of work like Dr. Birhane's, which shows the biases against disadvantaged groups in the training datasets.

This is very good.

10.12.2024 19:20 — 👍 50 🔁 18 💬 1 📌 0

One of the reasons the university sector has come so spectacularly off the rails is the fact it's so unfriendly to family life, people with caring responsibilities and parents. The attitude is often: 'Not working 24/7? You're not fully committed!'

www.science.org/content/arti...

29.11.2024 09:10 — 👍 94 🔁 12 💬 8 📌 2

This is just sad

27.11.2024 19:38 — 👍 3 🔁 1 💬 0 📌 0

What a surprise (not!). Yet again ... poor evaluations of specialized medical LLMs result in overhyped claims relative to the base LLMs. #bioMLeval

27.11.2024 02:16 — 👍 76 🔁 14 💬 1 📌 1

Not sure about IRM though. They stopped their experiments early to get the colored MNIST results.

22.11.2024 22:54 — 👍 1 🔁 0 💬 1 📌 0

Good repost, I would have completely missed this :)

22.11.2024 14:12 — 👍 1 🔁 0 💬 0 📌 0

Thanks for the pack! Can you please add me :)

21.11.2024 13:22 — 👍 1 🔁 0 💬 1 📌 0

Screenshot of the paper.

Even as an interpretable ML researcher, I wasn't sure what to make of Mechanistic Interpretability, which seemed to come out of nowhere not too long ago.

But then I found the paper "Mechanistic?" by

@nsaphra.bsky.social and @sarah-nlp.bsky.social, which clarified things.

20.11.2024 08:00 — 👍 231 🔁 27 💬 7 📌 2

Postdoctoral Researcher @UvA_Amsterdam | Prev. @Meta, @KU_Leuven, @Netcetera_Buzz | Vision and Language

I’m not like the other Bayesians. I’m different.

Thinks about philosophy of science, AI ethics, machine learning, models, & metascience. postdoc @ Princeton.

Head of Div. Intelligent Medical Systems (IMSY) at DKFZ, director of the National Center of Tumor Diseases (NCT) Heidelberg and @ellis.eu Health board member. Excited about Medical Imaging AI, Surgical Data Science, and Validation of A(G)Is.

Cognitive scientist. Problem solving, representational change, analogies, case studies, qualitative methods

Former German Nazi concentration & extermination camp Auschwitz. Official account. We commemorate victims, educate about history & preserve the authentic site.

www.auschwitz.org | lesson.auschwitz.org | podcast.auschwitz.org

TMLR Homepage: https://jmlr.org/tmlr/

TMLR Infinite Conference: https://tmlr.infinite-conf.org/

VP and Distinguished Scientist at Microsoft Research NYC. AI evaluation and measurement, responsible AI, computational social science, machine learning. She/her.

One photo a day since January 2018: https://www.instagram.com/logisticaggression/

Building a co-pilot for hardware designers at Vinci4d. Formerly SVP of Engineering at Iron Ox, WiML president, COO at Mayfield Robotics. Roboticist/Machine Learning Researcher

Assistant Professor in CS: researching ML/AI in sociotechnical systems & teaching Data Science and Dev tools with an emphasis on responsible computing

New Englander, NSBE lifetime member

profile pic: me in a purplish sweater with math vaguely on the w

I lead Cohere For AI. Formerly Research

Google Brain. ML Efficiency, LLMs,

@trustworthy_ml.

Machine Learning researcher. Former stats faculty. Works for Google Research, and on better days, herself.

Researcher in machine learning and computer vision for science. Senior Group Leader at HHMI Janelia Research Campus. Supporter of DEIB in science and tech. CV: https://bit.ly/BransonCV

Personal Account

Founder: The Distributed AI Research Institute @dairinstitute.bsky.social.

Author: The View from Somewhere, a memoir & manifesto arguing for a technological future that serves our communities (to be published by One Signal / Atria

PhD student in Machine Learning @ MPI-IS Tübingen, Tübingen AI Center, IMPRS-IS

PhD Candidate, University of Washington

https://salonidash.com/

Harvard CS PhD Candidate. Interested in algorithmic decision-making, data-centric ML, and applications to public sector operations

Research on AI and biodiversity 🌍

Asst Prof at MIT CSAIL,

AI for Conservation slack and CV4Ecology founder

#QueerInAI 🏳️‍🌈

ML for remote sensing @Mila_Quebec * UdeM x McGill CS alum

Interests: Responsible ML for climate & societal impacts, STS, FATE, AI Ethics & Safety

prev: SSofCS lab

📍🇨🇦 Montreal (allegedly)

TW: @XMichellelinX

https://mchll-ln.github.io/

She/Her • AI Engineer @ IBM Research • MSc in AI @ UFMG 🇧🇷 | Interested in AI Ethics, Safety & Tech Governance. AI/ML engineering & robustness • @blackinai 👩🏽‍💻 (I’m trying to leave twitter 🙂‍↕️)

https://mirianfsilva.github.io

information science professor (tech ethics + internet stuff)

kind of a content creator (elsewhere also @professorcasey)

though not influencing anyone to do anything except maybe learn things

she/her

more: casey.prof