Postdoc position in Paris: come help develop a new generation of human brain-computer interfaces ⚡🧠💻

Interested? Contact me if you have experience with machine learning (e.g. simulation-based inference, RL, generative/diffusion models) or dynamical systems.

See below for + details and retweet 🙏

27.01.2026 22:12 —

👍 75

🔁 56

💬 3

📌 5

Diagram of a recurrent neural network: input goes into the network, output is compared to a target to produce an error, and dotted feedback arrows show updates to neural activity and to synaptic weights.

1/7 How should feedback signals influence a network during learning? Should they first adjust synaptic weights, which then indirectly change neural activity (as in backprop.)? Or should they first adjust neural activity to guide synaptic updates (e.g., target prop.)? openreview.net/forum?id=xVI...

08.01.2026 22:10 —

👍 40

🔁 5

💬 1

📌 0

Thanks for the insightful response! I see how multiple populations overcome the limit of shared gain - yet in data there can be large overlaps in units tracking different variables simultaneously. And yes, maybe one shouldn’t think of separate tasks (e.g., two rings), but rather one task (one torus)

20.12.2025 13:36 —

👍 1

🔁 0

💬 0

📌 0

In some way this could be seen as doing multiple tasks at the same time (e.g., 3 ring attractors for storing three angular variables). Do you have any idea or speculation on how to extend your framework to this setting? Thanks!

2/2

19.12.2025 15:23 —

👍 1

🔁 0

💬 1

📌 0

Hi, great work and nicely written paper! It seems here there is at most one task active at a given time. It has been shown that macaques can memorise multiple stimuli at the same time in (not perfectly) orthogonal subspaces using overlapping populations of units pubmed.ncbi.nlm.nih.gov/39178858/ 1/2

19.12.2025 15:22 —

👍 3

🔁 0

💬 1

📌 0

Our paper on data-constrained RNNs that generalize to optogenetic perturbations is now citable on eLife:

doi.org/10.7554/eLif...

18.12.2025 23:07 —

👍 42

🔁 18

💬 1

📌 2

Finally got the job ad—looking for 2 PhD students to start spring next year:

www.gao-unit.com/join-us/

If comp neuro, ML, and AI4Neuro is your thing, or you just nerd out over brain recordings, apply!

I'm at neurips. DM me here / on the conference app or email if you want to meet 🏖️🌮

03.12.2025 09:36 —

👍 81

🔁 51

💬 1

📌 5

Jobs - mackelab

The MackeLab is a research group at the Excellence Cluster Machine Learning at Tübingen University!

We are looking for a Research Engineer (E13 TV-L) to work at the intersection of #ML and #compneuro! 🤖🧠

Help us build large-scale bio-inspired neural networks, write high-quality research code, and contribute to open-source tools like jaxley, sbi, and flyvis 🪰.

More info: www.mackelab.org/jobs/

28.11.2025 13:54 —

👍 13

🔁 4

💬 0

📌 2

MackeLab has grown! 🎉 Warm welcome to 5(!) brilliant and fun new PhD students / research scientists who joined our lab in the past year. We can’t wait to do great science, and we are already having a good time together! 🤖🧠 Meet them in the thread 👇 1/7

28.11.2025 10:26 —

👍 19

🔁 4

💬 1

📌 1

Really cool work! 🔥

02.10.2025 13:04 —

👍 2

🔁 0

💬 1

📌 0

The Macke lab is well-represented at the @bernsteinneuro.bsky.social conference in Frankfurt this year! We have lots of exciting new work to present with 7 posters (details👇) 1/9

30.09.2025 14:06 —

👍 30

🔁 9

💬 1

📌 0

ALT: a man wearing a white shirt and tie smiles in front of a window

I've been waiting some years to make this joke and now it’s real:

I conned somebody into giving me a faculty job!

I’m starting as a W1 Tenure-Track Professor at Goethe University Frankfurt in a week (lol), in the Faculty of CS and Math

and I'm recruiting PhD students 🤗

23.09.2025 12:58 —

👍 188

🔁 31

💬 30

📌 3

Our #AI #DynamicalSystems #FoundationModel DynaMix was accepted to #NeurIPS2025 with outstanding reviews (6555) – first model which can *zero-shot*, w/o any fine-tuning, forecast the *long-term statistics* of time series provided a context. Test it on #HuggingFace:

huggingface.co/spaces/Durst...

21.09.2025 09:40 —

👍 12

🔁 4

💬 1

📌 1

From hackathon to release: sbi v0.25 is here! 🎉

What happens when dozens of SBI researchers and practitioners collaborate for a week? New inference methods, new documentation, lots of new embedding networks, a bridge to pyro and a bridge between flow matching and score-based methods 🤯

1/7 🧵

09.09.2025 15:00 —

👍 29

🔁 16

💬 1

📌 1

Got prov. approval for 2 major grants in Neuro-AI & Dynamical Systems Reconstruction, on learning & inference in non-stationary environments, out-of-domain generalization, and DS foundation models. To all AI/math/DS enthusiasts: Expect job announcements (PhD/PostDoc) soon! Feel free to get in touch.

13.07.2025 06:23 —

👍 34

🔁 8

💬 0

📌 0

Vacatures bij de RUG

Jelmer Borst and I are looking for a PhD candidate to build an EEG-based model of human working memory! This is a really cool project that I've wanted to kick off for a while, and I can't wait to see it happen. Please share and I'm happy to answer any Qs about the project!

www.rug.nl/about-ug/wor...

03.07.2025 13:29 —

👍 15

🔁 21

💬 1

📌 1

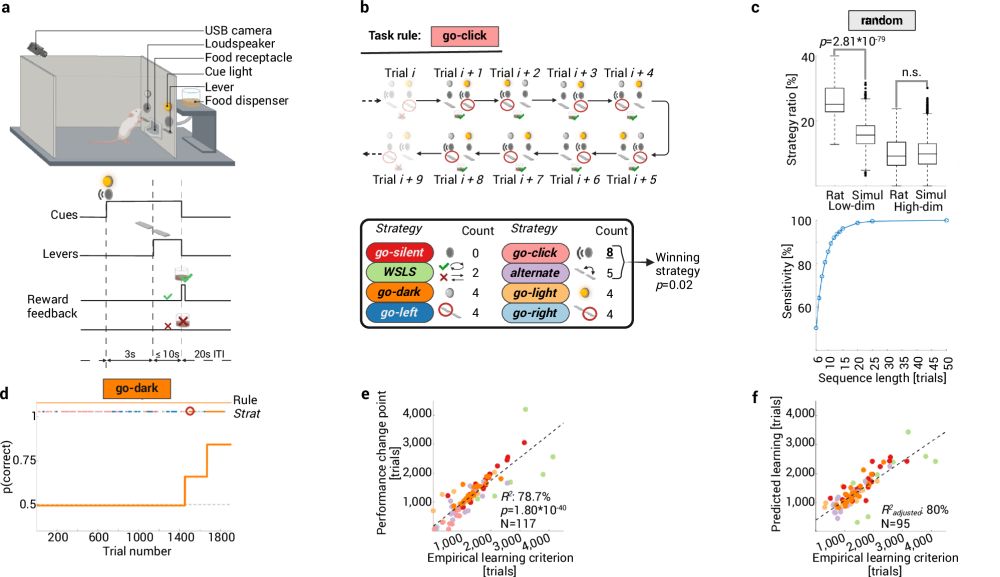

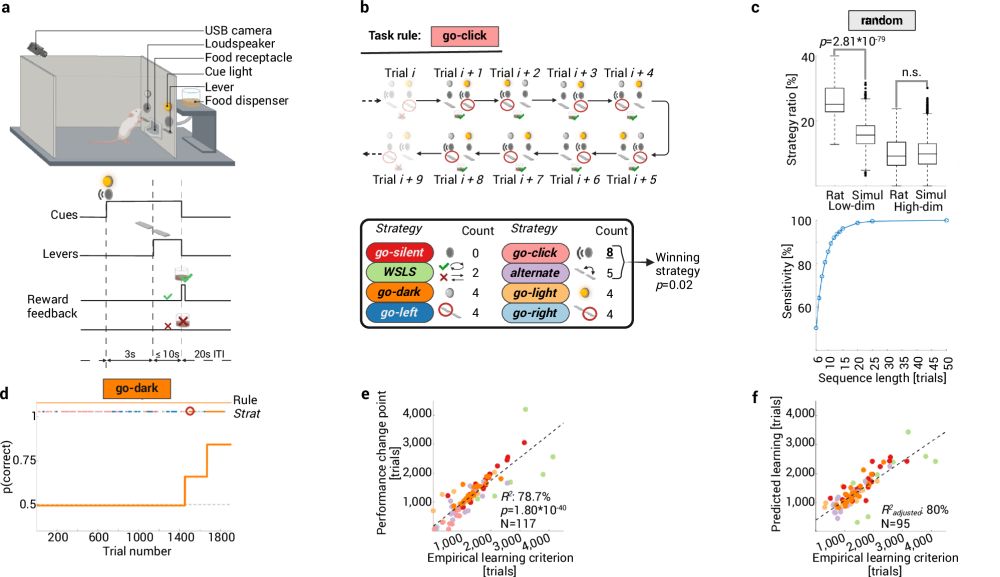

Abstract rule learning promotes cognitive flexibility in complex environments across species

Nature Communications - Whether neurocomputational mechanisms that speed up human learning in changing environments also exist in other species remains unclear. Here, the authors show that both...

How do animals learn new rules? By systematically testing diff. behavioral strategies, guided by selective attn. to rule-relevant cues: rdcu.be/etlRV

Akin to in-context learning in AI, strategy selection depends on the animals' "training set" (prior experience), with similar repr. in rats & humans.

26.06.2025 15:30 —

👍 8

🔁 2

💬 1

📌 0

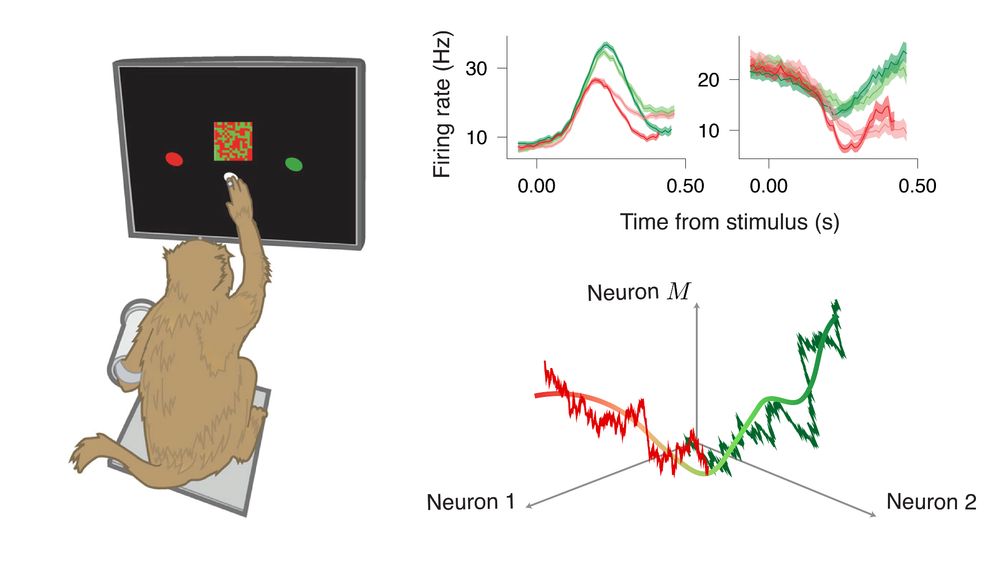

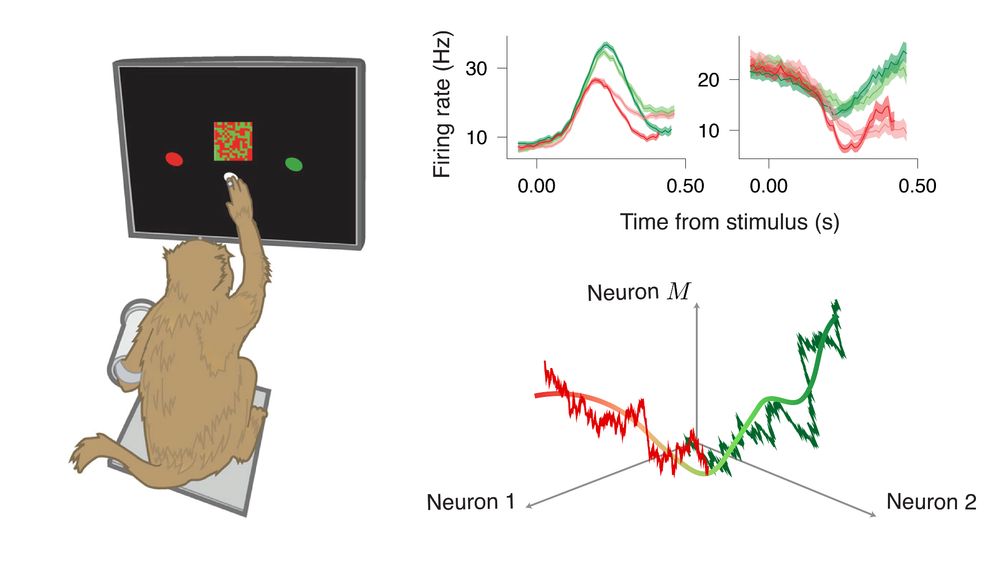

Out today in @nature.com: we show that individual neurons have diverse tuning to a decision variable computed by the entire population, revealing a unifying geometric principle for the encoding of sensory and dynamic cognitive variables.

www.nature.com/articles/s41...

25.06.2025 22:38 —

👍 206

🔁 52

💬 5

📌 4

Our new preprint 👀

09.06.2025 19:32 —

👍 31

🔁 6

💬 0

📌 0

A wide shot of approximately 30 individuals standing in a line, posing for a group photograph outdoors. The background shows a clear blue sky, trees, and a distant cityscape or hills.

Great news! Our March SBI hackathon in Tübingen was a huge success, with 40+ participants (30 onsite!). Expect significant updates soon: awesome new features & revamped documentation you'll love! Huge thanks to our amazing SBI community! Release details coming soon. 🥁 🎉

12.05.2025 14:29 —

👍 26

🔁 7

💬 0

📌 1

Please RT🙏

Reach out if you want to help understand cognition by modelling, analyzing and/or collecting large-scale intracortical data from 👩🐒🐁

We're a friendly, diverse group (n>25) w/ this terrace 😎 in the center of Paris! See👇 for + info about the lab

We have funding to support your application!

10.05.2025 14:23 —

👍 39

🔁 21

💬 1

📌 0

🎓Hiring now! 🧠 Join us at the exciting intersection of ML and Neuroscience! #AI4science

We’re looking for PhDs, Postdocs and Scientific Programmers who want to use deep learning to build, optimize and study mechanistic models of neural computations. Full details: www.mackelab.org/jobs/ 1/5

30.04.2025 13:43 —

👍 23

🔁 12

💬 1

📌 0

Re-posting is appreciated: We have a fully funded PhD position in CMC lab @cmc-lab.bsky.social (at @tudresden_de). You can use forms.gle/qiAv5NZ871kv... to send your application and find more information. Deadline is April 30. Find more about CMC lab: cmclab.org and email me if you have questions.

20.02.2025 14:50 —

👍 77

🔁 89

💬 3

📌 8

Compositional simulation-based inference for time series

Amortized simulation-based inference (SBI) methods train neural networks on simulated data to perform Bayesian inference. While this strategy avoids the need for tractable likelihoods, it often requir...

Excited to present our work on compositional SBI for time series at #ICLR2025 tomorrow!

If you're interested in simulation-based inference for time series, come chat with Manuel Gloeckler or Shoji Toyota at Poster #420, Saturday 10:00–12:00 in Hall 3.

📰: arxiv.org/abs/2411.02728

25.04.2025 08:53 —

👍 25

🔁 4

💬 2

📌 1

ICLR 2025 Oral: Comparing noisy neural population dynamics using optimal transport distances

Excited to announce that our paper on "Comparing noisy neural population dynamics using optimal transport distances" has been selected for an oral presentation at #ICLR2025 (top 1.8% of papers). Check the thread for paper details (0/n).

Presentation info: iclr.cc/virtual/2025....

22.04.2025 18:06 —

👍 23

🔁 7

💬 1

📌 0

Happening tomorrow morning :).

28.03.2025 20:30 —

👍 8

🔁 0

💬 0

📌 0