On Wednesday at 15:50 (Room I; 15:30 session):

OpenAgentSafety: A Comprehensive Framework for Evaluating Real-World AI Agent Safety arxiv.org/abs/2507.06134

(followed by a poster session at 17:20)

Presenting two papers!

On Tuesday at 12:40pm (Room I; 12pm session):

1-2-3 Check: Enhancing Contextual Privacy in LLM via Multi-Agent Reasoning arxiv.org/abs/2508.07667

(followed by a poster session at 1pm)

You can read more in the full paper:

www.researchgate.net/publication/...

There is also an interactive website that contains logs of the authentic interactions:

agentsofchaos.baulab.info

In this amazing multidisciplinary collaboration, we report our early experience with the @openclaw-x.bsky.social ->

23.02.2026 23:32 — 👍 40 🔁 21 💬 1 📌 9

On my way to Paris for the IASEAI conference www.iaseai.org/our-programs...! Who will be there?

22.02.2026 16:18 — 👍 1 🔁 0 💬 0 📌 1

I believe so!

04.02.2026 00:48 — 👍 0 🔁 0 💬 0 📌 0

Oh, thanks for catching that, I fixed it!

03.02.2026 14:12 — 👍 1 🔁 0 💬 0 📌 0

Hi Lingze! The form itself has a place where you can indicate your own interests and then indicate how they align with existing topics. Since the full list of mentors isn't finalized, it's best not to contact faculty directly; they will reach out to you!

02.02.2026 18:55 — 👍 1 🔁 0 💬 1 📌 0

🚀 Apply to CMU LTI’s Summer 2026 “Language Technology for All” internship! 🎓 Open to pre‑doctoral students new to language tech (non‑CS backgrounds welcome). 🔬 12–14 weeks in‑person in Pittsburgh — travel + stipend paid. 💸 Deadline: Feb 20, 11:59pm ET. Apply → forms.gle/cUu8g6wb27Hs...

02.02.2026 15:41 — 👍 14 🔁 12 💬 2 📌 0

I'm excited to announce the Call for Papers for the Social Context (SoCon) and Integrating NLP and Psychology to Study Social Interactions (NLPSI) workshop at LREC '26 in Palma de Mallorca, Spain!

🗓Deadline: February 16, 2026

🌐Website: socon-nlpsi.github.io

🗓Workshop: May 12, 2026

I'm very excited about our new work which aims to model causes and effects on stories online! Narratives and stories are everywhere, so it's helpful to be able to understand how people use them in nuanced ways.

22.12.2025 09:20 — 👍 14 🔁 3 💬 0 📌 0

How and when should LLM guardrails be deployed to balance safety and user experience?

Our #EMNLP2025 paper reveals that crafting thoughtful refusals rather than detecting intent is the key to human-centered AI safety.

📄 arxiv.org/abs/2506.00195

🧵[1/9]

📣📣 Announcing the first PersonaLLM Workshop on LLM Persona Modeling.

If you work on persona-driven LLMs, social cognition, HCI, psychology, cognitive science, cultural modeling, or evaluation, do not miss the chance to submit.

Submit here: openreview.net/group?id=Neu...

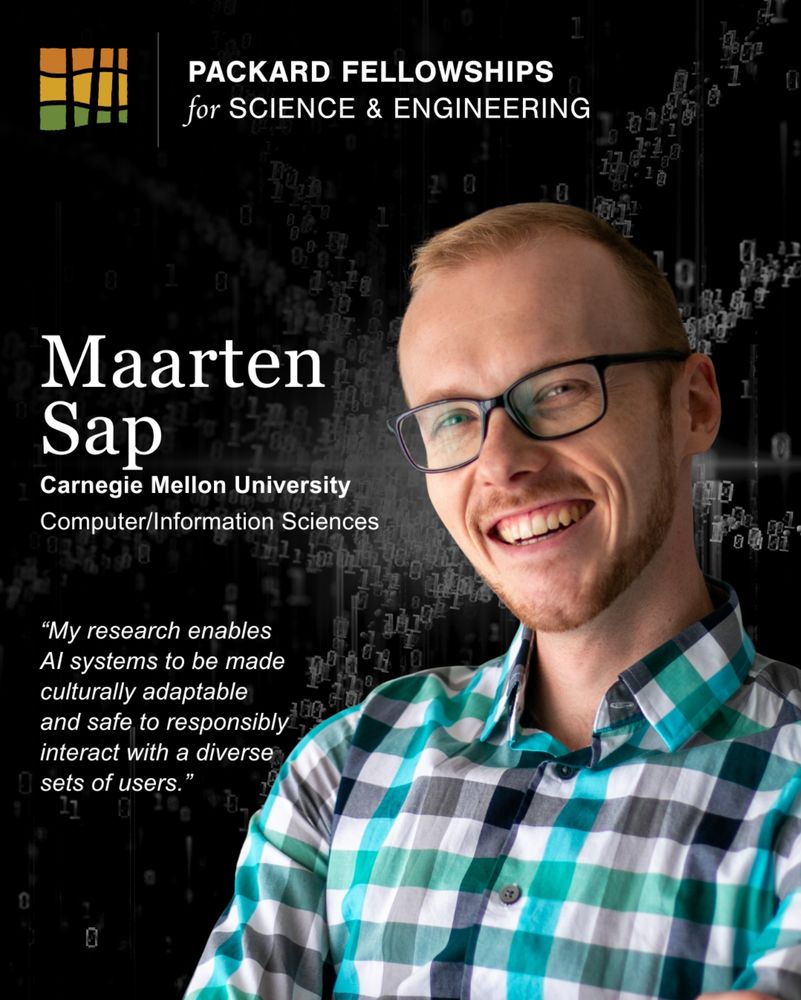

I’m ✨ super excited and grateful ✨to announce that I'm part of the 2025 class of #PackardFellows (www.packard.org/2025fellows). The @packardfdn.bsky.social and this fellowship will allow me to explore exciting research directions towards culturally responsible and safe AI 🌍🌈

15.10.2025 13:05 — 👍 11 🔁 1 💬 1 📌 2

🚨New paper: Reward Models (RMs) are used to align LLMs, but can they be steered toward user-specific value/style preferences?

With EVALUESTEER, we find that even the best RMs we tested exhibit their own value/style biases and fail to align with a user more than 25% of the time. 🧵

Oh yes, we have a paper under submission! I'll ask Mikayla to email you :)

14.10.2025 13:35 — 👍 1 🔁 0 💬 1 📌 0

Saplings take #COLM2025! Featuring a group lunch, amazing posters, and a panel with Yoshua Bengio!

14.10.2025 12:19 — 👍 16 🔁 1 💬 1 📌 0

We are launching our Graduate School Application Financial Aid Program (www.queerinai.com/grad-app-aid) for 2025-2026. We’ll give up to $750 per person to LGBTQIA+ STEM scholars applying to graduate programs. Apply at openreview.net/group?id=Que.... 1/5

09.10.2025 00:37 — 👍 7 🔁 9 💬 1 📌 0

I'm also giving a talk at the #COLM2025 Social Simulation workshop (sites.google.com/view/social-...) on Unlocking Social Intelligence in AI, at 2:30pm on Oct 10th!

06.10.2025 14:53 — 👍 6 🔁 0 💬 0 📌 0

Day 3 (Thu Oct 9), 11:00am–1:00pm, Poster Session 5

Poster #13: PolyGuard: A Multilingual Safety Moderation Tool for 17 Languages by @kpriyanshu256.bsky.social and @devanshrjain.bsky.social

Poster #74: Fluid Language Model Benchmarking; led by @valentinhofmann.bsky.social

Day 2 (Wed Oct 8), 4:30–6:30pm, Poster Session 4

Poster #50: The Delta Learning Hypothesis: Preference Tuning on Weak Data can Yield Strong Gains; led by Scott Geng

Day 1 (Tue Oct 7), 4:30–6:30pm, Poster Session 2

Poster #77: ALFA: Aligning LLMs to Ask Good Questions: A Case Study in Clinical Reasoning; led by @stellali.bsky.social & @jiminmun.bsky.social

Day 1 (Tue Oct 7), 4:30–6:30pm, Poster Session 2

Poster #42: HAICOSYSTEM: An Ecosystem for Sandboxing Safety Risks in Human-AI Interactions; led by @nlpxuhui.bsky.social

Headed to #COLM2025 today! Here are five of our papers that were accepted, and when & where to catch them 👇

06.10.2025 14:51 — 👍 6 🔁 0 💬 1 📌 1

📢 New #COLM2025 paper 📢

Standard benchmarks give every LLM the same questions. This is like testing 5th graders and college seniors with *one* exam! 🥴

Meet Fluid Benchmarking, a capability-adaptive eval method delivering lower variance, higher validity, and reduced cost.

🧵

That's a lot of people! Fall Sapling lab outing, welcoming our new postdoc Vasudha, and visitors Tze Hong and Chani! (just missing Jocelyn)

26.08.2025 17:53 — 👍 12 🔁 0 💬 0 📌 0

I'm excited because I'm teaching/coordinating a new, unique class where we teach new PhD students all the "soft" skills of research, incl. ideation, reviewing, presenting, interviewing, advising, etc.

Each lecture is taught by a different LTI prof! It takes a village! maartensap.com/11705/Fall20...

I've always seen people on laptops during talks, but it's possible it has increased.

I realized during lockdown that I drift to emails during Zoom talks, so I started knitting to pay better attention to those talks, and now I knit during IRL talks too (though sometimes I still peck at my laptop 😅)

![Snippet of the Forbes article, with highlighted text.

A recent study by Allen Institute for AI (Ai2), titled “Let Them Down Easy! Contextual Effects of LLM Guardrails on User Perceptions and Preferences,” found that refusal style mattered more than user intent. The researchers tested 3,840 AI query-response pairs across 480 participants, comparing direct refusals, explanations, redirection, partial compliance and full compliance.

Partial compliance, sharing general but not specific information, reduced dissatisfaction by over 50% compared to outright denial, making it the most effective safeguard.

“We found that [start of highlight] direct refusals can cause users to have negative perceptions of the LLM: users consider these direct refusals significantly less helpful, more frustrating and make them significantly less likely to interact with the system in the future,” [end of highlight] Maarten Sap, AI safety lead at Ai2 and assistant professor at Carnegie Mellon University, told me. “I do not believe that model welfare is a well-founded direction or area to care about.”](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:jqtp2hdnr5g7giblg57wbd7e/bafkreieqlgyx5cr22fdgz6qq5w55yw4q74rqhglnf2qmzjaieigsbw7hka@jpeg)

We have been studying the question of how models should refuse in our recent paper accepted to EMNLP Findings (arxiv.org/abs/2506.00195), led by my wonderful PhD student @mingqian-zheng.bsky.social