A screenshot of a YouTube video titled “Intro to Transformers.js” from The Coding Train channel.

As someone who learnt so much by watching @shiffman.lol's coding videos in high school, I never imagined that one day my own library would feature on his channel! 🥹

If you're interested in learning more about 🤗 Transformers.js, I highly recommend checking it out!

👉 www.youtube.com/watch?v=KR61...

26.10.2025 19:30 —

👍 12

🔁 2

💬 0

📌 0

LFM2 WebGPU – In-browser tool calling - a Hugging Face Space by LiquidAI

In-browser tool calling, powered by Transformers.js

As always, the demo is open source (which you can find under the "Files" tab), so I'm excited to see how the community builds upon this! 🚀

🔗 Link to demo: huggingface.co/spaces/Liqui...

06.08.2025 17:56 —

👍 4

🔁 1

💬 0

📌 0

The next generation of AI-powered websites is going to be WILD! 🤯

In-browser tool calling & MCP are finally here, allowing LLMs to interact with websites programmatically.

To show what's possible, I built a demo using Liquid AI's new LFM2 model, powered by 🤗 Transformers.js.
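As a rough illustration of what tool calling involves, here is a minimal sketch of a dispatcher that takes a model-emitted call and runs the matching function. The tool names, the page state object, and the JSON call format are all assumptions made up for this example; they are not the format LFM2 or the demo actually uses.

```javascript
// Hypothetical page state that tools are allowed to change.
const pageState = { theme: "light", cart: [] };

// Hypothetical tool registry (a Map avoids matching inherited object keys).
const tools = new Map([
  ["set_theme", ({ theme }) => { pageState.theme = theme; return pageState.theme; }],
  ["add_to_cart", ({ item }) => { pageState.cart.push(item); return pageState.cart.length; }],
]);

// Parse a tool call (assumed here to be a JSON string of the form
// {"name": ..., "arguments": {...}}) and run the matching tool.
function dispatchToolCall(rawCall, registry = tools) {
  let call;
  try {
    call = JSON.parse(rawCall);
  } catch {
    return { ok: false, error: "Tool call is not valid JSON" };
  }
  const tool = registry.get(call?.name);
  if (typeof tool !== "function") {
    return { ok: false, error: `Unknown tool: ${call?.name}` };
  }
  return { ok: true, result: tool(call.arguments ?? {}) };
}

console.log(dispatchToolCall('{"name":"set_theme","arguments":{"theme":"dark"}}'));
```

Returning an error object instead of throwing lets the result be fed back to the model as text, so it can retry with a corrected call.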

06.08.2025 17:56 —

👍 7

🔁 2

💬 2

📌 0

Voxtral WebGPU - a Hugging Face Space by webml-community

State-of-the-art audio transcription in your browser

That's right, we're running Mistral's new Voxtral-Mini-3B model 100% locally in-browser on WebGPU, powered by Transformers.js and ONNX Runtime Web! 🔥

Try it out yourself! 👇

huggingface.co/spaces/webml...

24.07.2025 15:43 —

👍 2

🔁 0

💬 0

📌 0

Introducing Voxtral WebGPU: State-of-the-art audio transcription directly in your browser! 🤯

🗣️ Transcribe videos, meeting notes, songs and more

🔐 Runs on-device, meaning no data is sent to a server

🌎 Multilingual (8 languages)

🤗 Completely free (forever) & open source

24.07.2025 15:43 —

👍 4

🔁 3

💬 1

📌 0

A community member trained a tiny Llama model (23M parameters) on 3 million high-quality @lichess.org games, then deployed it to run entirely in-browser with 🤗 Transformers.js! Super cool! 🔥

It has an estimated Elo of ~1400... can you beat it? 👀

(runs on both mobile and desktop)

22.07.2025 19:00 —

👍 10

🔁 3

💬 1

📌 4

Kokoro Text-to-Speech (WebGPU) - a Hugging Face Space by webml-community

High-quality speech synthesis powered by Kokoro TTS

The most difficult part was getting the model running in the first place, but the next steps are simple:

✂️ Implement sentence splitting, enabling streamed responses

🌍 Multilingual support (only phonemization left)
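The sentence-splitting step could be sketched roughly as below. This is a naive illustration, not the implementation that ended up in the demo: it splits on `.`, `!` or `?` followed by whitespace, so abbreviations like "e.g." will be cut in the wrong place. The function names are invented for this example.

```javascript
// Split text into sentences at whitespace that follows ., ! or ?.
function splitSentences(text) {
  return text
    .split(/(?<=[.!?])\s+/)
    .map((sentence) => sentence.trim())
    .filter((sentence) => sentence.length > 0);
}

// Yield one sentence at a time, so each chunk can be synthesized and
// played while later chunks are still waiting to be processed.
function* streamSentences(text) {
  for (const sentence of splitSentences(text)) {
    yield sentence;
  }
}

for (const chunk of streamSentences("We did it! What will you build?")) {
  console.log(chunk);
}
```

Feeding the model one sentence at a time means the first audio can start playing before the full text has been synthesized.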

Who wants to help? 🤗

huggingface.co/spaces/webml...

07.02.2025 17:03 —

👍 8

🔁 0

💬 0

📌 0

We did it! Kokoro TTS (v1.0) can now run 100% locally in your browser w/ WebGPU acceleration. Real-time text-to-speech without a server. ⚡️

Generate 10 seconds of speech in ~1 second for $0.

What will you build? 🔥

07.02.2025 17:03 —

👍 21

🔁 6

💬 1

📌 1

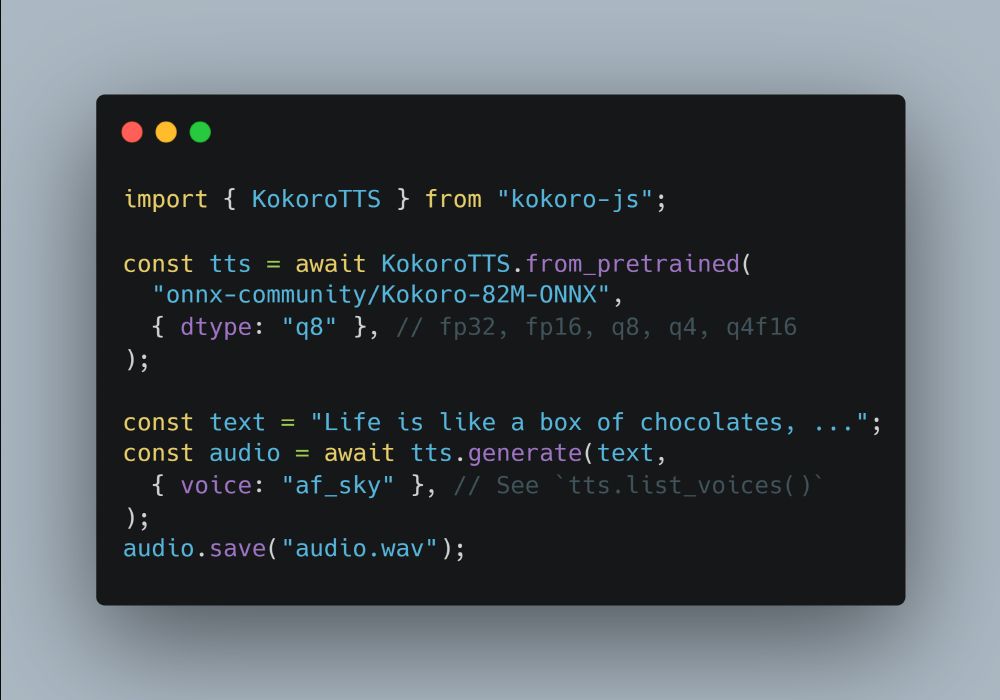

The model is also extremely resilient to quantization. The smallest variant is only 86 MB in size (down from the original 326 MB), with no noticeable difference in audio quality! 🤯
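For a back-of-the-envelope sanity check on those sizes, here is a small sketch that estimates weight storage from parameter count and bits per weight. It assumes the roughly 82 million parameters quoted in the Kokoro.js announcement and that weights dominate the file size; which precision the 86 MB variant actually uses is not stated in this post.

```javascript
// Approximate weight storage in megabytes (1 MB = 1e6 bytes).
// Ignores file metadata and any layers kept at higher precision.
function estimateSizeMB(parameterCount, bitsPerWeight) {
  return (parameterCount * bitsPerWeight) / 8 / 1e6;
}

const PARAMS = 82e6; // assumption: ~82M parameters

console.log(estimateSizeMB(PARAMS, 32)); // 328 (32-bit floats)
console.log(estimateSizeMB(PARAMS, 8)); // 82 (8-bit weights)
```

The estimates of 328 MB and 82 MB land close to the 326 MB and 86 MB figures above, which is at least consistent with a move from 32-bit to roughly 8-bit weights.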

Link to models/samples: huggingface.co/onnx-communi...

16.01.2025 15:05 —

👍 4

🔁 0

💬 0

📌 0

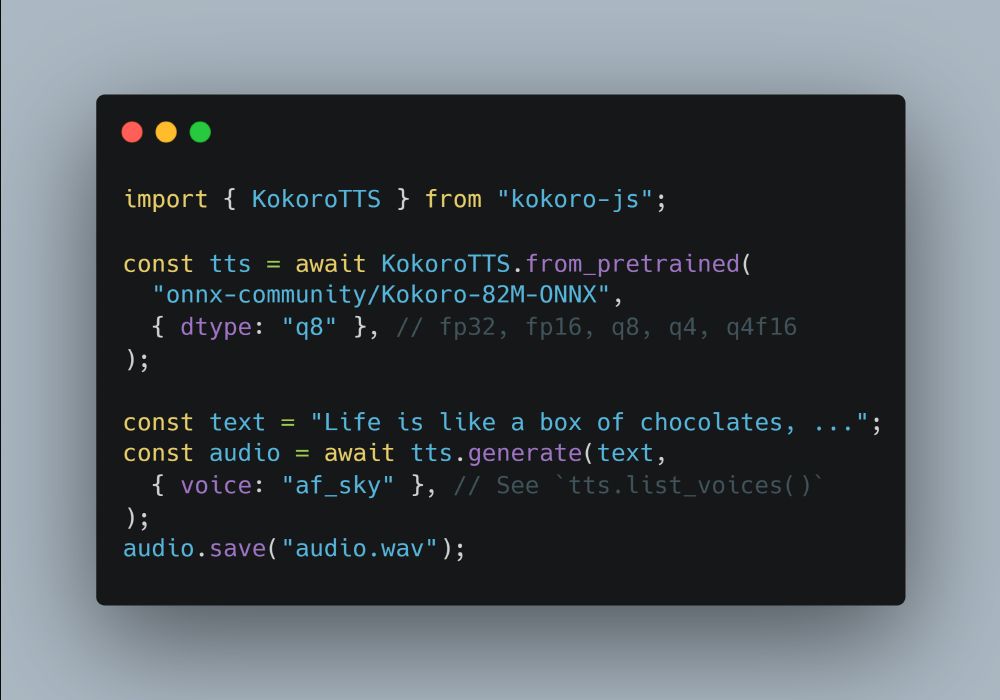

import { KokoroTTS } from "kokoro-js";

const tts = await KokoroTTS.from_pretrained(
  "onnx-community/Kokoro-82M-ONNX",
  { dtype: "q8" }, // fp32, fp16, q8, q4, q4f16
);

const text = "Life is like a box of chocolates. You never know what you're gonna get.";
const audio = await tts.generate(text,
  { voice: "af_sky" }, // See `tts.list_voices()`
);
audio.save("audio.wav");

You can get started in just a few lines of code! 🧑‍💻

Huge kudos to the Kokoro TTS community, especially taylorchu for the ONNX exports and Hexgrad for the amazing project! None of this would be possible without you all! 🤗

Try it out yourself: huggingface.co/spaces/webml...

16.01.2025 15:05 —

👍 2

🔁 0

💬 1

📌 0

Introducing Kokoro.js, a new JavaScript library for running Kokoro TTS, an 82 million parameter text-to-speech model, 100% locally in the browser w/ WASM. Powered by 🤗 Transformers.js. WebGPU support coming soon!

👉 npm i kokoro-js 👈

Link to demo (+ sample code) in 🧵

16.01.2025 15:05 —

👍 19

🔁 3

💬 1

📌 0

Llama 3.2 Reasoning WebGPU - a Hugging Face Space by webml-community

Small and powerful reasoning LLM that runs in your browser

For the AI builders out there: imagine what could be achieved with a browser extension that (1) uses a powerful reasoning LLM, (2) runs 100% locally & privately, and (3) can directly access/manipulate the DOM! 👀

💻 Source code: github.com/huggingface/...

🔗 Online demo: huggingface.co/spaces/webml...

10.01.2025 12:19 —

👍 3

🔁 1

💬 1

📌 0

Is this the future of AI browser agents? 👀 WebGPU-accelerated reasoning LLMs are now supported in Transformers.js! 🤯

Here's MiniThinky-v2 (1B) running 100% locally in the browser at ~60 tps (no API calls)! I can't wait to see what you build with it!

Demo + source code in 🧵👇

10.01.2025 12:19 —

👍 32

🔁 7

💬 1

📌 0

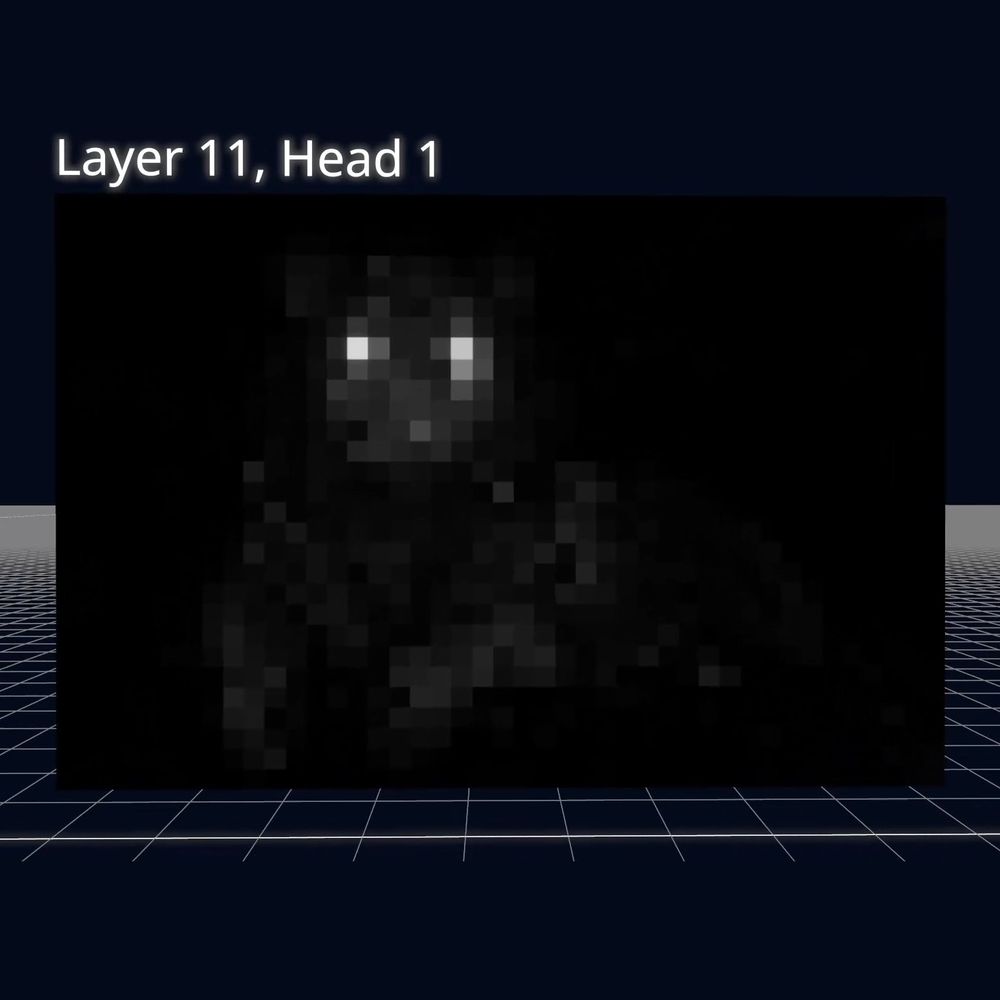

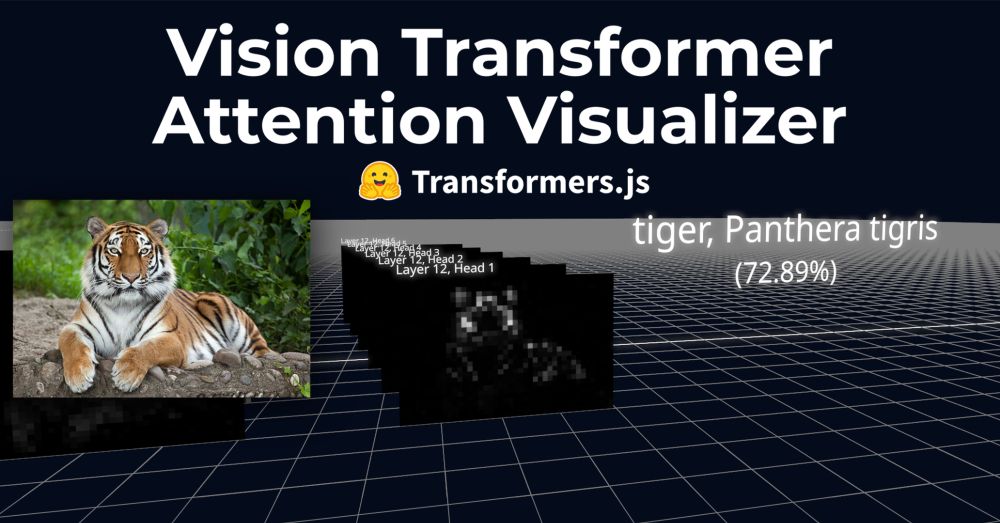

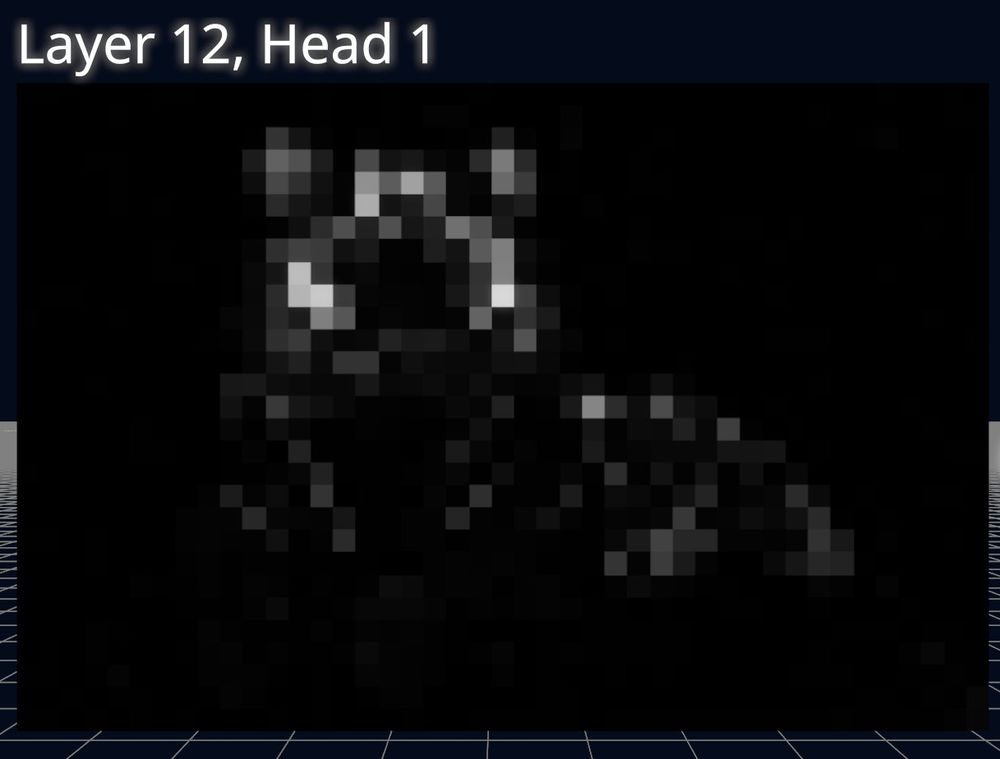

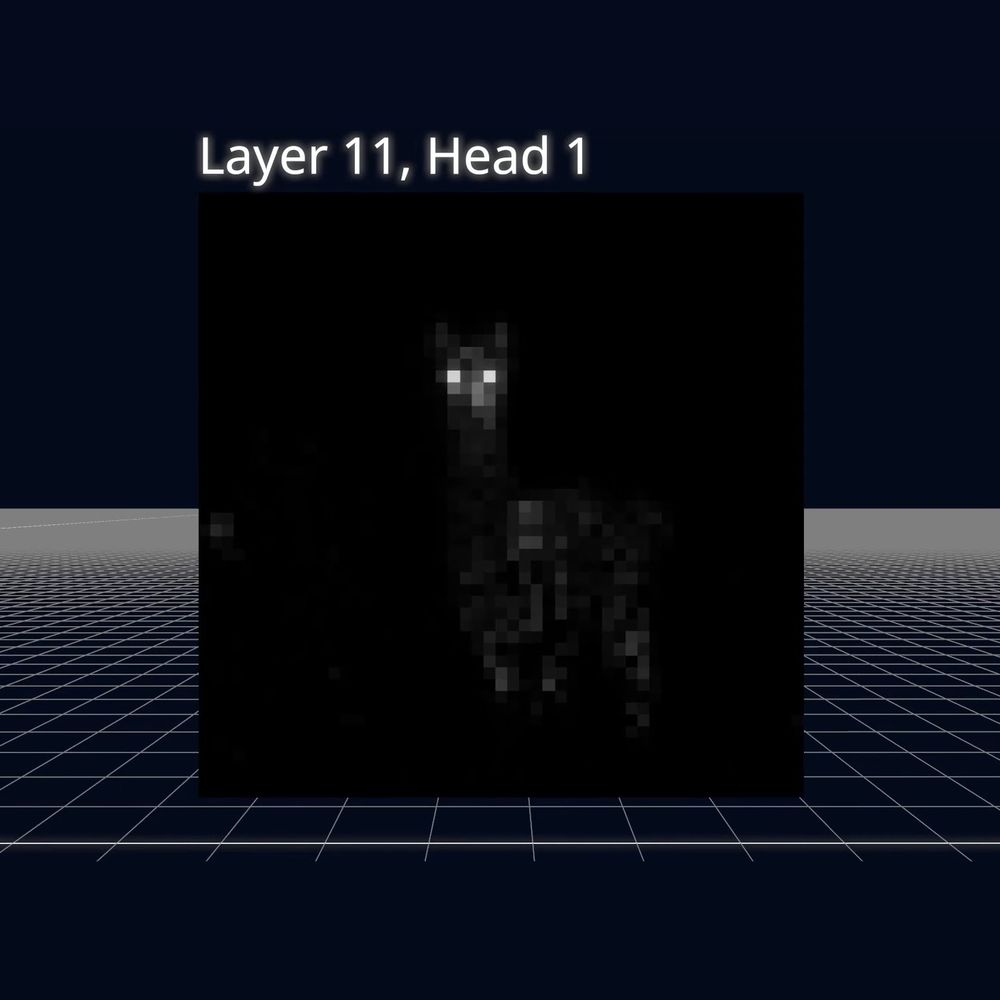

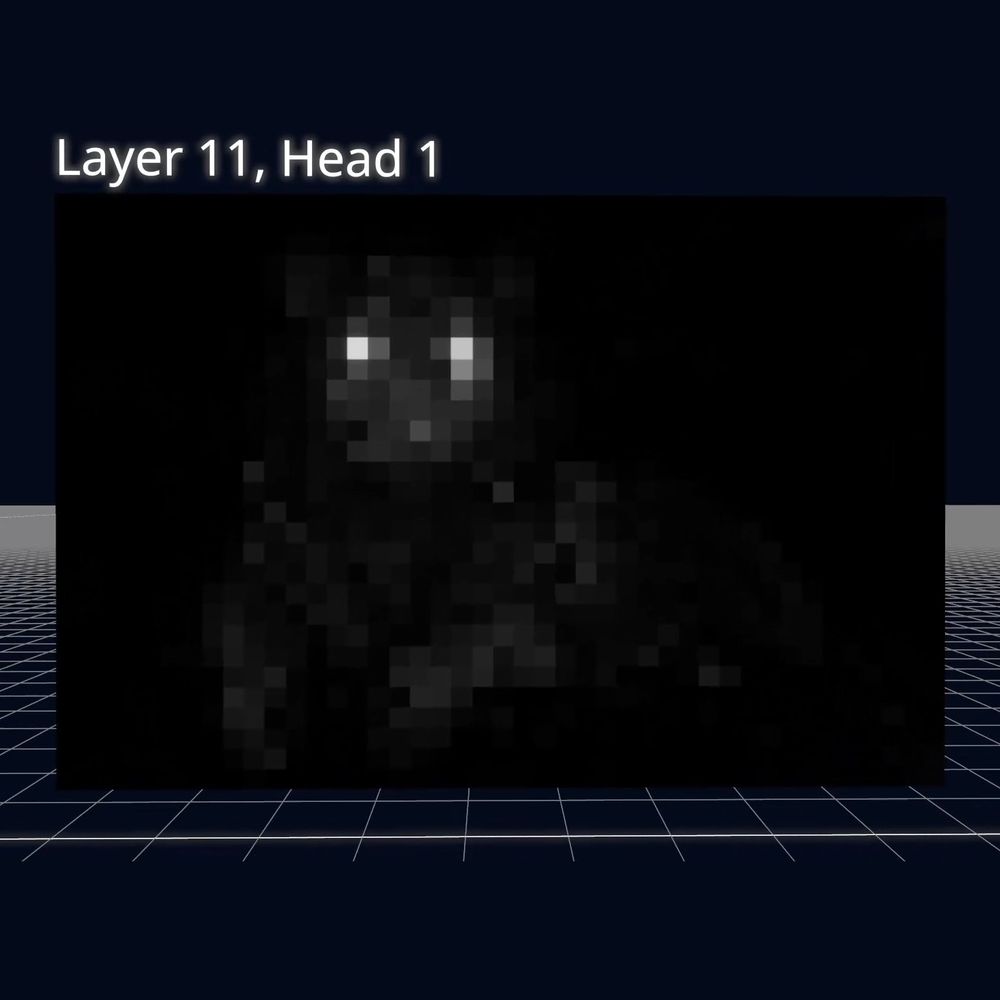

Attention Visualization - a Hugging Face Space by webml-community

Vision Transformer Attention Visualization

This project was greatly inspired by Brendan Bycroft's amazing LLM Visualization tool – check it out if you haven't already! Also, thanks to Niels Rogge for adding DINOv2 w/ Registers to transformers! 🤗

Source code: github.com/huggingface/...

Online demo: huggingface.co/spaces/webml...

01.01.2025 15:37 —

👍 4

🔁 2

💬 0

📌 0

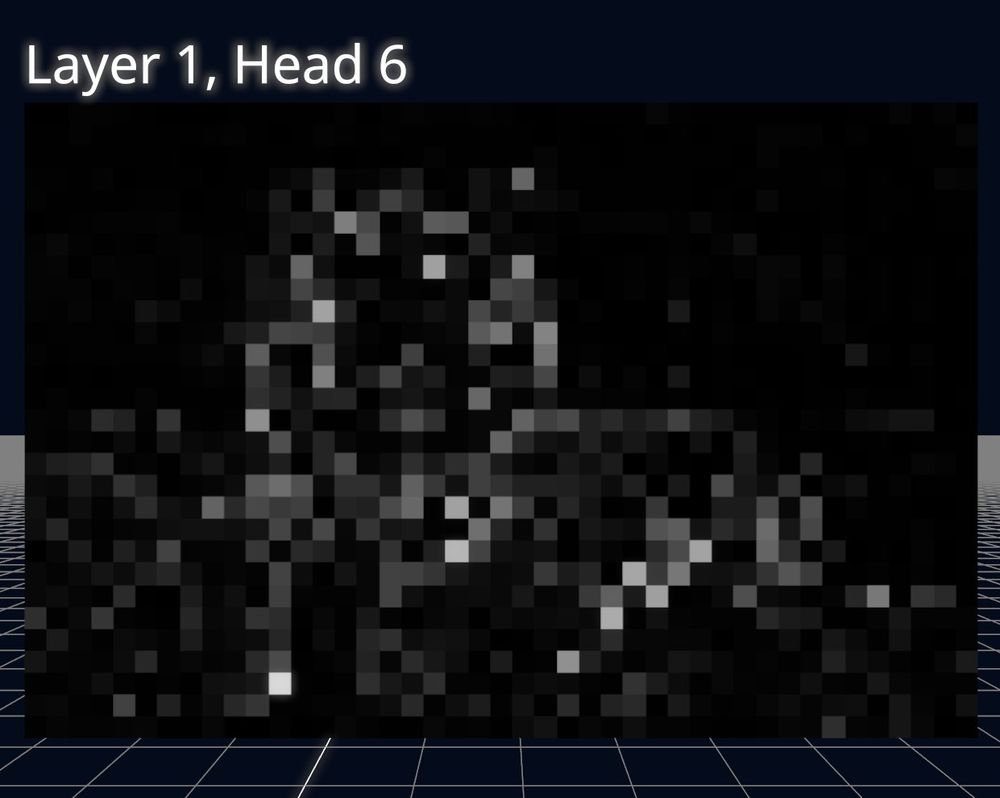

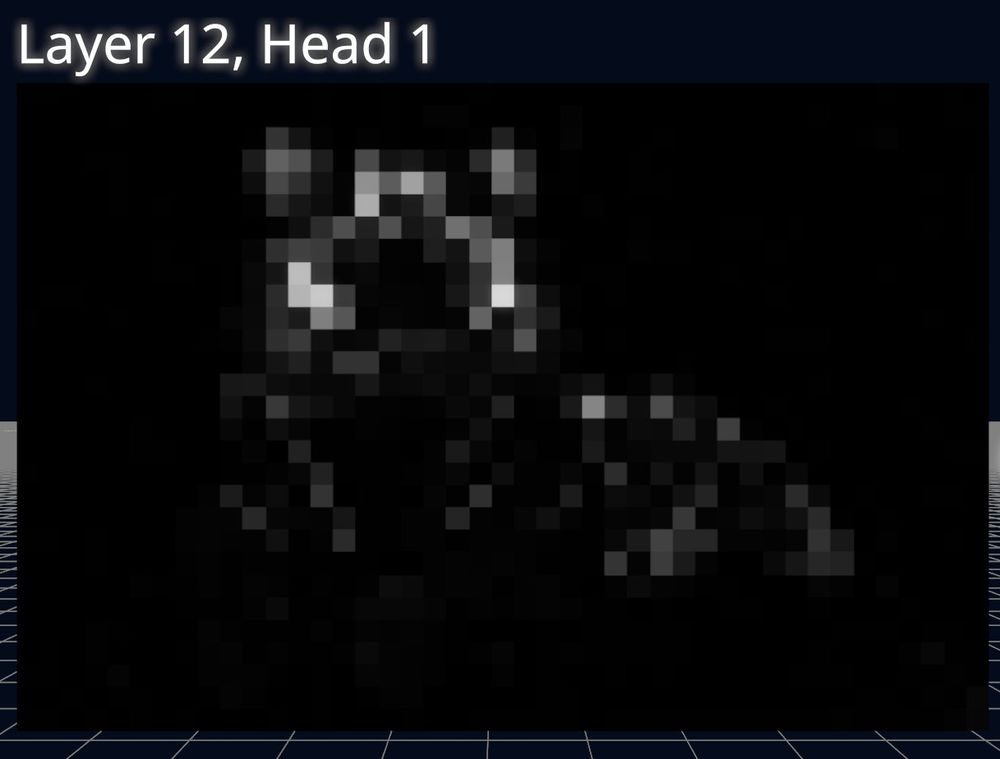

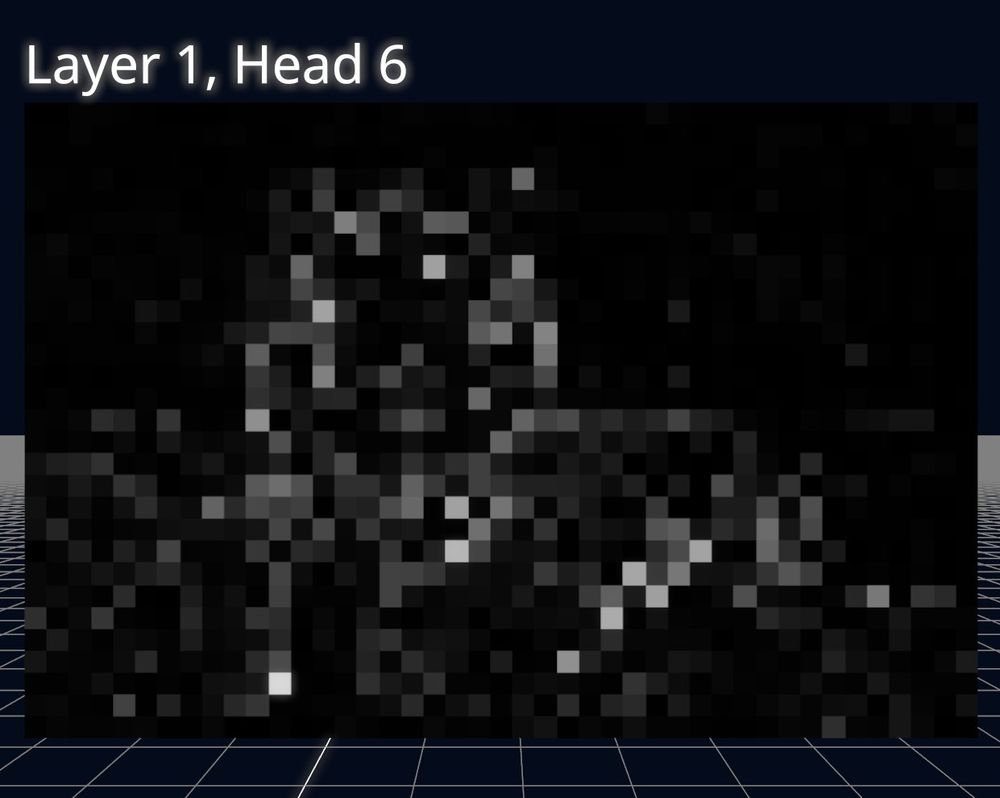

Another interesting thing to see is how the attention maps become far more refined in later layers of the transformer. For example,

First layer (1) – noisy and diffuse, capturing broad general patterns.

Last layer (12) – focused and precise, highlighting specific features.

01.01.2025 15:37 —

👍 3

🔁 0

💬 1

📌 0

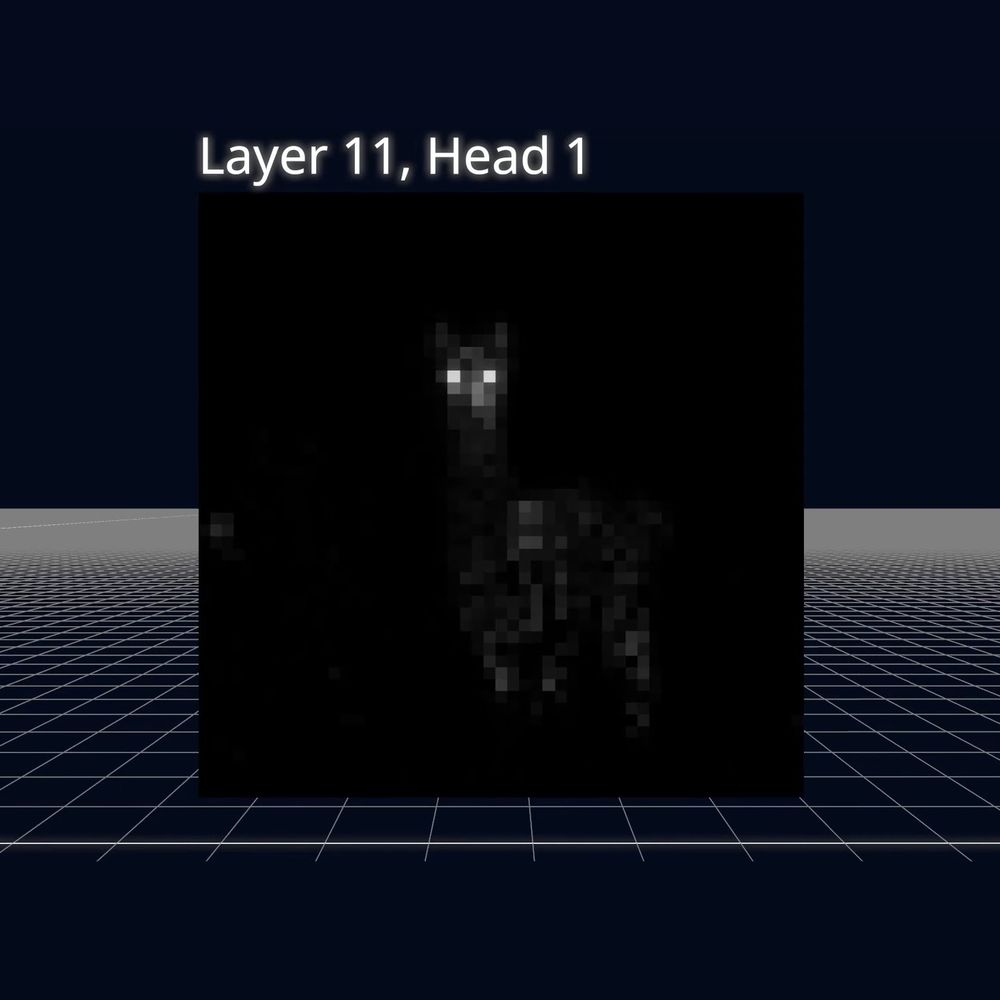

Vision Transformers work by dividing images into fixed-size patches (e.g., 14 × 14), flattening each patch into a vector and treating each as a token.
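To make the patching step concrete, here is a minimal sketch that splits a single-channel image into non-overlapping square patches and flattens each one into a vector. The function name and the toy 28 × 28 image are assumptions for illustration; a real ViT works on multi-channel images and then applies a learned linear projection plus position embeddings to each patch.

```javascript
// Split a grayscale image (row-major flat array) into non-overlapping
// patchSize x patchSize patches, each flattened into its own vector.
function patchify(pixels, width, height, patchSize) {
  if (width % patchSize !== 0 || height % patchSize !== 0) {
    throw new Error("Image dimensions must be divisible by the patch size");
  }
  const patches = [];
  for (let py = 0; py < height; py += patchSize) {
    for (let px = 0; px < width; px += patchSize) {
      const patch = new Float32Array(patchSize * patchSize);
      for (let y = 0; y < patchSize; y++) {
        for (let x = 0; x < patchSize; x++) {
          patch[y * patchSize + x] = pixels[(py + y) * width + (px + x)];
        }
      }
      patches.push(patch);
    }
  }
  return patches;
}

// A 28 x 28 image with 14 x 14 patches yields 4 tokens of length 196.
const image = Float32Array.from({ length: 28 * 28 }, (_, i) => i);
const tokens = patchify(image, 28, 28, 14);
console.log(tokens.length, tokens[0].length); // 4 196
```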

It's fascinating to see what each attention head learns to "focus on". For example, layer 11, head 1 seems to identify eyes. Spooky! 👀

01.01.2025 15:37 —

👍 2

🔁 0

💬 1

📌 0

The app loads a small DINOv2 model into the user's browser and runs it locally using Transformers.js! 🤗

This means you can analyze your own images for free: simply click the image to open the file dialog.

E.g., the model recognizes that long necks and fluffy ears are defining features of llamas! 🦙

01.01.2025 15:37 —

👍 2

🔁 1

💬 1

📌 0

First project of 2025: Vision Transformer Explorer

I built a web app to interactively explore the self-attention maps produced by ViTs. This explains what the model is focusing on when making predictions, and provides insights into its inner workings! 🤯
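For anyone curious where an attention map comes from, here is a minimal sketch of the textbook scaled dot-product attention weights for a single query over a set of keys. The toy vectors are made up for illustration; the app itself reads attention from the model rather than computing it this way.

```javascript
// Scaled dot-product attention weights for one query over a set of keys.
// Each weight says how strongly the query token attends to that key token.
function attentionWeights(query, keys) {
  const scale = Math.sqrt(query.length);
  const scores = keys.map(
    (key) => key.reduce((sum, k, i) => sum + k * query[i], 0) / scale,
  );
  // Numerically stable softmax: subtract the max before exponentiating.
  const max = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// Toy example: the first key points the same way as the query,
// so it receives the largest weight.
const weights = attentionWeights([1, 0], [[1, 0], [0, 1], [-1, 0]]);
console.log(weights);
```

Reshaping one such row of weights back onto the patch grid gives the kind of heatmap shown for each head.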

Try it out yourself! 👇

01.01.2025 15:37 —

👍 9

🔁 3

💬 1

📌 0

Moonshine Web - a Hugging Face Space by webml-community

Real-time in-browser speech recognition

Huge shout-out to the Useful Sensors team for such an amazing model and to Wael Yasmina for his 3D audio visualizer tutorial! 🤗

💻 Source code: github.com/huggingface/...

🔗 Online demo: huggingface.co/spaces/webml...

18.12.2024 16:51 —

👍 4

🔁 0

💬 1

📌 0

Introducing Moonshine Web: real-time speech recognition running 100% locally in your browser!

🚀 Faster and more accurate than Whisper

🔒 Privacy-focused (no data leaves your device)

⚡️ WebGPU accelerated (w/ WASM fallback)

🔥 Powered by ONNX Runtime Web and Transformers.js
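The WebGPU-with-WASM-fallback idea can be sketched as a simple feature check. This is a minimal illustration, not the demo's actual code: the function name is invented, and a fuller check would also await `navigator.gpu.requestAdapter()`, since the entry point can exist without a usable adapter.

```javascript
// Choose "webgpu" when the environment exposes the WebGPU entry point
// (navigator.gpu), otherwise fall back to "wasm".
function pickDevice(nav = globalThis.navigator) {
  return nav?.gpu ? "webgpu" : "wasm";
}

console.log(pickDevice());
```

The resulting string can then be passed wherever the library expects a device setting; Transformers.js v3 takes a `device` option for this.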

Demo + source code below! 👇

18.12.2024 16:51 —

👍 28

🔁 4

💬 1

📌 0

Text-to-Speech WebGPU - a Hugging Face Space by webml-community

WebGPU text-to-Speech powered by OuteTTS and Transformers.js

Huge shout-out to OuteAI for their amazing model (OuteTTS-0.2-500M) and for helping us bring it to the web! 🤗 Together, we released the outetts NPM package, which you can install with `npm i outetts`.

💻 Source code: github.com/huggingface/...

🔗 Demo: huggingface.co/spaces/webml...

08.12.2024 19:38 —

👍 7

🔁 0

💬 0

📌 0

The model is multilingual (English, Chinese, Korean & Japanese) and even supports zero-shot voice cloning! 🤯 Stay tuned for an update that will add these features to the UI!

More samples:

bsky.app/profile/reac...

08.12.2024 19:38 —

👍 6

🔁 0

💬 2

📌 0

Introducing TTS WebGPU: The first ever text-to-speech web app built with WebGPU acceleration! 🔥

High-quality and natural speech generation that runs 100% locally in your browser, powered by OuteTTS and Transformers.js. 🤗 Try it out yourself!

Demo + source code below 👇

08.12.2024 19:38 —

👍 45

🔁 12

💬 2

📌 1

Release 3.1.0 · huggingface/transformers.js

🚀 Transformers.js v3.1 — any-to-any, text-to-image, image-to-text, pose estimation, time series forecasting, and more!

Table of contents:

🤖 New models: Janus, Qwen2-VL, JinaCLIP, LLaVA-OneVision, ...

6. MGP-STR for optical character recognition (OCR)

7. PatchTST & PatchTSMixer for time series forecasting

That's right, everything running 100% locally in your browser (no data sent to a server)! 🔥 Huge for privacy!

Check out the release notes for more information. 👇

github.com/huggingface/...

28.11.2024 15:13 —

👍 2

🔁 1

💬 0

📌 0

2. Qwen2-VL from Qwen for dynamic-resolution image understanding

3. JinaCLIP from Jina AI for general-purpose multilingual multimodal embeddings

4. LLaVA-OneVision from ByteDance for Image-Text-to-Text generation

5. ViTPose for pose estimation

28.11.2024 15:13 —

👍 4

🔁 1

💬 1

📌 0

We just released Transformers.js v3.1 and you're not going to believe what's now possible in the browser w/ WebGPU! 🤯 Let's take a look:

1. Janus from DeepSeek for unified multimodal understanding and generation (Text-to-Image and Image-Text-to-Text)

Demo (+ source code): hf.co/spaces/webml...

28.11.2024 15:13 —

👍 23

🔁 5

💬 2

📌 0