Baidu just released a reasoning model 🔥 ERNIE-4.5-21B-A3B-Thinking

huggingface.co/baidu/ERNIE-...

✨ Small MoE - Apache 2.0

✨ 128K context length for deep reasoning

✨ Efficient tool usage capabilities

@adinayakup.bsky.social

AI Research @Hugging Face 🤗 Contributing to the Chinese ML community.

MiniCPM4.1 🔥 New edge-side LLM from OpenBMB, built for efficiency + reasoning

huggingface.co/openbmb/Mini...

✨ 8B - Apache 2.0

✨ Hybrid reasoning model: deep reasoning + fast inference

✨ 5x faster on edge chips, 90% smaller (BitCPM)

✨ Trained on UltraClean + UltraChat v2 data

Inverse IFEval 🔥 New benchmark from ByteDance & MAP

huggingface.co/datasets/m-a...

huggingface.co/papers/2509....

Testing LLMs on their ability to override biases & follow adversarial instructions.

✨ 8 challenge types

✨ 1,012 CN/EN Qs across 23 domains

✨ Human-in-the-loop + LLM-as-a-Judge

Added the Kwai team from Kuaishou to the heatmap

huggingface.co/spaces/zh-ai...

Klear-46B-A2.5 🔥 A sparse MoE LLM developed by the Kwai-Klear Team at Kuaishou

huggingface.co/collections/...

✨ 46B total / 2.5B active - Apache 2.0

✨ Dense-level performance at lower cost

✨ Trained on 22T tokens with progressive curriculum

✨ 64K context length
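A quick back-of-the-envelope sketch of what "46B total / 2.5B active" means in practice: only a small share of the weights is used per token. The helper name `active_fraction` is hypothetical, and the numbers are taken straight from the post above, not from any official spec.

```python
def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used per token, given sizes in billions."""
    return active_b / total_b

# Figures quoted in the post: 46B total, 2.5B active.
klear = active_fraction(46, 2.5)
print(f"Klear-46B-A2.5 activates about {klear:.1%} of its weights per token")
```

This only compares parameter counts; it says nothing about memory footprint, since all experts still need to be loaded.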

Latest update from Moonshot AI

Kimi K2 >>> Kimi K2-Instruct-0905🔥

huggingface.co/moonshotai/K...

✨ 32B activated / 1T total parameters

✨ Enhanced agentic coding intelligence

✨ Better frontend coding experience

✨ 256K context window for long horizon tasks

Hunyuan-MT-7B 🔥 open translation model released by Tencent

huggingface.co/collections/...

✨ Supports 33 languages, including 5 ethnic minority languages in China 👀

✨ Including a translation ensemble model: Chimera-7B

✨ Full pipeline: pretrain > CPT > SFT > enhancement > ensemble refinement > SOTA performance at similar scale

From food delivery to frontier AI 🚀 Meituan, the leading lifestyle platform, just dropped its first open SOTA LLM: LongCat-Flash 🔥

huggingface.co/meituan-long...

✨ 560B total / ~27B active MoE - MIT license

✨ 128K context length + advanced reasoning

✨ ScMoE design: 100+ TPS inference

✨ Stable large-scale training + strong agentic performance

USO 🎨 Unified customization model released by ByteDance research

Demo

huggingface.co/spaces/byted...

Model

huggingface.co/bytedance-re...

Paper

huggingface.co/papers/2508....

✨ Large-scale triplet dataset (content, style, stylized)

✨ Disentangled learning: style alignment + content preservation

✨ Style Reward Learning (SRL) for higher fidelity

✨ USO-Bench: 1st benchmark for style & subject jointly

✨ SOTA results on subject consistency & style similarity

Step-Audio 2 🔥 New end-to-end multimodal LLM for audio & speech, released by StepFun

huggingface.co/collections/...

✨ Direct raw audio: text & speech, no ASR+LLM+TTS pipeline

✨ High-IQ reasoning: RL + CoT for paralinguistic cues

✨ Multimodal RAG + tool calling

✨ Emotion, timbre, dialect & style control

✨ SOTA on ASR, paralinguistic, speech dialog

🇨🇳 China’s State Council just released its “AI+” Action Plan (2025)

huggingface.co/spaces/zh-ai...

✨Goal: By 2035, AI will deeply empower all sectors, reshape productivity & society

✨Focus on 6 pillars:

>Science & Tech

>Industry

>Consumption

>Public welfare

>Governance

>Global cooperation

✨Highlights:

>Models: advance theory, efficient training/inference, evaluation system

>Data: high-quality datasets, IP/copyright reform, new incentives

>Compute: boost chips & clusters, improve national network, promote cloud standardization, and ensure inclusive, efficient, green, secure supply.

>Applications: AI-as-a-service, test bases, new standards

>Open-source: support communities, encourage contributions (incl. university credits & recognition), foster new application approaches, and build globally impactful ecosystems 👀

>Talent, policy & safety frameworks: secure sustainable growth

MiniCPM-V 4.5 🚀 New MLLM for image, multi-image & video understanding, running even on your phone, released by OpenBMB

huggingface.co/openbmb/Mini...

✨ SOTA vision language capability

✨ 96× video token compression > high-FPS & long video reasoning

✨ Switchable fast vs deep thinking modes

✨ Strong OCR, document parsing, supports 30+ languages

InternVL3.5 🔥 New family of multimodal models by Shanghai AI Lab @opengvlab

huggingface.co/collections/...

✨ 1B · 2B · 4B · 8B · 14B · 38B | MoE → 20B-A4B · 30B-A3B · 241B-A28B 📄Apache 2.0

✨ +16% reasoning performance, 4.05× speedup vs InternVL3

Intern-S1-mini 🔥 lightweight open multimodal reasoning model by Shanghai AI Lab.

huggingface.co/internlm/Int...

✨ Efficient 8B LLM + 0.3B vision encoder

✨ Apache 2.0

✨ 5T multimodal pretraining, 50%+ in scientific domains

✨ Dynamic tokenizer for molecules & protein sequences

Seed-OSS 🔥 The latest open LLM from the ByteDance Seed team

huggingface.co/collections/...

✨ 36B - Base & Instruct

✨ Apache 2.0

✨ Native 512K long context

✨ Strong reasoning & agentic intelligence

✨ 2 Base versions: with & without synthetic data

✨DeepSeek V3.1 just dropped on @hf.co

huggingface.co/collections/...

Before my vacation: Qwen releasing.

When I came back: Qwen still releasing

Respect!!🫡

Qwen Image Edit 🔥 the image editing version of Qwen-Image by Alibaba Qwen

huggingface.co/Qwen/Qwen-Im...

✨ Apache 2.0

✨ Semantic + Appearance Editing: rotate, restyle, add/remove

✨ Precise Text Editing → edit CN/EN text, keep style

huggingface.co/spaces/Qwen/...

🔥 July highlights from Chinese AI community

huggingface.co/collections/...

I’ve been tracking things closely, but July’s open-source wave still managed to surprise me.

Can’t wait to see what’s coming next! 🚀

Qwen team did it again!🔥

They just released Qwen3-Coder-30B-A3B-Instruct on the hub

huggingface.co/Qwen/Qwen3-C...

✨ Apache 2.0

✨ 30B total / 3.3B active (128 experts, 8 activated per token)

✨ Native 256K context, extendable to 1M via YaRN

✨ Built for Agentic Coding
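For anyone wondering how "128 experts, 8 per token" works, here is a minimal sketch of generic top-k expert routing. It is an illustration of the general technique, not Qwen's actual router: the function name `route`, the random logits, and the choice to softmax-normalize over only the selected experts are all assumptions.

```python
import math
import random

NUM_EXPERTS = 128  # expert count quoted in the post
TOP_K = 8          # experts activated per token, as quoted in the post

def route(logits, k=TOP_K):
    """Pick the k highest-scoring experts and softmax-normalize their scores.

    Normalizing over only the selected experts is an assumption made for
    this sketch; real routers differ in how they weight experts.
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Stand-in router scores for one token (random, purely illustrative).
rng = random.Random(0)
router_logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route(router_logits)
for expert_id, weight in selected:
    print(f"expert {expert_id:3d} weight {weight:.3f}")
```

Each token runs through only the selected experts, which is why the active parameter count is a small slice of the total.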

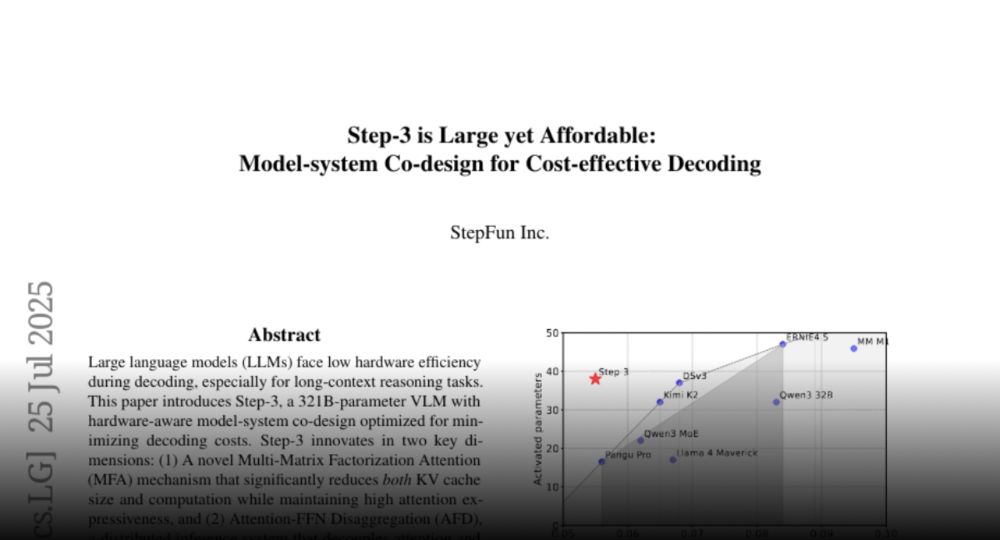

It’s here! After the WAIC announcement, StepFun has just dropped Step 3 🔥 their latest multimodal reasoning model on the hub.

Model: huggingface.co/stepfun-ai/s...

Paper: huggingface.co/papers/2507....

✨ 321B total / 32B active - Apache 2.0

✨ MFA + AFD: cutting decoding cost by up to 70% vs. DeepSeek-V3

✨ 4T image-text pretraining: strong vision–language grounding

✨ Modular, efficient, deployable: runs on just 8×48GB GPUs
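A rough sanity check on the "321B total" and "8×48GB GPUs" figures quoted for Step 3. This is a sketch under stated assumptions: it counts weights only (no KV cache, activations, or runtime overhead), and the bytes-per-parameter values are hypothetical, since the post does not say which precision is used for deployment.

```python
def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight storage in GB (1e9 params * bytes, divided by 1e9)."""
    return params_billion * bytes_per_param

cluster_gb = 8 * 48  # eight 48 GB GPUs, as quoted in the post

# Precisions below are assumptions for illustration, not stated in the post.
for label, bytes_per_param in [("16-bit", 2), ("8-bit", 1)]:
    need = weights_gb(321, bytes_per_param)
    verdict = "fits" if need <= cluster_gb else "does not fit"
    print(f"{label}: ~{need:.0f} GB of weights, {verdict} in {cluster_gb} GB")
```

Under these assumptions the weights would need to be stored at roughly one byte per parameter to fit, which leaves limited headroom for everything else.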