Read the blog: huggingface.co/blog/nanovlm

21.05.2025 13:10

Train your Vision-Language Model in just two commands:

> git clone github.com/huggingface/...

> python train.py

New Blog📖✨:

nanoVLM: The simplest way to train your own Vision-Language Model in pure PyTorch, explained step-by-step!

Easy to read, even easier to use. Train your first VLM today!

Link: webml-community-smolvlm-realtime-webgpu.static.hf.space/index.html

14.05.2025 15:39

Real-time SmolVLM in a web browser with transformers.js.

All running locally with no installs. Just open the website.

If you’re into efficient multimodal models, you’ll love this one.

Check out the paper: huggingface.co/papers/2504....

📱 Real-world Efficiency: We've created an app using SmolVLM on an iPhone 15 and got real-time inference directly from its camera!

🌐 Browser-based Inference? Yep! We get lightning-fast inference speeds of 40-80 tokens per second directly in a web browser. No tricks, just compact, efficient models!

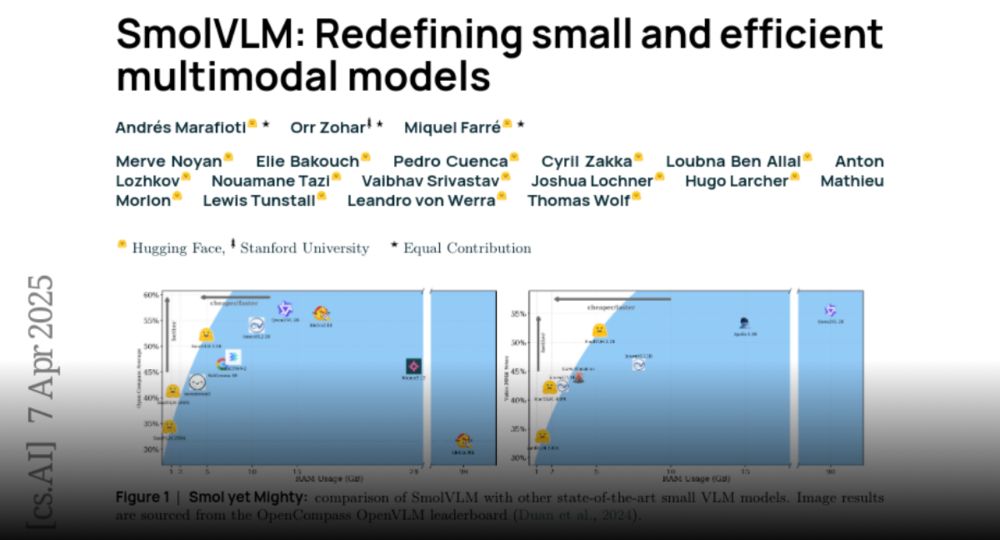

🌟 State-of-the-Art Performance: SmolVLM comes in three powerful yet compact sizes (256M, 500M, and 2.2B parameters), each setting a new state of the art in image and video understanding for its hardware constraints.

08.04.2025 15:12

✨ Less CoT, more efficiency: Turns out, too much Chain-of-Thought (CoT) data actually hurts performance in small models.

✨ Longer videos, better results: Increasing video length during training enhanced performance on both video and image tasks.

✨ System prompts and special tokens are key: Introducing system prompts and dedicated media intro/outro tokens significantly boosted our compact VLM’s performance—especially for video tasks.

08.04.2025 15:12

✨ Pixel shuffling magic: Aggressive pixel shuffling helped our compact VLMs "see" better, delivering the same performance with sequences 16x shorter!

✨ Learned positional tokens FTW: For compact models, learned positional tokens significantly outperform raw text tokens, enhancing efficiency and accuracy.

✨ Smaller is smarter with SigLIP: Surprise! Smaller LLMs didn't benefit from the usual large SigLIP (400M). Instead, we use the 80M base SigLIP that performs equally well at just 20% of the original size!

08.04.2025 15:12
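Pixel shuffling (space-to-depth) trades sequence length for embedding width: each r × r block of neighbouring visual tokens is concatenated into one token, so the sequence shrinks by a factor of r². A 16x shorter sequence therefore corresponds to r = 4, which is our assumption here. The minimal pure-Python sketch below illustrates the idea; the function name `pixel_shuffle`, the row-major token layout and the 32 × 32 grid are hypothetical choices, not taken from the SmolVLM code.

```python
def pixel_shuffle(tokens, height, width, r):
    """Merge each r x r block of neighbouring visual tokens into one token.

    tokens: row-major list of height * width embeddings (each a list of floats).
    Returns (height // r) * (width // r) embeddings, each r * r times wider.
    """
    if height % r or width % r:
        raise ValueError("grid height and width must be divisible by r")
    out = []
    for by in range(0, height, r):        # top row of each r x r block
        for bx in range(0, width, r):     # left column of each r x r block
            merged = []
            for dy in range(r):
                for dx in range(r):
                    # concatenate the embedding of every token in the block
                    merged.extend(tokens[(by + dy) * width + (bx + dx)])
            out.append(merged)
    return out


# Hypothetical 32 x 32 grid of 1-dimensional embeddings (1024 tokens), r = 4 assumed.
grid = [[float(i)] for i in range(32 * 32)]
shuffled = pixel_shuffle(grid, 32, 32, r=4)
print(len(grid), "->", len(shuffled))  # 1024 -> 64, i.e. 16x shorter
print(len(shuffled[0]))                # 16: each new token carries 4 * 4 old embeddings
```

In a real model this is usually done with tensor reshapes and followed by a projection from the widened embedding back to the language model's hidden size; the explicit loops here only make the index arithmetic visible.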

Here are the coolest insights from our experiments:

✨ Longer context = Big wins: Increasing the context length from 2K to 16K gave our tiny VLMs a 60% performance boost!

Today, we share the tech report for SmolVLM: Redefining small and efficient multimodal models.

🔥 Explaining how to create a tiny 256M VLM that uses less than 1GB of RAM and outperforms our 80B models from 18 months ago!

huggingface.co/papers/2504....

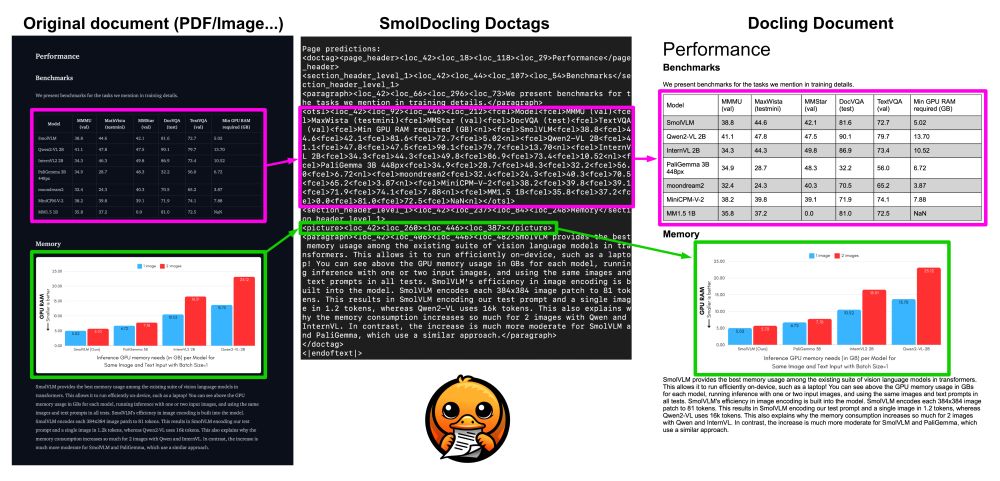
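As a rough back-of-the-envelope check of the "less than 1GB of RAM" figure, assume the 256M weights are stored in 16-bit precision (2 bytes per parameter) and ignore activations and the KV cache:

$$
256 \times 10^{6}\ \text{params} \times 2\ \tfrac{\text{bytes}}{\text{param}} = 5.12 \times 10^{8}\ \text{bytes} \approx 0.51\ \text{GB}
$$

At 32-bit precision the same weights would take about 1.02 GB, so the sub-1GB figure presumably relies on half-precision (or smaller) weights.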

SmolDocling is available today 🏗️

🔗 Model: huggingface.co/ds4sd/SmolDo...

📖 Paper: huggingface.co/papers/2503....

🤗 Space: huggingface.co/spaces/ds4sd...

Try it and let us know what you think! 💬

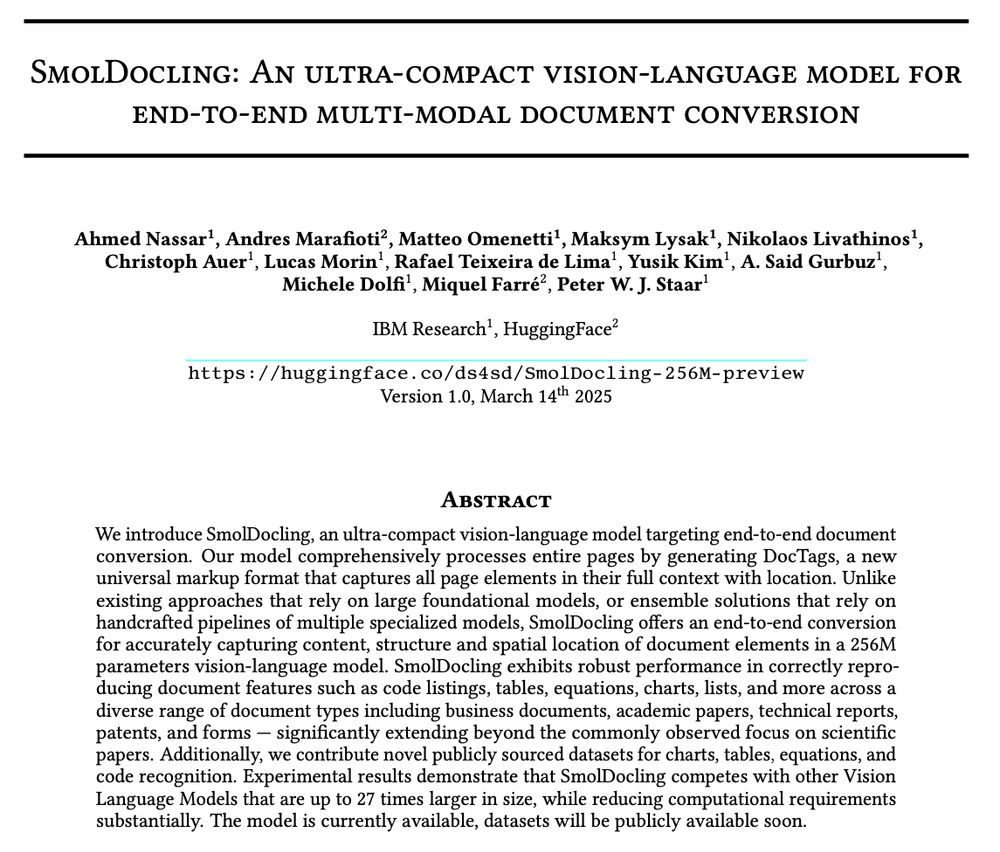

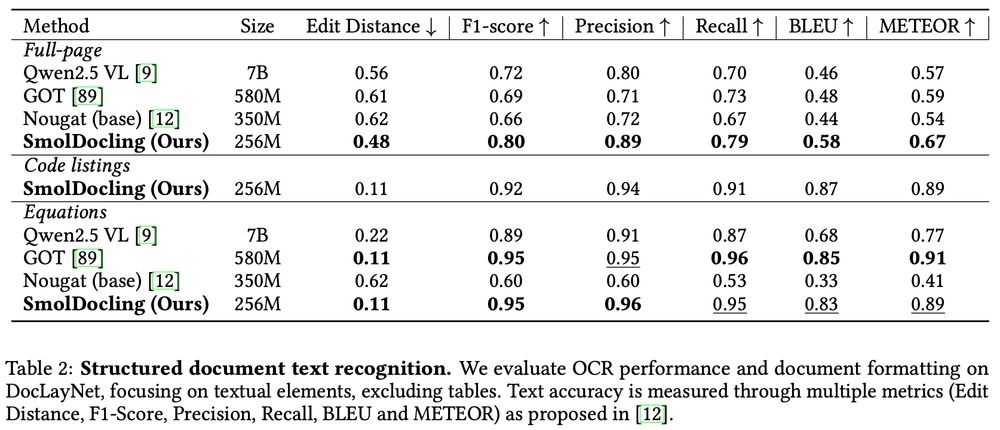

At only 256M parameters, SmolDocling outperforms much larger models on key document conversion tasks:

🖋️ Full-page transcription: Beats models 27× bigger!

📑 Equations: Matches or beats leading models like GOT

💻 Code recognition: We introduce the first benchmark for code OCR

What makes it unique?

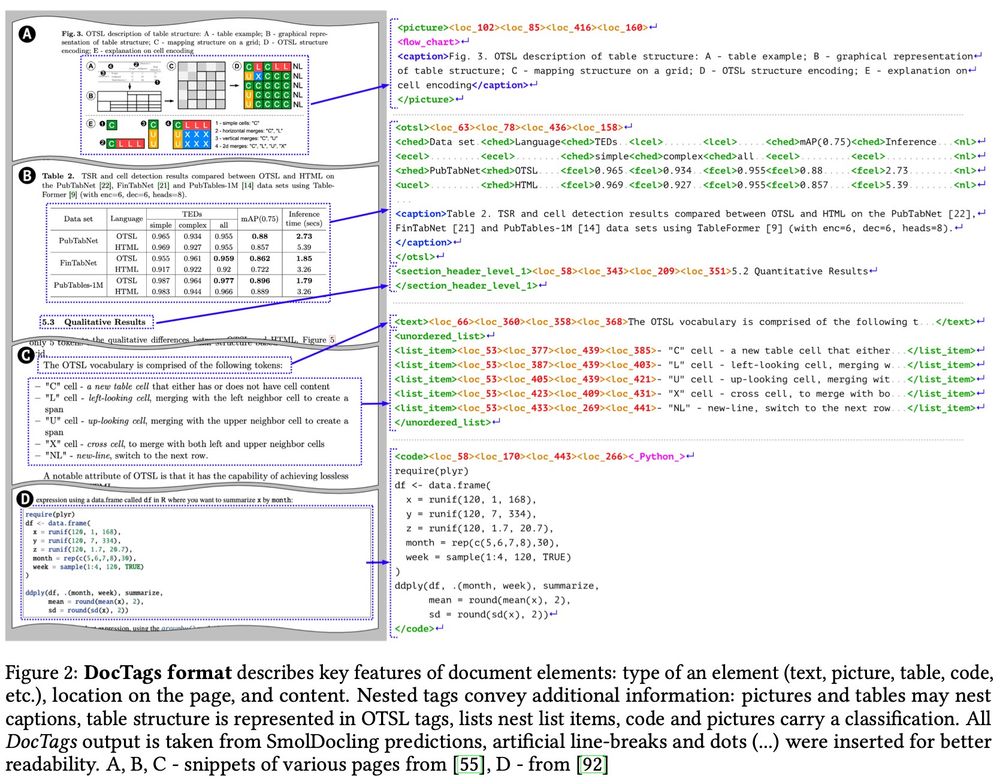

📌 Handles everything a document has: tables, charts, code, equations, lists, and more

📌 Works beyond scientific papers—supports business docs, patents, and forms

📌 It runs with less than 1GB of RAM, so running at large batch sizes is super cheap!

How does SmolDocling beat models 27× bigger? SmolDocling transforms any document into structured metadata with DocTags and is SOTA in:

✅ Full-page conversion

✅ Layout identification

✅ Equations, tables, charts, plots, code OCR

🚀 We just dropped SmolDocling: a 256M open-source vision LM for complete document OCR! 📄✨

Lightning fast: processes a page in 0.35 sec on a consumer GPU using < 500MB VRAM ⚡

SOTA in document conversion, beating every competing model we tested (including models with 27x more params) 🤯

But how? 🧶⬇️

Extremely bullish on @CohereForAI's Aya Vision (8B & 32B) - new SOTA open-weight VLMs

- 8B wins up to 81% of the time in its class, better than Gemini Flash

- 32B beats Llama 3.2 90B!

- Integrated on @hf.co from Day 0!

Check out their blog! huggingface.co/blog/aya-vis...

Me too! Highlight of my career so far :)

31.01.2025 15:21

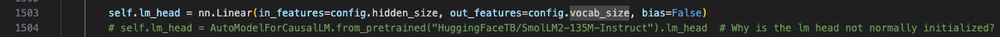

And that's why we didn't release this before. It's live research code: most of it gets rewritten fairly often, while some parts have been the same for years.

It works, it manages to produce SOTA results at 256M and 80B sizes, but it's not production code.

Go check it out:

github.com/huggingface/...

And it also has a bunch of bugs like this one in our modeling_vllama3.py file. We start from a pretrained LLM, but for some reason the weights of the head are not loaded from the model. I still don't know why :(

31.01.2025 15:06

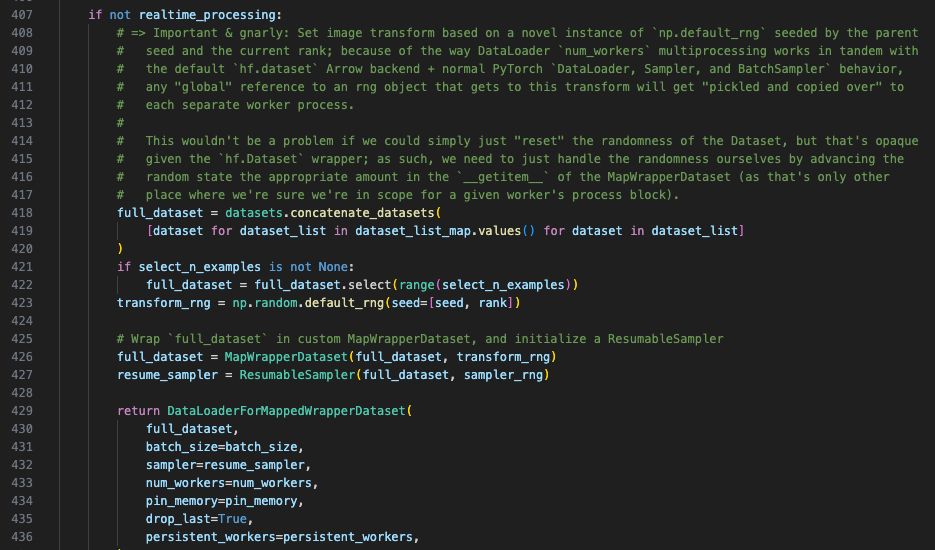

The codebase is full of interesting insights like this one in our dataset.py file: How do you get reproducible randomness in different processes across different machines?

Seed a separate random number generator for each process from the tuple (seed, rank)!

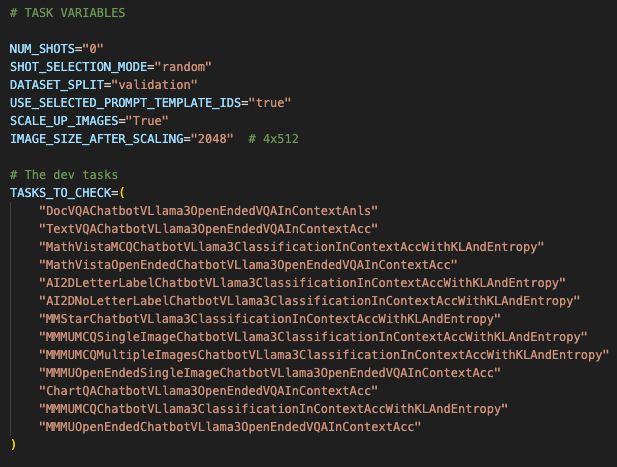
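The trick is that every process derives its own generator from the pair (seed, rank): a run is reproducible because the pair is fixed, while different ranks still draw different samples. Below is a minimal stdlib sketch; `make_rng` and the SHA-256 mixing step are our own illustrative choices, not necessarily what dataset.py does. Python's `random.Random` no longer accepts a tuple as a seed, so the pair is hashed into an integer first.

```python
import hashlib
import random


def make_rng(seed: int, rank: int) -> random.Random:
    """Return a random generator that is fully determined by (seed, rank)."""
    # Mix the pair through SHA-256: the result is identical on every machine and
    # process (unlike the built-in hash() of str/bytes, which is salted per process).
    digest = hashlib.sha256(f"{seed}:{rank}".encode()).digest()
    return random.Random(int.from_bytes(digest[:8], "big"))


run_1 = make_rng(1234, rank=3).sample(range(100), 5)
run_2 = make_rng(1234, rank=3).sample(range(100), 5)
other = make_rng(1234, rank=4).sample(range(100), 5)
print(run_1 == run_2)  # True: the same (seed, rank) reproduces the same draws
print(run_1, other)    # a different rank gets its own stream of draws
```

In a distributed job, `rank` would typically come from the launcher (for example the process's global rank), so each GPU sees a different but reproducible data order.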

After training, you can run the evaluation on all of these tasks with:

sbatch vision/experiments/evaluation/vloom/async_evals_tr_346/run_evals_0_shots_val_2048.slurm

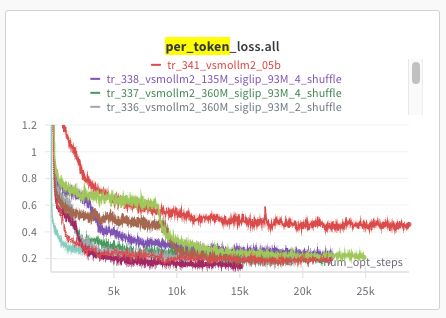

Launching the training for SmolVLM 256M is as simple as:

./vision/experiments/pretraining/vloom/tr_341_smolvlm_025b_1st_stage/01_launch.sh

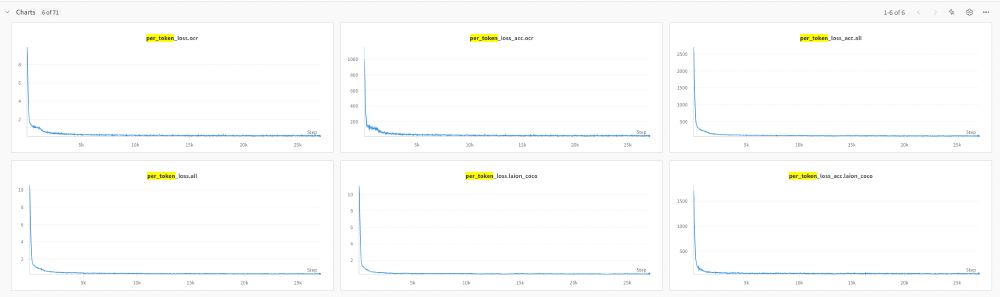

Then we use wandb to track the losses.

Check out the file to find out details!

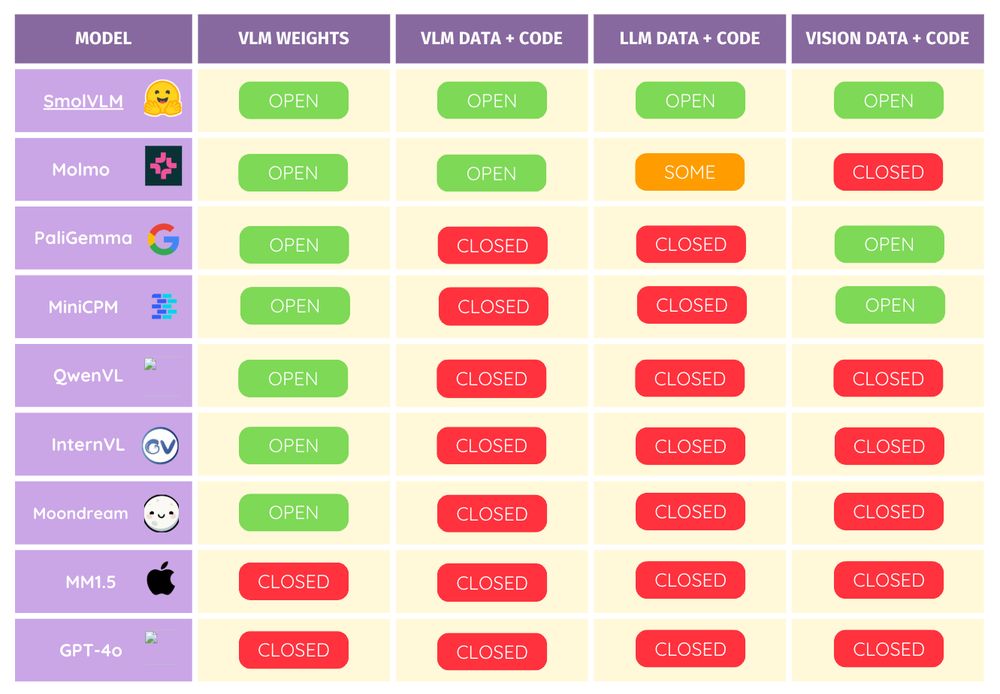

Fuck it, today we're open-sourcing the codebase used to train SmolVLM from scratch on 256 H100s 🔥

Inspired by our team's effort to open-source DeepSeek's R1, we are releasing the training and evaluation code on top of the weights 🫡

Now you can train any SmolVLM—or create your own custom VLMs!

Links :D

Demo: huggingface.co/spaces/Huggi...

Models: huggingface.co/collections/...

Blog: huggingface.co/blog/smolervlm

SmolVLM upgrades:

• New vision encoder: Smaller but higher res.

• Improved data mixtures: better OCR and doc understanding.

• Higher pixels/token: 4096 vs. 1820 = more efficient.

• Smart tokenization: Faster training and better performance. 🚀

Better, faster, smarter.
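To make the pixels-per-token numbers concrete, take a hypothetical 512 × 512 input image (the size is our choice for illustration, not from the post) and divide its pixel count by each ratio:

$$
\frac{512 \times 512}{4096} = 64\ \text{tokens} \qquad \text{vs.} \qquad \frac{512 \times 512}{1820} \approx 144\ \text{tokens}
$$

The same image costs roughly 2.25× fewer visual tokens (4096 / 1820 ≈ 2.25), which is where the efficiency gain comes from.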

We have partnered with IBM's Docling to build amazing smol models for document understanding. Our early results are impressive. Stay tuned for future releases!

23.01.2025 13:33