🚨 New FREE self-paced course!

We are excited to launch the #EpiTrainingKit #Africa: Introduction to Infectious Disease Modelling for Public Health.

🎯 Tailored for the African context

🌍 With a gender perspective

🆓 Open-access and online

#EpiTKit #Epiverse #PublicHealth

04.08.2025 18:30 — 👍 14 🔁 8 💬 1 📌 0

#EpiverseTRACE is now on Bluesky & LinkedIn! 🎉

We’re expanding to be more inclusive & diverse, reaching a wider audience in public health & data science.

Want to know more about what we do?⁉️🤔

🧵a thread!

11.03.2025 10:28 — 👍 13 🔁 12 💬 1 📌 0

Something I don't understand is: why can't LLMs write novel-length fiction yet?

They've got the context length for it. And new models seem capable of the multi-hop reasoning required for plot. So why hasn't anyone demoed a model that can write long interesting stories?

I do have a theory ... +

30.12.2024 00:17 — 👍 205 🔁 31 💬 49 📌 19

Finally, a Replacement for BERT: Introducing ModernBERT

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

Great blog post (by a 15-author team!) on their release of ModernBERT, the continuing relevance of encoder-only models, and how they relate to, say, GPT-4/llama. Accessible enough that I might use this as an undergrad reading.

19.12.2024 19:11 — 👍 75 🔁 19 💬 1 📌 2

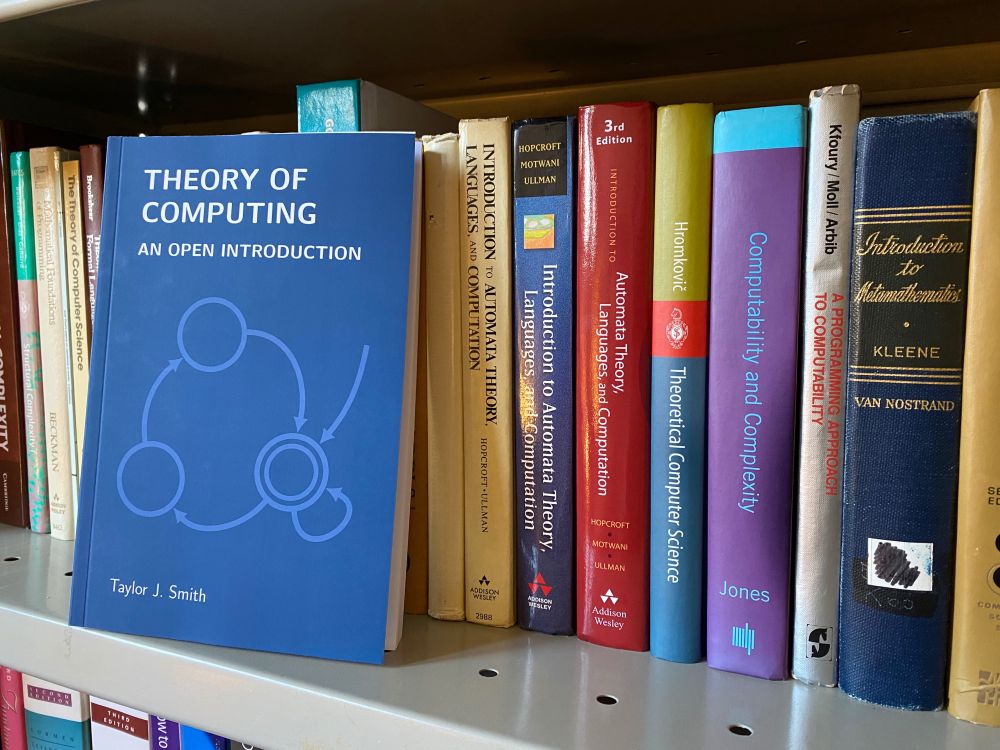

A photo of my open textbook, "Theory of Computing: An Open Introduction", on my bookshelf leaning up against some other classic theory texts.

With students writing my theory exam today, I figured it's a good time to share a link to my open textbook with all you current (and future!) theoreticians.

This term was the first time I used it in class, and students loved it. Big plans for future editions, so stay tuned!

taylorjsmith.xyz/tocopen/

16.12.2024 17:35 — 👍 10 🔁 1 💬 2 📌 0

Great tutorial on language models!

11.12.2024 08:04 — 👍 2 🔁 0 💬 0 📌 0

Check out this BEAUTIFUL interactive blog about cameras and lenses

ciechanow.ski/cameras-and-...

27.11.2024 02:54 — 👍 75 🔁 16 💬 6 📌 1

$100K or 100 Days: Trade-offs when Pre-Training with Academic Resources

Pre-training is notoriously compute-intensive and academic researchers are notoriously under-resourced. It is, therefore, commonly assumed that academics can't pre-train models. In this paper, we seek...

A timely paper exploring ways academics can pretrain larger models than they think, e.g. by trading time against GPU count.

Since the title is misleading, let me also say: US academics do not need $100k for this. They used 2,000 GPU hours in this paper; NSF will give you that. #MLSky

23.11.2024 13:50 — 👍 144 🔁 12 💬 10 📌 3

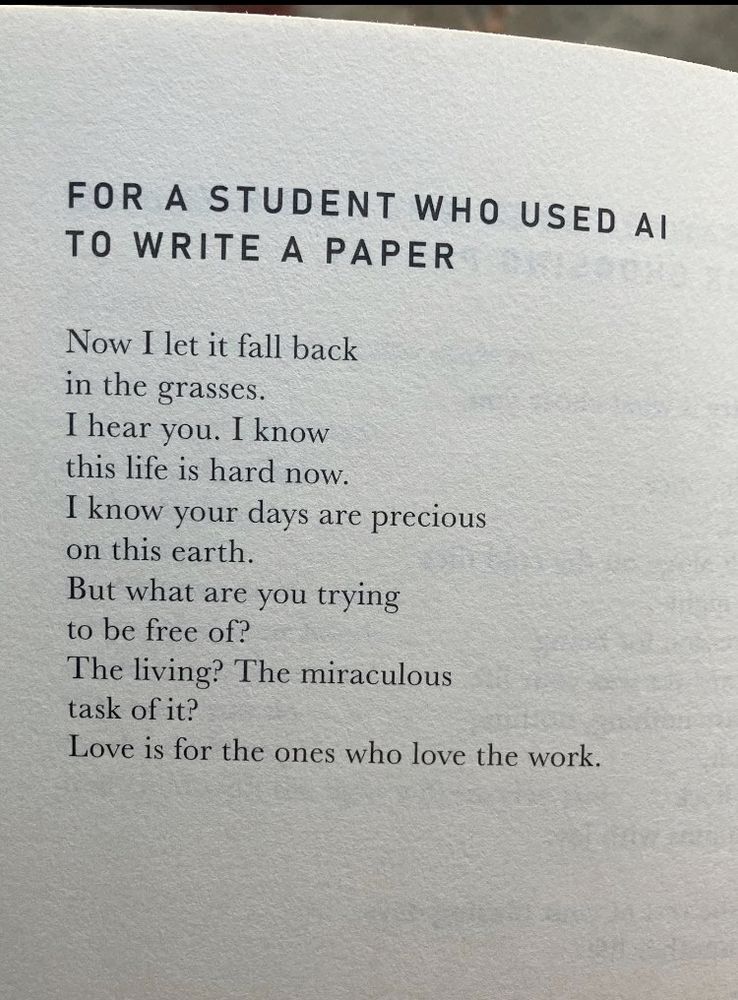

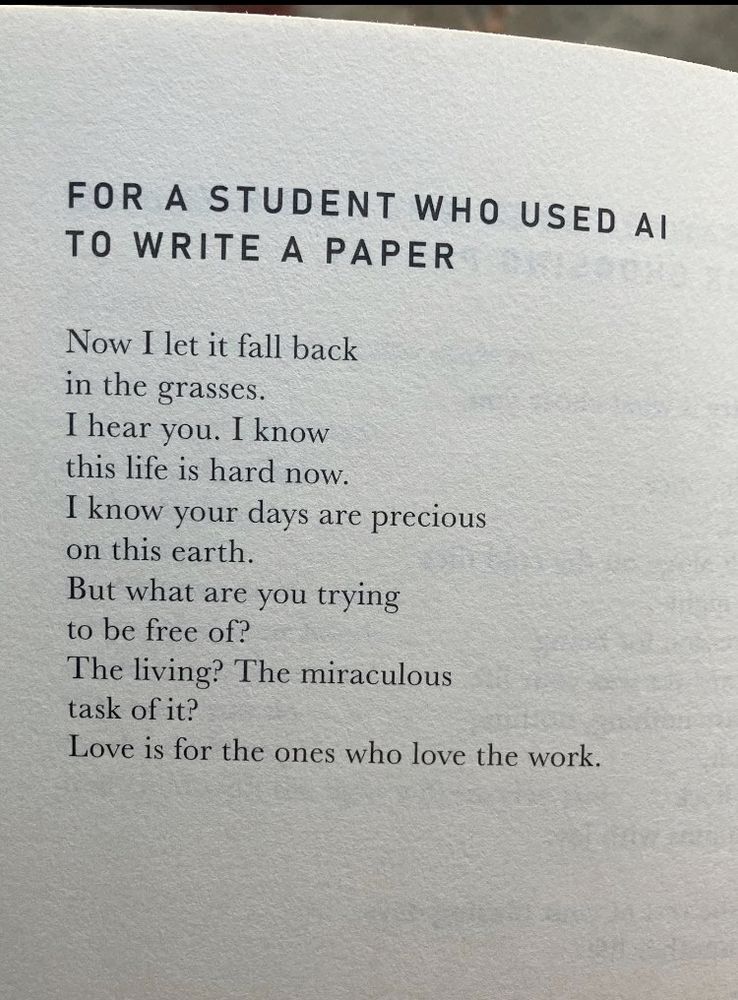

A poem for my last day working at the writing center for the semester (by Joseph Fasano)

23.11.2024 02:09 — 👍 15609 🔁 3305 💬 148 📌 133

Network scientist; director of the &-Lab at Northeastern University; data justice fellow at the Institute on Policing, Incarceration & Public Safety at Harvard University.

brennanklein.com

🔬 Ph.D. | Emerging & Vector-Borne Diseases

🧪 Molecular Epidemiologist | One Health

🦠 Zoonotic malaria, Human-wildlife interface

📍 London, UK | Open to collaborations

Reader in SynBio and Biotech @ QMUL, UKRI Futures Leaders Fellow, MA in Creative Writing from BirkbeckUoL. Evolution and #Synbio of #Photosynthesis 🌵🌺

Bogotana. I keep the wolf at the door, but he calls me up. (ENG/ESP)

Sueño con y trabajo por un mundo con oportunidades para todas las personas 🏳️🌈🏳️⚧️. A veces (casi siempre) uso esto como un diario.

Global collab (https://www.javeriana.edu.co/, https://www.uniandes.edu.co/, @mrcunitgambia.bsky.social, @lshtm.bsky.social) developing a trustworthy data analysis ecosystem to get ahead of the next public health crisis.

📌 https://epiverse-trace.github.io/

Epidemiologist working on Urban Health, Climate, Social Justice, and Latin American Cities.

Community Engagement Manager @datadotorg and #Epiverse | Colombia's top scorer in Ultimate frisbee Master category | beware of social constructs R&M

Math Assoc. Prof. (On leave, Aix-Marseille, France)

Teaching Project (non-profit): https://highcolle.com/

Husband, dad, veteran, writer, and proud Midwesterner. 19th US Secretary of Transportation and former Mayor of South Bend.

Scientist who is passionate about correcting misinformation

https://www.youtube.com/backtothescience

George S Pepper Professor of Public Health & Preventative Medicine; Biostats, Stats & Data Science, Lifelong learner & truth seeker; Views my own & not employer’s

Spreading truth about vaccines since 2015.

Profe de informática cada vez más interesado en las humanidades.

#Educación #Cultura #Ecologismo #Democracia

Neuroscience | Behavior | Aging and fertility | Ants 🐜

Instituto de Investigaciones Biomédicas, UNAM

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp.bsky.social and The Stanford AI Lab. He/Him/His. https://web.stanford.edu/~cgpotts/

Staff Software Engineer at Meta

Software developer, telecommunication engineer, space geek, geo enthousiast and public speaker

Applied #IR & #NLP Research @zeta-alpha.bsky.social, making search good.

CS PhD @tudelft. Former @naverlabseurope.bsky.social, @bloomberg.com

CNF✈️AMS