I am at CHIL this week to present my poster (Caught in the Web of Words: Do LLMs Fall for Spin in Medical Literature?) on Thursday, June 26.

Looking forward to connecting and sharing our work on spin with the CHIL community!

26.06.2025 00:52 —

👍 2

🔁 0

💬 0

📌 0

I am at CHI this week to present my poster (Framing Health Information: The Impact of Search Methods and Source Types on User Trust and Satisfaction in the Age of LLMs) on Wednesday, April 30.

CHI Program Link: programs.sigchi.org/chi/2025/pro...

Looking forward to connecting with you all!

29.04.2025 00:50 —

👍 1

🔁 1

💬 0

📌 0

I'm searching for some comp/ling experts to provide a precise definition of “slop” as it refers to text (see: corp.oup.com/word-of-the-...)

I put together a Google Form that should take no longer than 10 minutes to complete: forms.gle/oWxsCScW3dJU...

If you can help, I'd appreciate your input! 🙏

10.03.2025 20:00 —

👍 10

🔁 8

💬 0

📌 0

Can we fix this? We tested zero-shot prompts to reduce LLMs' susceptibility to spin.

Good news: prompts that encouraged reasoning reduced their tendency to overstate trial results! 🛠️

Careful design is key to improving evidence synthesis for clinical decisions. [6/7]

15.02.2025 02:34 —

👍 3

🔁 0

💬 1

📌 0

When we asked LLMs to simplify abstracts into plain language, they often propagated spin into their summaries. This means LLMs could unintentionally mislead patients and non-experts about the effectiveness of treatments. 😱 [5/7]

15.02.2025 02:34 —

👍 2

🔁 0

💬 1

📌 0

We asked LLMs how favorably they perceived a treatment’s results (0-10 scale). Even though LLMs could detect spin, they were far more influenced by it than human experts.

Meaning: LLMs believed spun abstracts presented more favorable results! 😬 [4/7]

15.02.2025 02:34 —

👍 0

🔁 0

💬 1

📌 0

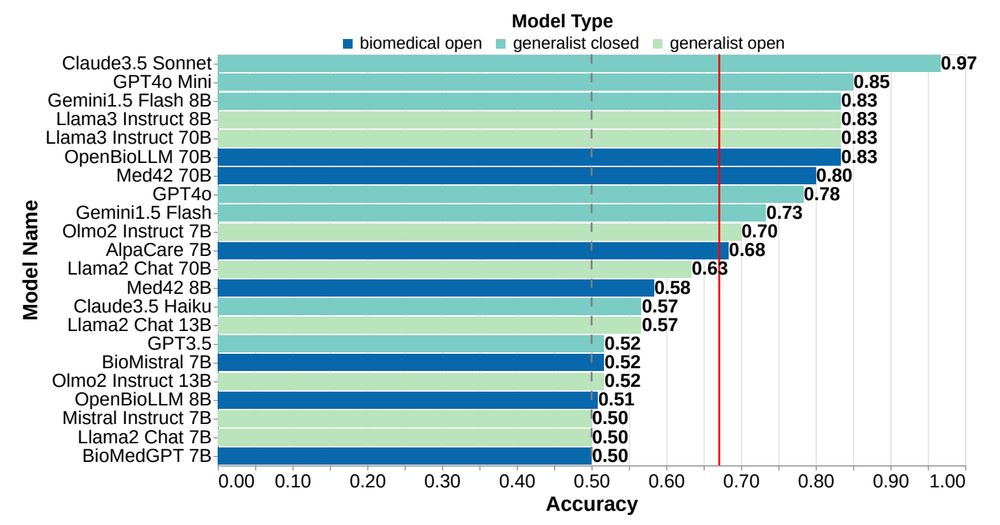

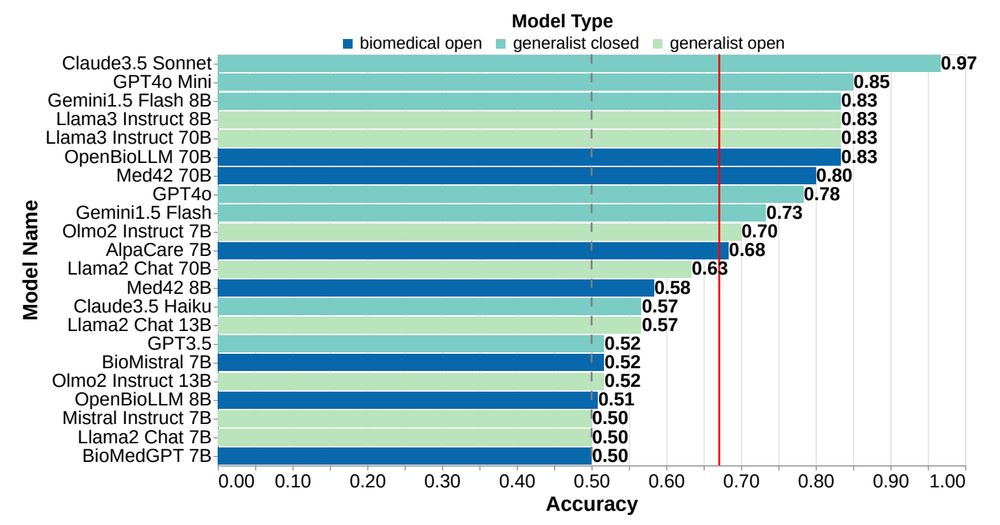

When we prompted 22 LLMs to identify spin in medical abstracts, we found that they were moderately to strongly capable of detecting spin.

However, things got interesting when we asked LLMs to interpret the results… [3/7]

🔽

15.02.2025 02:34 —

👍 1

🔁 0

💬 1

📌 0

So what is spin?

Spin refers to reporting strategies that make experimental treatments appear more beneficial than they actually are—often distracting from nonsignificant results.

Example:

❌ “The treatment shows a promising trend toward significance…”

✅ “No significant difference was found.”

[2/7]

15.02.2025 02:34 —

👍 1

🔁 0

💬 1

📌 0

🚨 Do LLMs fall for spin in medical literature? 🤔

In our new preprint, we find that LLMs are susceptible to biased reporting of clinical treatment benefits in abstracts—more so than human experts. 📄🔍 [1/7]

Full Paper: arxiv.org/abs/2502.07963

🧵👇

15.02.2025 02:34 —

👍 63

🔁 25

💬 3

📌 4

As someone interested in an academic position post-PhD, I found this post very helpful. Thank you for sharing your wisdom and advice.

24.01.2025 17:45 —

👍 1

🔁 0

💬 0

📌 0

Awesome! Thank you

07.01.2025 15:21 —

👍 1

🔁 0

💬 0

📌 0

Thank you!

07.01.2025 01:18 —

👍 1

🔁 0

💬 1

📌 0

The application form says it is no longer accepting responses. Is the application closed now?

06.01.2025 23:54 —

👍 1

🔁 0

💬 1

📌 0