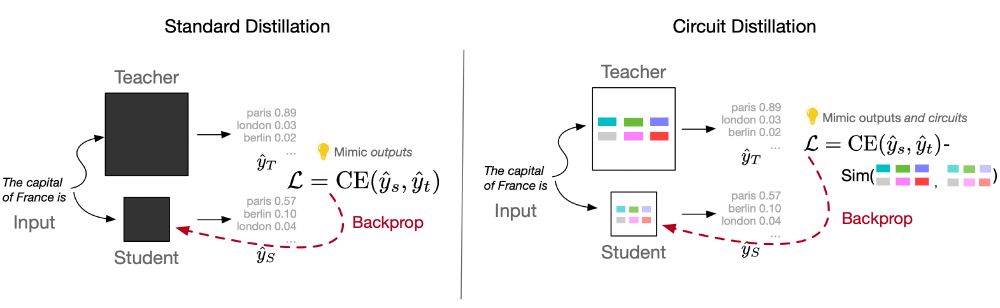

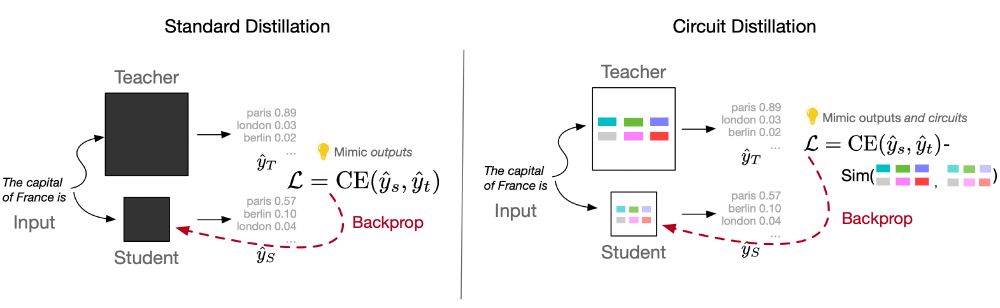

📘 How it works (high level): identify the teacher’s task circuit --> find functionally analogous student components via ablation --> align their internals during training. Outcome: the student learns the same computation, not just the outputs. (3/4)

30.09.2025 23:32
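
A toy sketch of the three steps in (3/4), in plain Python. Everything here is an assumption for illustration, not the authors' implementation. The hypothetical component names are one example. Each component is summarized by a vector of ablation effects, for example the drop in task accuracy on a few probe inputs when that component is knocked out. Components are paired by greedy cosine matching, and mean squared error serves as the alignment term. The actual Circuit Distillation objective may differ. Real teacher and student heads also usually have different widths, which would call for a projection or a width-agnostic similarity instead of plain MSE.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def match_components(teacher_effects, student_effects):
    """Hypothetical helper: greedily pair each teacher circuit component with the
    unused student component whose ablation-effect profile is most similar."""
    if len(student_effects) < len(teacher_effects):
        raise ValueError("need at least as many student components as teacher ones")
    matches, used = {}, set()
    for t_name, t_vec in teacher_effects.items():
        candidates = [s for s in student_effects if s not in used]
        best = max(candidates, key=lambda s: cosine(t_vec, student_effects[s]))
        matches[t_name] = best
        used.add(best)
    return matches


def alignment_loss(teacher_acts, student_acts, matches):
    """Mean over matched pairs of the per-dimension squared error between
    activations; assumes matched components have the same width."""
    total = 0.0
    for t_name, s_name in matches.items():
        t_vec, s_vec = teacher_acts[t_name], student_acts[s_name]
        total += sum((a - b) ** 2 for a, b in zip(t_vec, s_vec)) / len(t_vec)
    return total / len(matches)


def combined_loss(task_loss, align_loss, weight=1.0):
    """Task loss plus a weighted alignment term (`weight` is a hypothetical knob)."""
    return task_loss + weight * align_loss


if __name__ == "__main__":
    # Hypothetical ablation effects: accuracy drop on three probe inputs.
    teacher_effects = {"T.L3H1": [0.9, 0.1, 0.8], "T.L5H2": [0.1, 0.7, 0.2]}
    student_effects = {
        "S.L1H0": [0.2, 0.6, 0.1],
        "S.L2H3": [0.8, 0.2, 0.9],
        "S.L2H5": [0.0, 0.0, 0.1],
    }
    matches = match_components(teacher_effects, student_effects)
    print(matches)  # {'T.L3H1': 'S.L2H3', 'T.L5H2': 'S.L1H0'}

    # Hypothetical activations of the matched components on one input.
    teacher_acts = {"T.L3H1": [1.0, 0.0], "T.L5H2": [0.5, 0.5]}
    student_acts = {"S.L2H3": [0.0, 0.0], "S.L1H0": [0.5, 0.5], "S.L2H5": [0.3, 0.3]}
    align = alignment_loss(teacher_acts, student_acts, matches)
    print(align)  # 0.25
    print(combined_loss(1.0, align, weight=0.5))  # 1.125
```

Greedy matching keeps the sketch short. An optimal one-to-one assignment (e.g. the Hungarian algorithm) would be a natural swap if the greedy order matters.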

🍎 On the Entity Tracking and Theory of Mind tasks, a student that updates only ~11–15% of its attention heads inherits the teacher’s capability and closes much of the performance gap: targeted transfer rather than brute-force fine-tuning. (2/4)

30.09.2025 23:32
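
Updating only a small subset of heads amounts to freezing everything else. The sketch below shows the bookkeeping under made-up assumptions: the head names and the 32-layer, 32-head model shape are hypothetical. It computes what fraction of heads is trainable and applies an SGD step that leaves frozen heads untouched. In a real framework this is typically done by masking the gradients of the frozen heads' parameter slices. The thread does not say how the authors implemented it.

```python
def head_fraction(n_trainable, n_layers, heads_per_layer):
    """Share of all attention heads that are allowed to change."""
    return n_trainable / (n_layers * heads_per_layer)


def masked_sgd_step(params, grads, trainable, lr=0.1):
    """Hypothetical helper: one plain SGD step that updates only heads listed in
    `trainable`; every other head keeps its weights exactly."""
    updated = {}
    for name, weights in params.items():
        if name in trainable:
            updated[name] = [w - lr * g for w, g in zip(weights, grads[name])]
        else:
            updated[name] = list(weights)  # frozen head: copied unchanged
    return updated


if __name__ == "__main__":
    # Hypothetical model shape: 32 layers x 32 heads = 1024 heads in total.
    print(head_fraction(128, 32, 32))  # 0.125, inside the ~11-15% range

    params = {"L0H0": [1.0, 2.0], "L0H1": [1.0, 2.0]}
    grads = {"L0H0": [1.0, 1.0], "L0H1": [1.0, 1.0]}
    print(masked_sgd_step(params, grads, trainable={"L0H0"}, lr=0.5))
    # {'L0H0': [0.5, 1.5], 'L0H1': [1.0, 2.0]}
```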

🔊 New work w/ @silvioamir.bsky.social & @byron.bsky.social! We show you can distill a model’s mechanism, not just its answers: we teach a small LM to run the same circuit as a larger teacher model. We call it Circuit Distillation. (1/4)

30.09.2025 23:32

4️⃣ Our analysis spans summarization, question answering, and instruction following, using models like Llama, Mistral, and Gemma as teachers. Across tasks, PoS templates consistently outperformed n-grams in distinguishing teachers 📊 [5/6]

11.02.2025 17:16

3️⃣ But here’s the twist: syntactic patterns such as part-of-speech (PoS) templates do retain strong teacher signals! Students unconsciously mimic structural patterns from their teacher, leaving behind an identifiable trace 🧩 [4/6]

11.02.2025 17:16
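
A toy illustration of why structure can survive when wording does not. Here "PoS templates" are approximated as part-of-speech n-grams. The outputs are assumed to be already tagged; a real pipeline would run a tagger such as spaCy or NLTK. A student is attributed to the teacher with the most similar count profile by cosine similarity. The texts, tags, n=3, and the nearest-profile rule are all hypothetical. The paper's template definition and attribution classifier may differ, and this does not reproduce its results.

```python
import math
from collections import Counter


def ngrams(seq, n):
    """All contiguous length-n windows of a sequence, as tuples."""
    return [tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)]


def pos_profile(tagged_texts, n=3):
    """Counts of PoS n-grams (our stand-in for templates) over (word, tag) sentences."""
    counts = Counter()
    for sent in tagged_texts:
        counts.update(ngrams([tag for _, tag in sent], n))
    return counts


def word_profile(tagged_texts, n=3):
    """Counts of lowercased word n-grams, for comparison with the PoS profile."""
    counts = Counter()
    for sent in tagged_texts:
        counts.update(ngrams([word.lower() for word, _ in sent], n))
    return counts


def cosine(c1, c2):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(v * c2[k] for k, v in c1.items() if k in c2)
    n1 = math.sqrt(sum(v * v for v in c1.values()))
    n2 = math.sqrt(sum(v * v for v in c2.values()))
    return dot / (n1 * n2) if n1 and n2 else 0.0


def attribute(student_profile, teacher_profiles):
    """Name of the teacher whose profile is most similar to the student's."""
    return max(teacher_profiles, key=lambda t: cosine(student_profile, teacher_profiles[t]))


if __name__ == "__main__":
    # Hypothetical pre-tagged outputs (Penn Treebank tags).
    teacher_a = [
        [("The", "DT"), ("model", "NN"), ("is", "VBZ"), ("accurate", "JJ")],
        [("A", "DT"), ("summary", "NN"), ("seems", "VBZ"), ("short", "JJ")],
    ]
    teacher_b = [[("We", "PRP"), ("tested", "VBD"), ("it", "PRP"), ("carefully", "RB")]]
    student = [[("The", "DT"), ("answer", "NN"), ("looks", "VBZ"), ("correct", "JJ")]]

    teachers = {"teacher_a": teacher_a, "teacher_b": teacher_b}
    for name, texts in teachers.items():
        print(name,
              round(cosine(word_profile(student), word_profile(texts)), 3),
              round(cosine(pos_profile(student), pos_profile(texts)), 3))
    # teacher_a 0.0 1.0
    # teacher_b 0.0 0.0
    print(attribute(pos_profile(student), {k: pos_profile(v) for k, v in teachers.items()}))
    # teacher_a
```

In this contrived case the student shares no word trigrams with either teacher, so the word profile cannot separate them, while its tag sequence matches teacher A exactly.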

2️⃣ Simple similarity metrics like BERTScore fail to attribute a student to its teacher. Even perplexity under the teacher model isn’t enough to reliably identify the original teacher. Shallow lexical overlap is just not a strong fingerprint 🔍 [3/6]

11.02.2025 17:16
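
The perplexity baseline from [3/6] fits in a few lines. Score the student's output with each candidate teacher, convert the per-token log-probabilities to perplexity, and pick the lowest. The teacher names and log-probabilities below are made up. Real values would come from running each teacher model over the student's text. The thread reports that this signal is not reliable enough on its own, so treat the sketch as the baseline being criticized, not as a working attribution method.

```python
import math


def perplexity(token_logprobs):
    """Perplexity from natural-log token probabilities: exp(-mean log p)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def attribute_by_perplexity(logprobs_by_teacher):
    """Guess the teacher under which the student's text has the lowest perplexity."""
    return min(logprobs_by_teacher, key=lambda t: perplexity(logprobs_by_teacher[t]))


if __name__ == "__main__":
    # Hypothetical per-token log-probs of ONE student output, scored by each candidate.
    scores = {"teacher_x": [-1.0, -1.0], "teacher_y": [-2.0, -2.0]}
    for name, lps in scores.items():
        print(name, round(perplexity(lps), 3))
    # teacher_x 2.718
    # teacher_y 7.389
    print(attribute_by_perplexity(scores))  # teacher_x
```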

1️⃣ Model distillation transfers knowledge from a large teacher model to a smaller student model. But does the fine-tuned student reveal clues in its outputs about its origins? [2/6]

11.02.2025 17:16
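
For readers new to distillation, one widely used formulation is the soft-label loss of Hinton et al. (2015). It trains the student to match the teacher's temperature-softened output distribution. The thread does not say this is the recipe its students were trained with. Fine-tuning a student on teacher-generated text (sequence-level distillation) is another common setup. The logits and the temperature of 2.0 below are illustrative only.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    z = sum(exps)
    return [e / z for e in exps]


def kd_loss(teacher_logits, student_logits, temperature=2.0):
    """T^2 * KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    print(kd_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # 0.0: student matches teacher
    print(kd_loss([3.0, 0.0, 0.0], [0.0, 0.0, 3.0]) > 0)  # True: mismatch is penalized
```

The T² factor keeps gradient magnitudes roughly comparable across temperatures when this term is mixed with an ordinary cross-entropy loss.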

More good things for everyone. Public sector appreciator. Tax and welfare policy knower. Hyperinflation doubter.

Account not actively monitored.

I do elections stuff at The Argument (theargumentmag.com) and Split Ticket (https://www.split-ticket.org)

✉️ lakshya@splitticket.org

Associate Professor of Computer Science @University of Massachusetts Amherst | Co-Director of the HCI-VIS Lab. Former Harvard Radcliffe Fellow | Currently on sabbatical @Inria Saclay

Independent news and analysis on the U.S. Supreme Court. This is the official account of scotusblog.com.

Cato Institute, Director of Immigration Studies, Cato's Selz Foundation Chair in Immigration Policy, BEER, not buyer. Cato, not CATO

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

Senior Fellow at the American Immigration Council. Commenting generally on immigration law and policy. Retweets =/= endorsements, views are my own.

Minnesota guy.

"This particular activist will not stop." Sen. Chris Murphy

Senior writer at Slate covering courts and the law. Co-host of the Amicus podcast. Dad.

Waitress turned Congresswoman for the Bronx and Queens. Grassroots elected, small-dollar supported. A better world is possible.

ocasiocortez.com

🎯 Making AI less evil = human-centered + explainable + responsible AI

💼 Harvard Berkman Klein Fellow | CS Prof. @Northeastern | Data & Society

🏢 Prev-Georgia Tech, {Google, IBM, MSFT}Research

🔬 AI, HCI, Philosophy

☕ F1, memes

🌐 upolehsan.com

Working towards the safe development of AI for the benefit of all at Université de Montréal, LawZero and Mila.

A.M. Turing Award Recipient and most-cited AI researcher.

https://lawzero.org/en

https://yoshuabengio.org/profile/

Associate Professor at Northeastern University and father of 3. Interests include artificial intelligence, reinforcement learning, and robotics (he/him).

PhD candidate in CS at Northeastern University | NLP + HCI for health | she/her 🏃‍♀️🧅🌈

PhD (in progress) @ Northeastern! NLP 🤝 LLMs

she/her

Associate Professor at the Computational Health Informatics Program of Boston Children's Hospital, and Department of Pediatrics at Harvard Medical School. Research interests include natural language processing for biomedical texts, multi-modal ML.

Assoc. Prof in CS @ Northeastern, NLP/ML & health & etc. He/him.