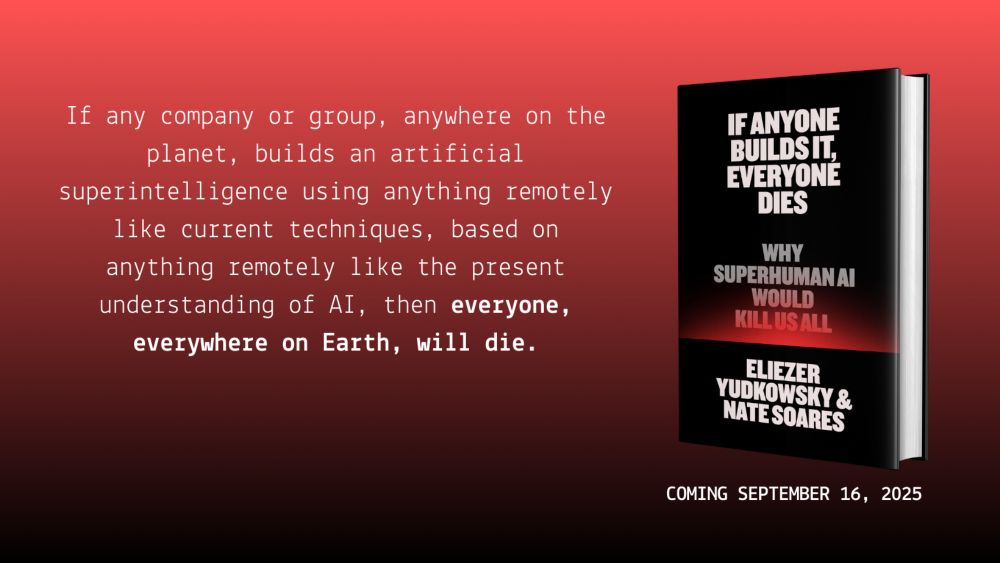

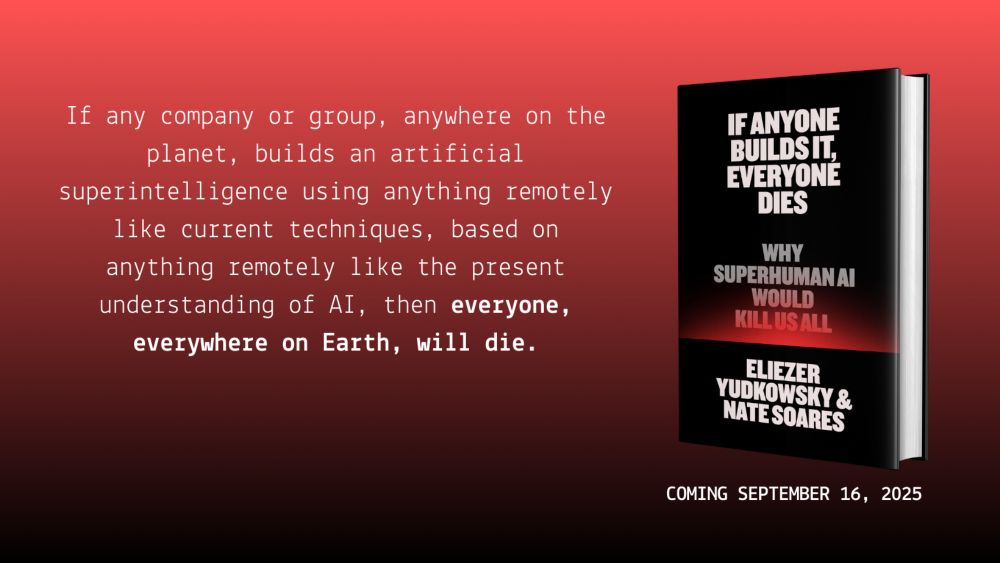

We've been getting some pretty awesome blurbs for Eliezer and Nate's forthcoming book: If Anyone Builds It, Everyone Dies

More details here: www.lesswrong.com/posts/khmpWJ...

One of my favorite reactions, from someone who works on AI policy in DC:

19.06.2025 20:52

YouTube video by Win-Win with Liv Boeree

Superintelligent AI - Our Best or Worst Idea?

Really enjoyed chatting with @anthonyaguirre.bsky.social, @livboeree.bsky.social, and the folks who came out for the Win-Win podcast's second-ever IRL event in Austin. Great audience with lots of good and tough questions.

Thanks for putting it on!

www.youtube.com/watch?v=XWZg...

21.05.2025 21:27

If Anyone Builds It, Everyone Dies

The scramble to create superhuman AI has put us on the path to extinction—but it's not too late to change course, as two of the field's earliest researchers explain in this clarion call for humanity.

📢 Announcing IF ANYONE BUILDS IT, EVERYONE DIES

A new book from MIRI co-founder Eliezer Yudkowsky and president Nate Soares, published by @littlebrown.bsky.social.

🗓️ Out September 16, 2025

Visit the website to learn more and preorder the hardcover, ebook, or audiobook.

14.05.2025 16:59

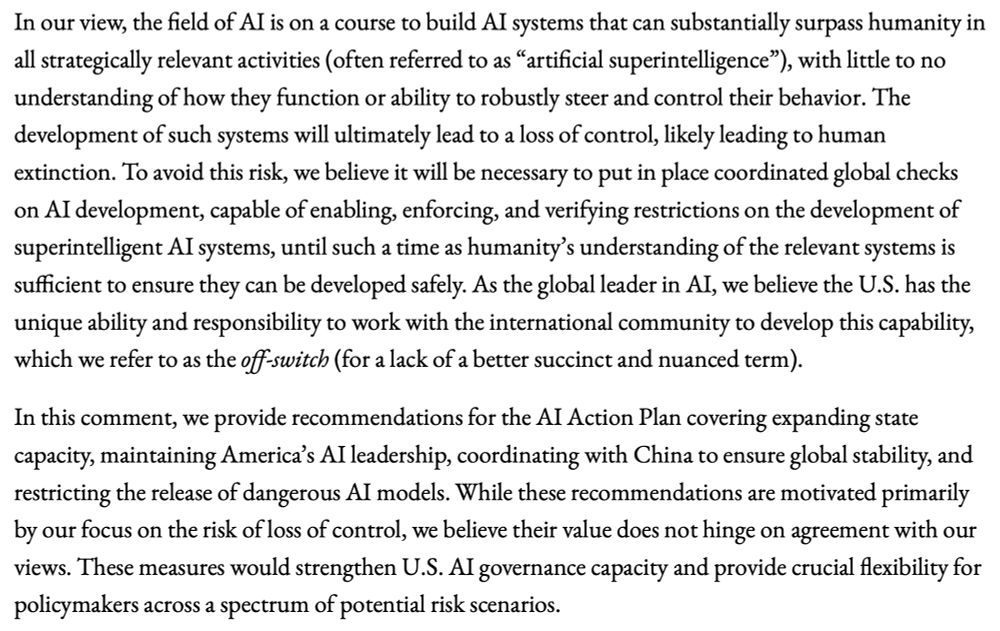

Big thanks to @pbarnett.bsky.social, @aaronscher.bsky.social, and the rest of our TechGov team at MIRI for their hard work putting this together, as well as the huge number of folks who read the earlier drafts and provided thoughtful feedback.

01.05.2025 22:28

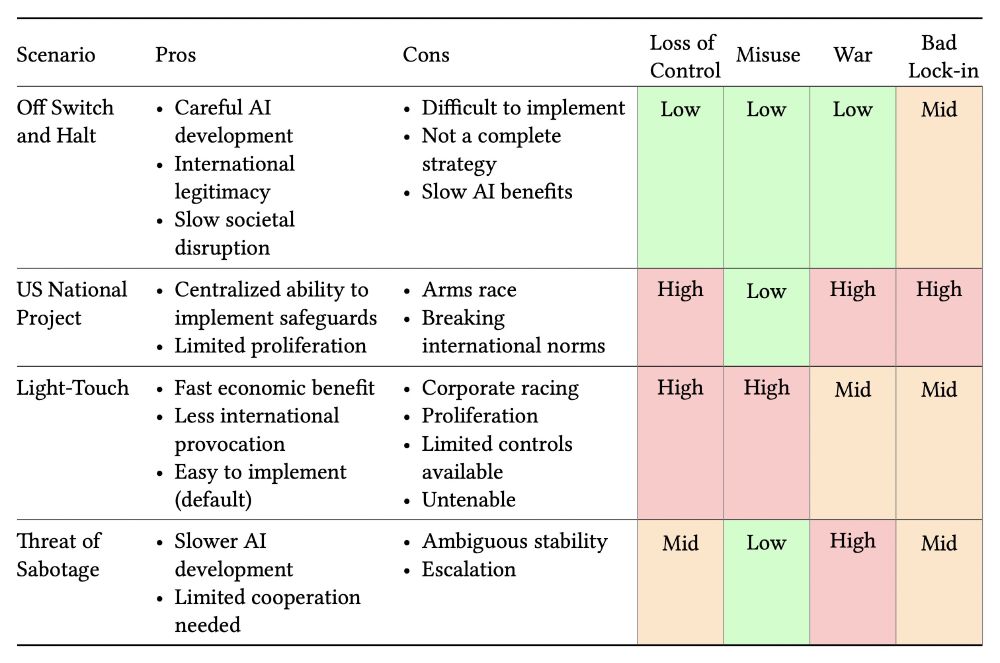

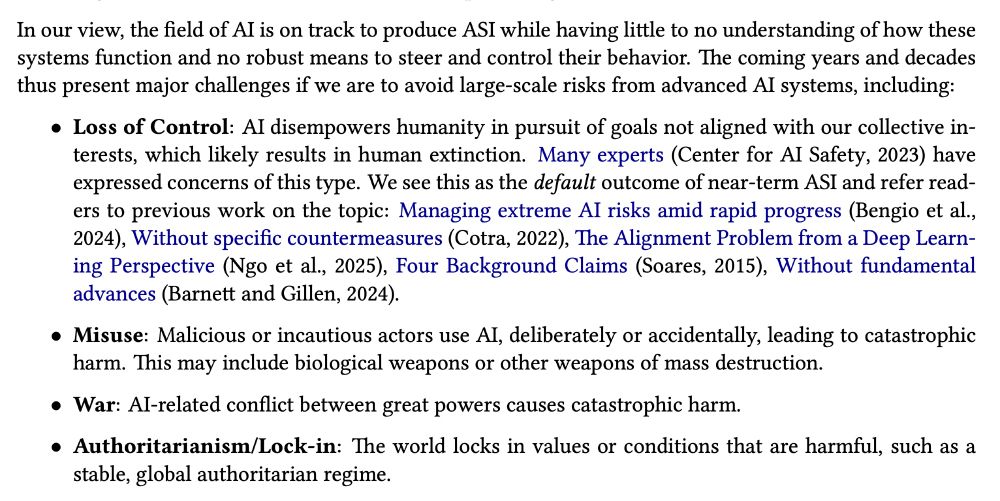

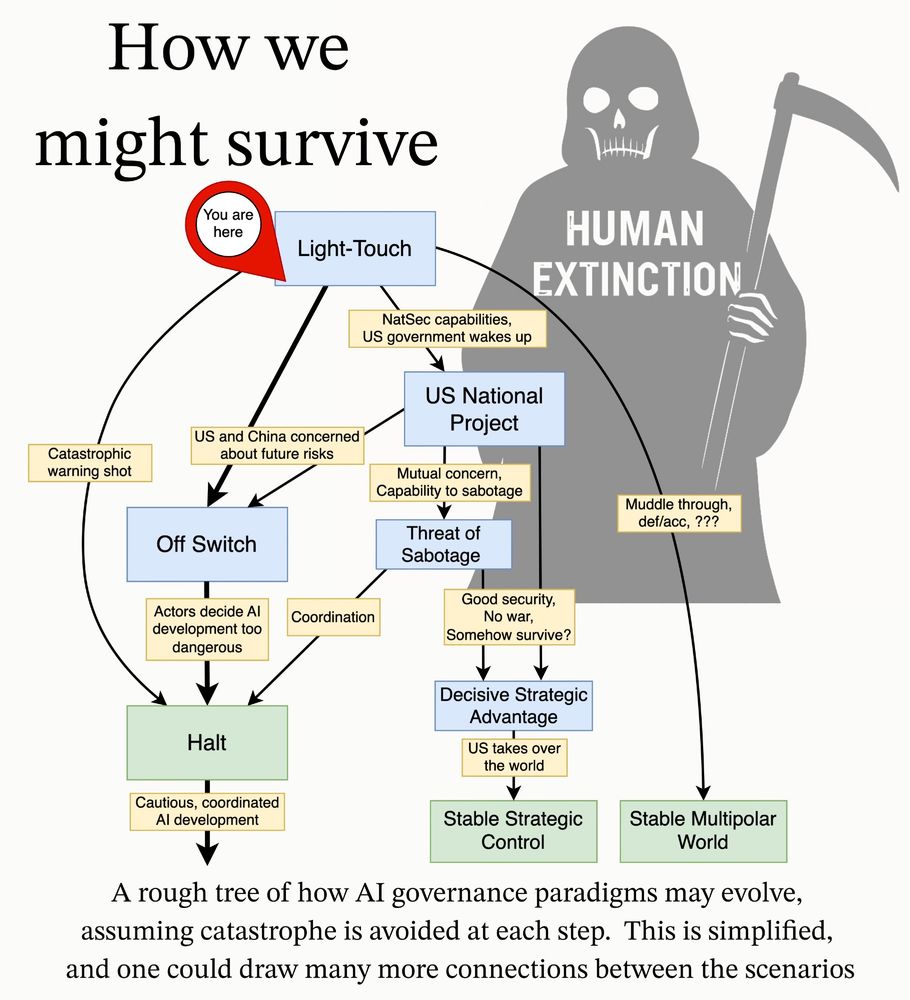

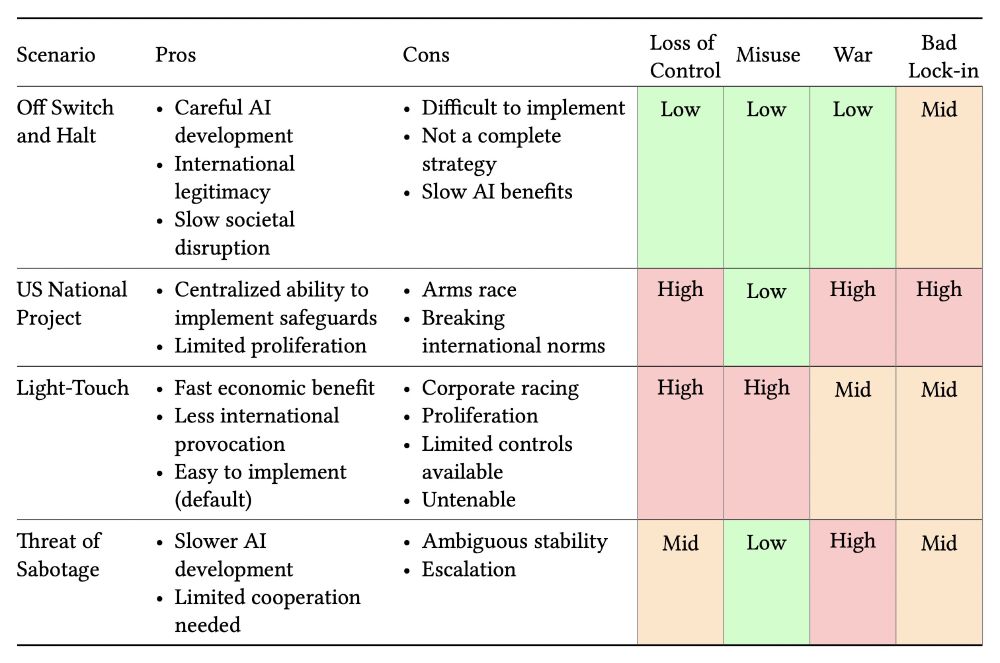

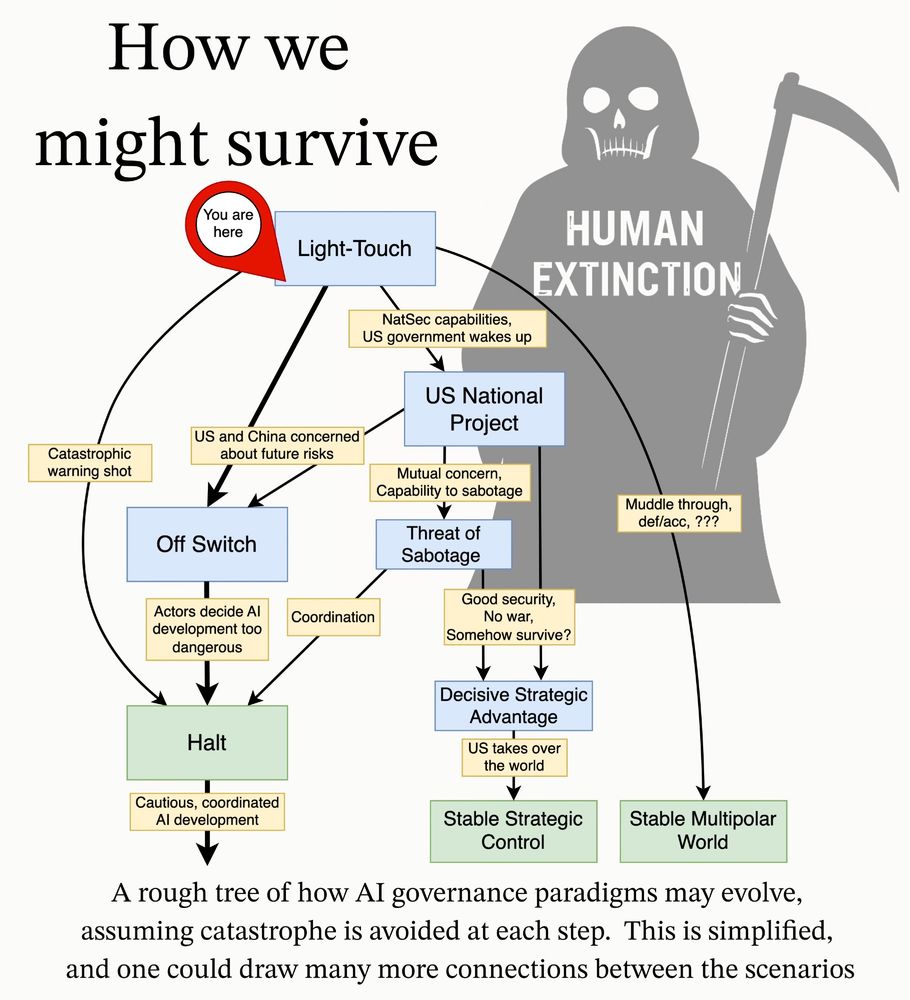

In another scenario, described in Superintelligence Strategy, nations keep each other’s AI development in check by threatening to sabotage any destabilizing AI progress. However, visibility and sabotage capability may not be good enough, so this regime may not be stable. 9/10

01.05.2025 22:28

Alternatively, the US government may largely leave the development of advanced AI to companies. This risks proliferating dangerous AI capabilities to malicious actors, faces similar risks to the US National Project, and overall seems extremely unstable. 8/10

01.05.2025 22:28

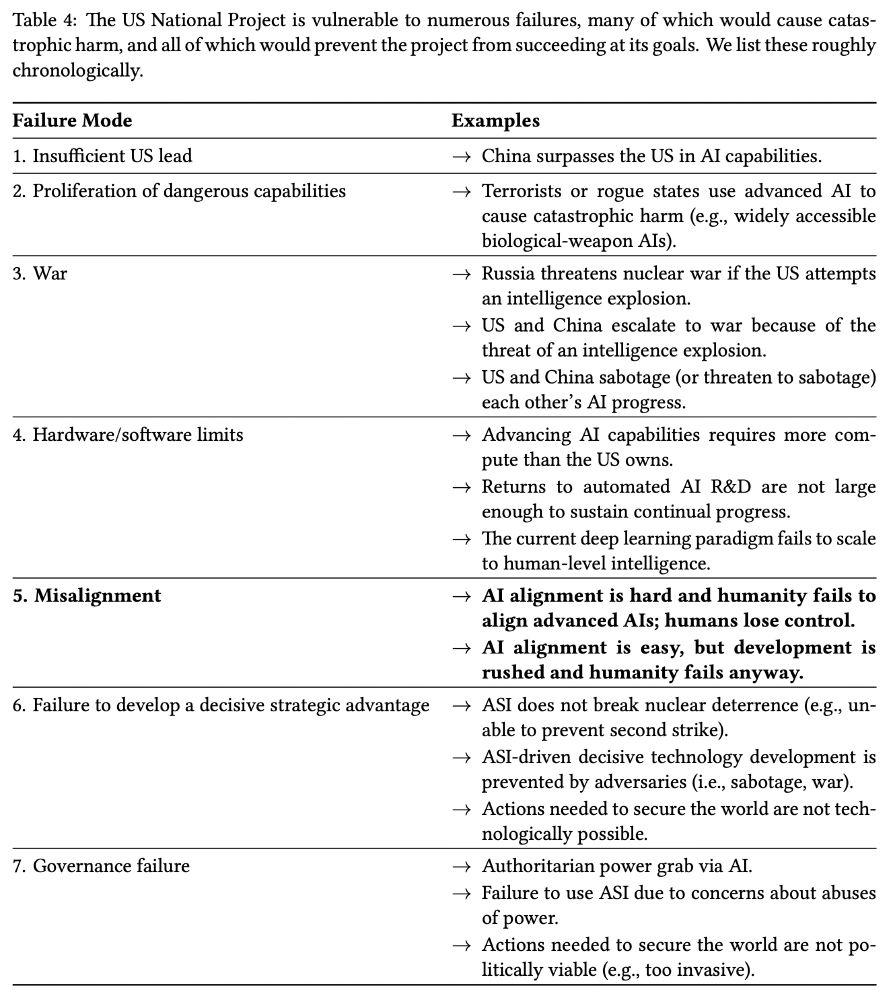

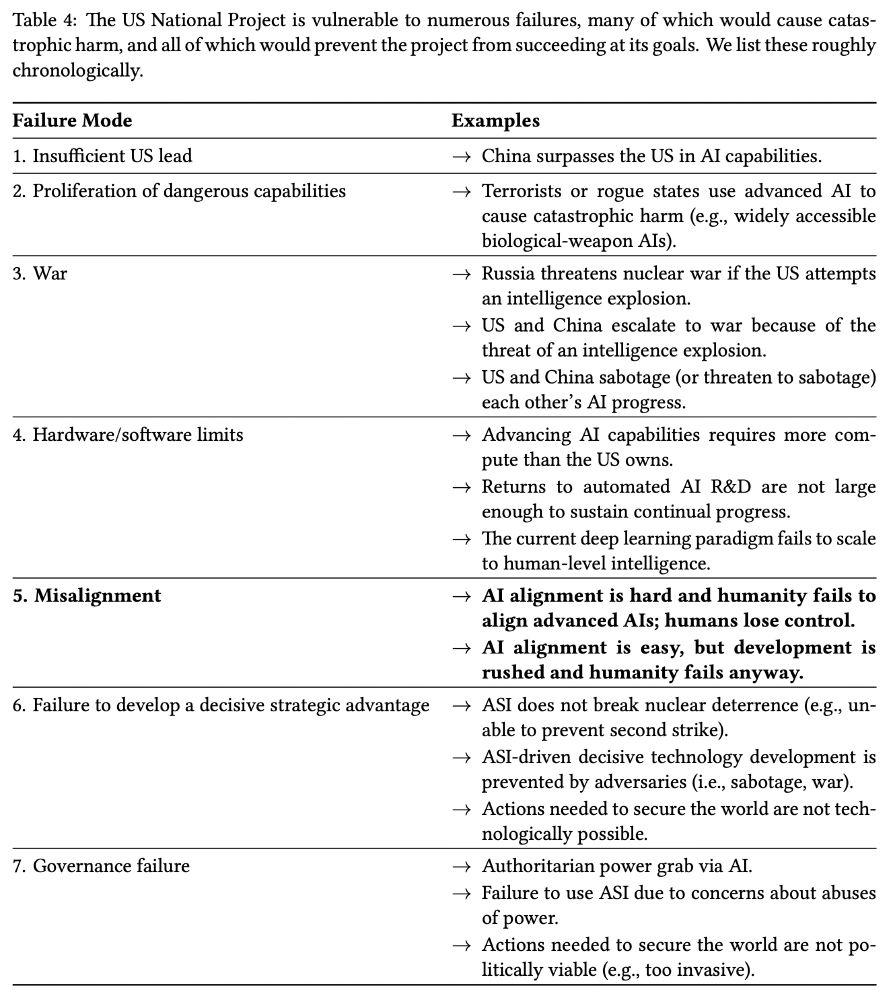

Another scenario we explore is a US National Project—the US races to build superintelligence, with the goal of achieving a decisive strategic advantage globally. This risks both loss of control to AI and increased geopolitical conflict, including war. 7/10

01.05.2025 22:28

We focus on an off switch because we believe halting frontier AI development will be crucial to preventing loss of control. Even those skeptical of loss-of-control risks should value building an off switch, since it would be a valuable tool for reducing dual-use and misuse risks, among others. 6/10

01.05.2025 22:28

Our favored scenario involves building the technical, legal, and institutional infrastructure required to internationally restrict dangerous AI development and deployment, preserving optionality for the future. We refer to this as an “off switch.” 5/10

01.05.2025 22:28

In the research agenda, we lay out four scenarios for the geopolitical response to advanced AI in the coming years. For each scenario, we lay out research questions that, if answered, would provide important insight on how to successfully reduce catastrophic and extinction risks. 4/10

01.05.2025 22:28

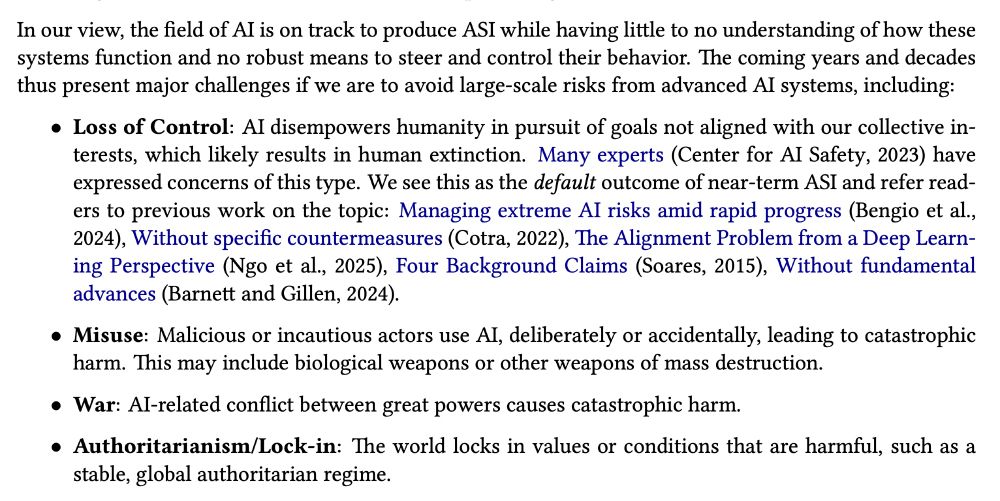

The current trajectory of AI development looks pretty rough, likely resulting in catastrophe. As AI becomes more capable, we will face risks of loss of control, human misuse, geopolitical conflict, and authoritarian lock-in. 3/10

01.05.2025 22:28

Most people don’t seem to understand how wild the coming few years could be. AI development, as fast as it is now, could quickly accelerate due to automation of AI R&D. Many actors, including governments, may think that if they control AI, they control the future. 2/10

01.05.2025 22:28

New AI governance research agenda from MIRI’s TechGov Team. We lay out our view of the strategic landscape and actionable research questions that, if answered, would provide important insight on how to reduce catastrophic and extinction risks from AI. 🧵1/10

techgov.intelligence.org/research/ai-...

01.05.2025 22:28

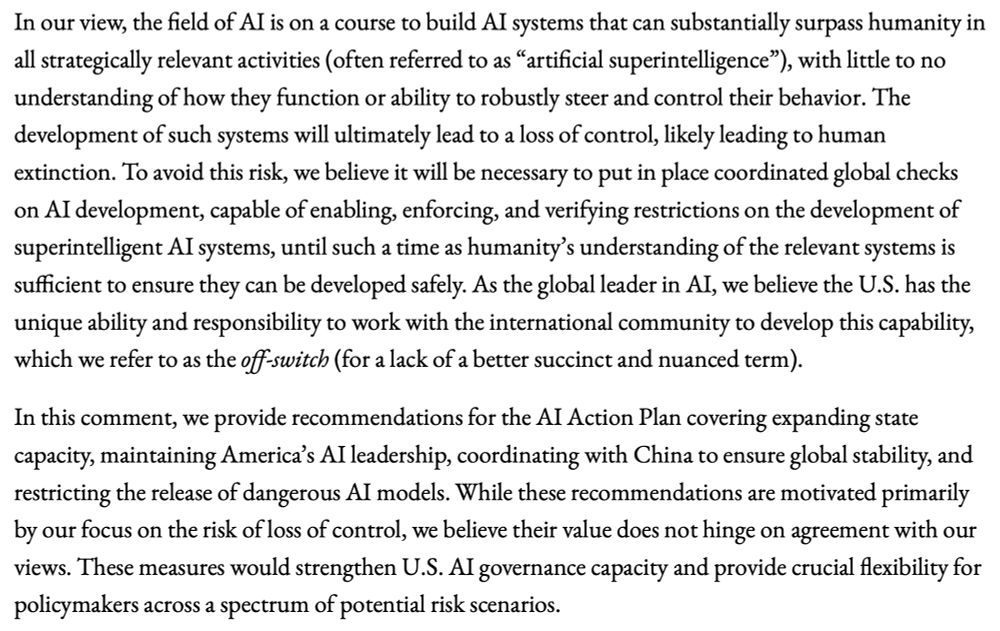

MIRI's (@intelligence.org) Technical Governance Team submitted a comment on the AI Action Plan.

Great work by David Abecassis, @pbarnett.bsky.social, and @aaronscher.bsky.social

Check it out here: techgov.intelligence.org/research/res...

18.03.2025 22:38