Computer security experts, national security professionals, and Grimes come together to say: "If Anyone Builds It, Everyone Dies" is WEIRDLY GOOD.

I'm dying to hear people's reactions to the book; please spam me with your thoughts as you read it.

@robbensinger.bsky.social

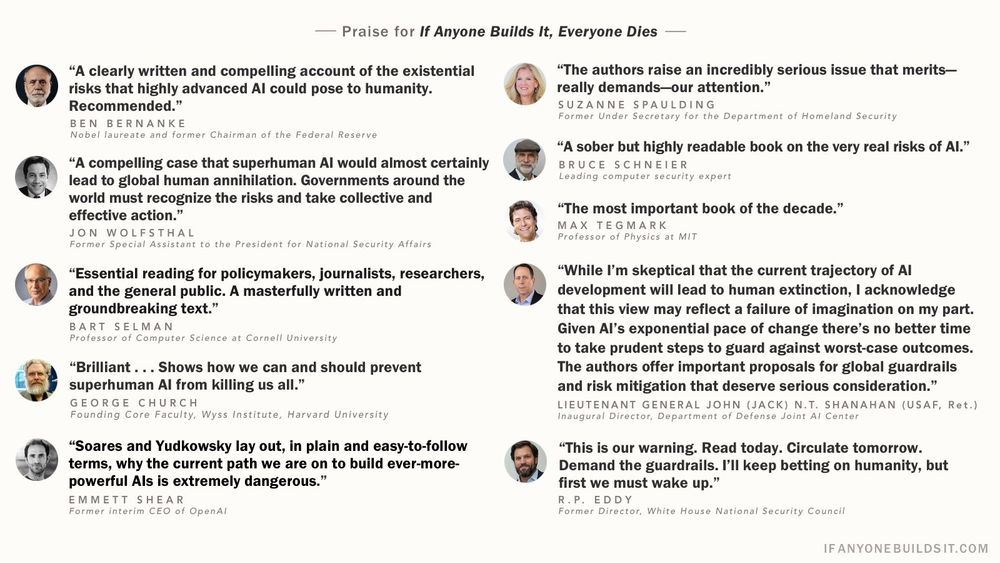

Serious slate of endorsements here!

21.06.2025 21:51

You can pre-order here: ifanyonebuildsit.com#preorder

21.06.2025 17:41

Senior White House officials, a retired three-star general, a Nobel laureate, and others come out to say that you should probably read Eliezer Yudkowsky and Nate Soares' "If Anyone Builds It, Everyone Dies".

21.06.2025 17:40

We've been getting some pretty awesome blurbs for Eliezer and Nate's forthcoming book, "If Anyone Builds It, Everyone Dies".

More details here: www.lesswrong.com/posts/khmpWJ...

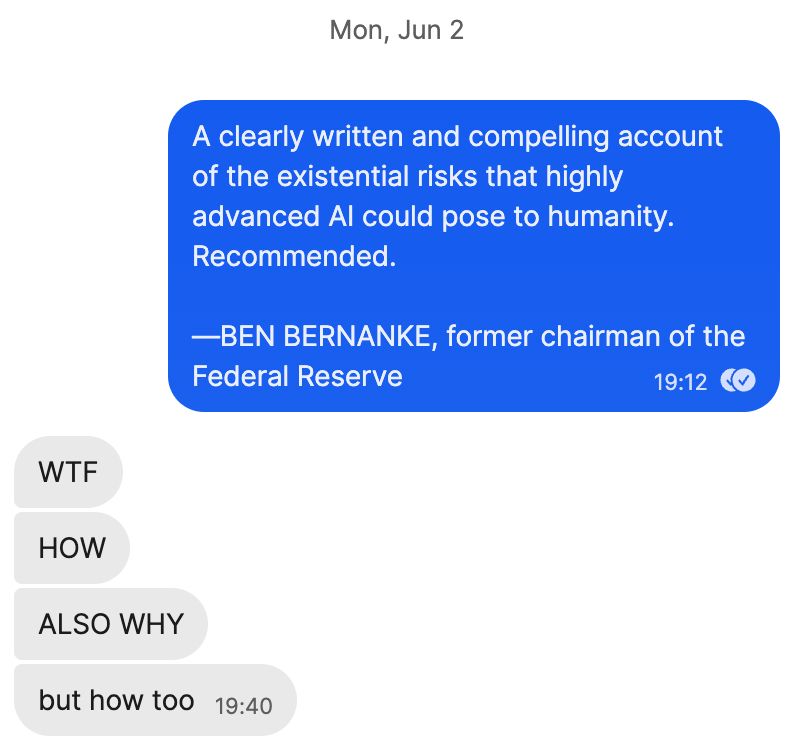

One of my favorite reactions, from someone who works on AI policy in DC:

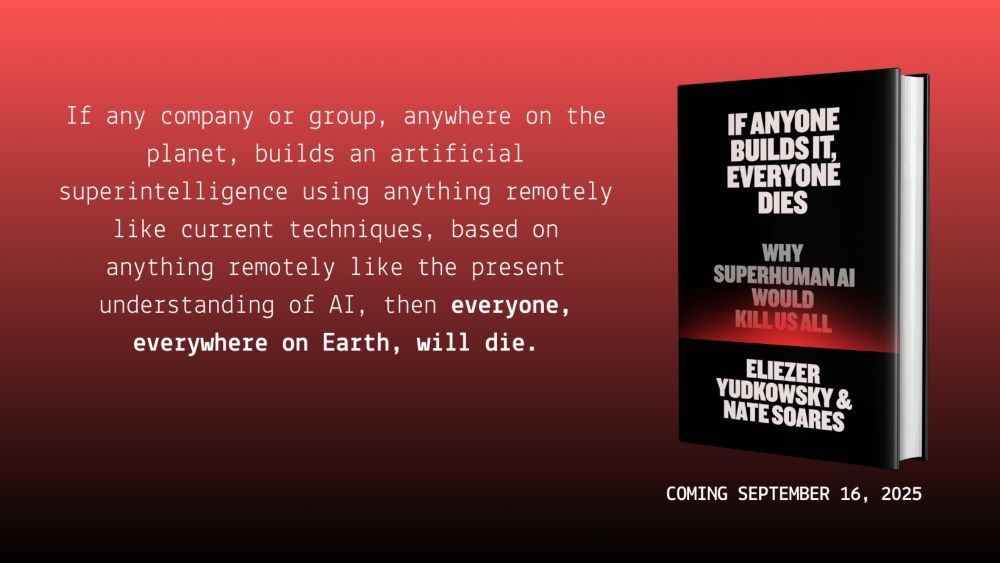

📢 Announcing IF ANYONE BUILDS IT, EVERYONE DIES

A new book from MIRI co-founder Eliezer Yudkowsky and president Nate Soares, published by @littlebrown.bsky.social.

🗓️ Out September 16, 2025

Visit the website to learn more and preorder the hardcover, ebook, or audiobook.

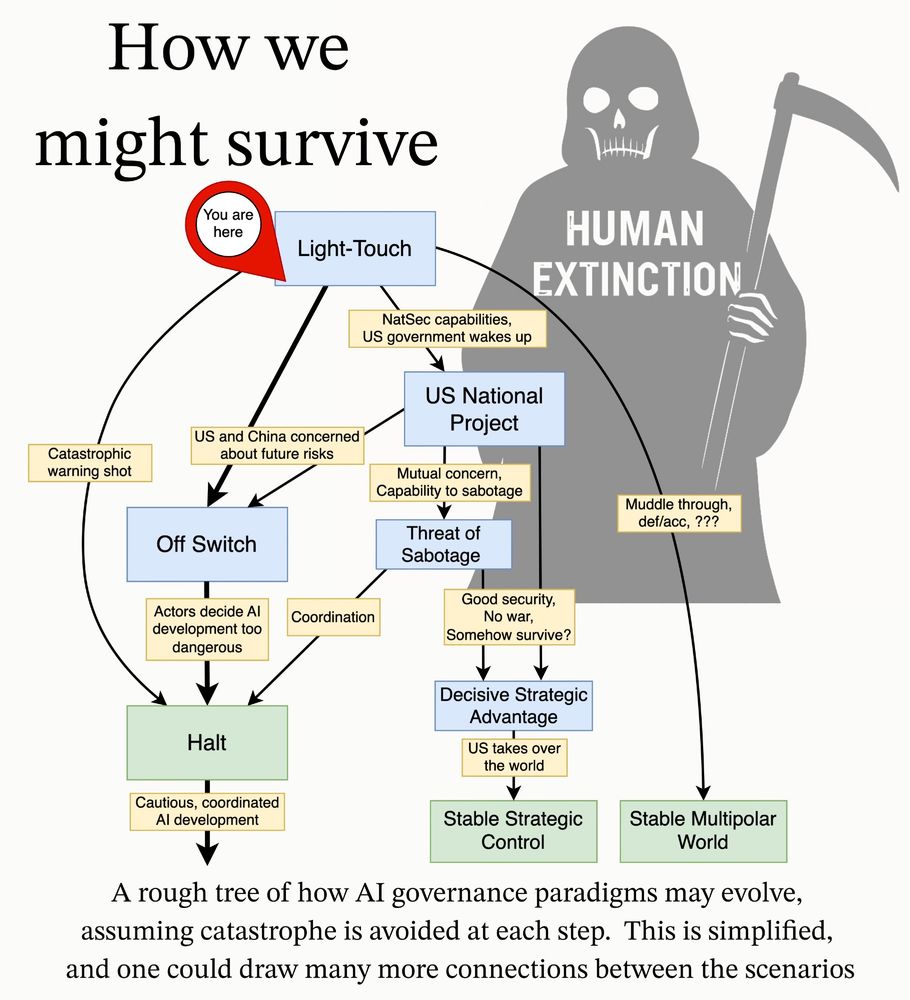

New AI governance research agenda from MIRI’s TechGov Team. We lay out our view of the strategic landscape and actionable research questions that, if answered, would provide important insight on how to reduce catastrophic and extinction risks from AI. 🧵1/10

techgov.intelligence.org/research/ai-...