We’re presenting WeatherWeaver at #ICCV2025, Poster Session 3 (Oct 22, Wed, 10:45–12:45)!

Come visit #337 and see how we make it snow in Hawaii 🏝️❄️⛄

Chih-Hao Lin

@chih-hao.bsky.social

https://chih-hao-lin.github.io/

Finally, meet your #3DV2026 Publicity Chairs! 📢

@hanwenjiang1 @yanxg.bsky.social @chih-hao.bsky.social @csprofkgd.bsky.social

We’ll keep the 3DV conversation alive: posting updates, refreshing the website, and listening to your feedback.

Got questions or ideas? Tag @3dvconf.bsky.social anytime!

Introducing your #3DV2026 📝Publication Chairs &🔍Research Interaction Chairs!

📝Publication Chairs ensure accepted papers are properly published in the conference proceedings

🔍Research Interaction Chairs encourage engagement by spotlighting exceptional research in 3D vision

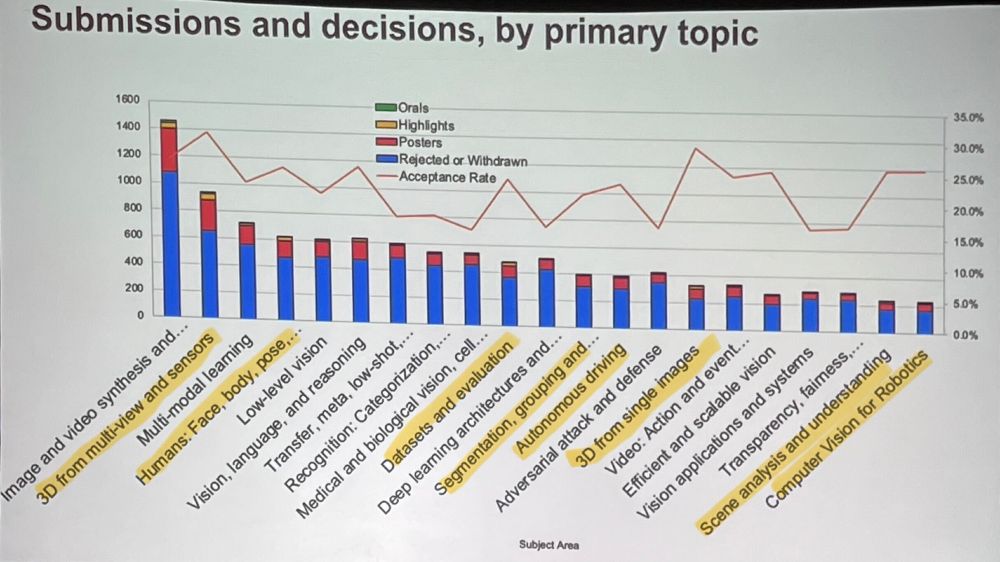

Understanding and reconstructing the 3D world are at the heart of computer vision and graphics. At #CVPR2025, we’ve seen many exciting works in 3D vision.

If you're pushing the boundaries, please consider submitting your work to #3DV2026 in Vancouver! (Deadline: Aug. 18, 2025)

This project was part of my internship at Meta, and it was a great collaboration with Jia-Bin, Zhengqin, Zhao, Christian, Tuotuo, Michael, Johannes, Shenlong, and Changil 🙌

10.06.2025 02:46

📍 Meet us at #CVPR2025

🗓️ June 13 (Fri.), 10:30–12:30

🪧 Come by our poster session to chat about IRIS and any research ideas

Looking forward to reconnecting — and meeting new friends — in Nashville! 🎸✨

Please check out our paper, code, and more demo videos!

🌐 Project page: irisldr.github.io

💻 GitHub: github.com/facebookrese...

📝 Paper: arxiv.org/abs/2401.12977

Excited to share our work at #CVPR2025!

👁️IRIS estimates accurate surface material, spatially-varying HDR lighting, and camera response function given a set of LDR images! It enables realistic, view-consistent, and controllable relighting and object insertion.

(links in 🧵)

I’m thrilled to share that I will be joining Johns Hopkins University’s Department of Computer Science (@jhucompsci.bsky.social, @hopkinsdsai.bsky.social) as an Assistant Professor this fall.

02.06.2025 19:46

Photo of Vancouver

📢 3DV 2026 – Call for Papers is Out!

📝 Paper Deadline: Aug 18

🎥 Supplementary: Aug 21

🔗 3dvconf.github.io/2026/call-fo...

📅 Conference Date: Mar 20–23, 2026

🌆 Location: Vancouver 🇨🇦

🚀 Showcase your latest research to the world!

#3DV2026 #CallForPapers #Vancouver #Canada

🔊 New NVIDIA paper: Audio-SDS 🔊

We repurpose Score Distillation Sampling (SDS) for audio, turning any pretrained audio diffusion model into a tool for diverse tasks, including source separation, impact synthesis & more.

🎧 Demos, audio examples, paper: research.nvidia.com/labs/toronto...

🧵below

This work is a great collaboration at NVIDIAAI by Chih-Hao Lin, Zian Wang, Ruofan Liang, Yuxuan Zhang, Sanja Fidler, Shenlong Wang, Zan Gojcic

🌐Please check out our project page: research.nvidia.com/labs/toronto...

The weather removal model successfully removes both transient effects (e.g., rain, snowflakes) and persistent effects (e.g., puddles, snow cover), and can even restore sunny-day lighting from rainy or snowy videos.

02.05.2025 14:19

WeatherWeaver combines two video diffusion models. The weather synthesis model generates realistic, temporally consistent weather, adapting shading naturally while preserving the original scene structure.

02.05.2025 14:19

By combining and adjusting multiple weather effects, WeatherWeaver can simulate complex weather transitions, e.g., on 🌧️ rainy and ☃️ snowy days, without costly real-world acquisitions.

02.05.2025 14:19

WeatherWeaver enables precise control of each weather effect by adjusting its intensity. 🌤️➡️🌥️

02.05.2025 14:19

We train a video diffusion model to edit weather effects with precise control, using a novel data strategy combining synthetic videos, generative image editing, and auto-labeled real-world videos.

02.05.2025 14:19

Realistic, controllable weather simulation opens new possibilities in 🚗 autonomous driving simulation and 🎬 filmmaking. Physics-based simulation requires accurate geometry and doesn’t scale to in-the-wild videos, while existing video editing often lacks realism and control.

02.05.2025 14:19

What if you could control the weather in any video, just like applying a filter?

Meet WeatherWeaver, a video model for controllable synthesis and removal of diverse weather effects — such as 🌧️ rain, ☃️ snow, 🌁 fog, and ☁️ clouds — for any input video.

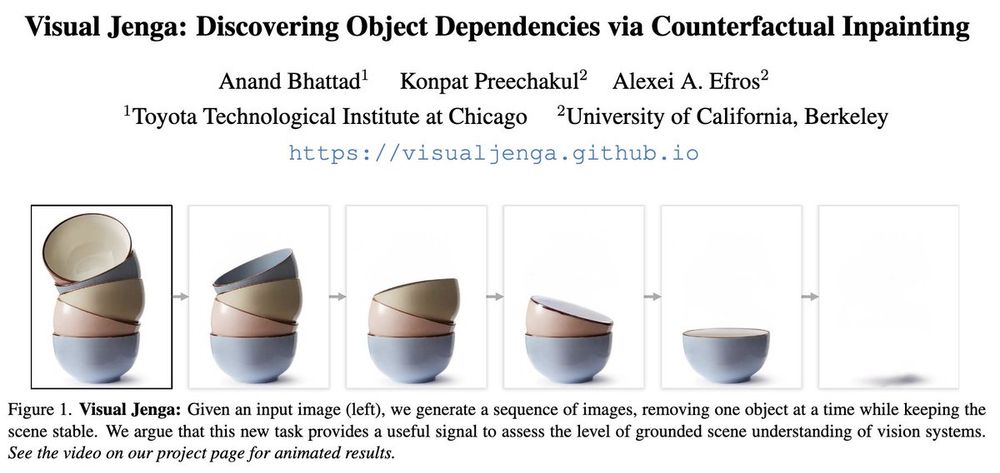

[1/10] Is scene understanding solved?

Models today can label pixels and detect objects with high accuracy. But does that mean they truly understand scenes?

Super excited to share our new paper and a new task in computer vision: Visual Jenga!

📄 arxiv.org/abs/2503.21770

🔗 visualjenga.github.io

Check out our cool demos. The code is also open-source!

Project website: haoyuhsu.github.io/autovfx-webs...

Code (GitHub): github.com/haoyuhsu/aut...

Paper: arxiv.org/abs/2411.02394

🎬Imagine creating professional visual effects (VFX) with just words! We are excited to introduce AutoVFX, a framework that creates realistic video effects from natural language instructions!

This is a cool project led by Hao-Yu, and we will present it at #3DV 2025!

Can we create realistic renderings of urban scenes from a single video while enabling controllable editing: relighting, object compositing, and nighttime simulation?

Check out our #3DV2025 UrbanIR paper, led by @chih-hao.bsky.social, which does exactly this.

🔗: urbaninverserendering.github.io

Check out UrbanIR - Inverse rendering of unbounded scenes from a single video!

It’s a super cool project led by the amazing Chih-Hao!

@chih-hao.bsky.social is a rising star in 3DV! Follow him!

Learn more here👇

🙏 Huge thanks to our amazing collaborators from UIUC & UMD: Bohan, Yi-Ting, Kuan-Sheng, David, Jia-Bin (@jbhuang0604.bsky.social), Anand (@anandbhattad.bsky.social), and Shenlong. This work wouldn't be possible without you all.

15.03.2025 06:30

📢 Meet us at 3DV 2025 in Singapore!

We’re excited to present UrbanIR at 3DV 2025 (@3dvconf.bsky.social). Come chat with us about future directions!

Check out our interactive demos, and the code is open-source!

Project website: urbaninverserendering.github.io

Code (GitHub): github.com/chih-hao-lin...

Paper: arxiv.org/abs/2306.09349

UrbanIR precisely controls the lighting, simulating different times of day without time-consuming tripod captures.

15.03.2025 06:30

With the estimated scene properties, UrbanIR integrates a physically-based shading model into a neural field, rendering realistic videos from novel viewpoints and under novel lighting conditions.

15.03.2025 06:30

🔍 How does it work?

UrbanIR reconstructs scene properties—geometry, albedo, and shading—through inverse rendering. Since this is a highly ill-posed problem, we leverage 2D priors to guide the optimization.