Quick reminder about the EPFL PhD program deadline (EDIC) on Dec 15.

27.11.2025 10:14 — 👍 4 🔁 2 💬 0 📌 0

You can dump the PTX intermediate representation (see the documentation), but figuring out the kernel's calling convention for your own use will be tricky. The system is not designed to be used this way.

22.08.2025 10:36 — 👍 1 🔁 0 💬 0 📌 0

Just write your solver in plain CUDA. How hard can it be? 😛

18.08.2025 19:33 — 👍 3 🔁 0 💬 1 📌 0

This approach is restricted to software that only needs the CUDA driver. If your project uses cuSolver, you will likely need to depend on the CUDA Python package that ships this library on PyPI (similar to PyTorch et al.).

18.08.2025 19:09 — 👍 2 🔁 0 💬 1 📌 0

Differentiable rendering has transformed graphics and 3D vision, but what about other fields? Our SIGGRAPH 2025 paper introduces misuka, the first fully differentiable path tracer for acoustics.

12.08.2025 19:26 — 👍 82 🔁 14 💬 1 📌 0

Wasn’t that something... Flocke (German for “flake”) says hi!

15.08.2025 04:45 — 👍 5 🔁 0 💬 1 📌 0

If you are fitting a NeRF and you want a surface out at the end, you should probably be using the idea in this paper.

12.08.2025 06:43 — 👍 1 🔁 0 💬 0 📌 0

Given the focus on performance, I would suggest switching from pybind11 to nanobind. Should just be a tiny change 😇

11.08.2025 15:05 — 👍 5 🔁 0 💬 1 📌 0

For the paper and data, please check out the project page: mokumeproject.github.io

08.08.2025 11:53 — 👍 0 🔁 0 💬 0 📌 0

This video explains the process in more detail: www.youtube.com/watch?v=H6N-...

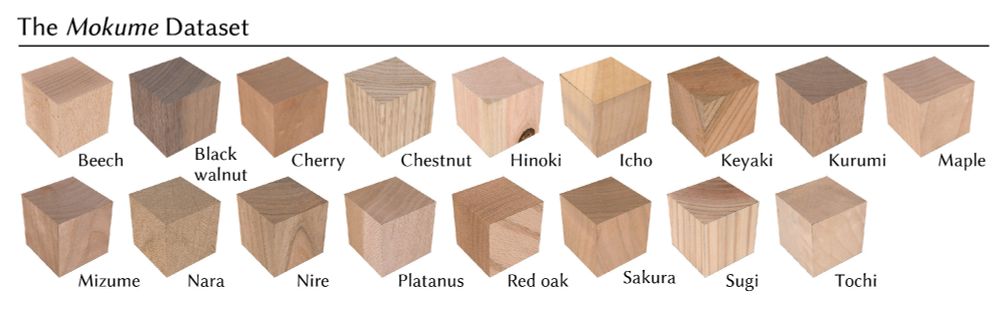

08.08.2025 11:53 — 👍 4 🔁 1 💬 1 📌 0

The Mokume project is a massive collaborative effort led by Maria Larsson at the University of Tokyo (w/ Hodaka Yamaguchi, Ehsan Pajouheshgar, I-Chao Shen, Kenji Tojo, Chia-Ming Chang, Lars Hansson, Olof Broman, Takashi Ijiri, Ariel Shamir, and Takeo Igarashi).

08.08.2025 11:53 — 👍 0 🔁 0 💬 1 📌 0

To reconstruct their interior, we:

1️⃣ Localize annual rings on the cube faces.

2️⃣ Optimize a procedural growth field that assigns an age to every 3D point (when that wood formed during the tree's life).

3️⃣ Synthesize detailed textures via a procedural model or a neural cellular automaton.

08.08.2025 11:53 — 👍 0 🔁 0 💬 1 📌 0
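To give a feel for what a "growth field" means in step 2️⃣, here is a deliberately toy version. It assumes a perfectly straight pith along the z axis and a constant ring width, both hypothetical simplifications. The Mokume pipeline optimizes a much richer procedural field. The age of a point is its distance from the pith divided by the ring width, and annual rings are the places where that age crosses an integer. `RING_WIDTH`, the earlywood fraction, and all function names are illustrative assumptions.

```python
import math

# Hypothetical parameters for illustration only.
PITH_X, PITH_Y = 0.0, 0.0  # pith runs parallel to the z axis through this point
RING_WIDTH = 2.0           # assumed radial growth per year (e.g. in mm)


def age(x: float, y: float, z: float) -> float:
    """Toy growth field: years of growth between the pith and point (x, y, z).

    z is ignored because this toy tree is a perfect cylinder.
    """
    return math.hypot(x - PITH_X, y - PITH_Y) / RING_WIDTH


def ring_index(x: float, y: float, z: float) -> int:
    """Integer year (annual ring) that the point belongs to."""
    return math.floor(age(x, y, z))


def is_earlywood(x: float, y: float, z: float, earlywood_fraction: float = 0.6) -> bool:
    """Assume the first part of each year's growth is lighter earlywood."""
    return age(x, y, z) % 1.0 < earlywood_fraction


def render_face(size: int = 9, spacing: float = 1.0, z: float = 0.0) -> str:
    """ASCII view of a cross-section perpendicular to the pith.

    Earlywood is drawn as '.' and latewood as '#'.
    """
    rows = []
    for j in range(size):
        y = (j - size // 2) * spacing
        rows.append("".join(
            "." if is_earlywood((i - size // 2) * spacing, y, z) else "#"
            for i in range(size)))
    return "\n".join(rows)


print(render_face())
```

The inverse problem in the thread runs the other way. Given ring positions observed on the six faces, you fit the field's parameters so that its level sets reproduce them.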

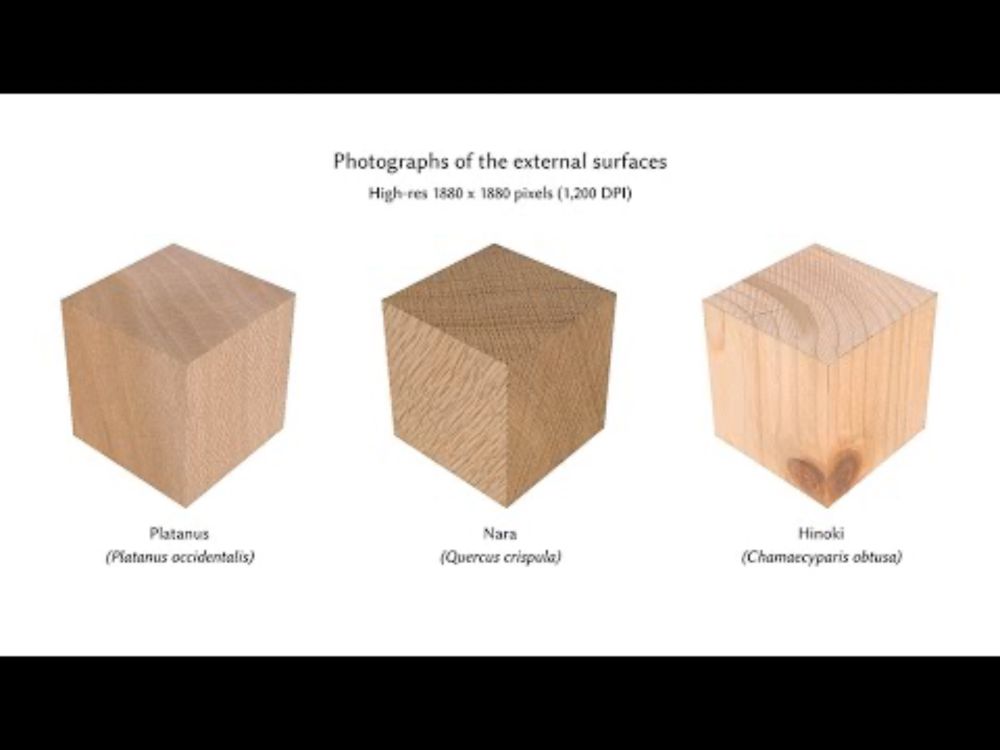

The Mokume dataset consists of 190 physical wood cubes from 17 species, each documented with:

- High-res photos of all 6 faces

- Annual ring annotations

- Photos of slanted cuts for validation

- CT scans revealing the true interior structure (for future use)

Wood textures are everywhere in graphics, but realistic texturing requires knowing what wood looks like throughout its volume, not just on the surfaces.

The patterns depend on tree species, growth conditions, and where and how the wood was cut from the tree.

How can one reconstruct the complete 3D interior of a wood block using only photos of its surfaces? 🪵

At SIGGRAPH'25 (Thursday!), Maria Larsson will present *Mokume*: a dataset of 190 diverse wood samples and a pipeline that solves this inverse texturing challenge. 🧵👇

My lab will be recruiting at all levels: PhD students, postdocs, and a research engineering position (worldwide for PhD/postdoc, EU candidates only for the engineering position). If you're at SIGGRAPH and interested in any of these, I'd love to talk to you.

08.08.2025 08:09 — 👍 12 🔁 7 💬 0 📌 1

The reason is that the "volume" in this paper is always rendered as a surface (without alpha blending) during optimization. Think of it as an end-to-end optimization that accounts for meshing without actually meshing the object at each step.

08.08.2025 08:01 — 👍 3 🔁 0 💬 1 📌 0

To get a triangle mesh out at the end, you will still need a meshing step (e.g., marching cubes). The key difference is that NeRF requires additional optimization and heuristics to create a volume that will ultimately produce a high-quality surface. With this new method, it just works.

08.08.2025 08:01 — 👍 6 🔁 3 💬 1 📌 0

Wow, this is such a cool paper! With a surprisingly small modification to existing NeRF optimization, it gets a really good direct surface reconstruction technique that doesn't require all of the usual mess that meshing a NeRF requires (raymarching, marching cubes, etc.).

08.08.2025 07:39 — 👍 24 🔁 5 💬 1 📌 0

Check out our paper for more details at rgl.epfl.ch/publications...

07.08.2025 12:21 — 👍 9 🔁 1 💬 0 📌 0

This is a joint work with @ziyizh.bsky.social, @njroussel.bsky.social, Thomas Müller, @tizian.bsky.social, @merlin.ninja, and Fabrice Rousselle.

07.08.2025 12:21 — 👍 2 🔁 0 💬 1 📌 0

Our method minimizes the expected loss, whereas NeRF optimizes the loss of the expectation.
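The distinction can be written out for a single ray. The assumptions here are a squared-error loss and ray weights $w(t)$ that integrate to one, and the notation is chosen for illustration rather than taken from the paper. Let $\mathbf{c}(t)$ be the radiance at distance $t$ along the ray and $\mathbf{c}_{\mathrm{ref}}$ the reference pixel color:

```latex
\underbrace{\Big\lVert \int w(t)\,\mathbf{c}(t)\,\mathrm{d}t - \mathbf{c}_{\mathrm{ref}} \Big\rVert^2}_{\text{loss of the expectation (NeRF)}}
\quad\text{vs.}\quad
\underbrace{\int w(t)\,\big\lVert \mathbf{c}(t) - \mathbf{c}_{\mathrm{ref}} \big\rVert^2 \,\mathrm{d}t}_{\text{expected loss}}
= \big\lVert \bar{\mathbf{c}} - \mathbf{c}_{\mathrm{ref}} \big\rVert^2
+ \int w(t)\,\big\lVert \mathbf{c}(t) - \bar{\mathbf{c}} \big\rVert^2 \,\mathrm{d}t,
\qquad \bar{\mathbf{c}} = \int w(t)\,\mathbf{c}(t)\,\mathrm{d}t
```

Under these assumptions, the expected loss is the NeRF loss plus the weighted spread of colors along the ray. It therefore cannot reach zero by blending mismatched points into the right average.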

It generalizes deterministic surface evolution methods (e.g., NvDiffrec) and elegantly handles discontinuities. Future applications include physically based rendering and tomography.

Instead of blending colors along rays and supervising the resulting images, we project the training images into the scene to supervise the radiance field.

Each point along a ray is treated as a surface candidate, independently optimized to match that ray's reference color.
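To make the difference concrete, here is a toy single-ray comparison in plain Python. It assumes squared L2 error and standard front-to-back alpha-compositing weights. This is not the Instant NGP patch or the paper's implementation, only an illustration of the two objectives; all function names are hypothetical.

```python
Color = tuple[float, float, float]


def compositing_weights(alphas: list[float]) -> list[float]:
    """Standard front-to-back alpha compositing: w_i = T_i * alpha_i."""
    weights, transmittance = [], 1.0
    for a in alphas:
        weights.append(transmittance * a)
        transmittance *= 1.0 - a
    return weights


def sq_err(a: Color, b: Color) -> float:
    """Squared L2 distance between two RGB colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def loss_of_expectation(weights: list[float], colors: list[Color], ref: Color) -> float:
    """NeRF-style: blend the colors along the ray, then compare with the reference."""
    blended = tuple(sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3))
    return sq_err(blended, ref)


def expected_loss(weights: list[float], colors: list[Color], ref: Color) -> float:
    """Surface-candidate view: compare each sample with the reference on its own,
    then average the per-sample errors with the same weights."""
    return sum(w * sq_err(c, ref) for w, c in zip(weights, colors))


# A half-transparent black sample in front of an opaque white one, seen against gray.
w = compositing_weights([0.5, 1.0])  # [0.5, 0.5]
samples = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
gray = (0.5, 0.5, 0.5)
print(loss_of_expectation(w, samples, gray), expected_loss(w, samples, gray))
```

The blended color is exactly gray, so the NeRF-style loss is 0.0 even though neither sample is gray. The expected loss is 0.75 because it scores each candidate point individually.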

By changing just a few lines of code, we can adapt existing NeRF frameworks for surface reconstruction.

This patch shows the necessary changes to Instant NGP, which was originally designed for volume reconstruction.

Methods like NeRF and Gaussian Splats model the world as radioactive fog, rendered using alpha blending. This produces great results... but are volumes the only way to get there? 🤔 Our new SIGGRAPH'25 paper directly reconstructs surfaces without heuristics or regularizers.

07.08.2025 12:21 — 👍 104 🔁 23 💬 3 📌 2

It also adds support for function freezing, so that the process of rendering a scene can be captured and cheaply replayed. See mitsuba.readthedocs.io/en/latest/re... for details.

07.08.2025 11:15 — 👍 2 🔁 0 💬 0 📌 0

Mitsuba significantly improves performance on the OptiX backend and adopts SER (Shader Execution Reordering) throughout various integrators. It adds special shapes and an integrator for Gaussian splatting, a sun-sky emitter, and fixes missing partial derivative terms in differentiable integrators.

07.08.2025 11:15 — 👍 3 🔁 0 💬 1 📌 0

Dr.Jit adds matrix operations that compile to tensor core instructions (CUDA) or vector instruction sets like AVX-512, neural network abstractions, grid/permutohedral encodings, function freezing, and shader execution reordering (SER). Many other improvements simplify development. drjit.readthedocs.io/en/stable/ch...

07.08.2025 11:15 — 👍 2 🔁 0 💬 1 📌 0

Dr.Jit+Mitsuba just added support for fused neural networks, hash grids, and function freezing to eliminate tracing overheads. This significantly accelerates optimization and real-time workloads and enables custom Instant NGP and neural material/radiosity/path guiding projects. What will you do with it?

07.08.2025 11:15 — 👍 33 🔁 6 💬 2 📌 2
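For readers new to hash grids, here is a minimal pure-Python sketch of the multiresolution hash encoding idea from Instant NGP. It uses a spatial hash with the primes from that paper and trilinearly interpolates features stored at the eight hashed corners of a grid cell. This is not the Dr.Jit API. The function names, the single scalar feature per entry, and the `n_min`/`growth` defaults are illustrative assumptions. Unlike Instant NGP, this sketch hashes every level instead of indexing coarse levels directly.

```python
import math

# Primes used by the Instant NGP spatial hash (pi_1 = 1).
PRIMES = (1, 2654435761, 805459861)


def spatial_hash(ix: int, iy: int, iz: int, table_size: int) -> int:
    """XOR the coordinate-prime products, then reduce modulo the table size.

    With a power-of-two table size this matches wrapping 32-bit arithmetic.
    """
    h = (ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])
    return h % table_size


def encode(p: tuple[float, float, float], table: list[float], resolution: int) -> float:
    """Trilinearly interpolate the hashed corner features of the cell containing p.

    p is assumed to lie in [0, 1]^3; this is a single level with one feature.
    """
    scaled = [c * resolution for c in p]
    base = [math.floor(s) for s in scaled]
    frac = [s - b for s, b in zip(scaled, base)]
    value = 0.0
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                weight = ((frac[0] if dx else 1.0 - frac[0])
                          * (frac[1] if dy else 1.0 - frac[1])
                          * (frac[2] if dz else 1.0 - frac[2]))
                idx = spatial_hash(base[0] + dx, base[1] + dy, base[2] + dz, len(table))
                value += weight * table[idx]
    return value


def multires_encode(p, tables, n_min=16, growth=1.5):
    """One feature per level, with resolutions growing geometrically: floor(n_min * growth**l)."""
    return [encode(p, t, math.floor(n_min * growth ** l)) for l, t in enumerate(tables)]
```

In a real system the tables are trainable parameters, and the concatenated per-level features feed a small MLP. Hash collisions are tolerated rather than resolved.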

**Overprinting with Tomographic Volumetric Additive Manufacturing**

Imagine 3D printing directly onto an object that already exists.

With Tomographic Volumetric Additive Manufacturing (TVAM), we can now print over existing components, opening up a world of possibilities for multi-component devices.