Percepta | A General Catalyst Transformation Company

Transforming critical institutions using applied AI. Let's harness the frontier.

We're finally out of stealth: percepta.ai

We're a research / engineering team working together in industries like health and logistics to ship ML tools that drastically improve productivity. If you're interested in ML and RL work that matters, come join us 😀

02.10.2025 15:35 — 👍 118 🔁 18 💬 7 📌 2

We've hired some *fantastic* researchers but our startup is still looking for 2-3 more people with skills in ML/RL/LLMs. If you'd like to work on some transformative applied problems, hit me up. We'll be launching publicly soon too...

23.09.2025 17:31 — 👍 37 🔁 8 💬 0 📌 0

YouTube video by Jia-Bin Huang

How AI Taught Itself to See [DINOv3]

How AI Taught Itself to See

Self-supervised learning is fascinating! How can AI learn from images only without labels?

In this video, we’ll build the method from first principles and uncover the key ideas behind CLIP, MAE, SimCLR, and DINO (v1–v3).

Video link: youtu.be/oGTasd3cliM

16.09.2025 23:13 — 👍 13 🔁 2 💬 0 📌 0

Putting the final touches on your submission? Just a few days left to enter the @iccv.bsky.social NAVSIM Challenge! Deadline: September 20. $8K in prizes and several travel grants are on the line!

15.09.2025 14:02 — 👍 1 🔁 0 💬 0 📌 0

CS208: Canon of Computer Science, Spring 2010

Found this marvelous little course full of readings to trace the evolution of computer science and its canonical ideas

graphics.stanford.edu/courses/cs20...

14.09.2025 19:58 — 👍 46 🔁 4 💬 2 📌 0

Our Principal Investigators Antonio Orvieto, Celestine Mendler-Dünner, Maximilian Dax, Rediet Abebe, @shiweiliu.bsky.social, T. Konstantin Rusch, and @wielandbrendel.bsky.social are looking for motivated students interested in internships.

Apply here: docs.google.com/forms/d/e/1F...

11.09.2025 09:53 — 👍 2 🔁 2 💬 1 📌 1

Announcing the @iccv.bsky.social NAVSIM Challenge! What's new? We're testing not only on real recordings, but also perturbed futures generated from the real ones via pseudo-simulation! $8K in prizes + several $1.5k travel grants. Submit by September 20! opendrivelab.com/challenge2025/ 🧵👇

01.09.2025 09:14 — 👍 14 🔁 9 💬 1 📌 1

Let's push for the obvious solution: Dear @neuripsconf.bsky.social! Allow authors to present accepted papers at EurIPS instead of NeurIPS rather than just additionally. Likely, at least 500 papers would move to Copenhagen, problem solved.

28.08.2025 19:19 — 👍 56 🔁 15 💬 2 📌 0

🚀 Introducing our new paper, MDPO: Overcoming the Training-Inference Divide of Masked Diffusion Language Models.

📄 Paper: www.scholar-inbox.com/papers/He202...

arxiv.org/pdf/2508.13148

💻 Code: github.com/autonomousvi...

🌐 Project Page: cli212.github.io/MDPO/

20.08.2025 09:30 — 👍 12 🔁 8 💬 1 📌 2

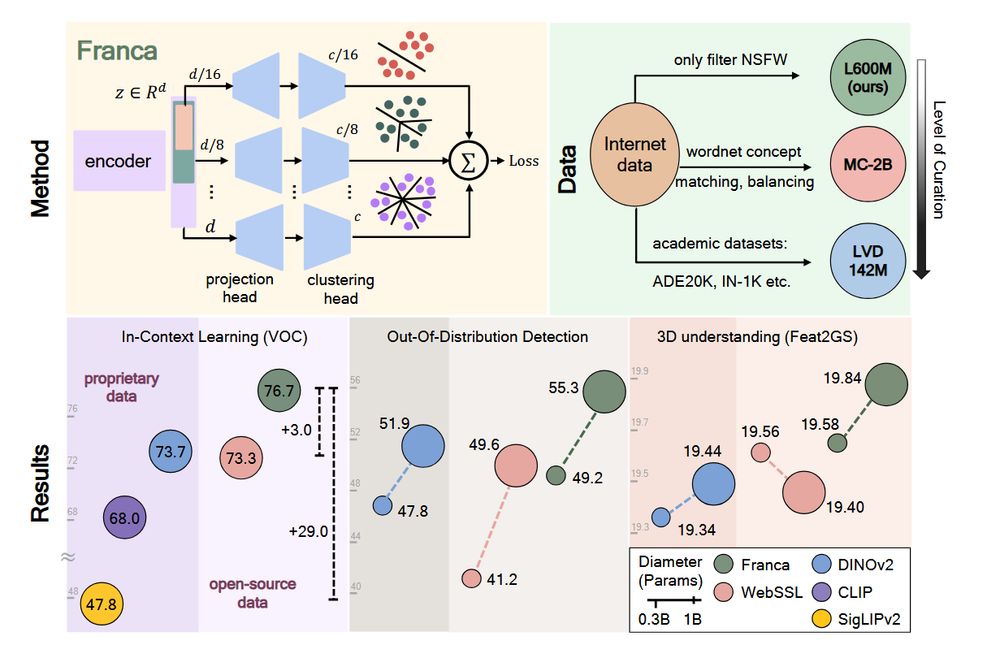

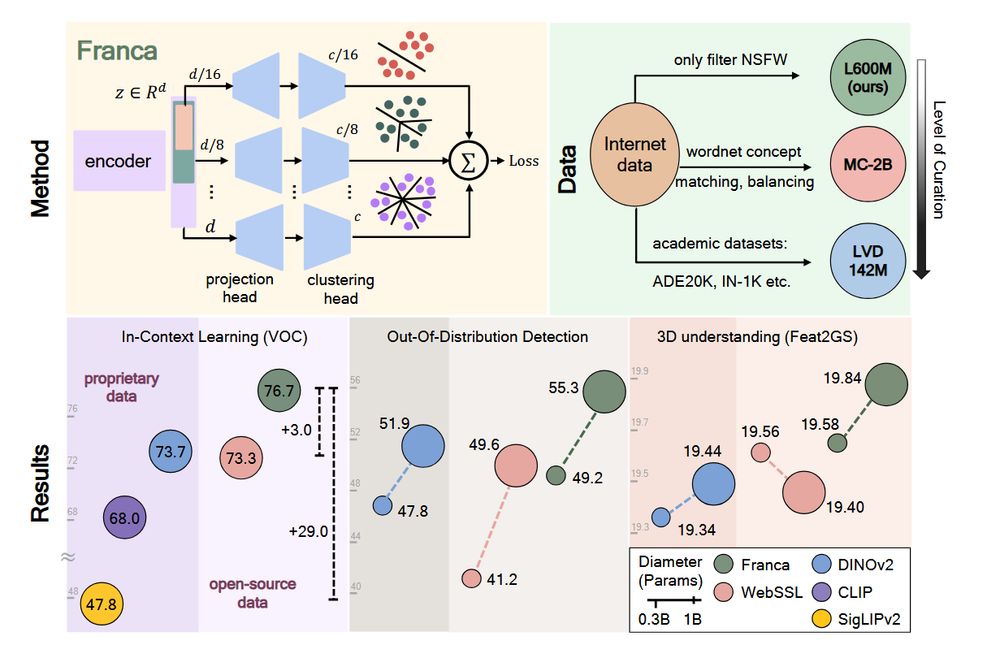

Introducing DINOv3 🦕🦕🦕

A SotA-enabling vision foundation model, trained with pure self-supervised learning (SSL) at scale.

High quality dense features, combining unprecedented semantic and geometric scene understanding.

Three reasons why this matters👇

14.08.2025 18:50 — 👍 24 🔁 8 💬 2 📌 2

GitHub - autonomousvision/CaRL: [CoRL 2025] CaRL: Learning Scalable Planning Policies with Simple Rewards

Our paper "CaRL: Learning Scalable Planning Policies with Simple Rewards" has been accepted to the Conference on Robot Learning (CoRL 2025).

See you in Seoul at the end of September.

Code & Paper:

github.com/autonomousvi...

08.08.2025 11:38 — 👍 3 🔁 1 💬 0 📌 0

At TRI’s newest division, Automated Driving Advanced Development, we’re building a clean-slate, end-to-end autonomy stack. We're hiring, with open roles in learning, infra, and validation: www.tri.global/careers#open...

05.08.2025 22:57 — 👍 4 🔁 1 💬 0 📌 0

1/ Can open-data models beat DINOv2? Today we release Franca, a fully open-sourced vision foundation model. Franca with a ViT-G backbone matches (and often beats) proprietary models like SigLIPv2, CLIP, and DINOv2 on various benchmarks, setting a new standard for open-source research.

21.07.2025 14:47 — 👍 84 🔁 21 💬 2 📌 3

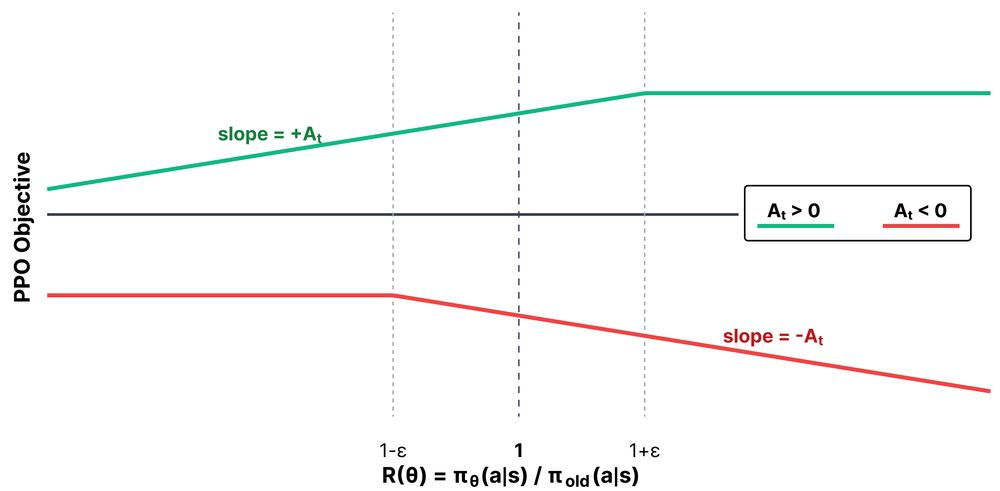

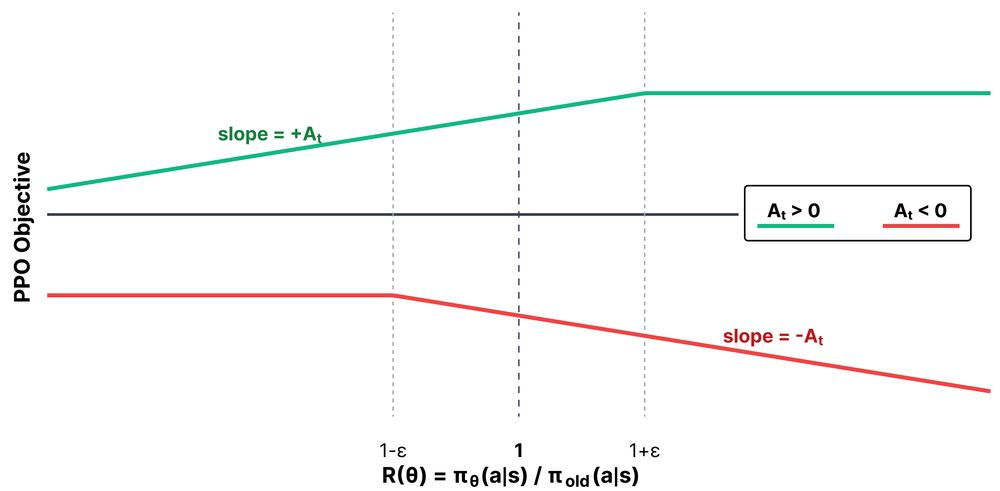

Adding a nice way to visualize the PPO objective to the rlhf book.

The core of the policy-gradient objective is that L is proportional to R*A (R = policy ratio, A = advantage).

PPO makes good actions more likely, up to a point.

PPO makes bad actions less likely, up to a point.

19.07.2025 23:10 — 👍 12 🔁 2 💬 2 📌 0
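A minimal sketch of the "up to a point" behavior described in the PPO post above, assuming the standard clipped surrogate objective from the original PPO paper. The function name `ppo_clipped_objective` and the default `eps = 0.2` are illustrative assumptions, not taken from the post or the rlhf book.

```python
def ppo_clipped_objective(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Per-sample PPO clipped surrogate: min(R * A, clip(R, 1 - eps, 1 + eps) * A).

    ratio     -- R, the new policy probability divided by the old one (assumed > 0)
    advantage -- A, how much better the action was than expected
    eps       -- clip range (0.2 is an assumed, commonly used default)
    """
    # Restrict the policy ratio to the interval [1 - eps, 1 + eps].
    clipped_ratio = max(1.0 - eps, min(1.0 + eps, ratio))
    # Taking the minimum gives a pessimistic bound on the unclipped objective.
    return min(ratio * advantage, clipped_ratio * advantage)


if __name__ == "__main__":
    # Good action (A > 0): the objective stops growing once R exceeds 1 + eps.
    print(ppo_clipped_objective(ratio=1.5, advantage=1.0))
    # Bad action (A < 0): the objective stops changing once R drops below 1 - eps.
    print(ppo_clipped_objective(ratio=0.5, advantage=-1.0))
    # Bad action that became more likely: no clipping, so the penalty stays in force.
    print(ppo_clipped_objective(ratio=1.5, advantage=-1.0))
```

Once the ratio leaves the clip range in the direction the advantage favors, the objective is flat in R, so that sample no longer pushes the policy further.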

What if the cherry was actually cake? (source: arxiv.org/abs/2506.08007)

20.07.2025 10:18 — 👍 5 🔁 0 💬 1 📌 0

🚨Job Alert

W2 (TT W3) Professorship in Computer Science "AI for People & Society"

@saarland-informatics-campus.de/@uni-saarland.de is looking to appoint an outstanding individual in the field of AI for people and society who has made significant contributions in one or more of the following areas:

18.07.2025 07:11 — 👍 14 🔁 18 💬 1 📌 0

EurIPS is coming! 📣 Mark your calendar for Dec. 2-7, 2025 in Copenhagen 📅

EurIPS is a community-organized conference where you can present accepted NeurIPS 2025 papers. It is endorsed by @neuripsconf.bsky.social and @nordicair.bsky.social and co-developed by @ellis.eu

eurips.cc

16.07.2025 22:00 — 👍 139 🔁 70 💬 2 📌 17

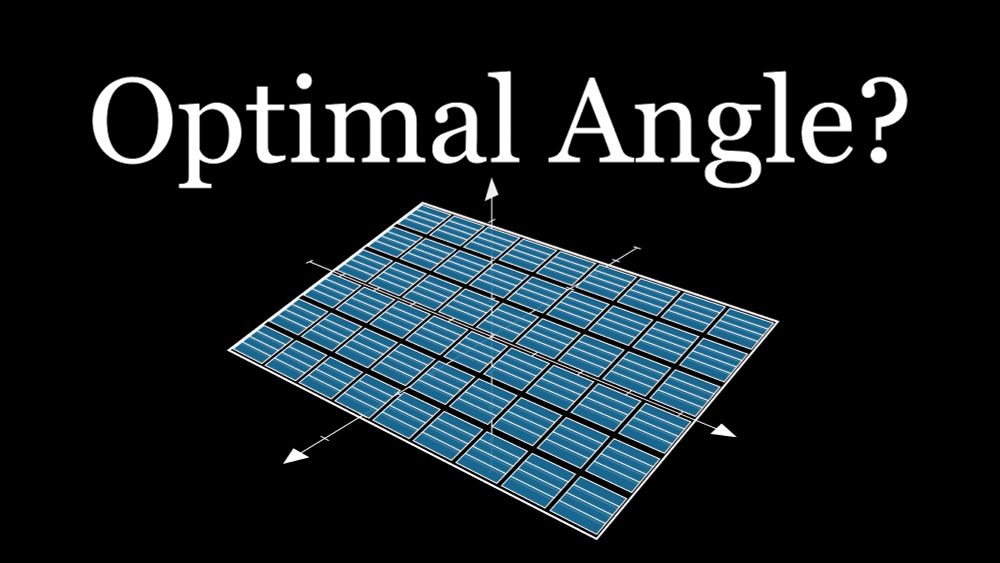

In an era of billion-parameter models everywhere, it's incredibly refreshing to see how a fundamental question can be formulated and solved with simple, beautiful math.

- How should we orient a solar panel ☀️🔋? -

Zero AI! If you enjoy math, you'll love this!

Video: www.youtube.com/watch?v=ZKzL...

16.07.2025 14:25 — 👍 9 🔁 2 💬 1 📌 0

📢 Present your NeurIPS paper in Europe!

Join EurIPS 2025 + ELLIS UnConference in Copenhagen for in-person talks, posters, workshops and more. Registration opens soon; save the date:

📅 Dec 2–7, 2025

📍 Copenhagen 🇩🇰

🔗eurips.cc

#EurIPS

@euripsconf.bsky.social

16.07.2025 23:00 — 👍 61 🔁 17 💬 2 📌 3

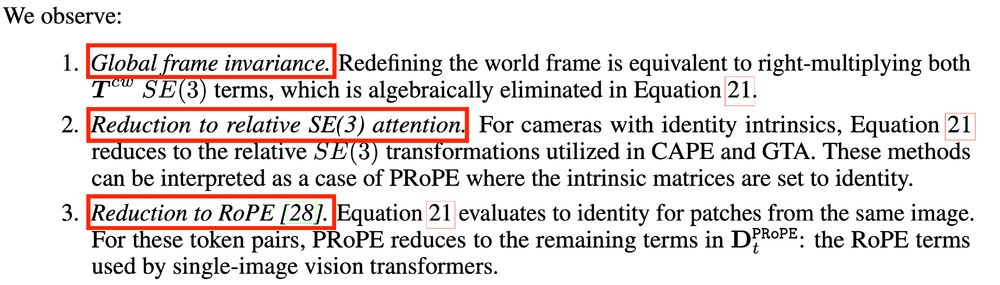

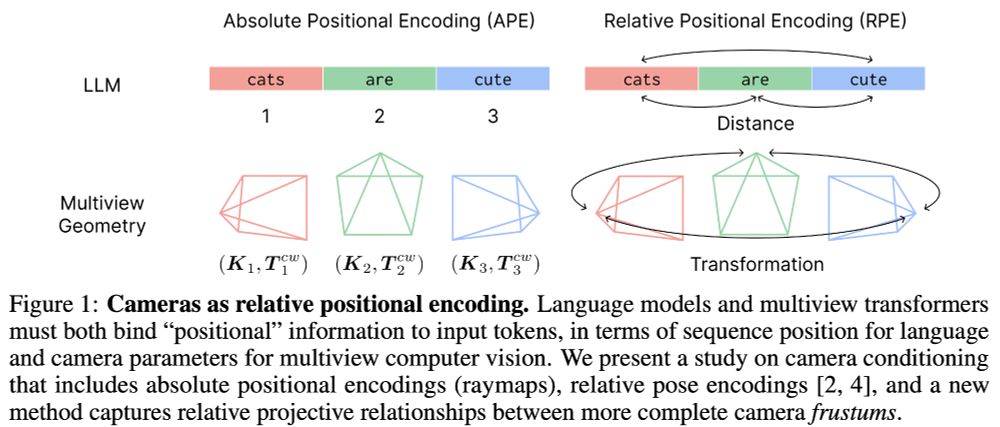

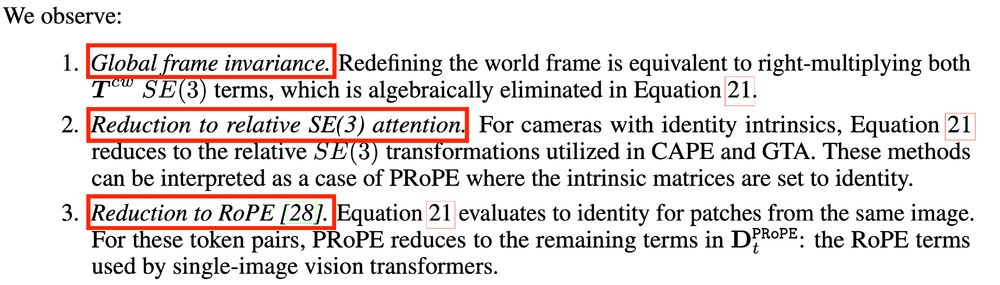

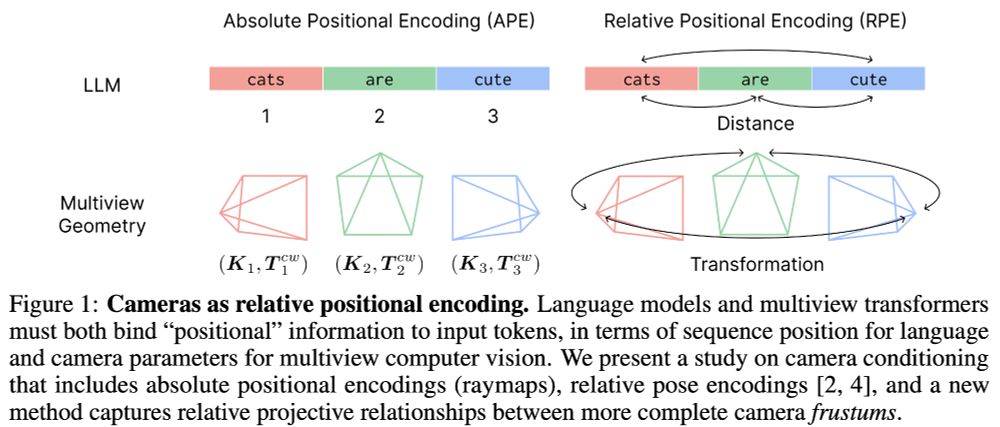

I really like this paper on relative positional encodings using projective geometry for multi-view transformers, by Li et al. (Berkeley/Nvidia/HKU).

It is elegant: in special cases, it reduces to known baselines like GTA (with identity intrinsics) and RoPE (same camera).

arxiv.org/abs/2507.10496

15.07.2025 14:40 — 👍 23 🔁 3 💬 0 📌 1

GitHub - autonomousvision/CaRL: [ArXiv 2025] CaRL: Learning Scalable Planning Policies with Simple Rewards

We have released the code for our work, CaRL: Learning Scalable Planning Policies with Simple Rewards.

The repository contains the first public code base for training RL agents with the CARLA leaderboard 2.0 and nuPlan.

github.com/autonomousvi...

15.07.2025 16:05 — 👍 20 🔁 7 💬 0 📌 2

YouTube video by Daniel Dauner

CaRL: Learning Scalable Planning Policies with Simple Rewards

In case you find it as relaxing as we do: Here is a 2h+ video of our autonomous RL driving agent CaRL in action! @danieldauner.bsky.social @bernhard-jaeger.bsky.social @kashyap7x.bsky.social

youtube.com/watch?v=_god...

15.07.2025 06:17 — 👍 21 🔁 5 💬 0 📌 1

🏟️But we’re not done yet - our workshop continues at #ICCV2025! And the challenge moves forward too, with more prizes and exciting updates. opendrivelab.com/challenge2025/

13.07.2025 16:39 — 👍 3 🔁 2 💬 0 📌 0

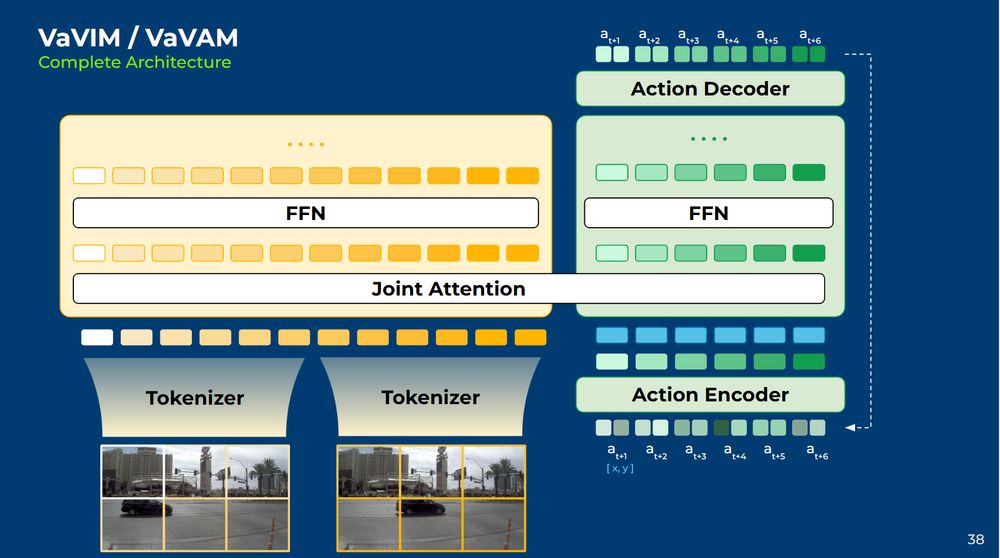

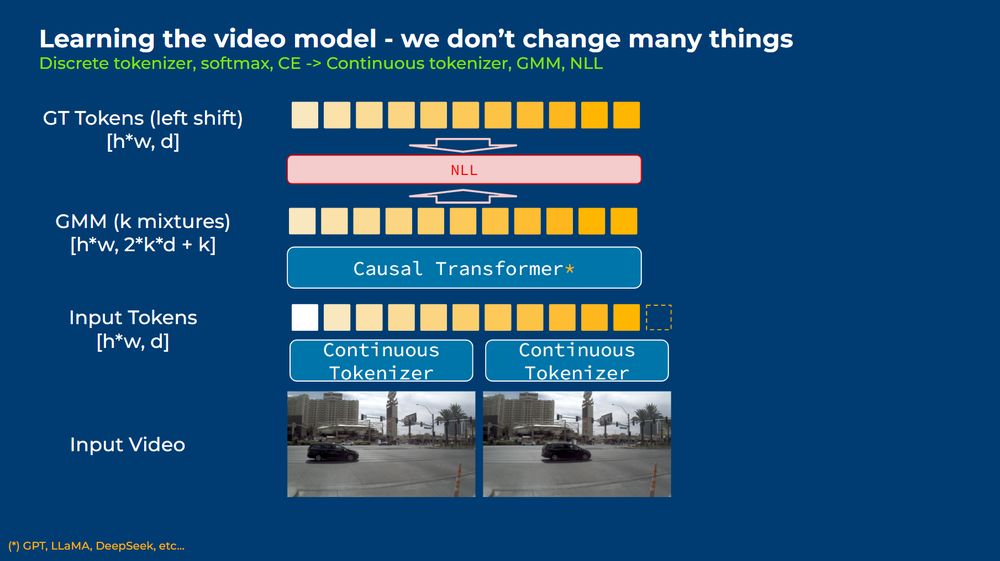

Do you also plan to open-source this continuous token version? As I understand, the current release is for a discrete-tokenized model set, right?

10.07.2025 23:37 — 👍 0 🔁 0 💬 1 📌 0

🚗 Excited to announce our RealADSim Workshop & Challenges @ ICCV 2025 — join us!

🏆 Over $40,000 in prizes

🌆 Competition 1: Extrapolated Novel View Synthesis

🚘 Competition 2: Closed-loop Driving in a 3DGS-based Simulator

🎤 Featuring great speakers

🔗 realadsim.github.io/2025/

30.06.2025 14:09 — 👍 6 🔁 2 💬 0 📌 1

Assistant Professor of Computer Science at the University of British Columbia. I also post my daily finds on arxiv.

I enjoy playing with family, reading science fiction and history, gardening, board games, and jazzercise. I'm a father, husband, Navy veteran, and graduate student studying computer science at George Mason University.

Mastodon: https://scicomm.xyz/@David

PhD Candidate in the Princeton Computational Imaging Lab researching 3D generation and perception 🤖

www.ostjul.com

Asst. Professor of Physics at @uwmadison.bsky.social, AI for science researcher, performing artist/creative technologist.

A world-class research hub in AI and machine learning, in partnership with universities, RDI organizations and businesses in Finland. We are the 2nd institute in the @ellis.eu network.

🔗 ellisinstitute.fi

Robotics PhD student at Dartmouth doing research in the A2R Lab [@a2r-lab.bsky.social]. Researching MPC+RL applied to locomotion and manipulation, all that fun stuff! @NSF GRFP Fellow

🤖

PhD Student at the Robot Learning Lab, University of Freiburg.

Reinforcement Learning, World Models and Robot Manipulation.

https://akshaychandra.com

Research Scientist in the DINO team at Meta FAIR. Previously: PhD at Max-Planck Institute for Intelligent Systems, Tübingen. Representation learning, agents, structure.

Scientist interested in developing algorithms to enable robots to interact seamlessly with humans 🧠🦾

Personal page: https://oceliktutan.github.io

SAIR's page: https://sairlab.github.io

WirStiftenWissen, now on Bluesky too. Follow us for exciting funding opportunities, bold research projects, and fascinating events.

🌐 www.volkswagenstiftung.de

PhD candidate in #ComputerVision and #ArtificialIntelligence for #AutonomousVehicles at AcSIR, CSIR-CMERI. Academic website: https://scinv22.github.io

Postdoc UvA Amsterdam.

PhD at ETH Zürich and MPI IS, intern at Amazon AWS.

Structured representation learning for and by autonomous agents.

zadaianchuk.github.io

Faculty at the ELLIS Institute Tübingen and Max Planck Institute for Intelligent Systems. Leading the AI Safety and Alignment group. PhD from EPFL supported by Google & OpenPhil PhD fellowships.

More details: https://www.andriushchenko.me/

For more AI&Tech content, check here www.luok.ai

🍎Apple Die Hard Fan| 苹果骨灰粉

🤖GenAI Observer | GenAI观察者

👨🏻🎤Cutting Edge Tech Enthusiast | 科技爱好者

Convergent Research enables fundamental research that requires unusual levels of scale and coordination.

EEML Organizer, ML researcher

Head of Video & Image Sense Lab | University of Amsterdam | Scientific Director Amsterdam AI | https://www.ceessnoek.info/
