Howdy all. I'm unfortunately not going to be with my employer for much longer due to team relocation. If anyone has any info on roles that would allow me to continue my Rust compiler work (in New York City), they'd be greatly appreciated.

02.07.2025 17:31 — 👍 92 🔁 45 💬 1 📌 1

🦀 Hello World!

The Rust project now has an official presence on Bluesky! ✨

We'll be posting the same on our Mastodon and Bluesky accounts, so you won't miss anything on either platform.

05.04.2025 10:51 — 👍 1480 🔁 287 💬 32 📌 25

fleetwood.dev

Want an in depth exploration of the different hardware architectures within AI?

Of course you do :)

Another great article by Chris Fleetwood:

fleetwood.dev/posts/domain...

09.03.2025 13:05 — 👍 1 🔁 1 💬 0 📌 0

Performance leap: TGI v3 is out. It processes 3x more tokens and is 13x faster than vLLM on long prompts. Zero config!

10.12.2024 10:08 — 👍 19 🔁 6 💬 1 📌 1

True, but at the same time my man JJB famously said «Ooh! Ooh! Mooie! Woohoo! Aah!»

So yeah

08.12.2024 13:46 — 👍 1 🔁 0 💬 1 📌 0

Chart Title: Model Hardware vs Energy per GigaFLOP.

Vertical Axis: mJ/GFLOP (log scale)

Horizontal Axis: Hardware Type (CPU, CPU + GPU, CPU + ANE)

CPU: min 6.9, 1st quartile 11.7, median 13.4, 3rd quartile 35.6, max 53.1

CPU + GPU: min 4.6, 1st quartile 4.6, median 4.7, 3rd quartile 6.2, max 9.6

CPU + ANE: min 0.9, 1st quartile 1.0, median 1.1, 3rd quartile 1.4, max 1.8

Preliminary data shows the Apple Neural Engine uses ~94% less energy than the CPU and ~75% less than the GPU 🤯

On the On-Device team at Hugging Face, we've been profiling energy usage for CoreML models. Here’s some data I collected:

05.12.2024 20:08 — 👍 4 🔁 1 💬 2 📌 0
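As a rough sanity check on those percentages, here is a minimal sketch that uses only the medians from the chart description. The post does not say which statistic the ~94% and ~75% figures were computed from, so using the median is an assumption, and the helper name `reduction` is made up for illustration.

```python
# Medians in mJ/GFLOP, copied from the chart description in the post.
# Assumption: the median is a fair summary; the post does not say
# which statistic its percentages are based on.
median = {"CPU": 13.4, "CPU + GPU": 4.7, "CPU + ANE": 1.1}


def reduction(baseline: float, value: float) -> float:
    """Return how much less energy `value` uses than `baseline`, in percent."""
    return (1.0 - value / baseline) * 100.0


ane_vs_cpu = reduction(median["CPU"], median["CPU + ANE"])
ane_vs_gpu = reduction(median["CPU + GPU"], median["CPU + ANE"])

print(f"ANE vs CPU: {ane_vs_cpu:.1f}% less energy per GFLOP")
print(f"ANE vs GPU: {ane_vs_gpu:.1f}% less energy per GFLOP")
```

On medians alone this gives roughly 92% and 77%, the same ballpark as the quoted figures. A different summary statistic would shift the numbers slightly.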

I, for one, don’t immediately see anything wrong with what you’ve said here.

There are perhaps some exaggerations here and there to drive home your points, but it's the best thread/rant on the subject (from the side of outraged Bluesky users) that I’ve seen.

28.11.2024 17:57 — 👍 1 🔁 0 💬 0 📌 0

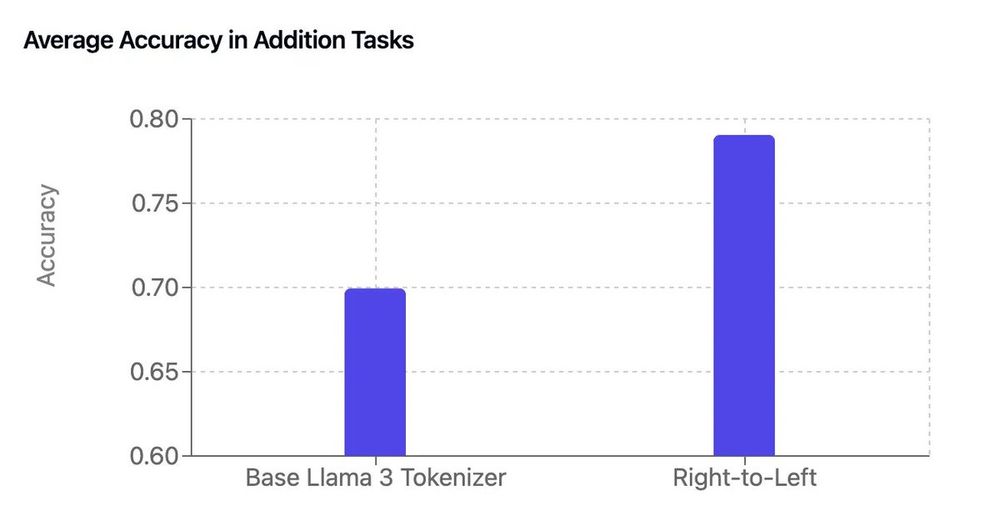

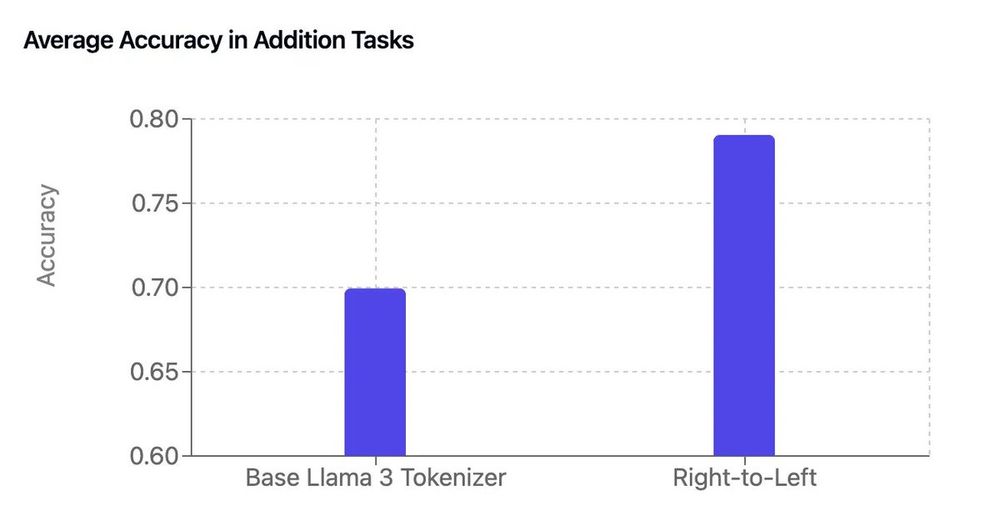

It's Sunday morning, so I'm taking a minute for a nerdy thread (on math, tokenizers and LLMs) about the work of our intern Garreth

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]

24.11.2024 11:05 — 👍 272 🔁 34 💬 5 📌 5
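The post above does not say what the few lines of code were. Purely as an illustration, and assuming the change concerns how long numbers are split into digit chunks, here is a sketch comparing left-to-right grouping with right-to-left grouping in threes. Both function names are hypothetical and this is not the Llama 3 tokenizer's code.

```python
def group_digits_l2r(number: str, size: int = 3) -> list[str]:
    """Split a digit string into chunks of `size`, starting from the left."""
    return [number[i:i + size] for i in range(0, len(number), size)]


def group_digits_r2l(number: str, size: int = 3) -> list[str]:
    """Split a digit string into chunks of `size`, starting from the right,
    so chunk boundaries line up with thousands separators."""
    chunks = []
    end = len(number)
    while end > 0:
        start = max(0, end - size)
        chunks.append(number[start:end])
        end = start
    return chunks[::-1]  # restore reading order


print(group_digits_l2r("1234567"))  # ['123', '456', '7']
print(group_digits_r2l("1234567"))  # ['1', '234', '567']
```

With right-to-left grouping, digits of the same magnitude end up in the same chunk position regardless of how long the number is, which is one plausible reason such a change could help with arithmetic.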

I guess you’ll have to engage fervently with that content to bring it back. Good luck 🫡

21.11.2024 17:02 — 👍 0 🔁 0 💬 0 📌 0

Sky tweet

21.11.2024 08:34 — 👍 1 🔁 0 💬 1 📌 0

21.11.2024 00:51 — 👍 1 🔁 0 💬 0 📌 0

I was just about to tag you hehe

20.11.2024 21:53 — 👍 1 🔁 0 💬 0 📌 0

Nils Olav - Wikipedia

In Norway we let penguins lead 🫡

en.m.wikipedia.org/wiki/Nils_Olav

20.11.2024 21:46 — 👍 4 🔁 0 💬 2 📌 0

If you want to dive into async allocators a bit more:

open.spotify.com/episode/2YGI...

20.11.2024 19:13 — 👍 1 🔁 0 💬 0 📌 0

Our hardware is usually async in many different ways, but our default programming approach usually isn’t.

For example, we approach allocating memory as a sync operation, but it usually isn’t. We could be doing stuff while allocating. Async allocators have a host of fun problems though :)

20.11.2024 19:12 — 👍 2 🔁 0 💬 1 📌 0

when you try to convert your text into smaller pieces but all it gives you is Elvish, that’s a tolkienizer

20.11.2024 17:50 — 👍 1011 🔁 104 💬 35 📌 17

fleetwood.dev

RoPE can be confusing, so here’s a great write up by my buddy Chris Fleetwood on the topic:

fleetwood.dev/posts/you-co...

18.11.2024 15:42 — 👍 3 🔁 0 💬 0 📌 0

I think you’re applying your own (better) logic and improving on what he actually means. Your point has merit, his does not.

Hold people accountable to their exact phrasing.

17.11.2024 22:36 — 👍 0 🔁 0 💬 0 📌 0

He specifically said to stop worrying about climate goals and instead funnel money into AI, no?

That’s what you should either agree with or not.

Applying AI in various fields is something else. Sounds good.

17.11.2024 20:52 — 👍 0 🔁 0 💬 0 📌 0

Generalisations outside what exists/can be inferred in the training set?

That is unfortunately impossible simply by how training works.

I think continuing to fund ML research is essential. But there is only a limited number of geniuses out there. Wild spending will not improve anything.

17.11.2024 20:27 — 👍 0 🔁 0 💬 1 📌 0

Hah I thought you blocked me for agreeing with you because my comments disappeared. Phew.

I guess they’re gone because the parent comments were removed.

17.11.2024 19:47 — 👍 0 🔁 0 💬 0 📌 0

MLE here.

I don’t want to put you down or anything, but I think you should look into the specifics a little closer.

It is correct that it can only solve the types of problems it has seen.

If the model is able to generalise beyond that then we’ve achieved AGI. Which we have not.

17.11.2024 19:27 — 👍 1 🔁 0 💬 1 📌 0

I work with AI. Specifically, on the actual implementation of these models.

The way an LLM approaches a problem is exactly the same way it approaches finishing a poem.

In other words, it does not have the concept of problem solving; it is simply finishing text to the best of its abilities.

17.11.2024 19:23 — 👍 0 🔁 0 💬 2 📌 0

Why would I follow you if I didn’t want rants and tangents?

Go ahead :)

17.11.2024 13:41 — 👍 0 🔁 0 💬 0 📌 0

It is!

When he first shared the findings some time back I spent some time thinking about how to extract something valuable from it but came up short. All I’m left with is that it’s fascinating.

I feel like maybe it could tell us something about how to choose optimal precision, but 🤷

17.11.2024 13:35 — 👍 1 🔁 0 💬 0 📌 0

Trying to make Rust x AI a reality.

Python survivor, book lover and weird music enjoyer.

The home of storytelling around the table! From our Campaign 4 saga in Aramán to Daggerheart and beyond - join the actual play adventure on Beacon.tv, YT, & Twitch

Please consider donating to the Palestine Children’s Relief Fund —> https://www.pcrf.net

A programming language empowering everyone to build reliable and efficient software.

Website: https://rust-lang.org/

Blog: https://blog.rust-lang.org/

Mastodon: https://social.rust-lang.org/@rust

#rustlang, #jj-vcs, atproto, shitposts, urbanism. I contain multitudes.

Working on #ruelang but just for fun.

Currently in Austin, TX, but from Pittsburgh. Previously in Bushwick, the Mission, LA.

PhD Candidate, Fanatic about Pizzas and Coffee.

https://mohitsharma29.github.io/

Twitter: @CFGeek

Mastodon: @cfoster0@sigmoid.social

When I choose to speak, I speak for myself.

🪄 Tensor-enjoyer 🧪

🤗 LLM whisperer @huggingface

📖 Co-author of "NLP with Transformers" book

💥 Ex-particle physicist

🤘 Occasional guitarist

🇦🇺 in 🇨🇭

America’s Finest News Source. A @globaltetrahedron.bsky.social subsidiary.

Get the paper delivered to your door: membership.theonion.com

Join The Onion Newsletter: https://theonion.com/newsletters/

We're a campaign to focus attention and resources on building information technologies that serve the interests of people over companies.

It's time to free social media from billionaire control.

Join: FreeOurFeeds.com | Donate: https://gofund.me/2e144bae

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

RecSys, AI, Engineering; Principal Applied Scientist @ Amazon. Led ML @ Alibaba, Lazada, Healthtech Series A. Writing @ eugeneyan.com, aiteratelabs.com.

Machine Learning Engineer @ HuggingFace

(He/Him). Previously at LARA Lab @UMBC, Mohsin Lab @BRACU | Accessibility, Explainability and Multimodal DL. My opinions are mine.

I'm on the PhD application cycle for Fall '26!

www.shadabchy.com

Forever expanding my nerd/bimbo Pareto frontier. Ex-OpenAI, AGI safety and governance, fellow @rootsofprogress.
