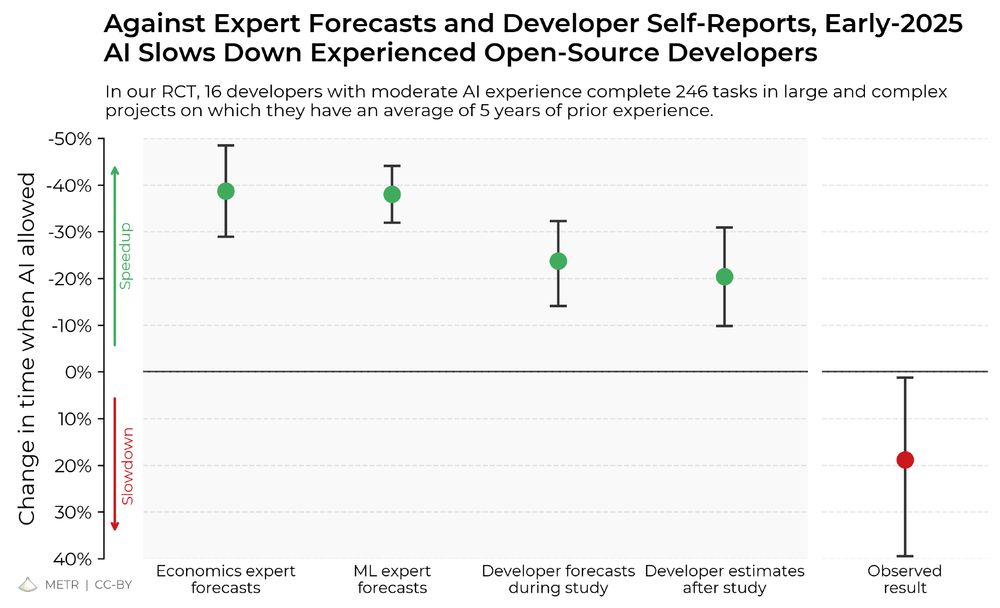

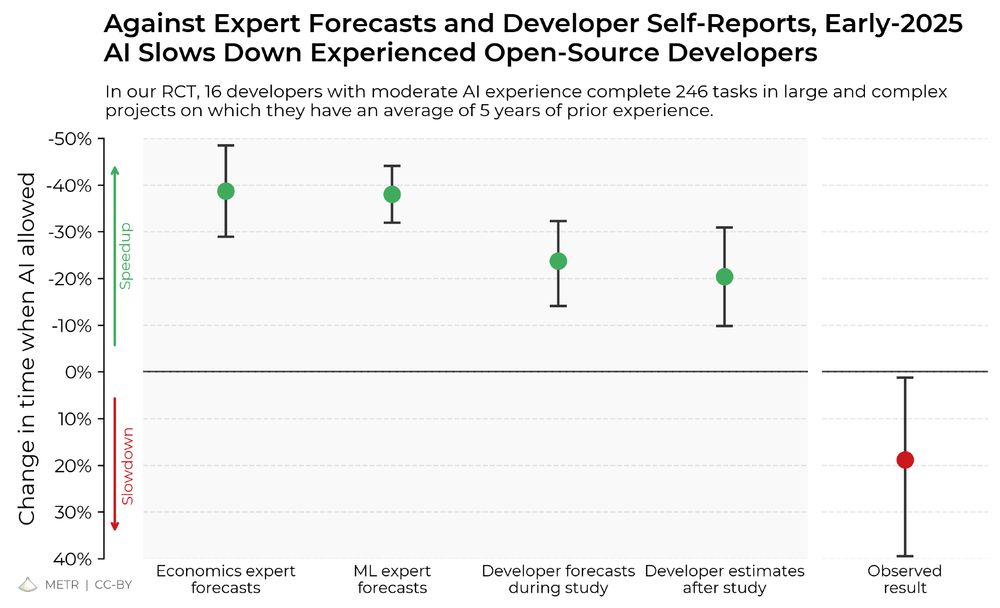

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers.

The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

10.07.2025 19:46 — 👍 6904 🔁 3021 💬 106 📌 626

Update for those who’ve left the other app:

I’m now on the policy team at Model Evaluation and Threat Research (METR). Excited to be “doing AI policy” full-time.

07.03.2025 03:19 — 👍 15 🔁 1 💬 3 📌 0

Why aren’t our AI evaluations better? AFAICT a key reason is that the incentives around them are kinda bad.

In a new post, I explain how the standardized testing industry works and write about lessons it may have for the AI evals ecosystem.

open.substack.com/pub/contextw...

26.02.2025 04:35 — 👍 5 🔁 1 💬 0 📌 0

This is perfect in its own way

31.01.2025 21:20 — 👍 3 🔁 0 💬 0 📌 0

Natural minds and natural bodies are irreplaceable. Artificial minds are costless to replace. We might value artificial bodies more, since they aren’t so disposable, at least in the brief period when they are still few and costly. Could be a good period to set stories in.

14.01.2025 01:12 — 👍 4 🔁 0 💬 0 📌 0

YouTube video by TEMAC INDIA

TOYOTA AIR JET LOOMS JAT 810 JA4S-190 CM RUNNING AT 1200 RPM

When we optimize automation, we sometimes optimize *hard*. Like this automated loom working away at an inhuman 1200 RPM. Wild. youtu.be/WweMNDqDYhc?...

11.01.2025 02:10 — 👍 5 🔁 0 💬 1 📌 0

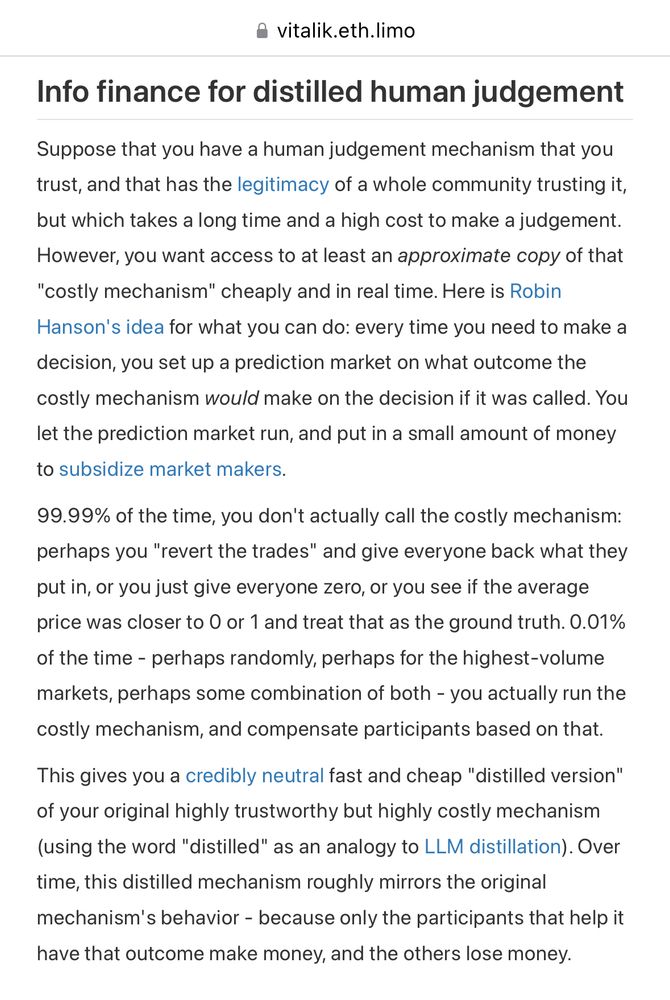

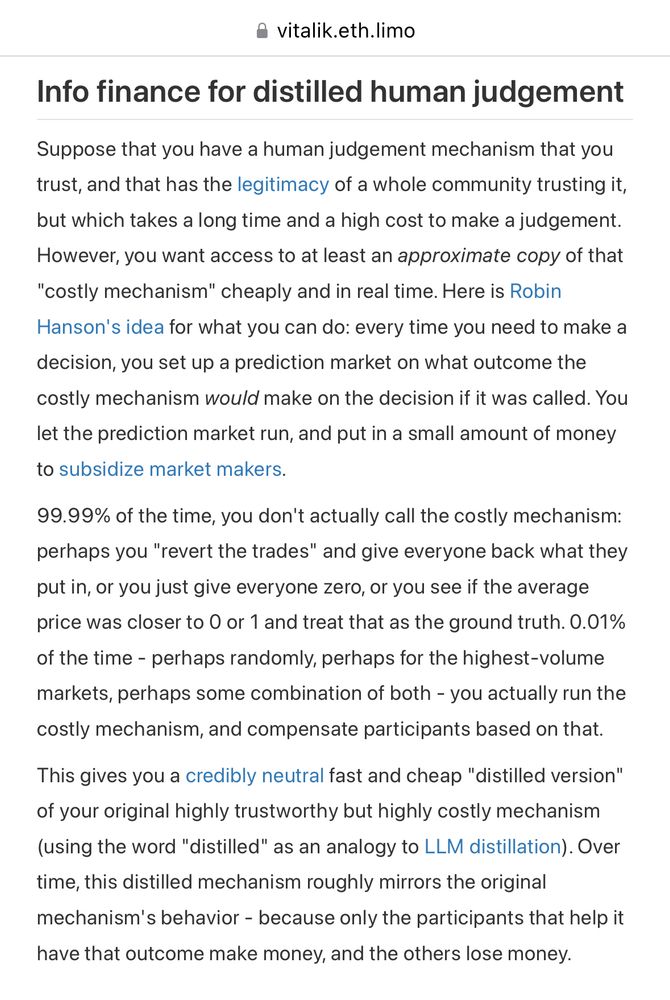

In Vitalik’s post he mentions resolving only the highest-volume markets, which I think would address this concern even more directly, but I’m less confident I understand that version.

24.12.2024 21:21 — 👍 1 🔁 0 💬 0 📌 0

I dunno! Would be fun to find out

24.12.2024 21:17 — 👍 1 🔁 0 💬 0 📌 0

I wouldn’t say it was free, really. Like, if the creator would’ve needed to spend $1 in subsidies on a regular market, on each market that has a 90% chance of reversion they would need to offer $10 in subsidies to compensate, or whatever.

24.12.2024 21:17 — 👍 1 🔁 0 💬 1 📌 0
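
The arithmetic in that reply can be sketched as follows, assuming (this is not stated in the thread) that a trader's expected reward scales linearly with the chance that a market is resolved. The function name and the 100-market total are illustrative.

```python
def subsidy_to_match(base_subsidy: float, resolve_prob: float) -> float:
    """Subsidy needed so the expected reward equals that of an always-resolved market.

    Assumes expected reward = subsidy * resolve_prob (a simplifying assumption).
    """
    if not 0 < resolve_prob <= 1:
        raise ValueError("resolve_prob must be in (0, 1]")
    return base_subsidy / resolve_prob


# A 90% chance of reversion means a 10% chance of resolution.
per_market = subsidy_to_match(base_subsidy=1.0, resolve_prob=0.10)

# Every market must be funded up front, since nobody knows which will be resolved.
committed_across_100 = 100 * per_market
```

Here `per_market` comes out to 10 dollars, matching the figure in the post, and `committed_across_100` is the amount that has to be committed before the audit draw.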

Since the expected payouts on each market are much lower, you probably need big subsidies to compensate. And since you don’t know ahead of time which markets you will resolve, you have to fund them all.

24.12.2024 21:01 — 👍 1 🔁 0 💬 1 📌 0

You want traders to give you cheap but calibrated estimates for all the claims. The randomization reduces the expected size of payouts they’d receive for their bets, since each market only has a 10% chance of getting audited & resolved, but it preserves the incentive to bet their true probabilities.

24.12.2024 20:58 — 👍 1 🔁 1 💬 1 📌 0
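
A rough numerical check of that claim, under assumptions the thread does not specify: a risk-neutral trader rewarded by a logarithmic scoring rule, with refunded markets paying nothing net. The helper names are hypothetical.

```python
import math


def expected_log_score(report: float, belief: float, audit_prob: float = 1.0) -> float:
    """Expected log score for reporting `report` when the trader believes `belief`.

    The market is resolved with probability `audit_prob`; otherwise the bet is
    refunded and contributes zero (an assumption of this sketch).
    """
    score_if_resolved = belief * math.log(report) + (1 - belief) * math.log(1 - report)
    return audit_prob * score_if_resolved


def best_report(belief: float, audit_prob: float) -> float:
    """Search reports 0.01, 0.02, ..., 0.99 for the one with the highest expected score."""
    candidates = [i / 100 for i in range(1, 100)]
    return max(candidates, key=lambda r: expected_log_score(r, belief, audit_prob))


belief = 0.7
always_resolved = best_report(belief, audit_prob=1.0)
audited_10_percent = best_report(belief, audit_prob=0.1)
```

Both searches land on the trader's true belief. Multiplying every expected score by the same positive audit probability shrinks the stakes but leaves the best report unchanged.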

Let’s take this to DMs :)

24.12.2024 19:45 — 👍 1 🔁 0 💬 1 📌 0

So if you create a prediction market because you want information on a question, you can think of the market subsidy as the compensation you’re paying folks for their information.

24.12.2024 18:54 — 👍 1 🔁 0 💬 2 📌 0

Yeah. It’s kinda subtle. With a subsidy, you’re basically giving away money as an incentive. But you can increase liquidity without giving away money.

24.12.2024 18:51 — 👍 1 🔁 0 💬 1 📌 0

You’re thinking of liquidity, which is related but not the same. Subsidy here just means committing money to increase the payouts to whoever is right.

24.12.2024 18:42 — 👍 1 🔁 0 💬 1 📌 0

What do you mean by “solve”?

You wanted information about all 100, so you subsidize markets on all of them. Traders can’t tell ahead of time which ones will be resolved, so if your subsidies are big enough, they are incentivized to trade on any/all of the markets that they have information about.

24.12.2024 18:27 — 👍 1 🔁 0 💬 2 📌 0

Feel like they’ve made a lot of wild statements but I don’t know if anybody has collected those in one place for easy reference.

22.12.2024 05:25 — 👍 3 🔁 0 💬 1 📌 0

Is there a website/database out there that tracks what major AI company executives say about the future of AI?

22.12.2024 05:24 — 👍 8 🔁 0 💬 2 📌 0

Transformers and other parallel sequence models like Mamba are in TC⁰. That implies they can't internally map (state₁, action₁ ... actionₙ) → stateₙ₊₁

But they can map (state₁, action₁, state₂, action₂ ... stateₙ, actionₙ) → stateₙ₊₁

Just reformulate the task!

18.12.2024 06:59 — 👍 7 🔁 0 💬 0 📌 0
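
To make the two formulations concrete, here is a small sketch on a toy environment (three items, actions are index swaps). The environment and function names are made up for illustration; the code only builds the two input formats and says nothing about what any model can learn.

```python
def apply_swap(state, action):
    """Return the state after swapping the two positions named by `action`."""
    i, j = action
    items = list(state)
    items[i], items[j] = items[j], items[i]
    return tuple(items)


def rollout(initial_state, actions):
    """Return [state_1, ..., state_{n+1}] produced by applying the actions in order."""
    states = [initial_state]
    for action in actions:
        states.append(apply_swap(states[-1], action))
    return states


def actions_only_example(initial_state, actions):
    """(state_1, action_1 ... action_n) -> state_{n+1}"""
    return [initial_state, *actions], rollout(initial_state, actions)[-1]


def interleaved_example(initial_state, actions):
    """(state_1, action_1, state_2, action_2 ... state_n, action_n) -> state_{n+1}"""
    states = rollout(initial_state, actions)
    context = []
    for state, action in zip(states, actions):  # zip stops before state_{n+1}
        context += [state, action]
    return context, states[-1]


start = ("a", "b", "c")
swaps = [(0, 1), (1, 2), (0, 2)]
hard_context, hard_target = actions_only_example(start, swaps)
easy_context, easy_target = interleaved_example(start, swaps)
```

In the interleaved format the target is a single transition away from the last state and action in the context, whereas the actions-only format requires composing all n transitions.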

Atticus Geiger gave a take on when sparse autoencoders (SAEs) are/aren’t what you should use. I basically agree with his recommendations. youtube.com/clip/UgkxKWI...

10.12.2024 22:28 — 👍 5 🔁 0 💬 0 📌 0

These days, flow-based models are typically defined via (neural) differential equations, requiring numerical integration or simulation-free alternatives during training. This paper revisits autoregressive flows, using Transformer layers to define the sequence of flow transformations directly.

10.12.2024 17:08 — 👍 2 🔁 0 💬 0 📌 0

It isn’t super clear to me what the monthly pricing will be. Like, on the one hand in a competitive market I think the price of AI services will tend downward toward the marginal cost. But also there are only a few providers and constraints on supply. Not sure how it comes out on balance.

05.12.2024 17:11 — 👍 3 🔁 0 💬 1 📌 0

It might be like that! If so I would expect an experiment like this to indicate that. :)

04.12.2024 06:10 — 👍 0 🔁 0 💬 1 📌 0

Re: instruction-tuning and RLHF as “lobotomy”

I’m interested in experiments that look into how much finetuning can “roll back” a post-trained model to its base model perplexity on the original distribution.

Has anyone seen an experiment like this run?

04.12.2024 05:44 — 👍 4 🔁 0 💬 1 📌 0

Ah. Yeah I don’t think there’s anything special about services that brand themselves as “AI agents”. What matters IMO is it’s opaquely doing expensive work on behalf of the client without human oversight.

For those, I think they might want to advertise their guarantees. Not certain, though.

02.12.2024 01:18 — 👍 2 🔁 0 💬 0 📌 0

Can you say more? Not sure that I understand.

02.12.2024 00:37 — 👍 1 🔁 0 💬 1 📌 0

“Provider pays” for failed automation services

If your AI works as well as you claim, why not make that a promise?

I’ve been wondering when it would make sense for “AI agent” services to offer money-back guarantees. Wrote a short post about this on a flight.

open.substack.com/pub/contextw...

01.12.2024 23:26 — 👍 7 🔁 0 💬 1 📌 0

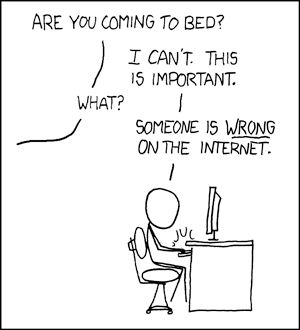

xkcd comic 386, with back and forth that goes:

“Are you going to bed?”

“I can’t. This is important.”

“What?”

“Someone is WRONG on the internet.”

https://xkcd.com/386/

Neat thing about real-money prediction markets is that you can get paid for doing this.

30.11.2024 16:40 — 👍 4 🔁 0 💬 0 📌 0

From prediction markets to info finance

h/t @vitalik.ca, though I believe the idea is borrowed from @robinhanson.bsky.social

vitalik.eth.limo/general/2024...

28.11.2024 00:54 — 👍 2 🔁 0 💬 0 📌 0

A bit of clever mechanism design: prediction markets + randomized auditing.

If you have 100 verifiable claims you want information on but can only afford to check 10, fund markets on each. Later, use a randomized ordering of them to check the first 10. Resolve those to yes/no, refund the rest.

28.11.2024 00:54 — 👍 6 🔁 0 💬 3 📌 0
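
A minimal sketch of the selection step described in that post, assuming the random ordering is drawn only after trading closes (or is otherwise hidden from traders). The function name, claim labels, and seed are hypothetical.

```python
import random


def plan_random_audit(claims, audit_budget, seed=None):
    """Shuffle the claims; the first `audit_budget` get checked, the rest are refunded."""
    rng = random.Random(seed)
    order = list(claims)
    rng.shuffle(order)
    return order[:audit_budget], order[audit_budget:]


claims = [f"claim-{i}" for i in range(100)]
to_resolve, to_refund = plan_random_audit(claims, audit_budget=10, seed=0)
```

Each claim ends up in exactly one of the two lists: markets in `to_resolve` are checked and settled yes/no, and markets in `to_refund` return traders' money.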
