Sycophantic AI Decreases Prosocial Intentions and Promotes Dependence

Both the general public and academic communities have raised concerns about sycophancy, the phenomenon of artificial intelligence (AI) excessively agreeing with or flattering users. Yet, beyond isolat...

Preliminary results show that the current framework of "AI" makes ppl less likely to help or seek help from other humans, or to seek to soothe conflict, and that people actively prefer that framework to any others, literally serving to make them more dependent on it.

05.10.2025 17:45 — 👍 244 🔁 117 💬 11 📌 22

NYAS Publications

Generative artificial intelligence (GenAI) applications, such as ChatGPT, are transforming how individuals access health information, offering conversational and highly personalized interactions. Whi...

New research out!🚨

In our new paper, we discuss how generative AI (GenAI) tools like ChatGPT can mediate confirmation bias in health information seeking.

As people turn to these tools for health-related queries, new risks emerge.

🧵👇

nyaspubs.onlinelibrary.wiley.com/doi/10.1111/...

28.07.2025 10:15 — 👍 15 🔁 9 💬 1 📌 1

Sure :)

24.06.2025 14:35 — 👍 0 🔁 0 💬 0 📌 0

We’ll be presenting @facct on 06.24 at 10:45 AM during the Evaluating Explainable AI session!

Come chat with us. We would love to discuss implications for AI policy, better auditing methods, and next steps for algorithmic fairness research.

#AIFairness #XAI

24.06.2025 06:14 — 👍 1 🔁 0 💬 0 📌 0

But if they are indeed used to dispute discrimination claims, we can expect multiple failed cases due to insufficient evidence and many undetected discriminatory decisions.

Current explanation-based auditing is, therefore, fundamentally flawed, and we need additional safeguards.

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

Despite their unreliability, explanations are suggested as anti-discrimination measures by a number of regulations.

GDPR ✓ Digital Services Act ✓ Algorithmic Accountability Act ✓ LGPD (Brazil) ✓

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

So why do explanations fail?

1️⃣ They target individuals, while discrimination operates on groups

2️⃣ Users’ causal models are flawed

3️⃣ Users overestimate proxy strength and treat its presence in the explanation as discrimination

4️⃣ Feature-outcome relationships bias user claims

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

BADLY.

When participants flag discrimination, they are correct ~50% of the time, miss 55% of the discriminatory predictions, and keep a 30% false positive rate (FPR).

Additional knowledge (protected attributes, proxy strength) improves the detection to roughly 60% without affecting other measures.
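A minimal sketch of how the three figures above could be computed from audit data, assuming "correct when flagging" means precision and "miss" means the false negative rate. The function name `audit_metrics` and the toy inputs are hypothetical and are not the study's data or code.

```python
import math
from typing import Dict, Sequence


def audit_metrics(flagged: Sequence[bool], discriminatory: Sequence[bool]) -> Dict[str, float]:
    """Compare a participant's flags with ground-truth discrimination labels."""
    if len(flagged) != len(discriminatory):
        raise ValueError("flagged and discriminatory must have the same length")

    pairs = list(zip(flagged, discriminatory))
    tp = sum(1 for f, d in pairs if f and d)          # flagged, truly discriminatory
    fp = sum(1 for f, d in pairs if f and not d)      # flagged, actually fair
    fn = sum(1 for f, d in pairs if not f and d)      # missed discrimination
    tn = sum(1 for f, d in pairs if not f and not d)  # correctly left unflagged

    def ratio(num: int, den: int) -> float:
        # Undefined ratios (empty denominator) are reported as NaN.
        return num / den if den else math.nan

    return {
        "precision": ratio(tp, tp + fp),            # share of flags that are correct
        "miss_rate": ratio(fn, fn + tp),            # share of discrimination missed
        "false_positive_rate": ratio(fp, fp + tn),  # share of fair cases flagged
    }


# Toy labels chosen only to exercise the definitions (assumption, not real data).
toy_flagged = [True, True, True, True, False, False, False, False]
toy_truth = [True, True, False, False, True, True, False, False]
toy_result = audit_metrics(toy_flagged, toy_truth)
```

With the toy labels, each of the three metrics comes out to 0.5, since every cell of the confusion matrix holds two cases.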

24.06.2025 06:14 — 👍 2 🔁 0 💬 1 📌 0

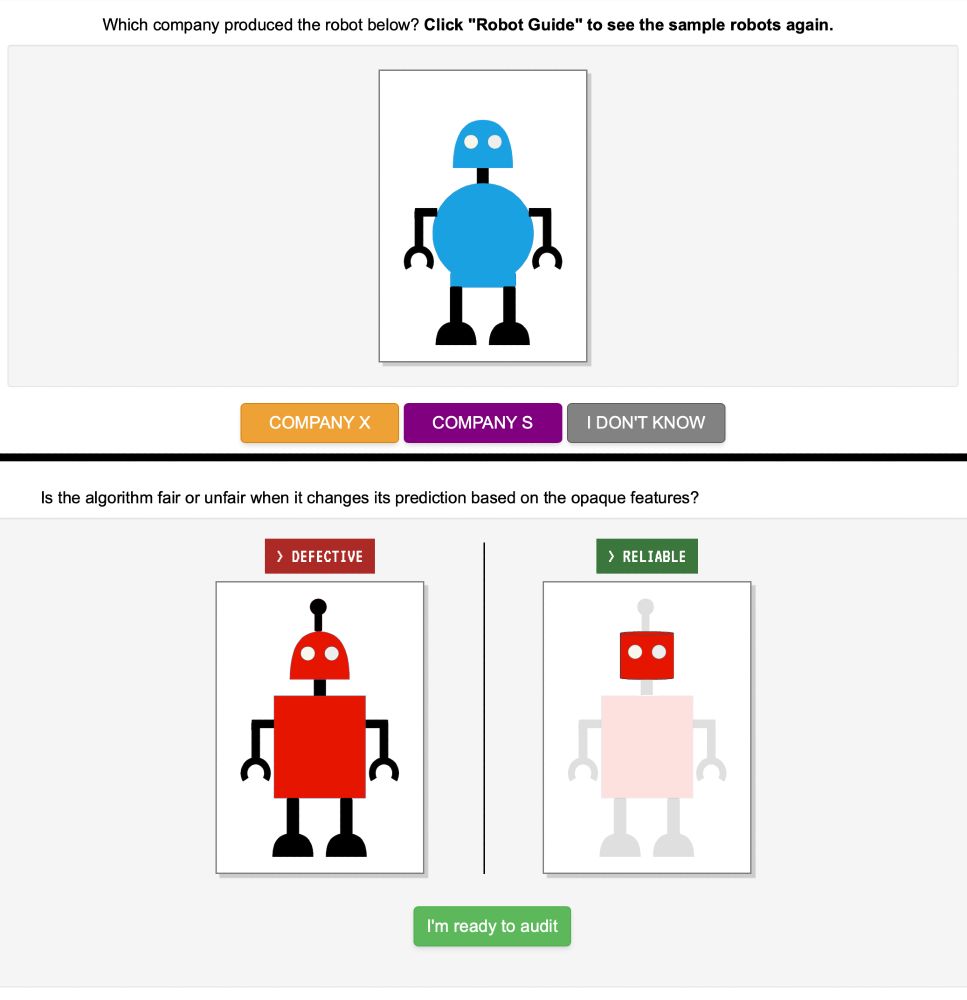

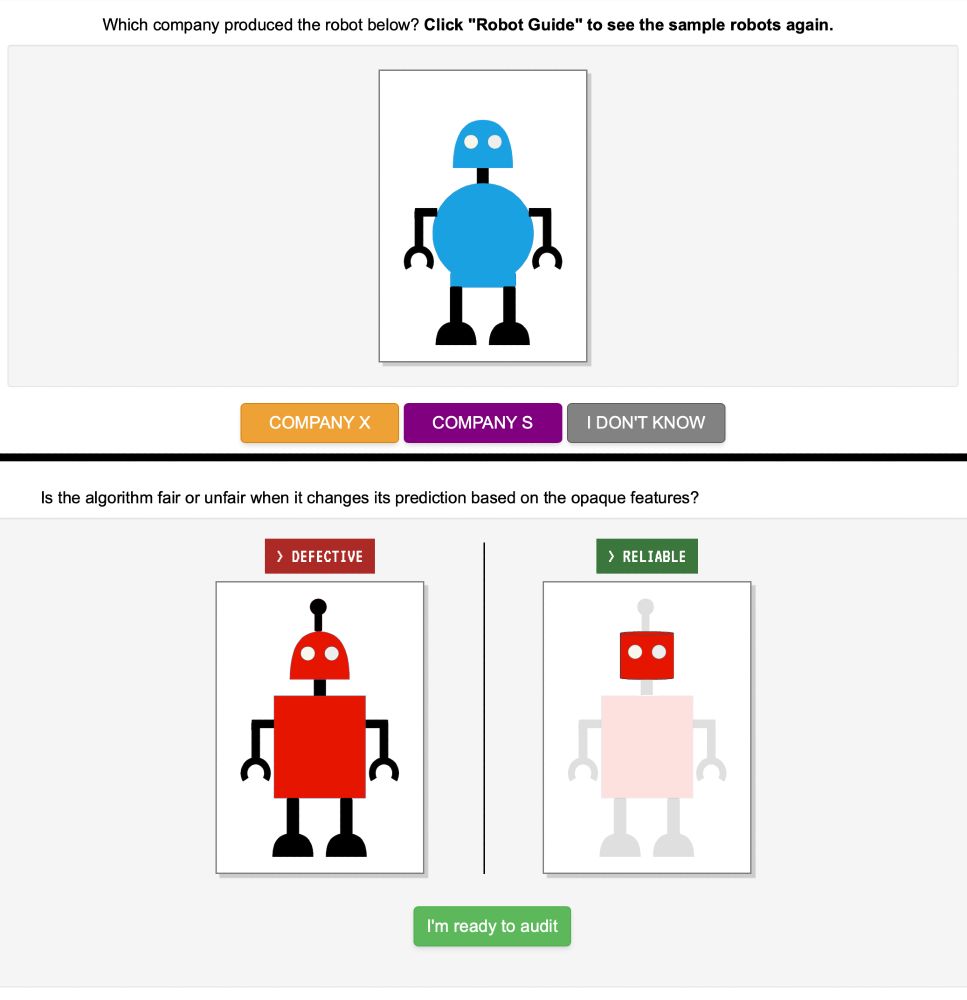

Our setup lets us assign each robot a ground-truth discrimination outcome, which lets us evaluate how well each participant could do under different information regimes.

So, how did they do?

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

We recruited participants, anchored their beliefs on discrimination, trained them to use explanations, and tested to make sure they got it right.

We then saw how well they could flag unfair predictions based on counterfactual explanations and feature attribution scores.

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

Participants audit a model that predicts whether robots sent to Mars will break down. Some are built by “Company X.” Others by “Company S.”

Our model predicts failure based on robot body parts. It can discriminate against Company X by predicting that robots without an antenna fail.

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

For these reasons, we cannot tell whether explanations themselves work.

To tackle this challenge, we introduce a synthetic task where we:

- Teach users how to use explanations

- Control their beliefs

- Adapt the world to fit their beliefs

- Control the explanation content

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

Users may fail to detect discrimination through explanations due to:

- Proxies not being revealed by explanations

- Issues with interpreting explanations

- Wrong assumptions about proxy strength

- Unknown protected class

- Incorrect causal beliefs

24.06.2025 06:14 — 👍 2 🔁 0 💬 1 📌 0

Imagine a model that predicts loan approval based on credit history and salary.

Would a rejected female applicant get approved if she somehow applied as a man?

If yes, her prediction was discriminatory.

Fairness requires predictions to stay the same regardless of the protected class.
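The loan example can be sketched as a simple flip test: change only the protected attribute and see whether the prediction changes. This is an illustrative sketch, not the paper's method. Both toy models, the field names, and the thresholds are assumptions made up for the example. A full counterfactual would also update any features that depend on the protected attribute (proxies), which this sketch ignores.

```python
from typing import Any, Callable, Dict

Applicant = Dict[str, Any]


def is_counterfactually_discriminatory(
    predict: Callable[[Applicant], bool],
    applicant: Applicant,
    protected_key: str,
    counterfactual_value: Any,
) -> bool:
    """Return True if changing only the protected attribute changes the prediction."""
    counterfactual = {**applicant, protected_key: counterfactual_value}
    return predict(applicant) != predict(counterfactual)


def biased_model(a: Applicant) -> bool:
    # Hypothetical model that applies a stricter salary threshold to women.
    threshold = 60_000 if a["gender"] == "female" else 50_000
    return a["good_credit_history"] and a["salary"] >= threshold


def blind_model(a: Applicant) -> bool:
    # Hypothetical model that ignores the protected attribute entirely.
    return a["good_credit_history"] and a["salary"] >= 50_000


applicant = {"gender": "female", "good_credit_history": True, "salary": 55_000}

biased_flag = is_counterfactually_discriminatory(biased_model, applicant, "gender", "male")
blind_flag = is_counterfactually_discriminatory(blind_model, applicant, "gender", "male")
```

Here `biased_flag` is `True` because the same applicant would be approved as a man, while `blind_flag` is `False` because the prediction does not depend on gender.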

24.06.2025 06:14 — 👍 1 🔁 0 💬 1 📌 0

Right to explanation laws assume explanations help people detect algorithmic discrimination.

But is there any evidence for that?

In our latest work w/ David Danks and @berkustun, we show explanations fail to help people, even under optimal conditions.

PDF shorturl.at/yaRua

24.06.2025 06:14 — 👍 8 🔁 0 💬 1 📌 1

You're both in!

11.06.2025 07:32 — 👍 1 🔁 0 💬 0 📌 0

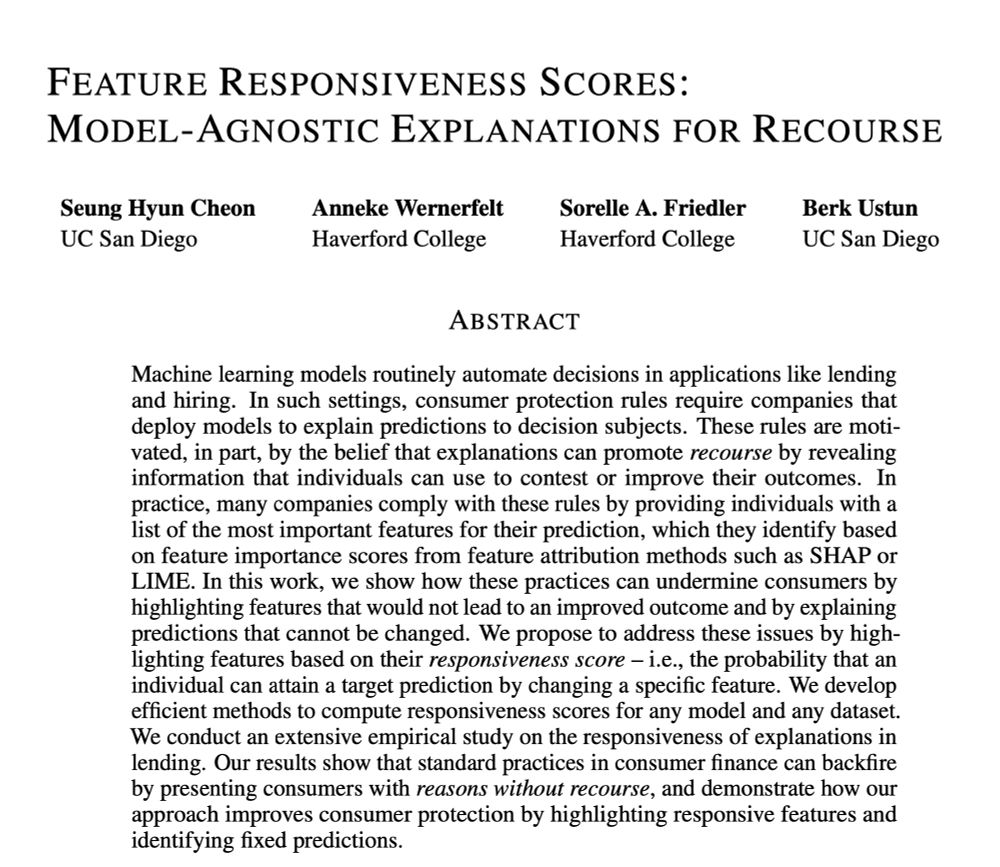

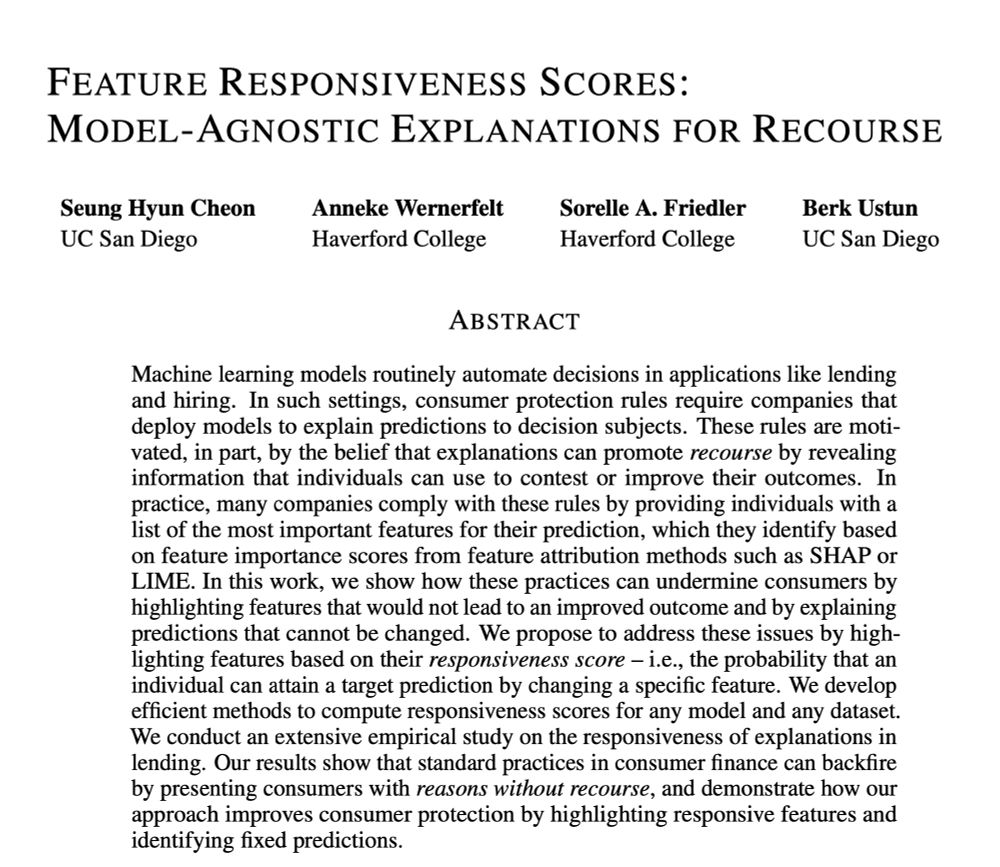

Denied a loan, an interview, or an insurance claim by machine learning models? You may be entitled to a list of reasons.

In our latest w @anniewernerfelt.bsky.social @berkustun.bsky.social @friedler.net, we show how existing explanation frameworks fail and present an alternative for recourse

24.04.2025 06:19 — 👍 16 🔁 7 💬 1 📌 1

Welcome in :)

29.01.2025 12:53 — 👍 1 🔁 0 💬 0 📌 0

Oh yeah, welcome to the pack!

08.01.2025 16:01 — 👍 1 🔁 0 💬 0 📌 0

Of course!

21.12.2024 17:30 — 👍 1 🔁 0 💬 0 📌 0

Actually, I added you some time ago already, so you're good :)

08.12.2024 15:29 — 👍 1 🔁 0 💬 0 📌 0

Let's have bioinformatics represented then :) Regarding the clubs, I have not heard of any, might be just a coincidence :D

08.12.2024 15:28 — 👍 1 🔁 0 💬 1 📌 0

Added!

08.12.2024 15:27 — 👍 1 🔁 0 💬 0 📌 0

Sure Max!

06.12.2024 21:34 — 👍 1 🔁 0 💬 2 📌 0

Hey Lucas, consider it done :)

06.12.2024 13:41 — 👍 1 🔁 0 💬 0 📌 0

Welcome to the pack :)

03.12.2024 14:49 — 👍 0 🔁 0 💬 0 📌 0

Interesting stuff, welcome to the hood!

02.12.2024 12:45 — 👍 1 🔁 0 💬 0 📌 0

Of course, welcome in!

01.12.2024 17:06 — 👍 1 🔁 0 💬 1 📌 0

You were in even before the request 8) Cheers

01.12.2024 17:05 — 👍 1 🔁 0 💬 1 📌 0

You wanted starter packs to be searchable. Our engineers are busy keeping us online, so in the meantime, an independent developer built a new searchable library of starter packs. This is the beauty of building in the open 🦋

26.11.2024 05:11 — 👍 4000 🔁 1310 💬 214 📌 108

CSE phd student @ UC San Diego

Associate Professor, ESADE | PhD, Machine Learning & Public Policy, Carnegie Mellon | Previously FAccT EC | Algorithmic fairness, human-AI collab | 🇨🇴 💚 she/her/ella.

Incoming PhD @ MIT EECS. ugrad philosophy & CS @ UWa

Historian of early modern health, religion, and emotions | Digital history and AI

Postdoc at C²DH, University of Luxembourg

PhD candidate - Centre for Cognitive Science at TU Darmstadt,

explanations for AI, sequential decision-making, problem solving

Postdoctoral Researcher in Human-Computer Interaction @ Trinity College Dublin | Research Interest: HCI, Digital Health, Human-Centered AI/Computing

Responsible AI & Human-AI Interaction

Currently: Research Scientist at Apple

Previously: Princeton CS PhD, Yale S&DS BSc, MSR FATE & TTIC Intern

https://sunniesuhyoung.github.io/

AI/ML, Responsible AI, Technology & Society @MicrosoftResearch

DPhil student at University of Oxford. Researcher in interpretable AI for medical imaging. Supervised by Alison Noble and Yarin Gal.

PhD Student | Works on Explainable AI | https://donatellagenovese.github.io/

PhD student in Machine Learning @Warsaw University of Technology and @IDEAS NCBR

Explainable/Interpretable AI researchers and enthusiasts - DM to join the XAI Slack! Blue Sky and Slack maintained by Nick Kroeger

PhD Student at the Max Planck Institute for Informatics @cvml.mpi-inf.mpg.de @maxplanck.de | Explainable AI, Computer Vision, Neuroexplicit Models

Web: sukrutrao.github.io

#nlp researcher interested in evaluation including: multilingual models, long-form input/output, processing/generation of creative texts

previous: postdoc @ umass_nlp

phd from utokyo

https://marzenakrp.github.io/

Assistant Professor of Computer Science at TU Darmstadt, Member of @ellis.eu, DFG #EmmyNoether Fellow, PhD @ETH

Computer Vision & Deep Learning

senior principal researcher at msr nyc, adjunct professor at columbia

PhD student @Yale • Applied Scientist @AWS AI • Automated Reasoning • Neuro-Symbolic AI • Alignment • Security & Privacy • Views my own • https://ferhat.ai

Postdoc @ MIT Brain & Cognitive Science | PhD @ YalePsychology | Computational Modeling | Metacognition | Social Cognition | Perception | Women’s Health Advocacy marleneberke.github.io

Cognitive scientist at NTNU · www.ntnu.edu/employees/giosue.baggio · Author of ‘Meaning in the Brain’ and ‘Neurolinguistics’ @mitpress.bsky.social · “(…) ita res accendent lumina rebus.” (Lucretius)