📢🎓 We have open PhD positions in Computer Vision & Machine Learning at @tuda.bsky.social and @hessianai.bsky.social within the Reasonable AI Cluster of Excellence — supervised by @stefanroth.bsky.social, @simoneschaub.bsky.social and many others!

www.career.tu-darmstadt.de/tu-darmstadt...

04.11.2025 14:04 — 👍 7 🔁 6 💬 0 📌 0

🎉 Today, Simon Kiefhaber will present our ICCV oral paper on how to make optical flow estimators more efficient (faster inference and lower memory usage) with state-of-the-art accuracy:

🌍 visinf.github.io/recover

Talk: Tue 09:30 AM, Kalakaua Ballroom

Poster: Tue 11:45 AM, Exhibit Hall I #76

21.10.2025 19:13 — 👍 5 🔁 1 💬 0 📌 0

📢Excited to share our IROS 2025 paper “Boosting Omnidirectional Stereo Matching with a Pre-trained Depth Foundation Model”!

Work by Jannik Endres, @olvrhhn.bsky.social, Charles Cobière, @simoneschaub.bsky.social, @stefanroth.bsky.social and Alexandre Alahi.

17.10.2025 21:27 — 👍 5 🔁 5 💬 1 📌 0

[1/8] We are presenting four main conference papers, two workshop papers, and a workshop at @iccv.bsky.social 2025 in Hawaii! 🎉🏝

19.10.2025 15:35 — 👍 7 🔁 3 💬 1 📌 0

ELLIS PhD Program: Call for Applications 2025

The ELLIS mission is to create a diverse European network that promotes research excellence and advances breakthroughs in AI, as well as a pan-European PhD program to educate the next generation of AI...

🎓 Looking for a PhD position in computer vision? Apply to the European Laboratory for Learning & Intelligent Systems (ELLIS) and work with @stefanroth.bsky.social & @simoneschaub.bsky.social! Join the info session on Oct 1.

@ellis.eu @tuda.bsky.social

ellis.eu/news/ellis-p...

29.09.2025 09:34 — 👍 10 🔁 6 💬 0 📌 0

We are presenting five papers at the DAGM German Conference on Pattern Recognition (GCPR, @gcpr-by-dagm.bsky.social) in Freiburg this week!

23.09.2025 17:45 — 👍 6 🔁 2 💬 1 📌 0

Efficient Masked Attention Transformer for Few-Shot Classification and Segmentation (GCPR 2025)

by @dustin-carrion.bsky.social, @stefanroth.bsky.social, and @simoneschaub.bsky.social

🌍: visinf.github.io/emat

Poster: Wednesday, 03:30 PM, Poster 8

23.09.2025 17:45 — 👍 5 🔁 1 💬 1 📌 0

Removing Cost Volumes from Optical Flow Estimators (ICCV 2025 Oral)

by @skiefhaber.de, @stefanroth.bsky.social, and @simoneschaub.bsky.social

🌍: visinf.github.io/recover

Poster: Friday, 10:30 AM, Poster 14

23.09.2025 17:45 — 👍 5 🔁 1 💬 1 📌 0

🚀 Open-Mic Opinions! 🚀

We welcome you to voice your opinion on the state of XAI. You get 5 minutes to speak (in-person only) during the workshop.

📷 Submit your proposals here: lnkd.in/d7_EWKXp

For more details: lnkd.in/dpYWVYXS

@iccv.bsky.social #ICCV2025 #eXCV

16.09.2025 13:48 — 👍 7 🔁 4 💬 1 📌 0

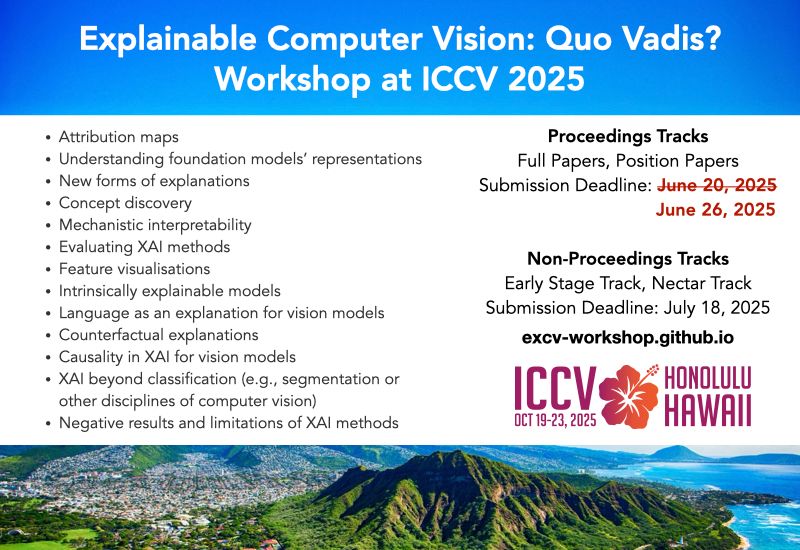

🚨Deadline Approaching! 🚨

Non-Proceedings track closes in 2 days!

Be sure to submit on time!

We are awaiting your submissions!

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025 #eXCV

13.08.2025 08:33 — 👍 1 🔁 1 💬 1 📌 0

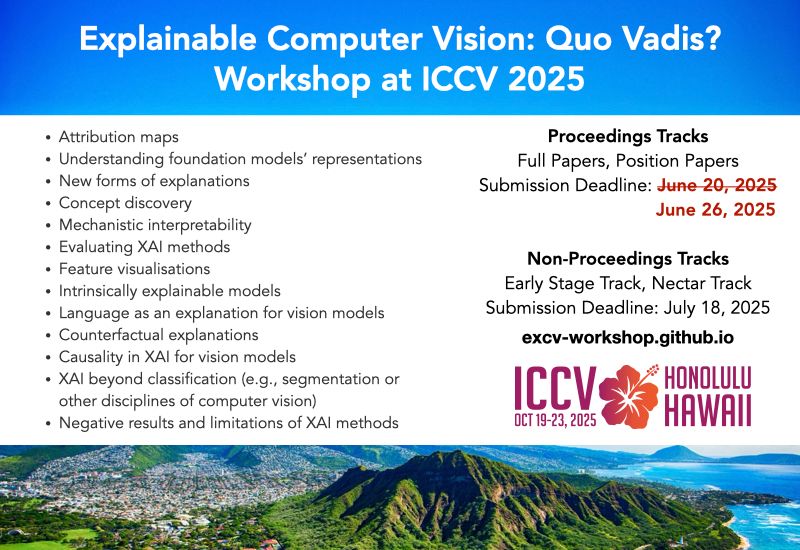

Got a strong XAI paper rejected from ICCV? Submit it to our ICCV eXCV Workshop today—we welcome high-quality work!

🗓️ Submissions open until June 26 AoE.

📄 Got accepted to ICCV? Congrats! Consider our non-proceedings track.

#ICCV2025 @iccv.bsky.social

26.06.2025 09:21 — 👍 20 🔁 9 💬 0 📌 3

Call for papers at the eXCV workshop at ICCV 2025.

Join us in taking stock of the state of the field of explainability in computer vision, at our Workshop on Explainable Computer Vision: Quo Vadis? at #ICCV2025!

@iccv.bsky.social

14.06.2025 15:47 — 👍 13 🔁 5 💬 1 📌 1

We are presenting 3 papers at #CVPR2025!

11.06.2025 20:56 — 👍 7 🔁 2 💬 1 📌 0

"Reasonable AI" got selected as a cluster of excellence www.tu-darmstadt.de/universitaet...

Overwhelmingly happy to be part of RAI & continue working with the smart minds at TU Darmstadt & hessian.AI, while also seeing my new home at Uni Bremen achieve a historic success in the excellence strategy!

23.05.2025 11:57 — 👍 8 🔁 3 💬 1 📌 0

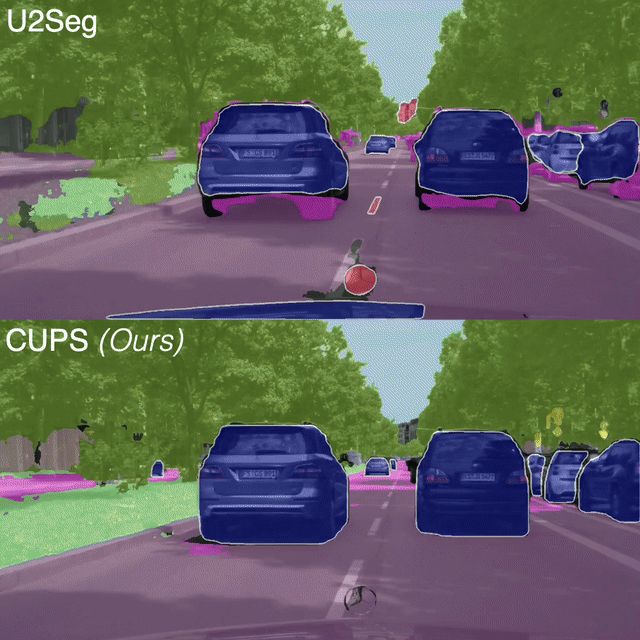

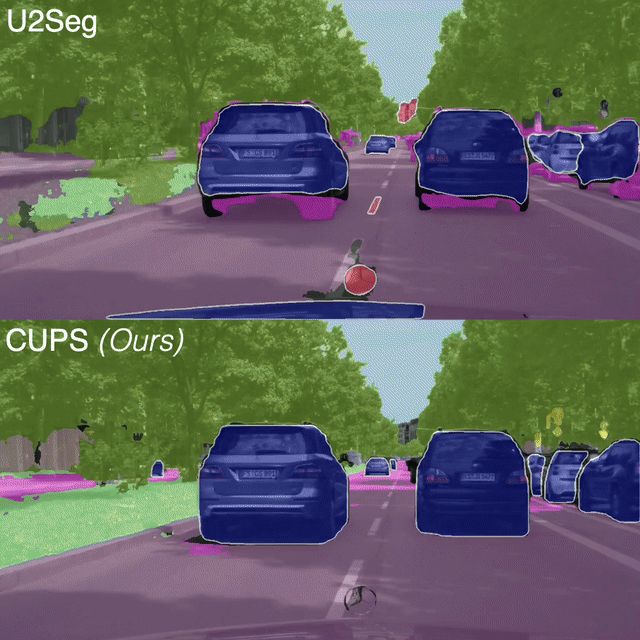

📢 #CVPR2025 Highlight: Scene-Centric Unsupervised Panoptic Segmentation 🔥

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

04.04.2025 13:38 — 👍 22 🔁 6 💬 1 📌 2

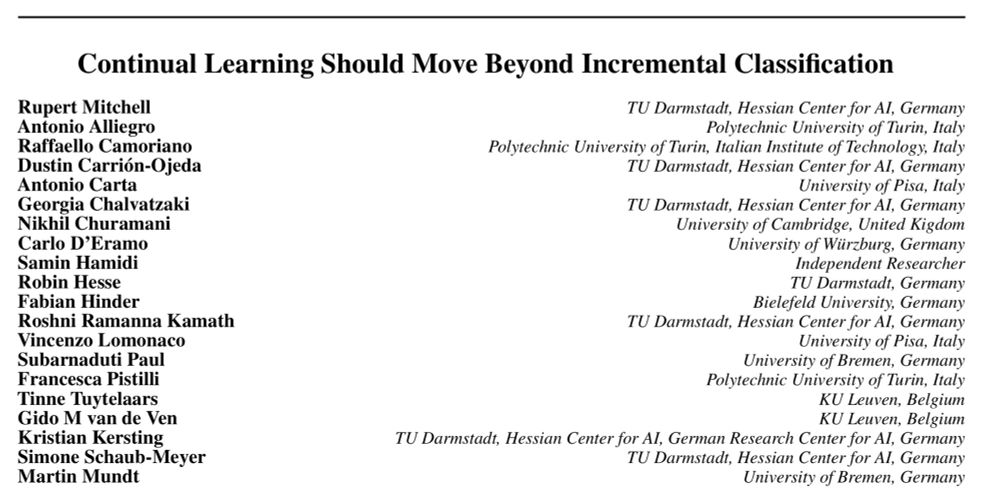

Why has continual ML not had its breakthrough yet?

In our new collaborative paper w/ many amazing authors, we argue that “Continual Learning Should Move Beyond Incremental Classification”!

We highlight 5 examples to show where CL algos can fail & pinpoint 3 key challenges

arxiv.org/abs/2502.11927

18.02.2025 13:33 — 👍 10 🔁 3 💬 0 📌 0

🏔️⛷️ Looking back on a fantastic week full of talks, research discussions, and skiing in the Austrian mountains!

31.01.2025 19:38 — 👍 32 🔁 11 💬 0 📌 0

Excited to share that today our paper recommender platform www.scholar-inbox.com has reached 20k users! We hope to reach 100k by the end of the year. Lots of new features are in the works and will be rolled out soon.

15.01.2025 22:03 — 👍 190 🔁 26 💬 12 📌 8

YouTube video by Technische Universität Darmstadt

Understanding what AI models can and cannot do: an interview with RAI researcher Dr. Simone Schaub-Meyer

Understanding what AI models can do, and what they cannot: an interview with @simoneschaub.bsky.social, early-career researcher in the cluster project "RAI" (Reasonable Artificial Intelligence).

"RAI" is one of the projects with which TUDa is applying for a Cluster of Excellence.

www.youtube.com/watch?v=2VAm...

13.01.2025 12:18 — 👍 14 🔁 3 💬 0 📌 0

Hi Julian, just joined bluesky, I am working on XAI in Computer Vision, would be great to be added to the list as well, thanks

08.01.2025 15:41 — 👍 1 🔁 0 💬 1 📌 0

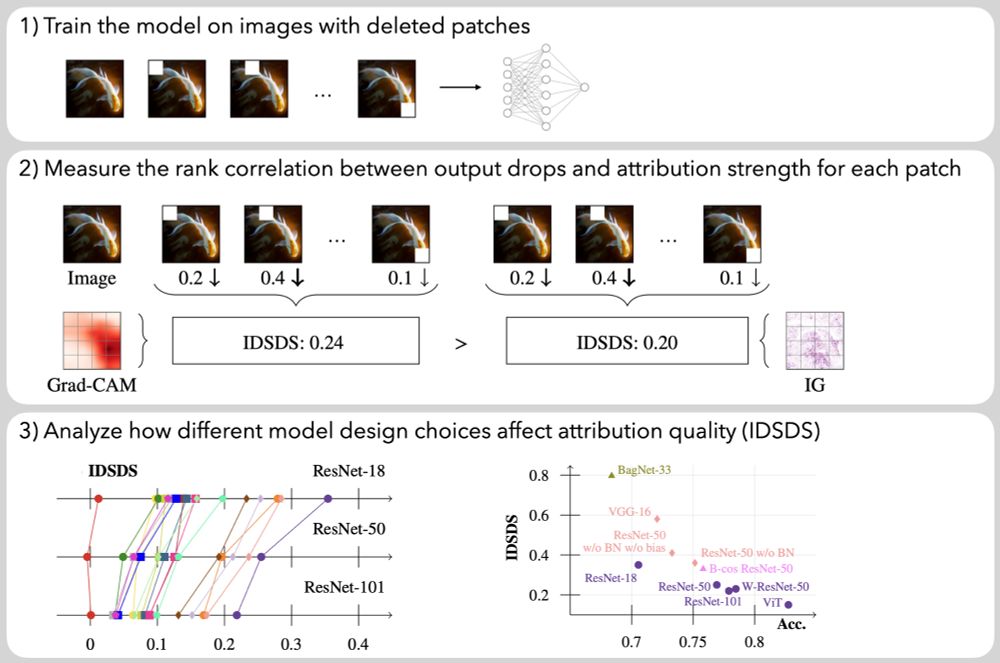

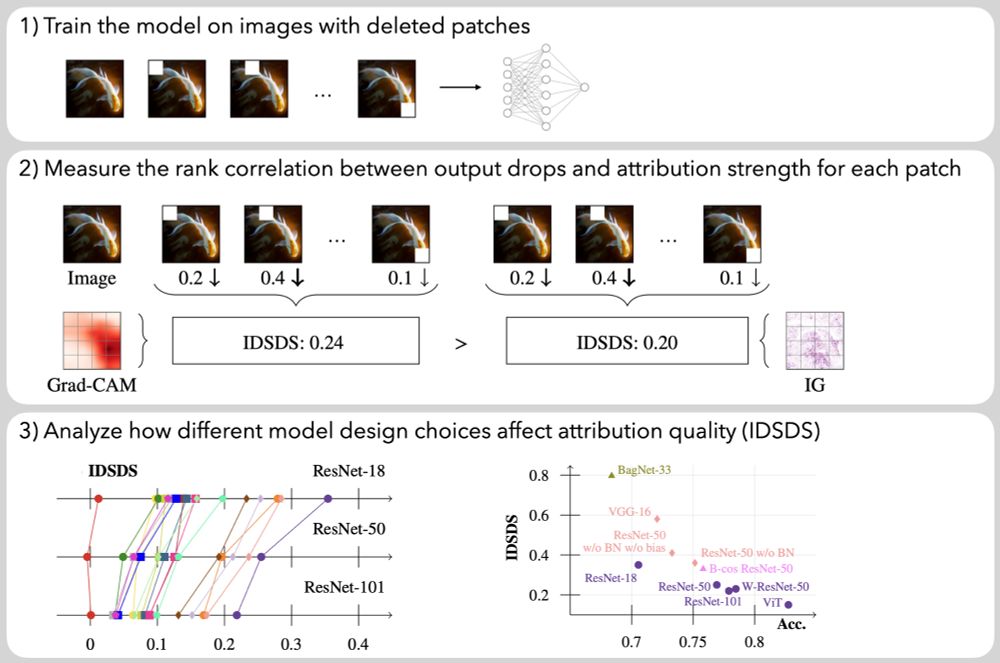

Want to learn about how model design choices affect the attribution quality of vision models? Visit our #NeurIPS2024 poster on Friday afternoon (East Exhibition Hall A-C #2910)!

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds

13.12.2024 10:10 — 👍 21 🔁 7 💬 1 📌 1

Our work, "Boosting Unsupervised Semantic Segmentation with Principal Mask Proposals" is accepted at TMLR! 🎉

visinf.github.io/primaps/

PriMaPs generate masks from self-supervised features, which makes it possible to boost unsupervised semantic segmentation via stochastic EM.

28.11.2024 17:41 — 👍 17 🔁 7 💬 1 📌 0

I am passionate about artificial intelligence. Currently, I am researching AI in the field of medical imaging. My vision is to advance AI algorithms to assist physicians, improve medical diagnosis, and help patients.

International research group at @uniuef focusing on AI and RL. Topics include deepfake detection, LLMs, RecSystems, superalignment, speaker recognition, and multi-agent RL. Advancing trustworthy, human-aligned AI. More info coming soon!

Explainable AI research from the machine learning group of Prof. Klaus-Robert Müller at @tuberlin.bsky.social & @bifold.berlin

Groundbreaking foundational research in Big Data Management, Machine Learning, and their intersection. #AI #Research

www.bifold.berlin

📰News: www.bifold.berlin/news-events/news

🔑Data Privacy: www.bifold.berlin/data-privacy

PhD Student for Unsupervised Segmentation & Representation Learning @ Ulm University 👁️👨💻

https://leonsick.github.io

PhD student in Interpretable Machine Learning at @tuberlin.bsky.social & @bifold.berlin

https://web.ml.tu-berlin.de/author/laura-kopf/

I am doing my PhD on physically based (differentiable) rendering and material appearance modeling/perception/capturing at @tsawallis.bsky.social's Perception Lab

I enjoy photography, animation/VFX, working on my renderer, languages and contributing to open source.

hessian.AI conducts cutting-edge AI research, provides computing infrastructure & services, supports start-up projects, ensures the transfer to business and society and thus strengthens the AI ecosystem in Hesse & beyond. https://hessian.ai/legal-notice

PhD candidate - Centre for Cognitive Science at TU Darmstadt, explanations for AI, sequential decision-making, problem solving

PhD student in computer vision at Imagine, ENPC

Senior Lecturer @QUT Centre for Robotics & ARC DECRA Fellow. Blending neuroscience and robotics for robot localisation & underwater perception.

PhD Student at the Max Planck Institute for Informatics @cvml.mpi-inf.mpg.de @maxplanck.de | Explainable AI, Computer Vision, Neuroexplicit Models

Web: sukrutrao.github.io

Post-Doctoral Researcher at @eml-munich.bsky.social, in @www.helmholtz-munich.de and @tumuenchen.bsky.social.

Optimal Transport, Explainability, Robustness, Deep Representation Learning, Computer Vision.

https://qbouniot.github.io/

Principal Scientist at Naver Labs Europe, Lead of Spatial AI team. AI for Robotics, Computer Vision, Machine Learning. Austrian in France. https://chriswolfvision.github.io/www/

The German Conference on Pattern Recognition (GCPR) is the annual symposium of the German Association for Pattern Recognition (DAGM). It is the national venue for recent advances in image processing, pattern recognition, and computer vision.

The goal of our research is to protect digital cities from disasters. To this end, we develop resilient infrastructures that save lives.

Cybersecurity and privacy

https://www.sit.fraunhofer.de/de/impressum/

https://www.sit.fraunhofer.de/datenschutzerklaerung/

PhD student at the University of Tuebingen. Computer vision, video understanding, multimodal learning.

https://ninatu.github.io/

We are sqIRL (squirrel), the Interpretable Representation Learning Lab based at IDLab - University of Antwerp & imec.

Research Areas: #RepresentationLearning, #Interpretability, #explainability

#ML #AI #XAI #mechinterp

Website: https://sqirllab.github.io/