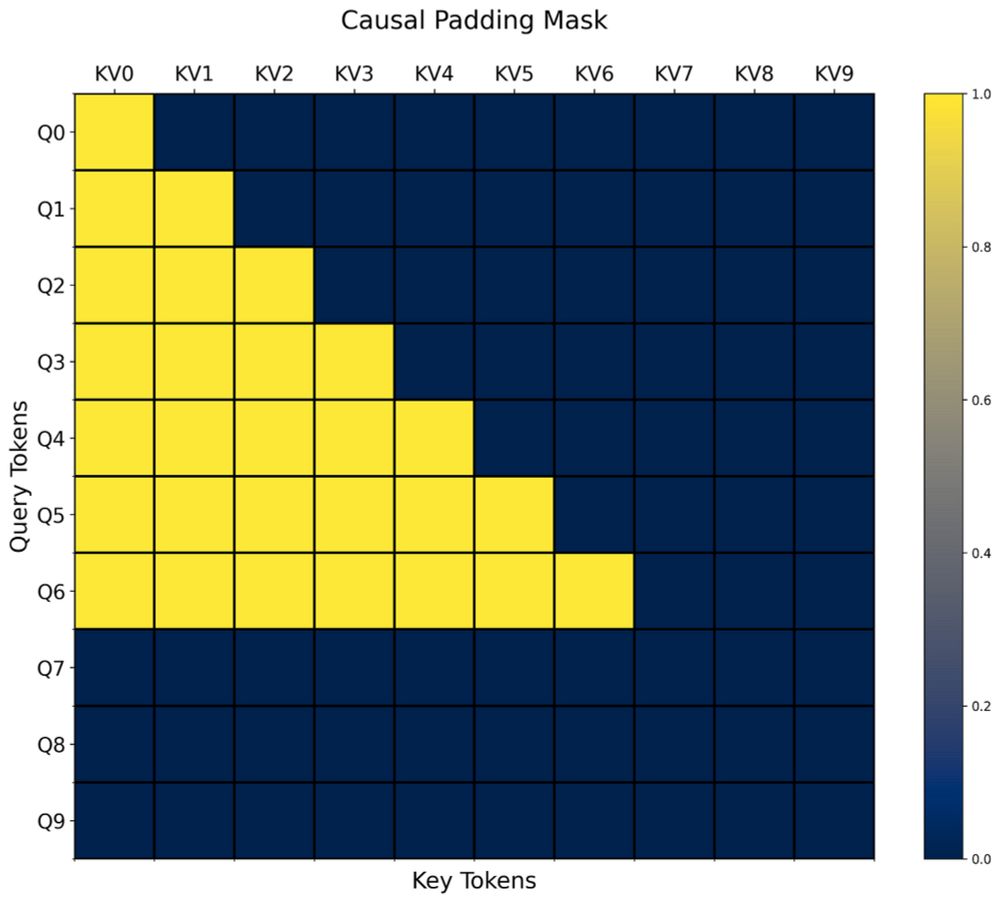

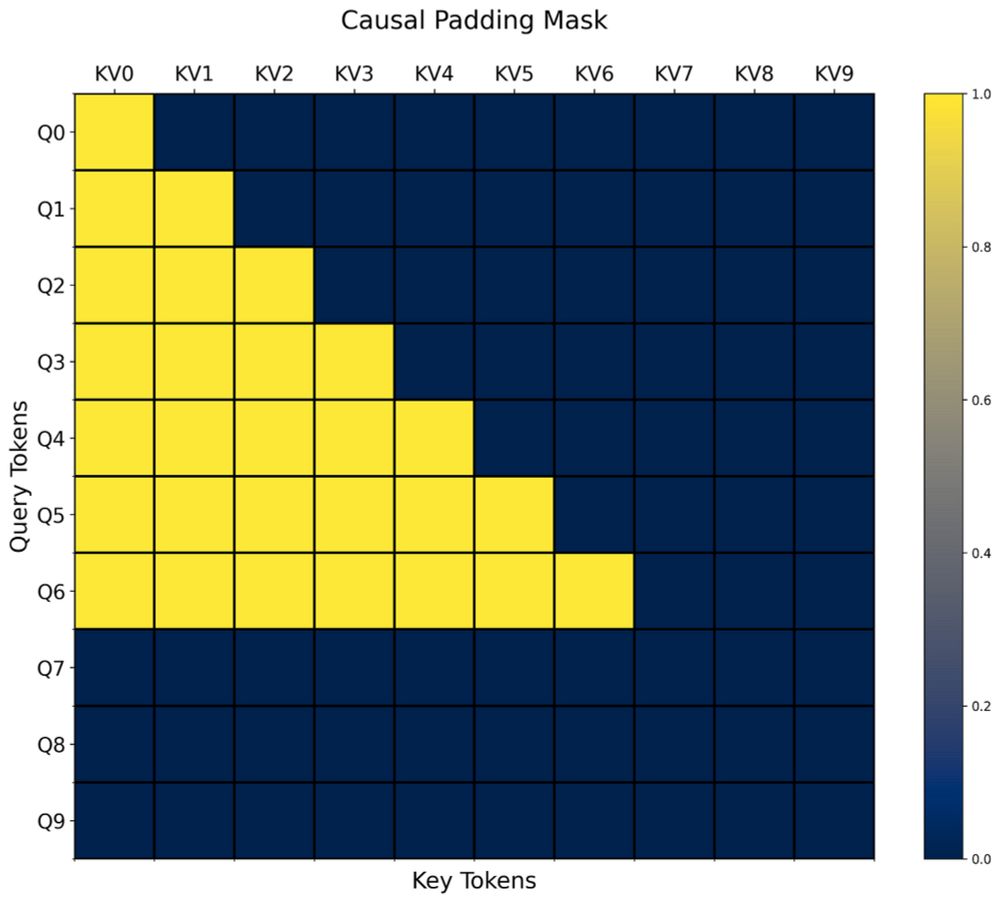

I couldn't find anything online showing how to use the new FlexAttention methods from torch>=2.5 to handle causal attention masking of padded inputs.

So I made a tutorial...

github.com/lucasmgomez/...

medium.com/@lucasmgomez...

@lucasmgomez.bsky.social

🌟 New Research Alert! 🌟

Excited to share our latest work (accepted to NeurIPS 2024) with @takuito.bsky.social and @bashivan.bsky.social on understanding working memory in multi-task RNN models using naturalistic stimuli!

#tweeprint below:

1/ I work in #NeuroAI, a growing field of research, which many people have only the haziest conception of...

By way of introduction to this research approach, here is a very short thread outlining the definition of the field I gave recently at our BRAIN NeuroAI workshop at the NIH.

🧠📈