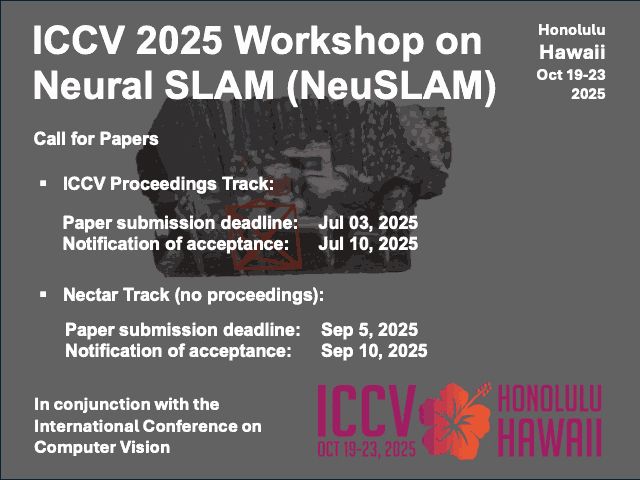

Your #ICCV2025 paper got rejected? Give it another try and submit to our proceedings track!

Your #ICCV2025 paper got accepted? Congrats! Give it even more visibility by joining our nectar track.

More info: sites.google.com/view/neuslam...

@dimtzionas.bsky.social

Assistant Professor for 3D Computer Vision at the University of Amsterdam. 3D Human-centric Perception & Synthesis: bodies, hands, objects. Past: MPI for Intelligent Systems, Univ. of Bonn, Aristotle Univ. of Thessaloniki. Website: https://dtzionas.com

Your #ICCV2025 paper got rejected? Give it another try and submit to our proceedings track!

Your #ICCV2025 paper got accepted? Congrats! Give it even more visibility by joining our nectar track.

More info: sites.google.com/view/neuslam...

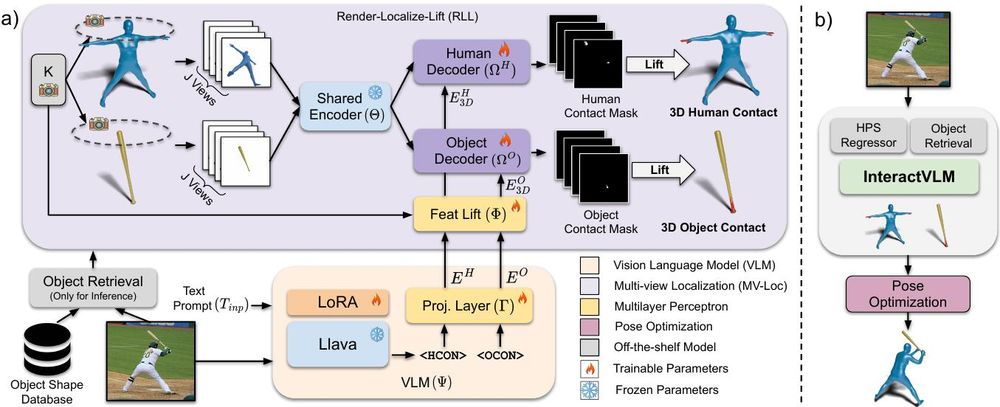

Why does 3D human-object reconstruction fail in the wild, or remain limited to a few object classes? A key missing piece is accurate 3D contact. InteractVLM (#CVPR2025) uses foundational models to infer contact on both humans & objects, improving reconstruction from a single image. (1/10)

15.06.2025 12:23

𝗜𝗻𝘁𝗲𝗿𝗮𝗰𝘁𝗩𝗟𝗠: 𝟯𝗗 𝗜𝗻𝘁𝗲𝗿𝗮𝗰𝘁𝗶𝗼𝗻 𝗥𝗲𝗮𝘀𝗼𝗻𝗶𝗻𝗴 𝗳𝗿𝗼𝗺 𝟮𝗗 𝗙𝗼𝘂𝗻𝗱𝗮𝘁𝗶𝗼𝗻𝗮𝗹 𝗠𝗼𝗱𝗲𝗹𝘀

Sai Kumar Dwivedi, Dimitrije Antić, Shashank Tripathi ... Dimitrios Tzionas

arxiv.org/abs/2504.05303

Trending on www.scholar-inbox.com

If you are at #3DV2025 @3dvconf.bsky.social today, George and Omid will be presenting our work on '3D Whole-Body Grasps with Directional Controllability'. Pass by to discuss human avatars and interactions! 😃

👉 Poster 6-15

👉 Local time 15:30 - 17:00

👉 Website: gpaschalidis.github.io/cwgrasp/

CWGrasp will be presented @3dvconf.bsky.social #3DV2025

Authors: G. Paschalidis, R. Wilschut, D. Antić, O. Taheri, D. Tzionas

Collaboration: University of Amsterdam, MPI for Intelligent Systems

Project: gpaschalidis.github.io/cwgrasp

Paper: arxiv.org/abs/2408.16770

Code: github.com/gpaschalidis...

🧵 10/10

🧩 Our code is modular - each model has its own repo.

You can easily integrate these into your code & build new research!

🧩 CGrasp: github.com/gpaschalidi...

🧩 CReach: github.com/gpaschalidi...

🧩 ReachingField: github.com/gpaschalidi...

🧩 CWGrasp: github.com/gpaschalidi...

🧵 9/10

⚙️ CWGrasp:

👉 requires 500x fewer samples & runs 10x faster than SotA,

👉 produces grasps that are perceived as more realistic than SotA ~70% of the time,

👉 works well for objects placed at various "heights" from the floor,

👉 generates both right- & left-hand grasps.

🧵 8/10

👉 We condition both CGrasp & CReach on the same direction.

👉 This produces a hand-only guiding grasp & a reaching body that are already mutually compatible!

🎯 Thus, we need to conduct a *small* refinement *only* for the body so that its fingers match the guiding hand!

🧵 7/10

⚙️ CGrasp & CReach: generate a hand-only grasp & a reaching body, respectively, with varied poses, by sampling their latent spaces.

👉 Importantly, the palm & arm directions follow a desired 3D direction vector given as a condition!

👉 This direction is sampled from ⚙️ ReachingField!

🧵 6/10

⚙️ ReachingField: a probabilistic 3D ray field encoding the directions from which a body's arm & hand are likely to reach an object without penetration.

👉 Objects near the ground are likely grasped from high above

👉 Objects high above the ground are likely grasped from below

🧵 5/10
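A toy sketch of the ReachingField intuition above, under loudly stated assumptions: this is NOT the CWGrasp implementation. Here candidate approach directions are spread over the unit sphere, each gets an unnormalized weight favoring "from above" for low objects and "from below" for high ones, and directions flagged as penetrating (e.g. through the receptacle) get zero weight. The names `reach_weights` and `sample_reach_direction`, the `blocked` callback, and the constants `kappa`, `mid_height` (1.0 m), and `scale` are all hypothetical choices made for illustration.

```python
import math
import random
from typing import Callable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z), z pointing up


def fibonacci_sphere(n: int) -> List[Vec3]:
    """Roughly uniform unit vectors on the sphere (golden-angle spiral)."""
    golden = math.pi * (3.0 - math.sqrt(5.0))
    dirs = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(1.0 - z * z)
        dirs.append((r * math.cos(golden * i), r * math.sin(golden * i), z))
    return dirs


def reach_weights(object_height: float, dirs: List[Vec3],
                  blocked: Optional[Callable[[Vec3], bool]] = None,
                  kappa: float = 4.0, mid_height: float = 1.0,
                  scale: float = 0.4) -> List[float]:
    """Hypothetical unnormalized weight per approach direction d.

    d is the direction the hand moves along towards the object.
    s < 0 for low objects -> favors d_z < 0 (hand comes from above);
    s > 0 for high objects -> favors d_z > 0 (hand comes from below).
    Directions flagged by `blocked` (assumed penetrating) get weight 0.
    """
    s = math.tanh((object_height - mid_height) / scale)
    return [0.0 if blocked is not None and blocked(d) else math.exp(kappa * s * d[2])
            for d in dirs]


def sample_reach_direction(object_height: float, n_candidates: int = 1000,
                           blocked: Optional[Callable[[Vec3], bool]] = None,
                           rng: Optional[random.Random] = None) -> Vec3:
    """Draw one approach direction in proportion to the toy weights."""
    rng = rng or random.Random()
    dirs = fibonacci_sphere(n_candidates)
    weights = reach_weights(object_height, dirs, blocked)
    if sum(weights) == 0.0:
        raise ValueError("every candidate direction is blocked")
    return rng.choices(dirs, weights=weights, k=1)[0]
```

For example, `sample_reach_direction(0.3, blocked=lambda d: d[2] > 0)` would mostly return downward-pointing directions for an object lying low on a table. In the spirit of the thread, a single sampled direction would then serve as the shared condition for both the hand-only and the body generator.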

💡 Our key idea is to perform local-scene reasoning *early on*, generating a guiding hand & body that are *already compatible*; thus *only* the body needs a *small* refinement to match the hand.

CWGrasp consists of three novel models:

👉 ReachingField,

👉 CGrasp,

👉 CReach.

🧵 4/10

🎯 We tackle this with CWGrasp in a divide-and-conquer way.

This is inspired by FLEX [Tendulkar et al.], which:

👉 generates a guiding hand-only grasp,

👉 generates many random bodies,

👉 post-processes the guiding hand to match the body, & the body to match the guiding hand.

🧵 3/10

This is challenging 🫣 because:

👉 the body needs to plausibly reach the object,

👉 fingers need to dexterously grasp the object,

👉 hand pose and object pose need to look compatible with each other, and

👉 training datasets for 3D whole-body grasps are really scarce.

🧵 2/10

📢 We present CWGrasp, a framework for generating 3D Whole-body Grasps with Directional Controllability 🎉

Specifically:

👉 given an object to grasp (shown in red) placed on a receptacle (brown),

👉 we aim to generate a body (gray) that grasps the object.

🧵 1/10

📢 Short deadline extension (24/2) -- One more week left to submit your application!

16.02.2025 22:42

📢 I am #hiring 2x #PhD candidates to work on Human-centric #3D #ComputerVision at the University of #Amsterdam!

The positions are funded by an #ERC #StartingGrant.

For details and to submit your application, please see:

werkenbij.uva.nl/en/vacancies...

🆘 Deadline: Feb 16 🆘

Pls RT

Permanent Assistant Professor (Lecturer) position in Computer Vision @bristoluni.bsky.social [DL 6 Jan 2025]

This is a research+teaching permanent post within MaVi group uob-mavi.github.io in Computer Science. Suitable for strong postdocs or exceptional PhD graduates.

t.co/k7sRRyfx9o

1/2

If you are at #BMVC2024, I will give a talk (remotely) at the ANIMA 2024 interdisciplinary workshop on "non-invasive human motion characterization".

👉 Room M2. Thursday, from 14:00 onward (UK time).

👉 Talk title: "Towards 3D Perception and Synthesis of Humans in Interaction".