++ Major News ++

AABI is now ProbML: the Symposium on Probabilistic Machine Learning! Very excited about this!

ProbML will be co-located with ICML in Seoul!

Check out our new website: probml.cc!

10.02.2026 14:30 — 👍 6 🔁 1 💬 0 📌 0

📢 The Information Society Project (@yaleisp.bsky.social) at Yale Law School is recruiting a new batch of *Resident Fellows*!

It's a great community and a good opportunity for anyone interested in the intersection of *AI governance and the law*.

Deadline: Dec 31

Apply: law.yale.edu/isp/join-us#...

16.10.2025 15:42 — 👍 3 🔁 0 💬 0 📌 0

YouTube video by Lawfare

The Ivory Tower and AI (Live from IHS's Technology, Liberalism, and Abundance Conference)

Today's Lawfare Daily is a @scalinglaws.bsky.social episode, produced with @utexaslaw.bsky.social, where @kevintfrazier.bsky.social spoke to @gushurwitz.bsky.social and @neilchilson.bsky.social about how academics can positively contribute to the work associated with AI governance.

01.10.2025 13:39 — 👍 6 🔁 3 💬 0 📌 0

Beautiful paper!

01.10.2025 12:39 — 👍 1 🔁 0 💬 0 📌 0

It was a pleasure speaking at @yaleisp.bsky.social yesterday!

26.09.2025 10:50 — 👍 2 🔁 0 💬 0 📌 0

Tomorrow’s ISP Ideas Lunch update:

We’re excited to host @timrudner.bsky.social (U. Toronto & Vector Institute). He’ll speak on “formal guarantees” in AI + key AI safety concepts!

25.09.2025 01:53 — 👍 1 🔁 1 💬 1 📌 0

I'm thrilled to join the Schwartz Reisman Institute for Technology and Society as a Faculty Affiliate!

16.08.2025 16:14 — 👍 4 🔁 0 💬 0 📌 0

Congrats! CDS PhD Student Vlad Sobal, Courant PhD Student Kevin Zhang, CDS Faculty Fellow @timrudner.bsky.social, CDS Profs @kyunghyuncho.bsky.social and @yann-lecun.bsky.social, and Brown's Randall Balestriero won the Best Paper Award at ICML's 'Building Physically Plausible World Models' Workshop!

12.08.2025 16:12 — 👍 1 🔁 1 💬 1 📌 0

Congratulations again!

14.07.2025 17:13 — 👍 1 🔁 0 💬 0 📌 0

Congratulations Umang!

15.05.2025 16:26 — 👍 1 🔁 0 💬 0 📌 0

AI in Military Decision Support: Balancing Capabilities with Risk

CDS Faculty Fellow Tim G. J. Rudner and colleagues at CSET outline responsible practices for deploying AI in military decision-making.

CDS Faculty Fellow Tim G. J. Rudner (@timrudner.bsky.social) and colleagues at CSET — @emmyprobasco.bsky.social, @hlntnr.bsky.social, and Matthew Burtell — examine responsible AI deployment in military decision-making.

Read our post on their policy brief: nyudatascience.medium.com/ai-in-milita...

14.05.2025 19:23 — 👍 2 🔁 1 💬 0 📌 0

The result in this paper I'm most excited about:

We showed that planning in world model latent space allows successful zero-shot generalization to *new* tasks!

Project website: latent-planning.github.io

Paper: arxiv.org/abs/2502.14819

07.05.2025 21:26 — 👍 7 🔁 0 💬 0 📌 0

#1: Can Transformers Learn Full Bayesian Inference In Context? with @arikreuter.bsky.social @timrudner.bsky.social @vincefort.bsky.social

01.05.2025 12:36 — 👍 6 🔁 1 💬 0 📌 0

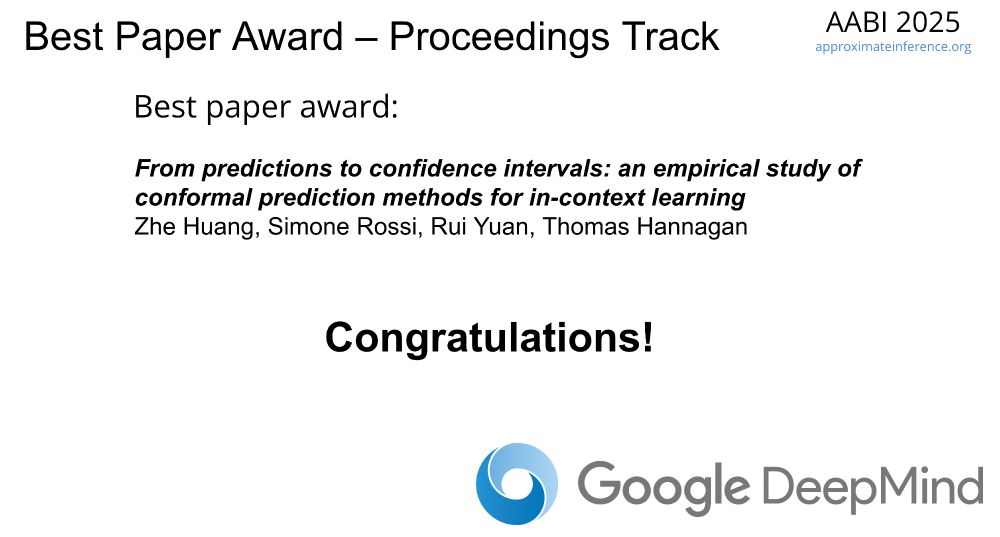

Very excited that our work (together with my PhD student @gbarto.bsky.social and our collaborator Dmitry Vetrov) was recognized with a Best Paper Award at #AABI2025!

#ML #SDE #Diffusion #GenAI 🤖🧠

30.04.2025 00:02 — 👍 19 🔁 2 💬 1 📌 0

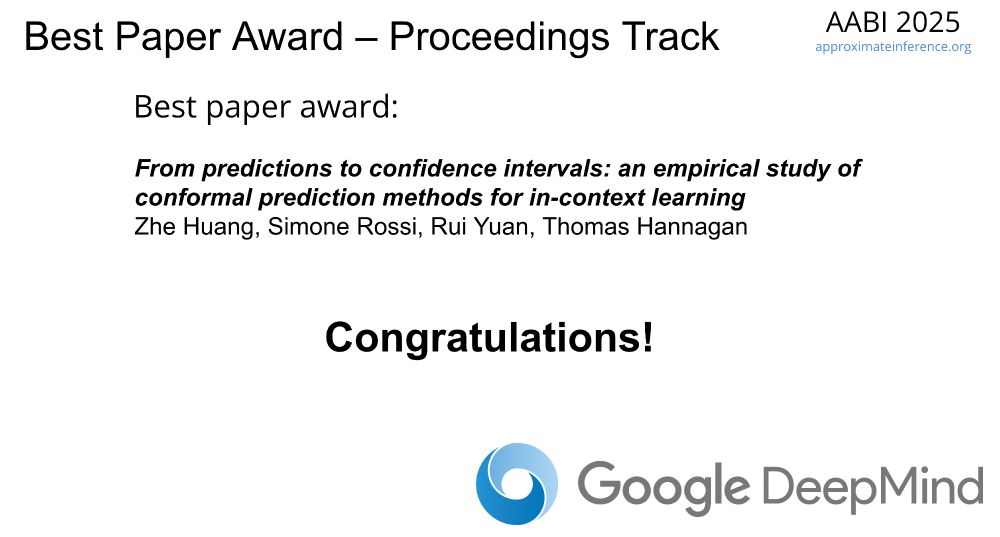

Congratulations to the #AABI2025 Proceedings Track Best Paper Award recipients!

29.04.2025 20:55 — 👍 10 🔁 1 💬 0 📌 0

Congratulations to the #AABI2025 Workshop Track Outstanding Paper Award recipients!

29.04.2025 20:54 — 👍 20 🔁 8 💬 0 📌 1

We concluded #AABI2025 with a panel discussion on

**The Role of Probabilistic Machine Learning in the Age of Foundation Models and Agentic AI**

Thanks to Emtiyaz Khan, Luhuan Wu, and @jamesrequeima.bsky.social for participating!

29.04.2025 20:49 — 👍 10 🔁 3 💬 1 📌 0

.@jamesrequeima.bsky.social gave the third invited talk of the day at #AABI2025!

**LLM Processes**

29.04.2025 20:41 — 👍 5 🔁 2 💬 0 📌 0

Luhuan Wu is giving the second invited talk of the day at #AABI2025!

**Bayesian Inference for Invariant Feature Discovery from Multi-Environment Data**

Watch it on our livestream: timrudner.com/aabi2025!

29.04.2025 04:02 — 👍 3 🔁 2 💬 0 📌 0

Emtiyaz Khan is giving the first invited talk of the day at #AABI2025!

29.04.2025 01:55 — 👍 7 🔁 2 💬 0 📌 0

We just kicked off #AABI2025 at NTU in Singapore!

We're livestreaming the talks here: timrudner.com/aabi2025!

Schedule: approximateinference.org/schedule/

#ICLR2025 #ProbabilisticML

29.04.2025 01:47 — 👍 10 🔁 4 💬 0 📌 1

AABI 2025 · Luma

7th Symposium on Advances in Approximate Bayesian Inference (AABI)

https://approximateinference.org/schedule

Make sure to get your tickets to #AABI2025 if you are in Singapore on April 29 (just after #ICLR2025) and interested in probabilistic ML, inference, and decision-making!

Tickets (free but limited!): lu.ma/5syzr79m

More info: approximateinference.org

#ProbabilisticML #Bayes #UQ #ICLR2025 #AABI2025

18.04.2025 03:42 — 👍 6 🔁 2 💬 0 📌 0

Make sure to get your tickets to AABI if you are in Singapore on April 29 (just after #ICLR2025) and interested in probabilistic modeling, inference, and decision-making!

Tickets (free but limited!): lu.ma/5syzr79m

More info: approximateinference.org

#Bayes #MachineLearning #ICLR2025 #AABI2025

13.04.2025 07:43 — 👍 17 🔁 8 💬 0 📌 1

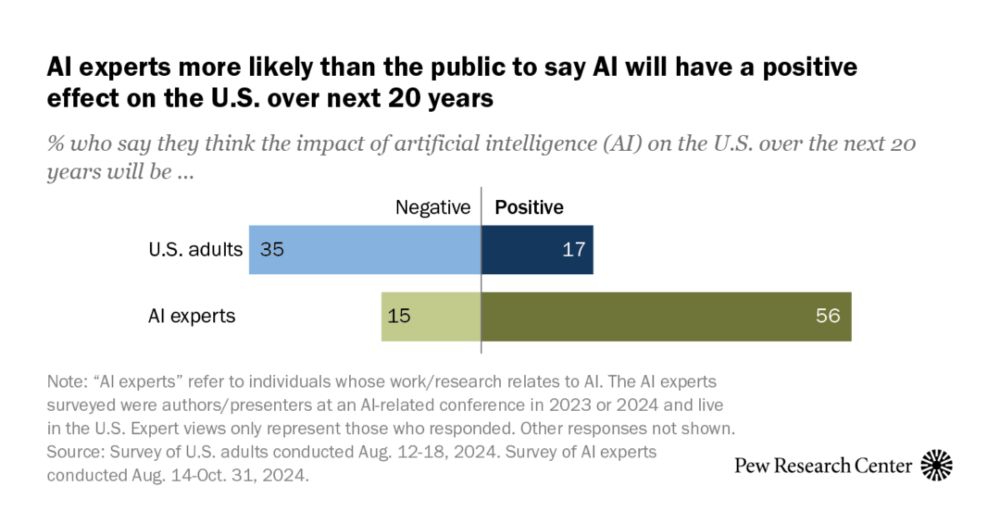

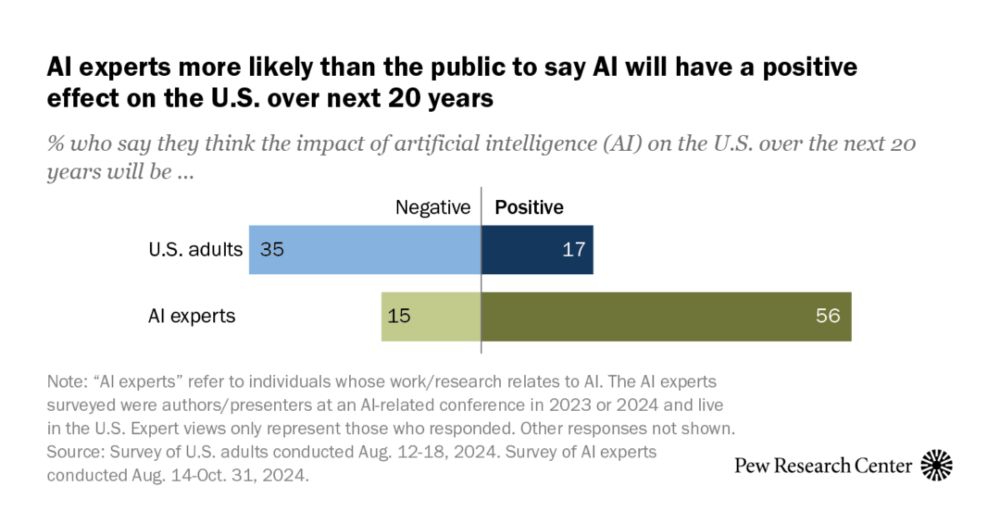

How the U.S. Public and AI Experts View Artificial Intelligence

These groups are far apart in their enthusiasm and predictions for AI, but both want more personal control and worry about too little regulation.

A great Pew Research survey:

"How the U.S. Public and AI Experts View Artificial Intelligence"

Everyone working in ML should read this and ask themselves why experts and non-experts have such divergent views about the potential of AI to have a positive impact.

www.pewresearch.org/internet/202...

05.04.2025 21:28 — 👍 10 🔁 3 💬 2 📌 1

This is an excellent article!

Steering foundation models towards trustworthy behaviors is one of the most important research directions today.

Helen is a deep and rigorous thinker, and you should definitely subscribe to her Substack!

04.04.2025 01:42 — 👍 4 🔁 1 💬 0 📌 0

I'm super excited to see our #CSET report on **AI-enabled military decision support systems** being released today!

Great work by @emmyprobasco.bsky.social, @hlntnr.bsky.social, and Matthew Burtell!

01.04.2025 15:32 — 👍 0 🔁 1 💬 0 📌 0

Symposium on Probabilistic Machine Learning (ProbML), formerly known as Symposium on Advances in Approximate Bayesian Inference (AABI).

🔗 https://probml.cc

Principal Scientist at Naver Labs Europe, Lead of Spatial AI team. AI for Robotics, Computer Vision, Machine Learning. Austrian in France. https://chriswolfvision.github.io/www/

Research Scientist at Apple for uncertainty quantification.

ORISE Intelligence Community Postdoc @Harvard || agency, affect, & uncertainty in learning, decision-making, & computational psychiatry || www.hayleydorfman.com

I'm the Guardian's deputy political editor in Westminster

Story? Email - jessica.elgot@theguardian.com

Signal - jessicaelgot.51

https://www.theguardian.com/profile/jessica-elgot

The AI policy podcast brought to you by The University of Texas School of Law and Lawfare. Join hosts Kevin Frazier and Alan Rozenshtein for deep dives into the AI weeds.

The Information Society Project at Yale Law School is an intellectual center addressing issues at the intersection of law, tech & society. https://law.yale.edu/isp/community

Researcher on MDPs and RL. Retired prof. #orms #rl

The real jbouie. Columnist for the New York Times Opinion section. Co-host of the Unclear and Present Danger podcast. b-boy-bouiebaisse on TikTok. jbouienyt on Twitch. National program director of the CHUM Group.

Send me your mutual aid requests.

Incoming Assistant Professor at Johns Hopkins University | RAP at Toyota Technological Institute at Chicago | web: https://anandbhattad.github.io/ | Knowledge in Generative Image Models, Intrinsic Images, Image-based Relighting, Inverse Graphics

CEO of Bluesky, steward of AT Protocol.

dec/acc 🌱 🪴 🌳

Senior Fellow at the American Immigration Council. Commenting generally on immigration law and policy. Retweets =/= endorsements, views are my own.

Zoe and Samson’s mom

Former New York State Assemblymember 🐺Former Congressional Candidate for NY-10

loves doggos 🐕

(personal account)

she/her/hers

Activist, Researcher, Professor at @ucm.es Madrid, faculty associate at Berkman Klein Center at @Harvard.edu.

Lead EU ERC project P2P Models and others.

#commons #socialcomputing #blockchain #decentralization

he/they 🍉

https://samer.hassan.name

Fighting for Truth & Justice | Ranking Member: @robertgarcia.house.gov

Associate Professor of Computer Science and Psychology @ Princeton. Posts are my views only. https://www.cs.princeton.edu/~bl8144/

Powered by American Family Insurance

The Other One. Crooked Media, Pod Save America, Obama alum.

Prof at the University of British Columbia. Research in statistics, ML, and AI for science. Views are my own. https://charlesm93.github.io./