Confirmed keynote speakers for the Greeks 🇬🇷 in #AI 2025 Symposium in Athens, Greece 🤗

@alexdimakis.bsky.social @manoliskellis.bsky.social

www.greeksin.ai

11.04.2025 19:10 — 👍 10 🔁 1 💬 0 📌 1

Excited to be part of Greeksin.ai

13.04.2025 07:30 — 👍 4 🔁 1 💬 0 📌 0

GitHub - open-thoughts/open-thoughts: Fully open data curation for reasoning models

We are excited to release the OpenThinker2 reasoning models and data:

1. Outperforms DeepSeek-R1-32B in reasoning.

2. Fully open source, open weights and open data (1M samples).

3. Post-trained only with SFT. RL post-training will likely further improve performance.

github.com/open-thought...

03.04.2025 17:28 — 👍 11 🔁 3 💬 0 📌 0

Please let us know what you find out.

16.02.2025 09:01 — 👍 4 🔁 0 💬 0 📌 0

Yes, we have been thinking of doing this. DCLM 7B is a fully open model (its full pre-training data and code are open), and we could post-train it with the Open Thoughts data.

15.02.2025 04:15 — 👍 3 🔁 0 💬 1 📌 0

Performance of the best-known reasoning models on various benchmarks. OpenThinker-32B matches the current state of the art.

We are releasing OpenThinker-32B, the best 32B reasoning model with open data. We match or outperform DeepSeek-R1-32B (a closed-data model) on reasoning benchmarks. Congrats to Negin and the whole Open Thoughts team.

github.com/open-thought...

12.02.2025 20:11 — 👍 25 🔁 8 💬 2 📌 1

Congratulations, Constantine, on this big effort to support Greek students.

29.01.2025 19:11 — 👍 1 🔁 0 💬 1 📌 0

For the 17K traces used in Berkeley's Sky-T1, I joked that you could take a 1,000-student Berkeley course and give 17 homework problems to each student. On a more serious note, I think universities are a great way to collect such data.

29.01.2025 09:00 — 👍 1 🔁 0 💬 1 📌 0

Our repo: github.com/open-thought...

Open code, open reasoning data (114K and growing), open-weight models.

Please let us know if you want to participate in the Open Thoughts community effort. (2/n)

www.openthoughts.ai

28.01.2025 18:23 — 👍 3 🔁 1 💬 0 📌 0

What if we had the data that DeepSeek-R1 was post-trained on?

We announce Open Thoughts, an effort to create such open reasoning datasets. Using our data, we trained OpenThinker-7B, an open-data model with performance very close to the DeepSeek-R1-7B distill. (1/n)

28.01.2025 18:23 — 👍 9 🔁 1 💬 1 📌 0

Thanks for featuring us, Nathan!

28.01.2025 03:12 — 👍 1 🔁 0 💬 0 📌 0

https://www.bespokelabs.ai/blog/bespoke-stratos-the-unreasonable-effectiveness-of-reasoning-distillation

We just did a crazy 48-hour sprint to create the best public reasoning dataset using Berkeley's Sky-T1, Curator, and DeepSeek-R1. We can get o1-preview-level reasoning on a 32B model with 48x less data than DeepSeek.

t.co/WO5UV2LZQM

22.01.2025 18:59 — 👍 6 🔁 2 💬 1 📌 0

Answer: another way to do it is to collect the data by teaching a 1,000-student class and assigning 17 homework problems per student. Side benefit: make $10M by charging $10K tuition.

14.01.2025 18:09 — 👍 4 🔁 0 💬 0 📌 0

The Berkeley Sky Computing Lab just trained an o1-level reasoning model, spending only $450 to create the instruction dataset. The data consists of 17K math and coding problems solved step by step; they created it by prompting QwQ. Q: Is this impossible without distilling a bigger model?

14.01.2025 18:09 — 👍 7 🔁 1 💬 1 📌 0

Creating small specialized models is currently hard. Evaluation, post-training data curation, and fine-tuning are tricky, and better tools are needed. Still, it's good to go back to the UNIX philosophy to inform our future architectures. (n/n)

08.01.2025 23:28 — 👍 4 🔁 0 💬 0 📌 0

This is related to "Textbooks Are All You Need", but for narrow jobs like summarization, legal QA, and so on, as opposed to general-purpose small models. Research shows how to use big models to post-train small models that are faster than their big teachers and outperform them on narrow tasks. (6/n)

08.01.2025 23:28 — 👍 1 🔁 0 💬 1 📌 0

I believe that the best way to engineer AI systems will be to use post-training to specialize small Llama models for narrowly focused jobs. 'Programming' specialized models can be done by building post-training datasets from internal data, by prompting foundation models and distilling. (5/n)

08.01.2025 23:28 — 👍 1 🔁 0 💬 1 📌 0
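A minimal sketch of the "programming by dataset" idea in the post above, assuming a hypothetical `teacher` callable that stands in for a prompted foundation model. The prompt template, the `prompt`/`completion` record fields, and the JSONL output are illustrative choices, not a description of any specific pipeline; the resulting file would then be used for supervised fine-tuning of a small model.

```python
import json
from typing import Callable, Iterable

# Hypothetical stand-in for a call to a large foundation model (the "teacher").
Teacher = Callable[[str], str]

# Illustrative narrow task; a real pipeline would define its own template.
PROMPT_TEMPLATE = "Summarize the following document in one sentence:\n\n{doc}"

def build_sft_dataset(docs: Iterable[str], teacher: Teacher) -> list[dict]:
    """Turn internal documents into (prompt, completion) pairs by querying the teacher."""
    records = []
    for doc in docs:
        prompt = PROMPT_TEMPLATE.format(doc=doc)
        completion = teacher(prompt)
        if completion.strip():  # drop empty or whitespace-only teacher outputs
            records.append({"prompt": prompt, "completion": completion})
    return records

def to_jsonl(records: list[dict]) -> str:
    """Serialize records as one JSON object per line, a common layout for SFT data."""
    return "\n".join(json.dumps(r) for r in records)

if __name__ == "__main__":
    def fake_teacher(prompt: str) -> str:
        # Toy teacher that returns the document's first line; swap in a real model call.
        doc = prompt.split("\n\n", 1)[1]
        return doc.splitlines()[0] if doc else ""

    data = build_sft_dataset(["Q3 revenue grew 12%.\nDetails follow.", ""], fake_teacher)
    print(to_jsonl(data))
```

The filter step is where curation would grow in practice (deduplication, answer verification, and so on); it is kept to an empty-output check here.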

Instead, I would like to make the case for Small Specialized Models following Unix philosophy:

1. Write programs that do one thing and do it well

2. Write programs to work together

3. Write programs to handle text streams, because that is a universal interface.

Now replace "programs" with "AI models". (4/n)

08.01.2025 23:28 — 👍 3 🔁 0 💬 1 📌 0
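To make the analogy concrete, here is a minimal sketch in which each stage is a plain text-in, text-out function composed like a Unix pipe. The stages `normalize`, `summarize`, and `tag_intent` are hypothetical toy stand-ins for small specialized models, not real models or APIs.

```python
from functools import reduce
from typing import Callable

# Each stage maps text to text: the "universal interface" from rule 3.
Stage = Callable[[str], str]

def normalize(text: str) -> str:
    """Does one thing: collapse runs of whitespace."""
    return " ".join(text.split())

def summarize(text: str) -> str:
    """Toy 'summarizer' (hypothetical): keep only the first sentence."""
    first, _, _ = text.partition(". ")
    return first.rstrip(".") + "."

def tag_intent(text: str) -> str:
    """Toy 'intent detector' (hypothetical): prefix a label chosen by keyword."""
    label = "REFUND" if "refund" in text.lower() else "OTHER"
    return f"[{label}] {text}"

def pipe(*stages: Stage) -> Stage:
    """Compose stages left to right, like `a | b | c` in a Unix shell."""
    return lambda text: reduce(lambda acc, stage: stage(acc), stages, text)

if __name__ == "__main__":
    pipeline = pipe(normalize, summarize, tag_intent)
    print(pipeline("I want a   refund for order 42. The box arrived damaged."))
    # prints "[REFUND] I want a refund for order 42."
```

Because every stage shares the same interface, any one of them could be swapped for a small fine-tuned model and tested in isolation without touching the others.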

Monolithic AI systems are also extremely wasteful in terms of energy and cost: using GPT-4o as a summarizer, fact checker, or user-intent detector reminds me of the early days of the big data wave, when people were spinning up Hadoop clusters to process 1GB of data. (3/n)

08.01.2025 23:28 — 👍 1 🔁 0 💬 1 📌 0

This is not working very well. This monolithic view of AI contrasts with how we teach engineers to build systems. To build complex systems, engineers create modular components. This makes systems reliable and helps teams coordinate through specs that are easy to explain, engineer, and evaluate. (2/n)

08.01.2025 23:28 — 👍 1 🔁 0 💬 1 📌 0

AI monoliths vs Unix Philosophy:

The case for small specialized AI models.

The current thinking is that AGI is coming and that one gigantic model will be able to solve everything. Current agents are mostly prompts on one big model, and prompt engineering is used to execute complex processes. (1/n)

08.01.2025 23:28 — 👍 9 🔁 2 💬 1 📌 0

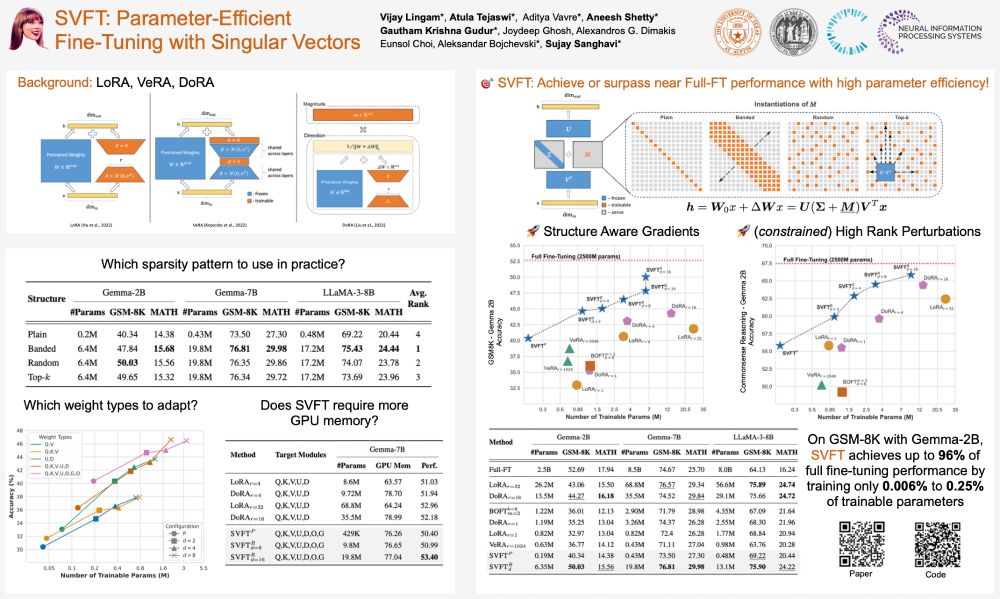

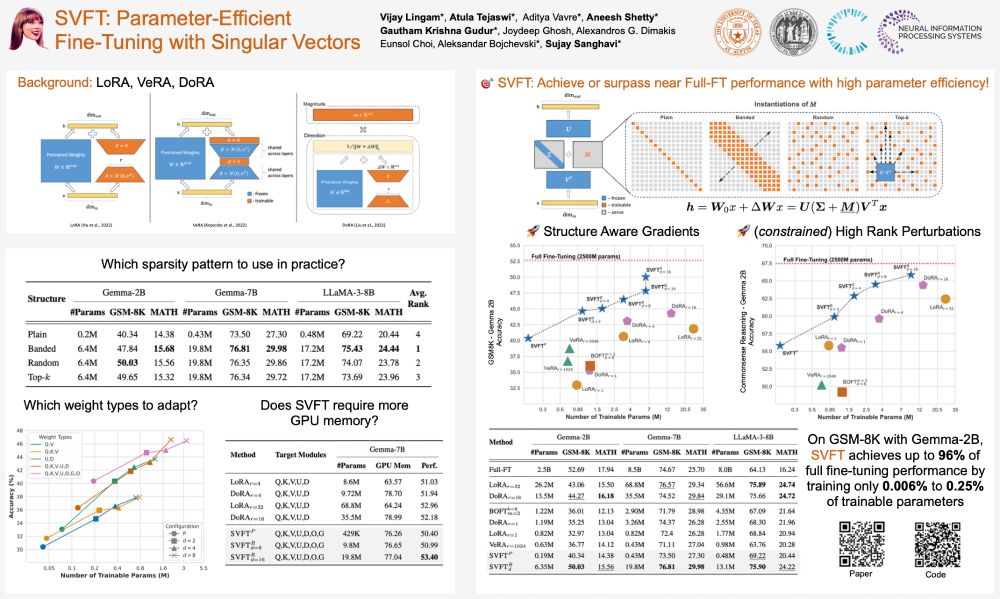

Missed out on #Swift tickets? No worries—swing by our #SVFT poster at #NeurIPS2024 and catch *real* headliners! 🎤💃🕺

📌Where: East Exhibit Hall A-C #2207, Poster Session 4 East

⏲️When: Thu 12 Dec, 4:30 PM - 7:30 PM PST

#AI #MachineLearning #PEFT #NeurIPS24

09.12.2024 05:55 — 👍 9 🔁 2 💬 1 📌 0

hello friends, I heard there is a party here?

19.11.2024 22:39 — 👍 7 🔁 0 💬 2 📌 0

hello world

19.11.2024 22:33 — 👍 4 🔁 0 💬 0 📌 0

The 2025 Conference on Language Modeling will take place at the Palais des Congrès in Montreal, Canada from October 7-10, 2025

LM/NLP/ML researcher ¯\_(ツ)_/¯

yoavartzi.com / associate professor @ Cornell CS + Cornell Tech campus @ NYC / nlp.cornell.edu / associate faculty director @ arXiv.org / researcher @ ASAPP / starting @colmweb.org / building RecNet.io

Assistant Professor at UC Berkeley EECS. PL+HCI. Into making programming easier for social scientists and domain experts.

The world's leading venue for collaborative research in theoretical computer science. Follow us at http://YouTube.com/SimonsInstitute.

Assistant Professor @PrincetonCS

Research: Theoretical Computer Science, Optimization, Algorithmic Statistics.

PhD student @ UW, research @ Ai2

Professor at UT Austin. Research in ML & Optimization. Always rethinking how I teach. Amateur accordion player. Committed bike commuter. Online classes in English & Greek. https://caramanis.github.io/

I work at Sakana AI 🐟🐠🐡 → @sakanaai.bsky.social

https://sakana.ai/careers

PhD @ucberkeleyofficial.bsky.social | Past: AI4Code Research Fellow @msftresearch.bsky.social | Summer @EPFL Scholar, CS and Applied Maths @IIITDelhi | Hobbyist Saxophonist

https://lakshyaaagrawal.github.io

Maintainer of https://aka.ms/multilspy

I like tokens! Lead for OLMo data at @ai2.bsky.social (Dolma 🍇) w @kylelo.bsky.social. Open source is fun 🤖☕️🍕🏳️‍🌈 Opinions are sampled from my own stochastic parrot

more at https://soldaini.net

Chief AI Scientist at Databricks. Founding team at MosaicML. MIT/Princeton alum. Lottery ticket enthusiast. Working on data intelligence.

- Prof at Harvard Business School

- Advisor, Teacher, Author, Sensei, Founder

- 2020 MBA Prof of the Year

- Books: Negotiation Genius | Negotiating the Impossible | The Peacemaker’s Code

Professor @UCLA, Research Scientist @ByteDance | Recent work: SPIN, SPPO, DPLM 1/2, GPM, MARS | Opinions are my own

I work on AI at OpenAI.

Former VP AI and Distinguished Scientist at Microsoft.

Researcher in machine learning

Professor of CS and Math @ KAUST. Interested in Optimization for Machine Learning. Federated learning guru. Likes 🏓🏋️‍♂️🎾🏐⛷️⛸️🧘‍♂️🤿🎹🎸✈️🏔️📷☀️🐈🍅🥚☕️