proud to share this work, led by the brilliant @ilinabg.bsky.social, now out in Nature! Ilina finds that speech-sound neural processing is VERY similar in a language you know and one you don't. differences only emerge at the level of word boundaries and learnt statistical structure 🧠✨

20.11.2025 19:11

Human cortical dynamics of auditory word form encoding

We perceive continuous speech as a series of discrete words, despite the lack of clear acoustic boundaries. The superior temporal gyrus (STG) encodes …

happy to share our new paper, out now in Neuron! led by the incredible Yizhen Zhang, we explore how the brain segments continuous speech into word-forms and uses adaptive dynamics to code for relative time - www.sciencedirect.com/science/arti...

07.11.2025 18:16

YouTube video by FNRLux

FNR Awards 2025: Outstanding PhD Thesis - Jill Kries

I am so honored to have received an Outstanding PhD Thesis award from the Luxembourg National Research Fund @fnr.lu! 🏆

My PhD research was about how language is processed in the brain, with a focus on patients with a language disorder called aphasia 🧠 Find out more➡️ youtu.be/E-Zww-B1jFQ?...

05.11.2025 17:29

PNAS

Delighted to share our new paper, now out in PNAS! www.pnas.org/doi/10.1073/...

"Hierarchical dynamic coding coordinates speech comprehension in the brain"

with dream team @alecmarantz.bsky.social, @davidpoeppel.bsky.social, @jeanremiking.bsky.social

Summary 👇

1/8

22.10.2025 05:21

Fantastic commentary on @smfleming.bsky.social & @matthiasmichel.bsky.social's BBS paper by @renrutmailliw.bsky.social, @lauragwilliams.bsky.social & Hinze Hogendoorn. Hits lots of nails on the head. As @neddo.bsky.social & I also argue: postdiction doesn't prove consciousness is slow! 1/3

29.10.2025 13:22

Thanks Ian, nice to hear you liked it!

30.10.2025 01:29

Super happy to share my very first first-author paper, out in @sfnjournals.bsky.social! We show content-specific predictions are represented in an alpha rhythm. It’s been a beautiful, inspiring, yet challenging journey.

Huge thanks to everyone, especially @peterkok.bsky.social @jhaarsma.bsky.social

21.10.2025 15:57

Contents of visual predictions oscillate at alpha frequencies

Predictions of future events have a major impact on how we process sensory signals. However, it remains unclear how the brain keeps predictions online in anticipation of future inputs. Here, we combin...

@dotproduct.bsky.social's first first-author paper is finally out in @sfnjournals.bsky.social! Her findings show that content-specific predictions fluctuate at alpha frequencies, suggesting a more specific role for alpha oscillations than we may have thought. With @jhaarsma.bsky.social. 🧠🟦 🧠🤖

21.10.2025 11:05

really fun getting to think about the "time to consciousness" with this dream team! we discuss interesting parallels between vision and language processing on phenomena like postdictive perceptual effects, among other things! check it out 😄

01.10.2025 19:04

A picture of our paper's abstract and title: The order of task decisions and confidence ratings has little effect on metacognition.

Task decisions and confidence ratings are fundamental measures in metacognition research, but using these reports requires collecting them in some order. Only three orders exist and are used in an ad hoc manner across studies. Evidence suggests that when task decisions precede confidence, this report order can enhance metacognition. If verified, this effect would pervade studies of metacognition and could lead syntheses of this literature to invalid conclusions. In this Registered Report, we tested the effect of report order across popular domains of metacognition and probed two factors that may underlie why order effects have been observed in past studies: report time and motor preparation. We examined these effects in a perception experiment (n = 75) and a memory experiment (n = 50), controlling task accuracy and learning. Our registered analyses found little effect of report order on metacognitive efficiency, even when timing and motor preparation were experimentally controlled. Our findings suggest that the order of task decisions and confidence ratings has little effect on metacognition and need not constrain secondary analysis or experimental design.

🚨 Out now in @commspsychol.nature.com 🚨

doi.org/10.1038/s442...

Our #RegisteredReport tested whether the order of task decisions and confidence ratings biases #metacognition.

Some have claimed that reporting decisions → confidence enhances metacognition. If true, decades of findings would be affected.

30.09.2025 08:10

Sensory Horizons and the Functions of Conscious Vision | Behavioral and Brain Sciences | Cambridge Core

Thanks to Steve and Matthias for writing this interesting and ambitious theoretical perspective: bit.ly/4jF4kRp.

Although we don’t (yet) agree w/ one of their foundational claims, we think this perspective is valuable, and should spawn lots of important discussions and follow-up work :)

29.09.2025 19:00

OSF

New BBS article w/ @lauragwilliams.bsky.social and Hinze Hogendoorn, just accepted! We respond to a thought-provoking article by @smfleming.bsky.social & @matthiasmichel.bsky.social, and argue that it's premature to conclude that conscious perception is delayed by 350-450ms: bit.ly/4nYNTlb

29.09.2025 19:00

We present our preprint on ViV1T, a transformer for dynamic mouse V1 response prediction. We reveal novel response properties and confirm them in vivo.

With @wulfdewolf.bsky.social, Danai Katsanevaki, @arnoonken.bsky.social, @rochefortlab.bsky.social.

Paper and code at the end of the thread!

🧵1/7

19.09.2025 12:37

🚨Our preprint is online!🚨

www.biorxiv.org/content/10.1...

How do #dopamine neurons perform the key calculations in reinforcement #learning?

Read on to find out more! 🧵

19.09.2025 13:05

Research Coordinator, Minds, Experiences, and Language Lab in Graduate School of Education, Stanford, California, United States

The Stanford Graduate School of Education (GSE) seeks a full-time Research Coordinator (acting lab manager) to help launch and coordinate the Minds,.....

I’m hiring!! 🎉 Looking for a full-time Lab Manager to help launch the Minds, Experiences, and Language Lab at Stanford. We’ll use all-day language recording, eye tracking, & neuroimaging to study how kids & families navigate unequal structural constraints. Please share:

phxc1b.rfer.us/STANFORDWcqUYo

15.09.2025 18:57

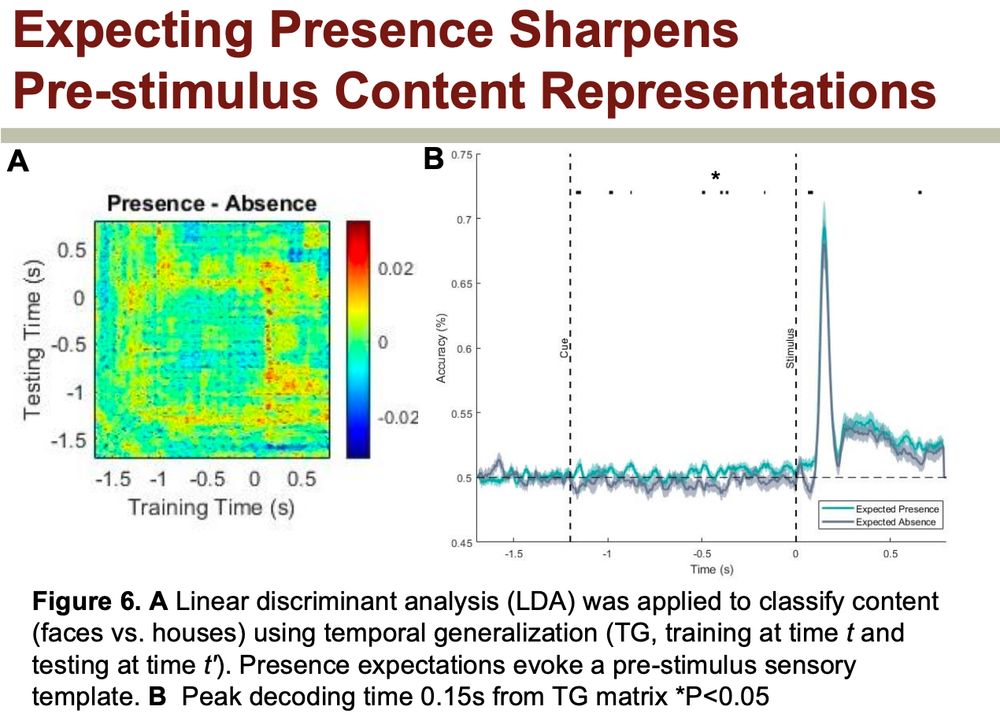

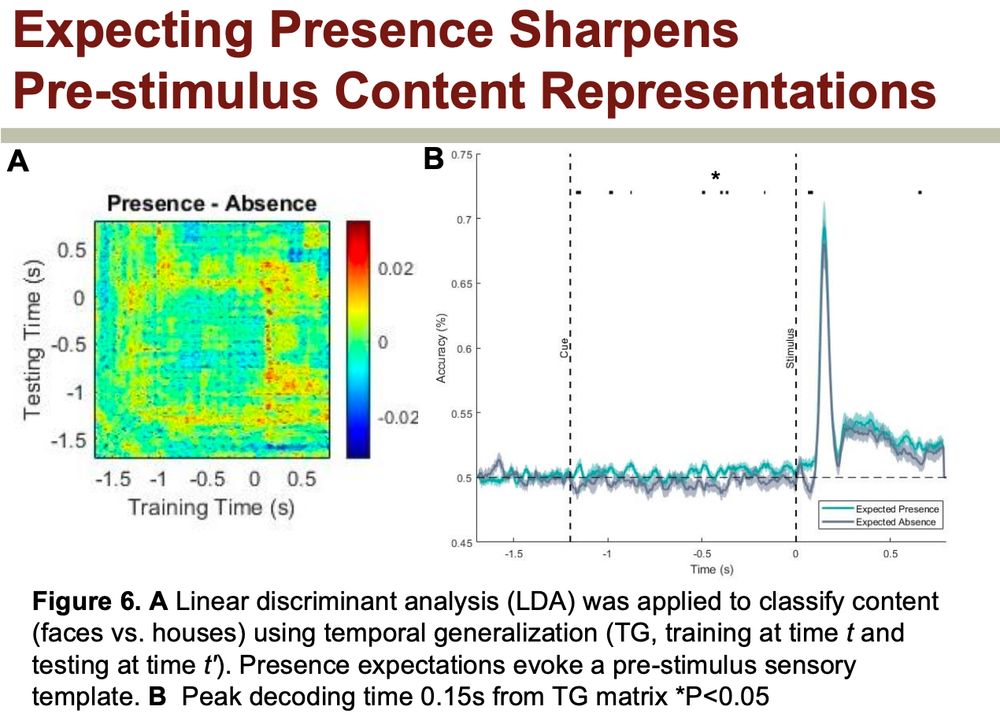

Looking forward to #ICON2025 next week! We will have several presentations on mental imagery, reality monitoring and expectations:

To kick us off, on Tuesday at 15:30, Martha Cottam will present:

P2.12 | Presence Expectations Modulate the Neural Signatures of Content Prediction Errors

11.09.2025 15:28

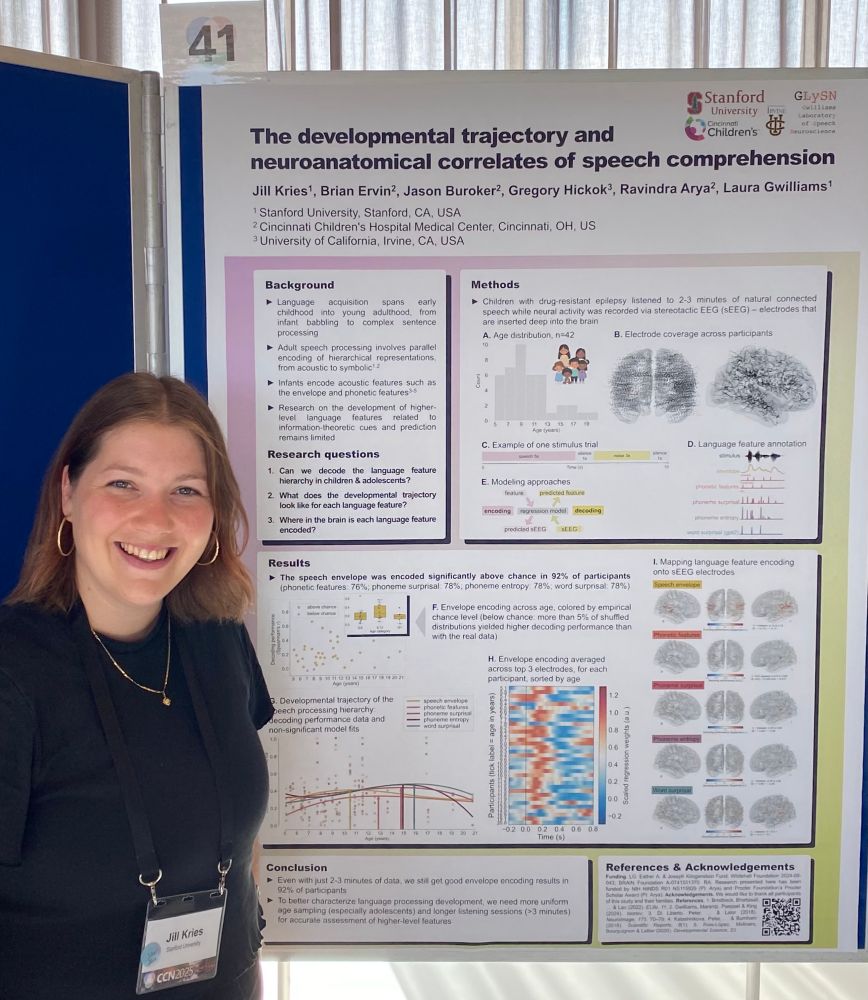

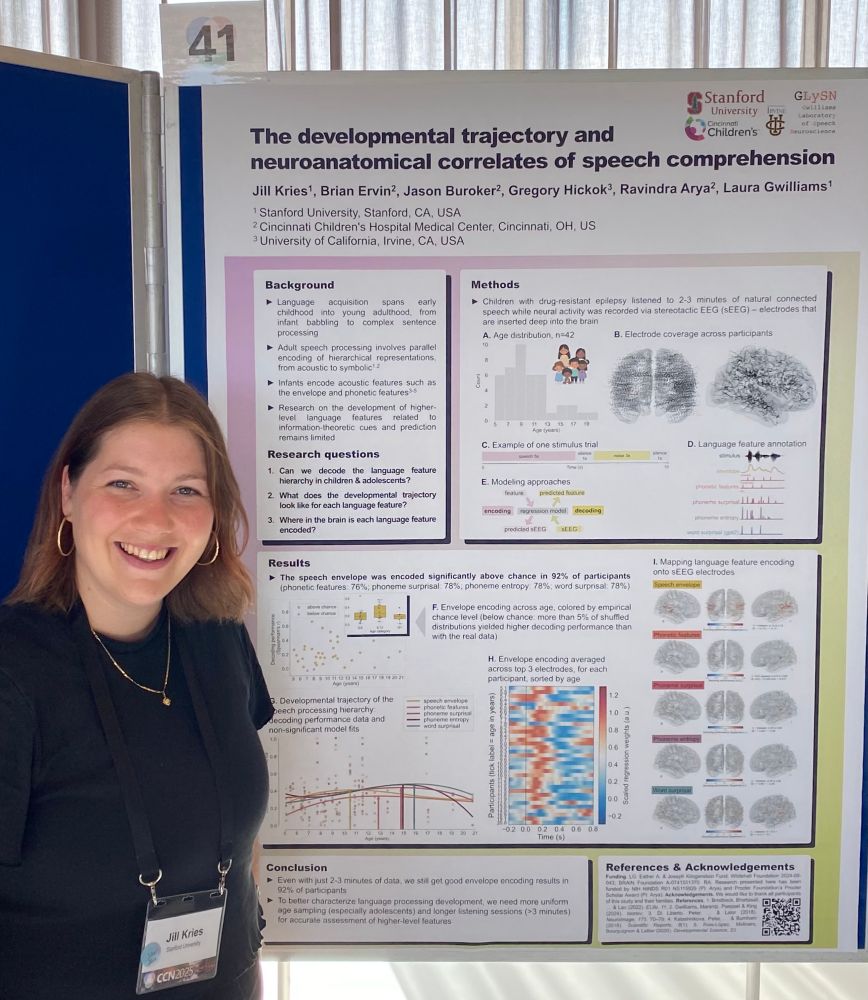

In August I had the pleasure to present a poster at the Cognitive Computational Neuroscience (CCN) conference in Amsterdam. My poster was about 𝘁𝗵𝗲 𝗱𝗲𝘃𝗲𝗹𝗼𝗽𝗺𝗲𝗻𝘁𝗮𝗹 𝘁𝗿𝗮𝗷𝗲𝗰𝘁𝗼𝗿𝘆 𝗮𝗻𝗱 𝗻𝗲𝘂𝗿𝗼𝗮𝗻𝗮𝘁𝗼𝗺𝗶𝗰𝗮𝗹 𝗰𝗼𝗿𝗿𝗲𝗹𝗮𝘁𝗲𝘀 𝗼𝗳 𝘀𝗽𝗲𝗲𝗰𝗵 𝗰𝗼𝗺𝗽𝗿𝗲𝗵𝗲𝗻𝘀𝗶𝗼𝗻 🧒➡️🧑 🧠

08.09.2025 21:50

The Latency of a Domain-General Visual Surprise Signal is Attribute Dependent

Predictions concerning upcoming visual input play a key role in resolving percepts. Sometimes input is surprising, under which circumstances the brain must calibrate erroneous predictions so that perc...

🚨Pre-print of some cool data from my PhD days!

doi.org/10.1101/2025...

☝️Did you know that visual surprise is (probably) a domain-general signal and/or operates at the object-level?

✌️Did you also know that the timing of this response depends on the specific attribute that violates an expectation?

19.08.2025 00:30

Humans largely learn language through speech. In contrast, most LLMs learn from pre-tokenized text.

In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech.

Poster P6, tomorrow (Aug 19) at 1:30 pm, Foyer 2.2!

19.08.2025 01:12

looking forward to seeing everyone at #CCN2025! here's a snapshot of the work from my lab that we'll be presenting on speech neuroscience 🧠 ✨

10.08.2025 18:09

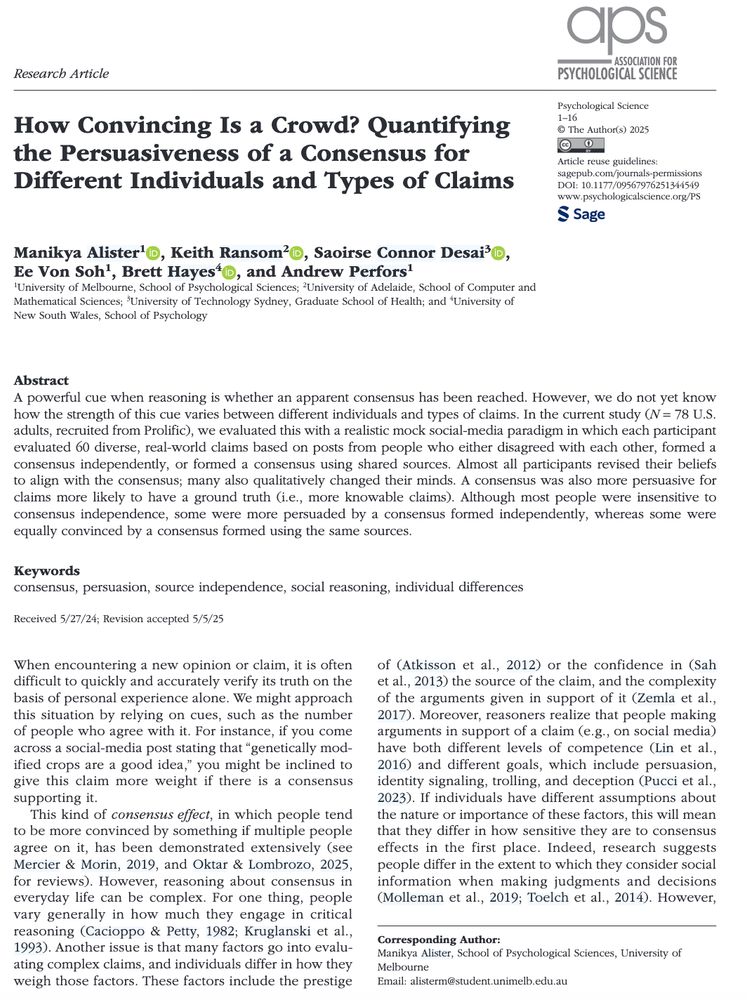

Screenshot of the article "How Convincing Is a Crowd? Quantifying the Persuasiveness of a Consensus for Different Individuals and Types of Claims"

We know that a consensus of opinions is persuasive, but how reliable is this effect across people and types of consensus, and are there any kinds of claims where people care less about what other people think? This is what we tested in our new(ish) paper in @psychscience.bsky.social

10.08.2025 23:11

I really like this paper. I fear that people think the authors are claiming that the brain isn’t predictive though, which this study cannot (and does not) address. As the title says, the data purely show that evoked responses are not necessarily prediction errors, which makes sense!

15.07.2025 11:43

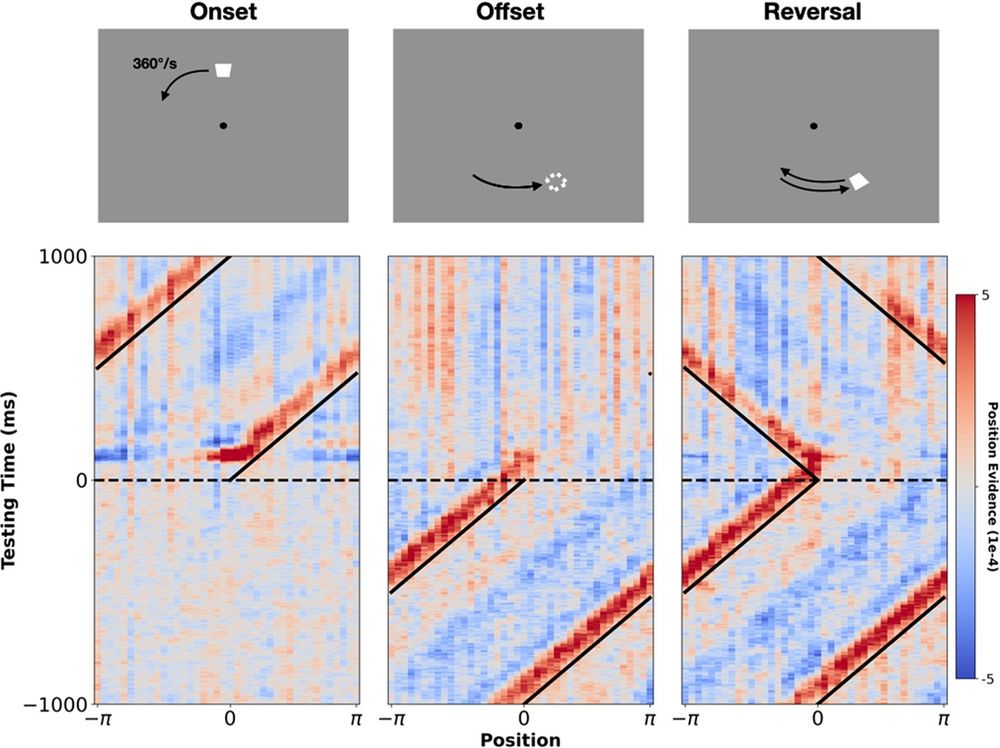

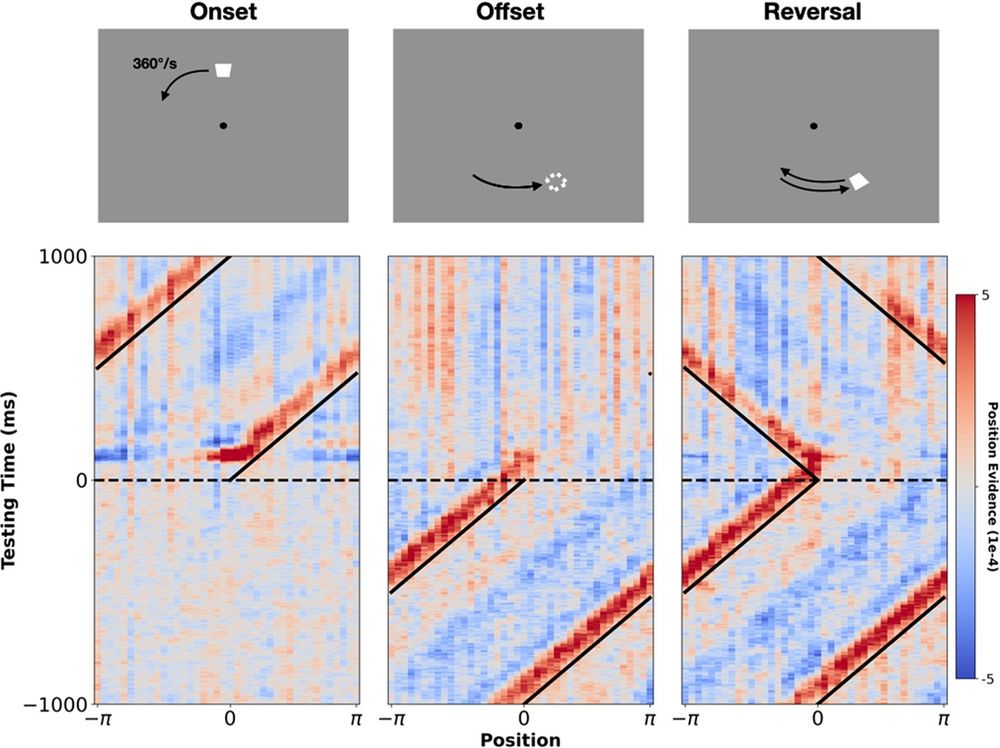

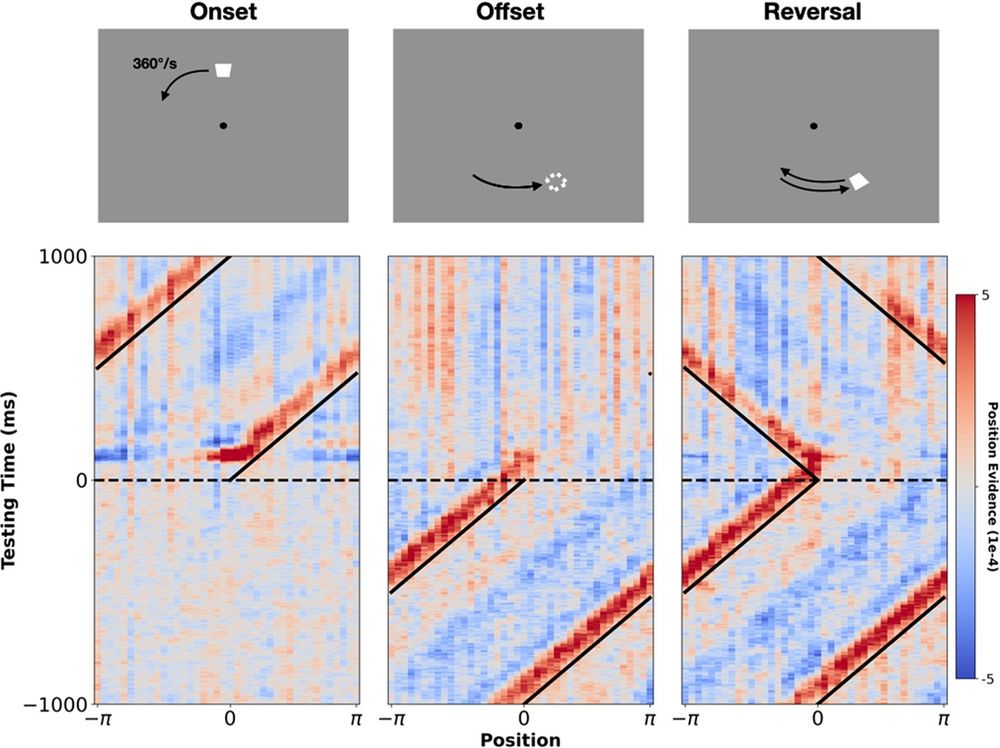

Mapping the position of moving stimuli. The top three panels show the three events of interest: stimulus onset, stimulus offset, and stimulus reversal (left to right). The bottom three panels show group-level probabilistic spatio-temporal maps centered around these three events. Diagonal black lines mark the true position of the stimulus. Horizontal dashed lines mark the time of the event of interest (stimulus onset, offset, or reversal). Red indicates high probability regions and blue indicates low probability regions (‘position evidence’ gives the difference between the posterior probability and chance). Note: these maps were generated from recordings at posterior/occipital sites.

It takes time for the #brain to process information, so how can we catch a flying ball? @renrutmailliw.bsky.social &co reveal a multi-stage #motion #extrapolation occurring in the #HumanBrain, shifting the represented position of moving objects closer to real time @plosbiology.org 🧪 plos.io/3Fm83Fc

27.05.2025 18:06

It takes time for the #brain to process information, so how can we catch a flying ball? This study provides evidence of multi-stage #motion #extrapolation occurring in the #HumanBrain, shifting the represented position of moving objects closer to real time @plosbiology.org 🧪 plos.io/3Fm83Fc

27.05.2025 13:17

Thanks Henry! All kudos really go to Charlie for the modelling! Hope all is well in Brissy :)

23.05.2025 21:36

What are the organizing dimensions of language processing?

We show that voxel responses during comprehension are organized along 2 main axes: processing difficulty & meaning abstractness—revealing an interpretable, topographic representational basis for language processing shared across individuals

23.05.2025 16:59

Assistant Professor of Cognitive Science at Johns Hopkins. My lab studies human vision using cognitive neuroscience and machine learning. bonnerlab.org

Wildlife artist and ecologist based in Buckfastleigh, Devon, UK

PhD student at the University of Sydney. Interested in visual cognition, time perception, and the sense of agency.

deweidai.com

Official profile of the Australasian Cognitive Neuroscience Society (acns.org.au)

neuroscientist, psychiatrist, writer

optogenetics.org

karldeisseroth.org

https://www.amazon.com/Projections-Story-Emotions-Karl-Deisseroth/dp/1984853694

she/her • PhD student at the University of Melbourne, Australia • neural basis of dietary decision making

PhD student in the Language and Cognition lab at Stanford.

samahabdelrahim.github.io

PI of Action & Perception Lab at UCL. Professor. Cognitive neuroscience, action, perception, learning, prediction. Cellist, lazy runner, mum.

https://www.ucl.ac.uk/pals/action-and-perception-lab/

https://www.fil.ion.ucl.ac.uk/team/action-and-perception/

Chair in Speech and Hearing Sciences, UCL

Post-doctoral research fellow in cognitive neuroscience (Oxford), interested in complex systems and in simple systems who believe they are complex systems

Ph.D. student | Cognitive and Computational Neuroscience | Consciousness | mesec.co Co-founder and VP

Melbourne based PhD candidate interested in all things social reasoning, cognition, modelling, and philosophy of science 🤓

https://manikyaalister.github.io/

PhD Candidate at the Predictive Brain Lab @DondersInstitute | MSc in Brain and Cognitive Sciences @UvA_Amsterdam.

NCCs & theories of consciousness, philosophy in neurobiology

Senior research fellow & head of the Dept. of Analytic Philosophy at the Institute of Philosophy of the Czech Academy of Sciences in Prague

https://sites.google.com/view/tommarvan/homepage

Phil. prof; cogsci, time, temporal consciousness, neuroscience; here for the news

Postdoctoral researcher: synthetic philosophy, the mind and life sciences, history of medicine, psychiatry

Producer @manymindspod.bsky.social;

Contributing editor @publicdomainrev.bsky.social

https://www.linkedin.com/in/urte-laukaityte

Assistant professor at the Department of Cognitive Neuroscience of Maastricht University, studying the neuroscience of false percepts.

Girl Dad

PhD candidate at the MPI for Psycholinguistics

Language & speech production

Psycholinguistics 🤝 speech motor control