Taking Jaggedness Seriously

Why we should expect AI capabilities to keep being extremely uneven, and why that matters

This blog post by Helen Toner (well-known by some for a stint on the OpenAI board) is *really really* interesting because it is the first thing I've read by someone in Frontier AI that starts to get to grips with sociotechnical concerns open.substack.com/pub/helenton...

29.11.2025 06:32 — 👍 82 🔁 27 💬 8 📌 11

We're hiring! May be a good fit for you if you're excited about:

* Working on frontier AI issues (specific examples in the job description)

* Joining a team with a range of interests & expertise

* Bringing data, evidence, & nuance to policy conversations about frontier AI

Open until Nov 10:

17.10.2025 19:58 — 👍 9 🔁 3 💬 0 📌 0

I honestly don't know how big the potential harms of personalization are—I think it's possible we end up coping fine. But it's crazy to me how little mindshare this seems to be getting among people who think about unintended systemic effects of AI for a living.

22.07.2025 00:49 — 👍 4 🔁 0 💬 1 📌 0

Thinking about this, I keep coming back to two stories—

1) how FB allegedly trained models to identify moments when users felt worthless, then sold that data to advertisers

2) how we're already seeing chatbots addicting kids & adults and warping their sense of what's real

22.07.2025 00:49 — 👍 1 🔁 0 💬 1 📌 0

Personalized AI is rerunning the worst part of social media's playbook

The incentives, risks, and complications of AI that knows you

AI companies are starting to promise personalized assistants that “know you.” We’ve seen this playbook before — it didn’t end well.

In a guest post for @hlntnr.bsky.social’s Rising Tide, I explore how leading AI labs are rushing toward personalization without learning from social media’s mistakes

21.07.2025 18:32 — 👍 14 🔁 5 💬 0 📌 3

YouTube video by FAR․AI

Helen Toner - Unresolved Debates on the Future of AI [Tech Innovations AI Policy]

Full video here (21 min):

www.youtube.com/watch?v=dzwi...

30.06.2025 20:40 — 👍 3 🔁 0 💬 0 📌 1

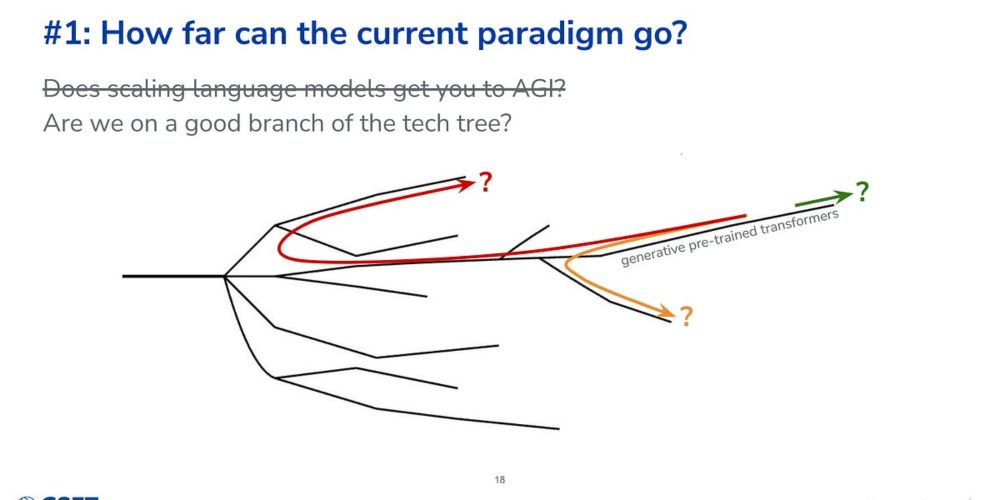

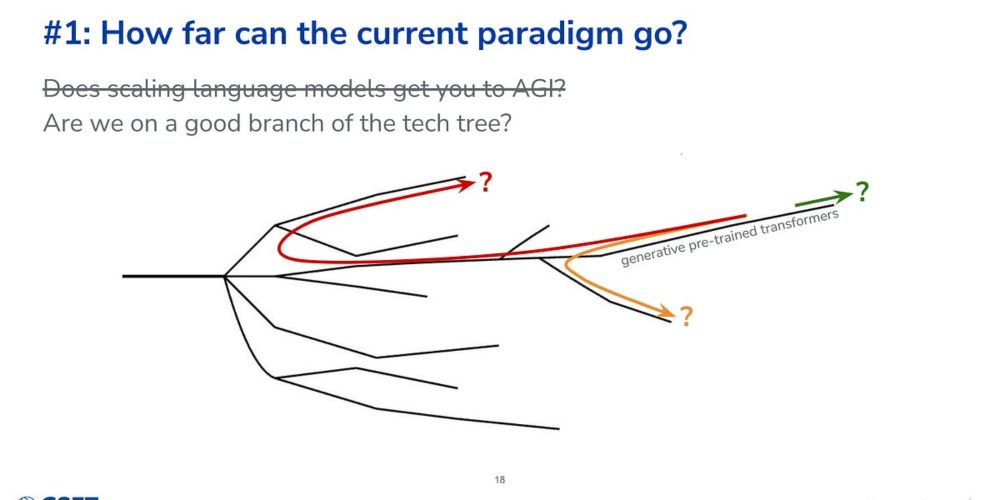

Unresolved debates about the future of AI

How far the current paradigm can go, AI improving AI, and whether thinking of AI as a tool will keep making sense

The 3 disagreements are:

How far can the current paradigm go?

How much can AI improve AI?

Will future AI still basically be tools, or will they be something else?

Thanks to

@farairesearch

for the invitation to do this talk! Transcript here:

helentoner.substack.com/p/unresolved...

30.06.2025 20:40 — 👍 6 🔁 0 💬 1 📌 0

Been thinking recently about how central "AI is just a tool" is to disagreements about the future of AI. Is it? Will it continue to be?

Just posted a transcript from a talk where I go into this + a couple other key open qs/disagreements (not p(doom)!).

🔗 below, preview here:

30.06.2025 20:40 — 👍 12 🔁 4 💬 1 📌 0

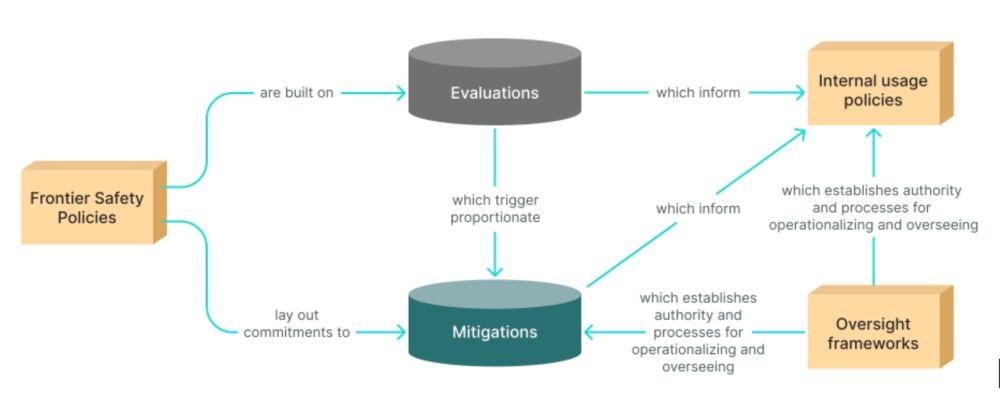

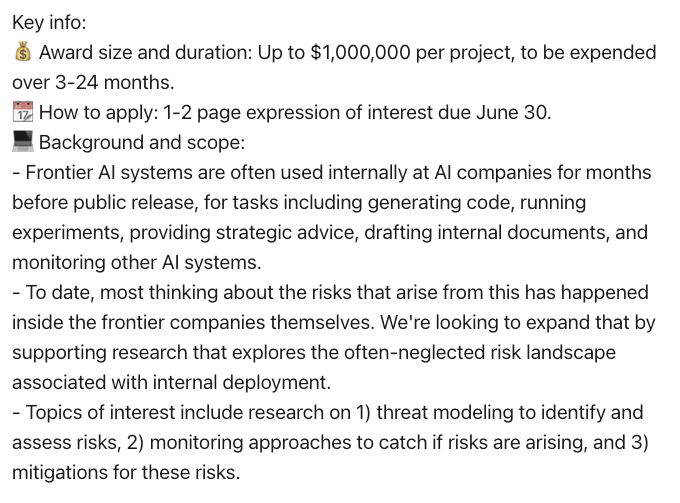

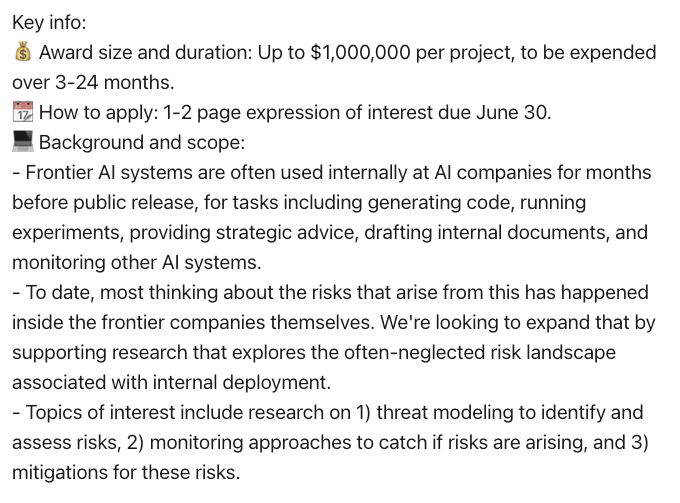

2 weeks left on this open funding call on risks from internal deployments of frontier AI models—submissions are due June 30.

Expressions of interest only need to be 1-2 pages, so still time to write one up!

Full details: cset.georgetown.edu/wp-content/u...

16.06.2025 18:37 — 👍 7 🔁 2 💬 0 📌 0

💡Funding opportunity—share with your AI research networks💡

Internal deployments of frontier AI models are an underexplored source of risk. My program at @csetgeorgetown.bsky.social just opened a call for research ideas—EOIs due Jun 30.

Full details ➡️ cset.georgetown.edu/wp-content/u...

Summary ⬇️

19.05.2025 16:59 — 👍 9 🔁 5 💬 1 📌 1

We’re Arguing About AI Safety Wrong | AI Frontiers

Helen Toner, May 12, 2025 — Dynamism vs. stasis is a clearer lens for criticizing controversial AI safety prescriptions.

...But too many critics of those stasist ideas try to shove the underlying problems under the rug. With this post, I'm trying to help us hold both things at once.

Read the full post on AI Frontiers: www.ai-frontiers.org/articles/wer...

Or my substack: helentoner.substack.com/p/dynamism-v...

12.05.2025 18:21 — 👍 4 🔁 2 💬 1 📌 0

From The Future and Its Enemies by Virginia Postrel:

Dynamism: "a world of constant creation, discovery, and competition"

Stasis: "a regulated, engineered world... [that values] stability and control"

Too many AI safety policy ideas would push us toward stasis. But...

12.05.2025 18:21 — 👍 0 🔁 0 💬 1 📌 0

Criticizing the AI safety community as anti-tech or anti-risktaking has always seemed off to me. But there *is* plenty to critique. My latest on Rising Tide (xposted with @aifrontiers.bsky.social!) is on the 1998 book that helped me put it into words.

In short: it's about dynamism vs stasis.

12.05.2025 18:21 — 👍 4 🔁 3 💬 1 📌 0

Cognitive Revolution (🇺🇸): More insidery chat with @nathanlabenz.bsky.social getting into why nonproliferation is the wrong way to manage AI misuse, AI in military decision support systems, and a bunch of other stuff.

Clip on my beef with talk about the "offense-defense" balance in AI:

22.04.2025 01:26 — 👍 0 🔁 0 💬 1 📌 0

Stop the World (🇦🇺): Fun, wide-ranging conversation with David Wroe of @aspi-org.bsky.social on where we're at with AI, reasoning models, DeepSeek, scaling laws, etc etc.

Excerpt on whether we can "just" keep scaling language models:

22.04.2025 01:26 — 👍 1 🔁 0 💬 1 📌 0

2 new podcast interviews out in the last couple weeks—one for more of a general audience, one more inside baseball.

You can also pick your accent (I'm from Australia and sound that way when I talk to other Aussies, but mostly in professional settings I sound ~American)

22.04.2025 01:26 — 👍 2 🔁 0 💬 1 📌 0

cc @binarybits.bsky.social re hardening the physical world,

@vitalik.ca re d/acc, @howard.fm re power concentration... plus many others I'm forgetting whose takes helped inspire this post. I hope this is a helpful framing for these tough tradeoffs.

05.04.2025 18:09 — 👍 2 🔁 0 💬 0 📌 0

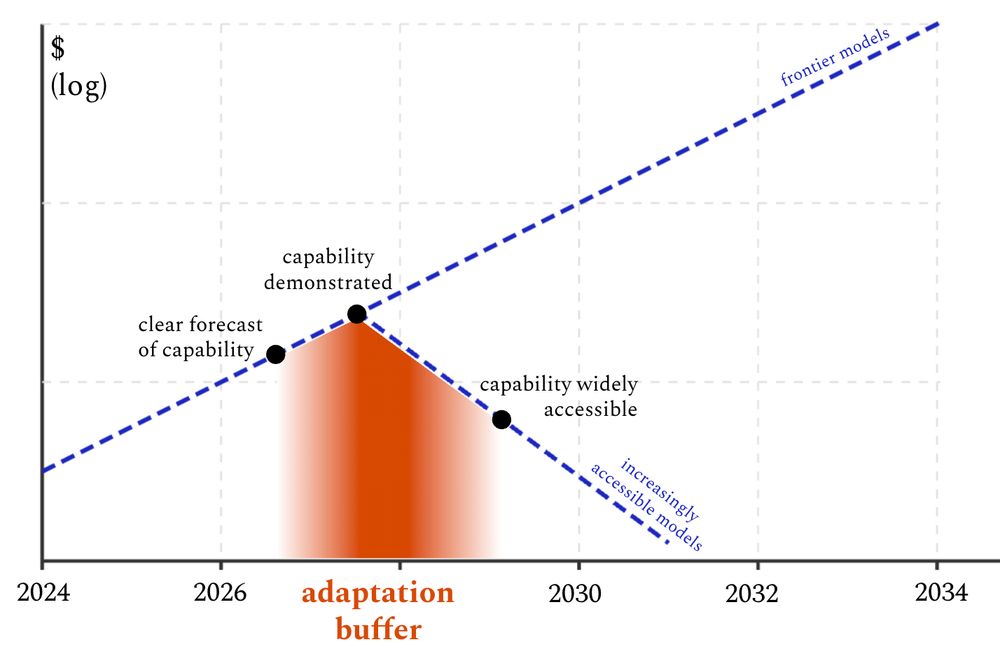

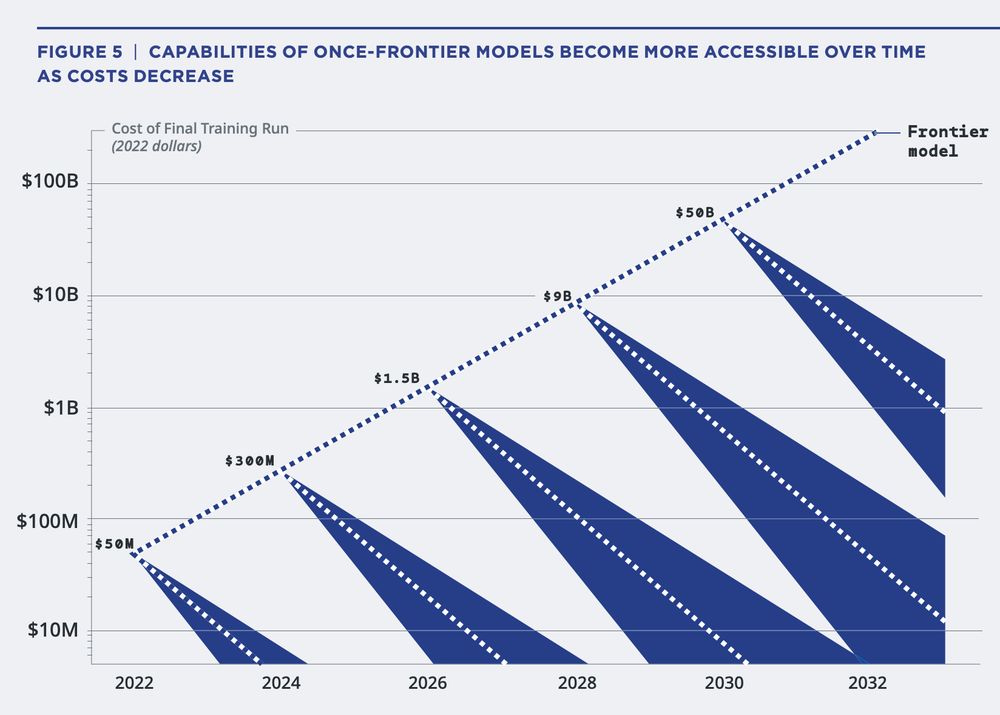

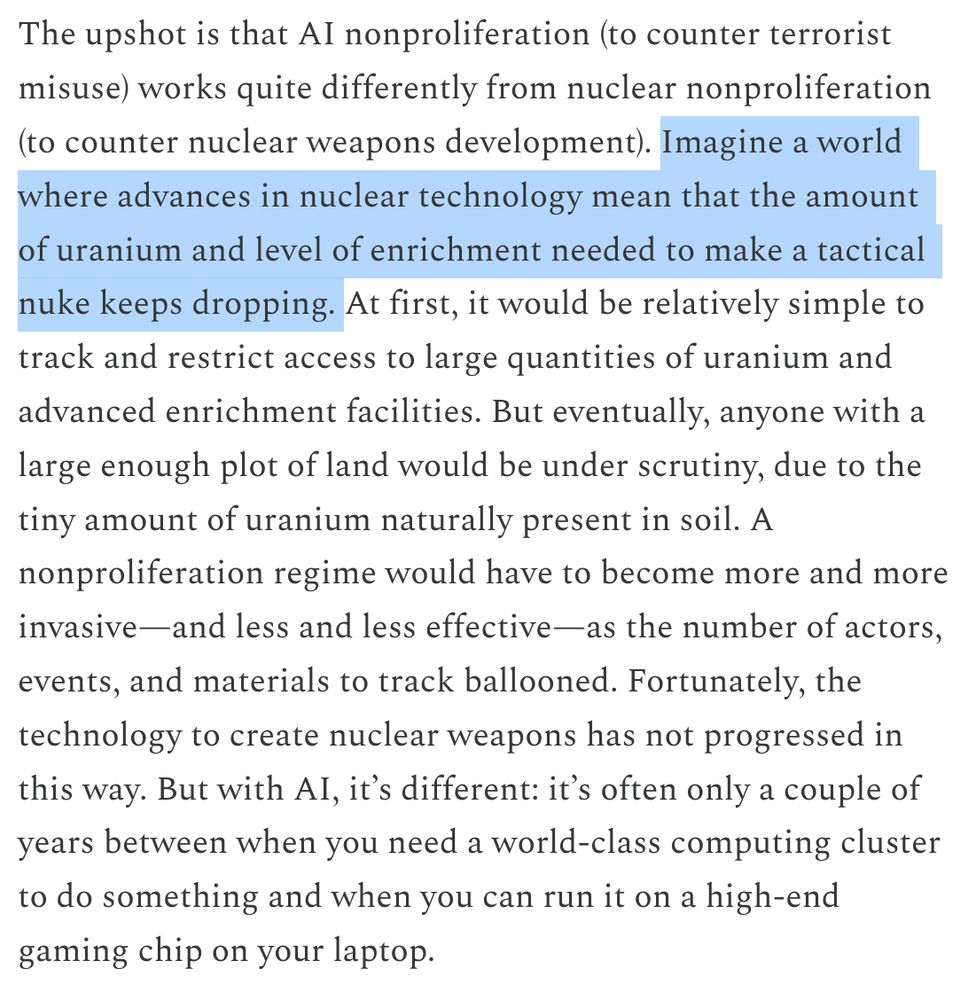

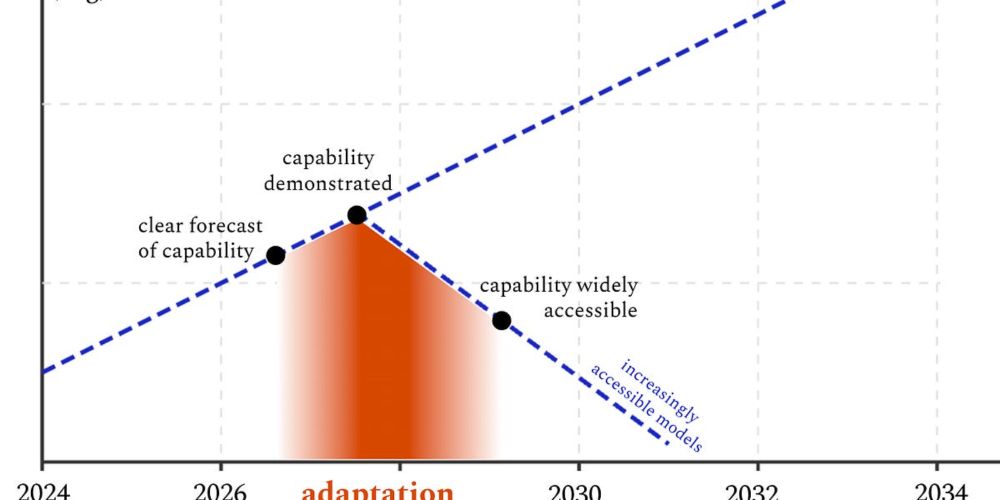

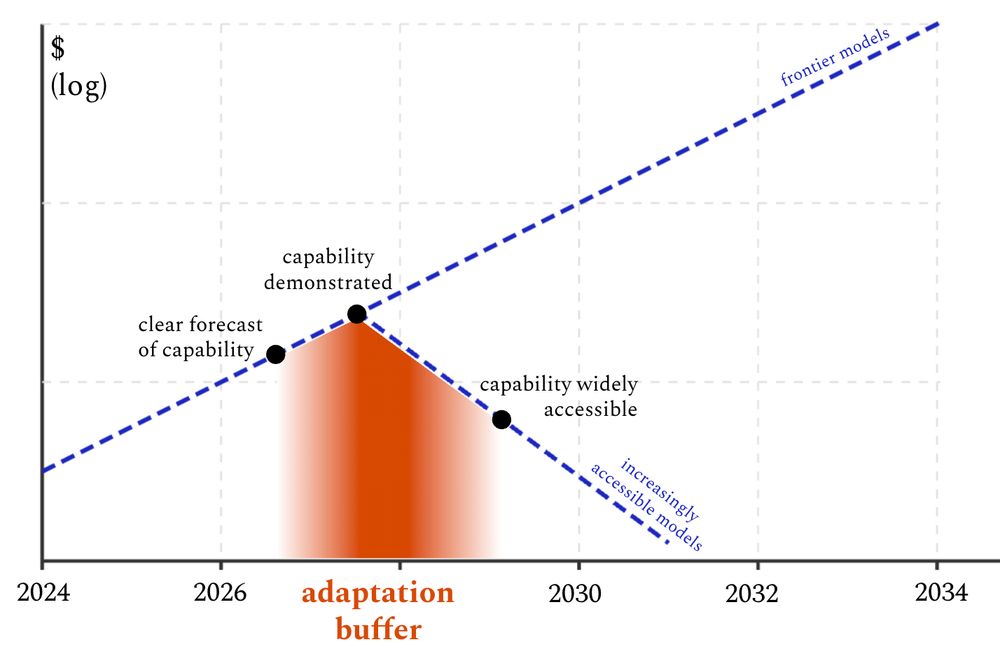

Nonproliferation is the wrong approach to AI misuse

Making the most of “adaptation buffers” is a more realistic and less authoritarian strategy

I don't think this approach will obviously be enough, I don't think it's equivalent to "just open source everything YOLO," and I don't think any of my argument applies to tracking/managing the frontier or loss of control risks.

More in the full piece: helentoner.substack.com/p/nonprolife...

05.04.2025 18:09 — 👍 1 🔁 0 💬 1 📌 0

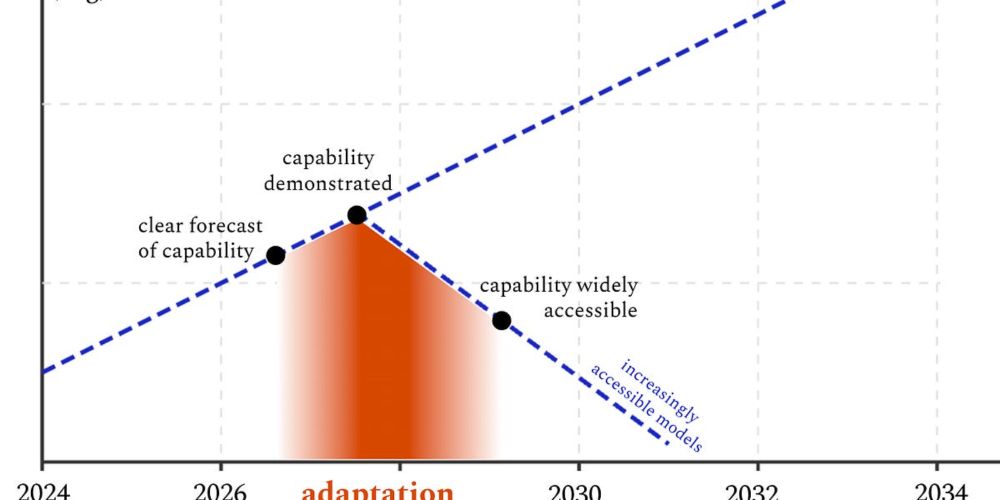

What to do instead? IMO the best option is to think in terms of "adaptation buffers," the gap between when we know a new misusable capability is coming and when it's actually widespread.

During that time, we need massive efforts to build as much societal resilience as we can.

05.04.2025 18:09 — 👍 2 🔁 0 💬 1 📌 0

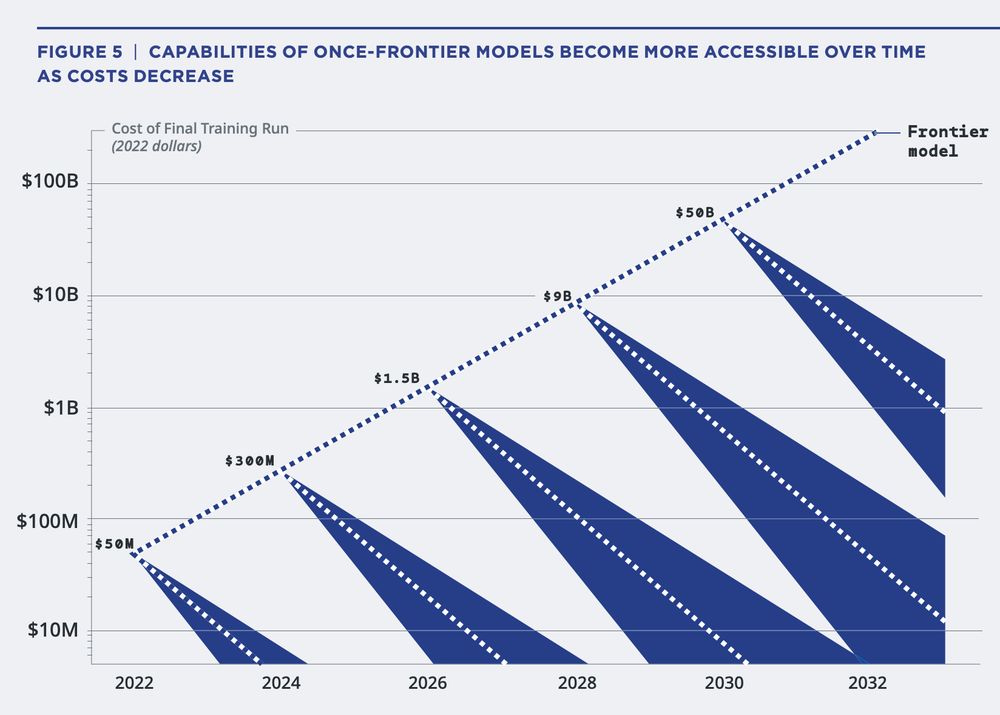

The basic problem is that the kind of AI that's relevant here (for "misuse" risks) is going to get way cheaper & more accessible over time. This means that to indefinitely prevent/control its spread, your nonprolif regime will get more & more invasive and less & less effective.

05.04.2025 18:09 — 👍 1 🔁 0 💬 1 📌 0

Seems likely that at some point AI will make it much easier to hack critical infrastructure, create bioweapons, etc etc. Many argue that if so, a hardcore nonproliferation strategy is our only option.

Rising Tide launch week post 3/3 is on why I disagree 🧵

helentoner.substack.com/p/nonprolife...

05.04.2025 18:09 — 👍 18 🔁 4 💬 4 📌 1

The idea of AI "alignment" seems increasingly confused—is it about content moderation or controlling superintelligence? Is it basically solved or wide open?

Rising Tide #2 is about how we got here, and how the core problem is whether we can steer advanced AI at all.

03.04.2025 14:49 — 👍 5 🔁 0 💬 0 📌 1

