https://arxiv.org/abs/2410.23501

Joint work with Sebastian Weichwald, Sebastien Lachapelle, and Luigi Gresele 🙏

For more info, check the full paper 👇

arxiv.org/abs/2410.235...

17.06.2025 15:12 — 👍 0 🔁 0 💬 0 📌 0

🧵Summary

A mathematical proof that, under suitable conditions, linear properties hold for either all or none of the equivalent models with the same next-token distribution 😎

Exciting open questions about empirical findings remain 🤔: check Section 6 (Discussion) in the paper!

8/9

17.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0

3⃣ We show which linear properties are shared by all, or by none, of the LLMs with the same next-token distribution.

🔥 Under mild assumptions, relational linear properties are shared!

⚠️ Parallel vectors may not be shared (they are, under a diversity assumption)!

7/9

17.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0
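The ⚠️ caveat in 7/9 can be seen in a toy softmax model, p(y | x) ∝ exp(f(x) · g(y)). This is a minimal sketch of our own, not the construction from the paper: all vectors, words, and function names are hypothetical. If every context representation f(x) lies in a subspace (no diversity), adding to an unembedding g(y) a component orthogonal to that subspace leaves every next-token distribution unchanged, yet it can destroy the parallelism of the "superlative" offsets.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax_probs(fx, unembeds):
    """p(y | x) = softmax over y of the logits f(x) . g(y)."""
    logits = [dot(fx, g) for g in unembeds]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    return [e / z for e in exps]


def offset(unembed, word, base):
    """Difference of unembedding vectors g(word) - g(base)."""
    return [a - b for a, b in zip(unembed[word], unembed[base])]


def are_parallel(u, v, tol=1e-12):
    """Two 3-d vectors are parallel iff their cross product is zero."""
    cross = [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ]
    return all(abs(c) < tol for c in cross)


VOCAB = ["easy", "easiest", "strong", "strongest"]

# Hypothetical context representations: all have a zero third coordinate,
# so they do not span R^3 (the diversity condition fails).
CONTEXTS = [[0.3, -0.2, 0.0], [1.5, 0.4, 0.0], [-0.6, 2.0, 0.0]]

# Model A: the two "superlative" offsets are identical, hence parallel.
UNEMBED_A = {
    "easy": [1.0, 0.0, 0.0],
    "easiest": [1.5, -1.0, 0.0],
    "strong": [0.0, 1.0, 0.0],
    "strongest": [0.5, 0.0, 0.0],
}

# Model B: identical except "strongest" gains a component along the third
# axis, which no context representation can detect.
UNEMBED_B = dict(UNEMBED_A)
UNEMBED_B["strongest"] = [0.5, 0.0, 3.0]


def max_prob_gap(fx):
    """Largest difference between the two models' next-token probabilities."""
    pa = softmax_probs(fx, [UNEMBED_A[w] for w in VOCAB])
    pb = softmax_probs(fx, [UNEMBED_B[w] for w in VOCAB])
    return max(abs(a - b) for a, b in zip(pa, pb))


for name, unembed in [("A", UNEMBED_A), ("B", UNEMBED_B)]:
    parallel = are_parallel(
        offset(unembed, "easiest", "easy"), offset(unembed, "strongest", "strong")
    )
    print(f"model {name}: superlative offsets parallel? {parallel}")
print("max next-token prob gap:", max(max_prob_gap(fx) for fx in CONTEXTS))
```

Both models assign the same probabilities to every context, but only model A has parallel offsets, so the property holds for one equivalent model and not the other.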

We also describe other linear properties (linear subspaces, probing, and steering) based on relational strings (Paccanaro and Hinton, 2001).

💡They arise when the LLM can predict next tokens for textual queries like "What is the written language?" across many context strings!

6/9

17.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0

2⃣ We reformulate linear properties of LLMs in terms of textual strings, based on how LLMs predict next tokens.

💡Parallel vectors arise from equal log-ratios of next-token probabilities

E.g., the same log-ratio for "easy"/"easiest" and "strong"/"strongest" in all contexts => parallel vectors

5/9

17.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0
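A small sketch of why equal log-ratios and parallel vectors go together in 5/9, assuming the usual softmax parametrization p(y | x) ∝ exp(f(x) · g(y)): the normalizer cancels, so log p(y₁ | x) − log p(y₀ | x) = f(x) · (g(y₁) − g(y₀)). If the offsets g("easiest") − g("easy") and g("strongest") − g("strong") coincide, the two log-ratios agree in every context; conversely, equal log-ratios over contexts whose f(x) span the space force the offsets to coincide. The vectors, vocabulary, and helper names below are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def log_probs(fx, unembed):
    """Log-softmax of the logits f(x) . g(y) over the toy vocabulary."""
    logits = {y: dot(fx, gy) for y, gy in unembed.items()}
    m = max(logits.values())
    log_z = m + math.log(sum(math.exp(l - m) for l in logits.values()))
    return {y: l - log_z for y, l in logits.items()}


def log_ratio(fx, unembed, num, den):
    """log p(num | x) - log p(den | x); the normalizer log Z(x) cancels."""
    lp = log_probs(fx, unembed)
    return lp[num] - lp[den]


# Hypothetical shared "superlative" offset and unembeddings (made-up numbers).
DIRECTION = [0.5, -1.0, 2.0]
UNEMBED = {
    "easy": [1.0, 0.0, 0.0],
    "strong": [0.0, 1.0, 0.0],
    "the": [0.0, 0.0, 1.0],
}
UNEMBED["easiest"] = [a + b for a, b in zip(UNEMBED["easy"], DIRECTION)]
UNEMBED["strongest"] = [a + b for a, b in zip(UNEMBED["strong"], DIRECTION)]

# Hypothetical context representations f(x).
CONTEXTS = [[0.3, -0.2, 0.7], [1.5, 0.4, -0.9], [-0.6, 2.0, 0.1]]

for fx in CONTEXTS:
    r_easy = log_ratio(fx, UNEMBED, "easiest", "easy")
    r_strong = log_ratio(fx, UNEMBED, "strongest", "strong")
    print(f"{r_easy:.6f} {r_strong:.6f} {dot(fx, DIRECTION):.6f}")
```

Each printed line should show the same value three times: both log-ratios equal f(x) · DIRECTION, whatever the rest of the vocabulary looks like.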

💡The extended linear equivalence means that two models' representations are linearly related, but only within a subspace

‼️Outside that subspace, representations can differ a lot!

4/9

17.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0
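To make 4/9 concrete, here is a toy illustration of our own (not the paper's extended linear equivalence, which also covers models with different representation dimensions). It assumes a softmax model whose unembeddings only use the first two coordinates. Model B applies an invertible linear map M inside that 2-d subspace (and its inverse transpose to the unembeddings), then adds an arbitrary nonlinear third coordinate. All numbers and names are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(m, v):
    return [dot(row, v) for row in m]


def softmax_probs(fx, unembed):
    """Next-token probabilities p(y | x) = softmax_y(f(x) . g(y))."""
    logits = [dot(fx, gy) for gy in unembed]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    return [e / z for e in exps]


# Model A: 3-d representations, but every unembedding has third coordinate 0,
# so only the first two coordinates affect the logits.
UNEMBED_A = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [-1.0, -1.0, 0.0]]


def f_a(x):
    """Toy representation of a context, indexed by a number x."""
    return [x, 2.0 * x - 1.0, 0.0]


# Model B: invertible linear map M on the 2-d subspace; the inverse transpose
# of M is applied to the unembeddings so every logit is preserved.
M = [[2.0, 1.0], [0.0, 1.0]]
M_INV_T = [[0.5, 0.0], [-0.5, 1.0]]
UNEMBED_B = [matvec(M_INV_T, g[:2]) + [g[2]] for g in UNEMBED_A]


def f_b(x):
    inside = matvec(M, f_a(x)[:2])            # linearly related to model A
    outside = math.sin(5.0 * x) + x ** 3      # arbitrary nonlinear component
    return inside + [outside]


for x in [-1.0, 0.0, 0.5, 2.0]:
    pa = softmax_probs(f_a(x), UNEMBED_A)
    pb = softmax_probs(f_b(x), UNEMBED_B)
    gap = max(abs(a - b) for a, b in zip(pa, pb))
    print(f"x={x}: max |p_A - p_B| = {gap:.1e}, 3rd coord of f_B = {f_b(x)[2]:.3f}")
```

The two models agree on every next-token distribution, their representations are linearly related inside the subspace, and outside it model B's coordinate is an arbitrary nonlinear function of the context.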

1⃣ We extend the results of Khemakhem et al. (2020) and Roeder et al. (2021) by removing a diversity assumption.

For the first time, we relate models with different representation dimensions and find that the representations of LLMs with the same next-token distribution are related by an “extended linear equivalence”!

3/9

17.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0

Contributions:

1⃣ An identifiability result for LLMs

2⃣ A 𝙧𝙚𝙡𝙖𝙩𝙞𝙤𝙣𝙖𝙡 reformulation of linear properties

3⃣ A proof of which properties are 𝙘𝙤𝙫𝙖𝙧𝙞𝙖𝙣𝙩 (in analogy with physics, cf. Villar et al. (2023)), i.e., hold for all or none of the LLMs with the same next-token distribution

2/9

17.06.2025 15:12 — 👍 0 🔁 0 💬 1 📌 0

🧵Why are linear properties so ubiquitous in LLM representations?

We explore this question through the lens of 𝗶𝗱𝗲𝗻𝘁𝗶𝗳𝗶𝗮𝗯𝗶𝗹𝗶𝘁𝘆:

“All or None: Identifiable Linear Properties of Next-token Predictors in Language Modeling”

Published at #AISTATS2025🌴

1/9

17.06.2025 15:12 — 👍 3 🔁 0 💬 1 📌 0

Thesis etd-01262025-170550

Only yesterday I discovered that my PhD thesis has been made public to everyone 😅

I worked for three years on "Learning concepts", trying to pin down the connection between #concepts, #symbols, and #representations, and how they are used in ML today 👾🪄

etd.adm.unipi.it/t/etd-012620...

17.04.2025 07:22 — 👍 13 🔁 0 💬 0 📌 2

Hey hey! We have an accepted paper at #AISTATS2025!!

Time to prepare for Thailand 🪷🏖️🌴🐒

Huge thanks to my coauthors

Luigi Gresele, Sebastian Weichwald, and @seblachap.bsky.social for all the joint effort!

More details soon 👇

arxiv.org/abs/2410.235...

23.01.2025 12:18 — 👍 5 🔁 2 💬 0 📌 0

Don't miss the chance to learn more about our new #benchmark suite @ #NeurIPS2024

📊 New benchmarks to test concept quality learned by all kinds of models: Neural, NeSy, Concept-based, and Foundation models.

🤔 All models learn to solve the task, but beware: do they learn concepts??

Spoiler: 😱

11.12.2024 12:39 — 👍 4 🔁 1 💬 0 📌 0

📣 Does your model learn high-quality #concepts, or does it learn a #shortcut?

Test it with our #NeurIPS2024 dataset & benchmark track paper!

rsbench: A Neuro-Symbolic Benchmark Suite for Concept Quality and Reasoning Shortcuts

What's the deal with rsbench? 🧵

10.12.2024 19:10 — 👍 35 🔁 8 💬 1 📌 4

The #NeurIPS experience is about to start! ✈

Drop me a line if you want to chat about #neurosymbolic reasoning #shortcuts, human-interpretable machine #concepts, logically-consistent #LLMs, or human-in-the-➰ #XAI!

See you in Vancouver!

09.12.2024 01:25 — 👍 11 🔁 2 💬 0 📌 0

For those attending #NeurIPS2024: go to the UNIREPS workshop (@unireps.bsky.social) to learn more about representation similarity. Nice work led by @beatrixmgn.bsky.social 🌟

06.12.2024 16:21 — 👍 10 🔁 2 💬 0 📌 0

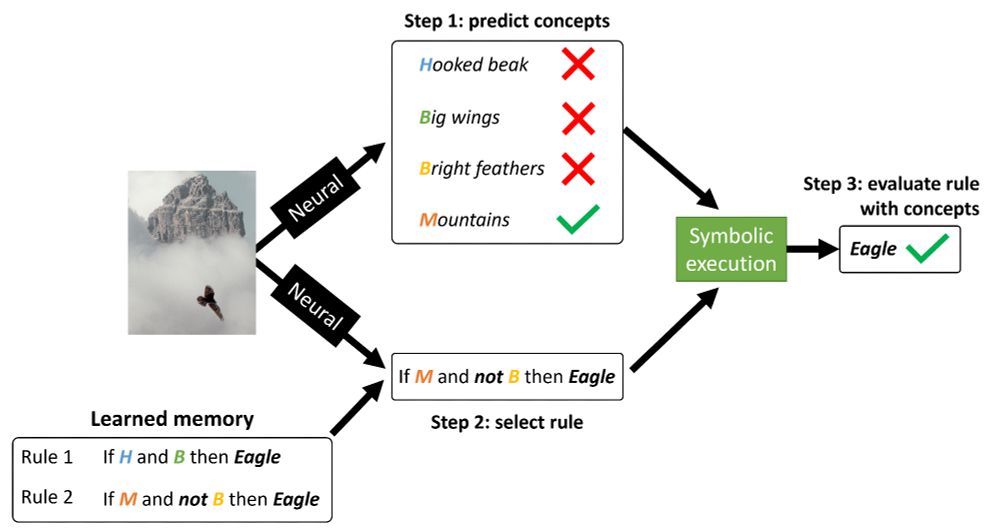

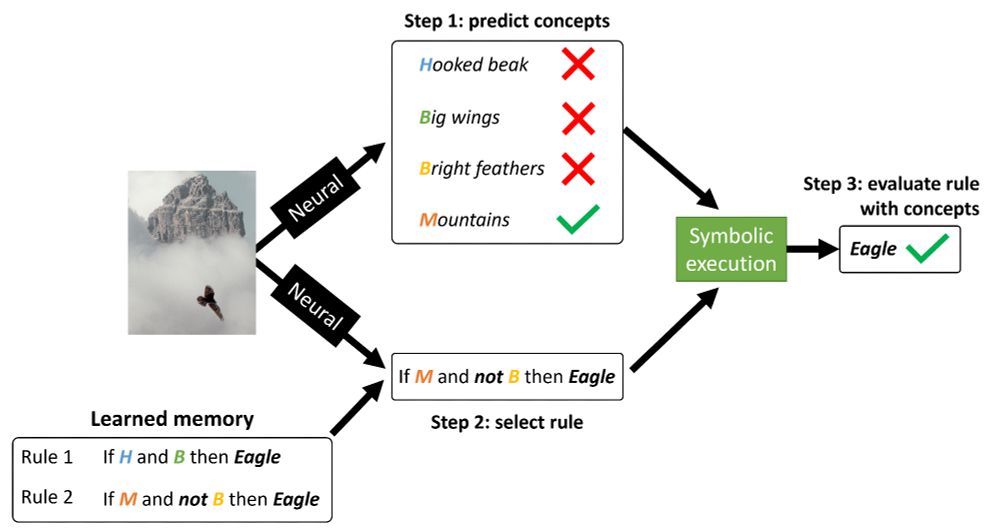

🚨 Interpretable AI often means sacrificing accuracy—but what if we could have both? Most interpretable AI models, like Concept Bottleneck Models, force us to trade accuracy for interpretability.

But not anymore, thanks to the Concept-Based Memory Reasoner (CMR)! #NeurIPS2024 (1/7)

04.12.2024 08:45 — 👍 24 🔁 7 💬 2 📌 0

Could you please add me? :)

04.12.2024 08:23 — 👍 1 🔁 0 💬 1 📌 0

We have been the premier conference on #uncertainty in #AI and #ML since 1985 🧓

Hello, 🦋!

Follow us to reduce uncertainty!

03.12.2024 16:08 — 👍 29 🔁 12 💬 0 📌 2

Then I agree 😄

02.12.2024 19:54 — 👍 1 🔁 0 💬 0 📌 0

What is the precise definition of feature?

02.12.2024 19:33 — 👍 1 🔁 0 💬 1 📌 0

I would like to request a few backstabs for Reviewer 2 🤬

28.11.2024 18:41 — 👍 2 🔁 0 💬 1 📌 0

I know @looselycorrect.bsky.social well enough eheh

21.11.2024 18:41 — 👍 2 🔁 0 💬 1 📌 0

The Symbol Grounding Problem

A symbol is a "physical token" (Harnad 1990, arxiv.org/html/cs/9906...)

21.11.2024 18:20 — 👍 2 🔁 0 💬 2 📌 0

Do you have answers? 😁

21.11.2024 18:14 — 👍 0 🔁 0 💬 1 📌 0

Assistant Professor at Bar-Ilan University

https://yanaiela.github.io/

🧑‍🎓 PhD stud. @ Sapienza, Rome

🥷stealth-mode CEO

🔬prev visiting @ Cambridge | RS intern @ Amazon Search | RS intern @ Alexa.

🆓 time 🎭improv theater, 🤿scuba diving, ⛰️hiking

PostDoc Researcher @ IIT, Continual and Lifelong Learning -> Robots, Graph Neural Networks, Sequence Processing | CoLLAs 2024 Local Chair

🏠 mtiezzi.github.io

[bridged from https://blog.neurips.cc/ on the web: https://fed.brid.gy/web/blog.neurips.cc ]

Senior Research Scientist at NEC. PhD in CS @ UniPi, Bayesian Deep Learning for Graphs. Trying to do Science 🔍, not SotA 🥇.

PhD student @dtai-kuleuven.bsky.social in neurosymbolic AI and concept-based learning

https://daviddebot.github.io/

Association for Uncertainty in AI.

Upcoming conference: #uai2025 July 21-25th in Rio de Janeiro, Brazil 🇧🇷 !

https://auai.org/uai2025

PhD student in machine learning at DTU, Copenhagen.

Especially interested in model representations.

Ph.D Student at the University of Trento

PhD student in NLP at Sapienza | Prev: Apple MLR, @colt-upf.bsky.social , HF Bigscience, PiSchool, HumanCentricArt #NLProc

www.santilli.xyz

French mathematician doing XAI and Fairness for #NLProc.

Prev: PhD in CS at ANITI Toulouse & MSc in maths at École polytechnique Paris.

https://fanny-jourdan.github.io/

Computation & Complexity | AI Interpretability | Meta-theory | Computational Cognitive Science

https://fedeadolfi.github.io

PostDoc @ISTAustria 🧑🏻‍💻 | Organizer of @unireps.bsky.social | Member @ellis.eu | Prev. PhD @SapienzaRoma @ELLISforEurope | @amazon AWS AI | @autodesk AI Lab | (he/him)

ELLIS PhD student @ UNITN/UniCambridge || Prev. Visiting Research Student at UniCambridge || Prev. Research intern at SISLab

Pahadi 🇮🇳| Assistant Professor at TU Eindhoven | Causality, Neuro-symbolic AI, Probabilistic Circuits and pretty much all of Machine Learning ;)

Research Fellow @ University of Trento. Studying ML models that know what they do not know. He/Him.

💻 PhD Student at @dh-fbk.bsky.social @mobs-fbk.bsky.social @land-fbk.bsky.social

🇮🇹 FBK, University of Trento

🇪🇺 @ellis.eu

☕ NLP, CSS and coffee

https://nicolopenzo.github.io/

Working towards the safe development of AI for the benefit of all at Université de Montréal, LawZero and Mila.

A.M. Turing Award Recipient and most-cited AI researcher.

https://lawzero.org/en

https://yoshuabengio.org/profile/