Steve Azzolin

@steveazzolin.bsky.social

ELLIS PhD student @ UNITN/UniCambridge || Prev. Visiting Research Student at UniCambridge || Prev. Research intern at SISLab

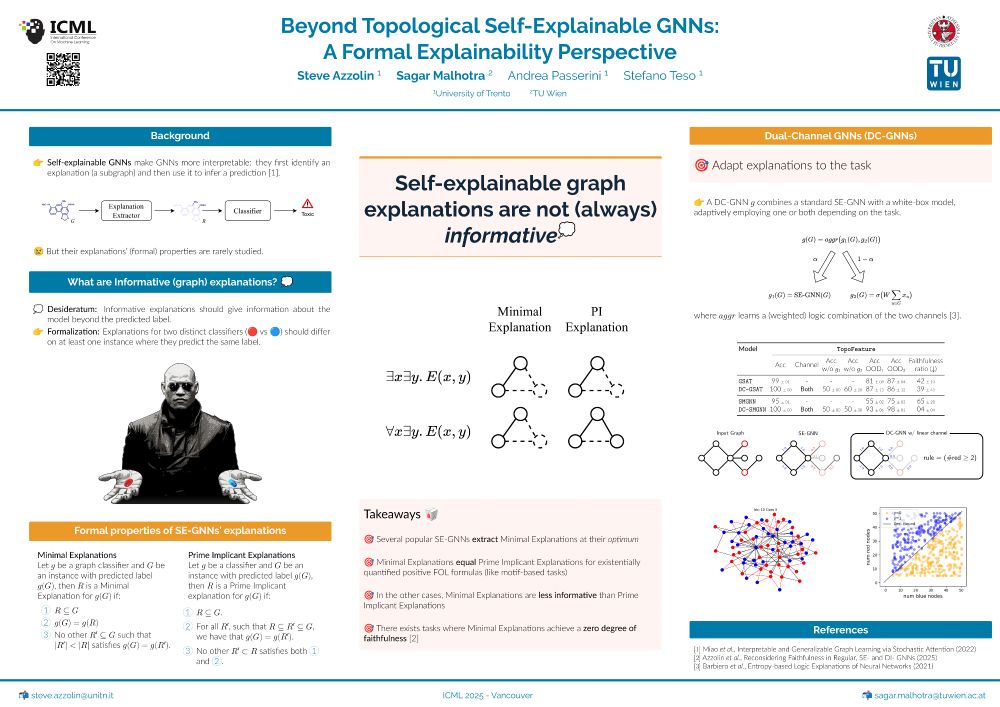

💡What can we do to make self-explanations less ambiguous?

-> We propose to automatically adapt explanations to the task by stitching together SE-GNNs with white-box models and combining their explanations.

- Self-explanations can be "unfaithful" by design

13.07.2025 17:29

- Models encoding different tasks can produce the same self-explanations, limiting the usefulness of explanations

13.07.2025 17:29

Studying some popular models, we found that:

- The information that self-explanations convey can radically change based on the underlying task to be explained, which is, however, generally unknown

🤔 What are the properties of self-explanations in GNNs? What can we expect from them?

We investigate this in our #ICML25 paper.

Come and have a chat at poster session 5, Thu 17, 11 am.

w. Sagar Malhotra @andreapasspr.bsky.social @looselycorrect.bsky.social

Happening tomorrow!

Poster number 508

Saturday's session 10-12:30

3. ITS ROLE IN OOD GENERALISATION

Domain-Invariant GNNs (DIGNNs) make predictions over a domain-invariant subgraph to achieve OOD generalisation. We show that unless this subgraph is also *sufficient*, DIGNNs are not domain-invariant.

5/5

2. HOW GNNs AIM TO ACHIEVE IT

We highlight several architectural design choices of Self-Explainable GNNs favoring information leakage from nodes outside the explanation, and propose mitigations.

4/5

We propose rethinking faithfulness from three essential angles:

1. HOW TO COMPUTE IT

Many ways to compute faithfulness exist, but we show:

- they are not interchangeable

- some of them do not have the desired semantics

3/5

Paper: "Reconsidering Faithfulness in Regular, Self-Explainable and Domain Invariant GNNs"

Link: openreview.net/forum?id=kiO...

Poster session: 26 April 10am

2/5

Faithfulness of GNN explanations isn’t one-size-fits-all🧢

Our latest @iclr-conf.bsky.social paper breaks it down across:

1. Evaluation metrics

2. Model implementations

3. OOD generalisation

w: Antonio L. @looselycorrect.bsky.social @andreapasserini.bsky.social

1/5

Kudos to the organisers for setting up the poster session in the fanciest room I've ever seen👀

08.12.2024 21:26

Hello World!

02.12.2024 09:45