Just under 10 days left to submit your latest endeavours in #tractable probabilistic models!

Join us at TPM @auai.org #UAI2025 and show how to build #neurosymbolic / #probabilistic AI that is both fast and trustworthy!

14.05.2025 17:48 — 👍 11 🔁 9 💬 0 📌 0

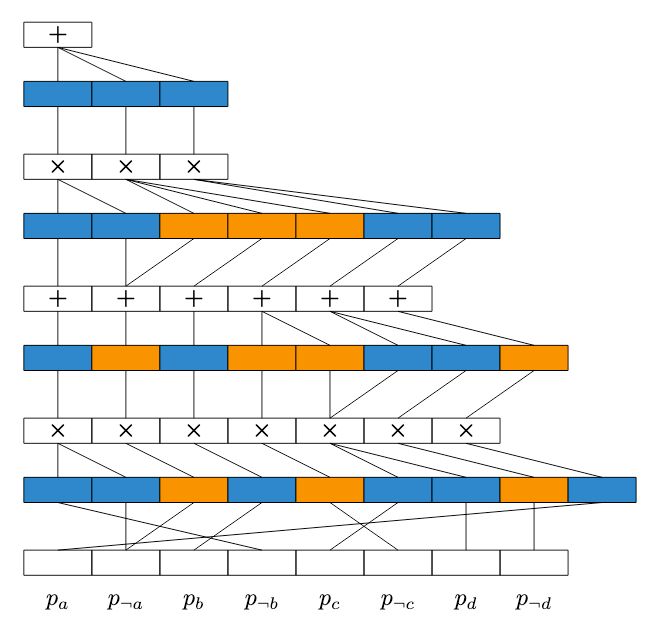

We developed a library to make logical reasoning embarrassingly parallel on the GPU.

For those at ICLR 🇸🇬: you can get the juicy details tomorrow (poster #414 at 15:00). Hope to see you there!

23.04.2025 08:12 — 👍 24 🔁 7 💬 1 📌 2

If you're at #AAAI2025, come check out our demo on neurosymbolic reinforcement learning with probabilistic logic shields 🤖 Tomorrow (Sat, March 1) from 12:30–2:30 PM during the poster session 💻

28.02.2025 22:53 — 👍 4 🔁 1 💬 0 📌 0

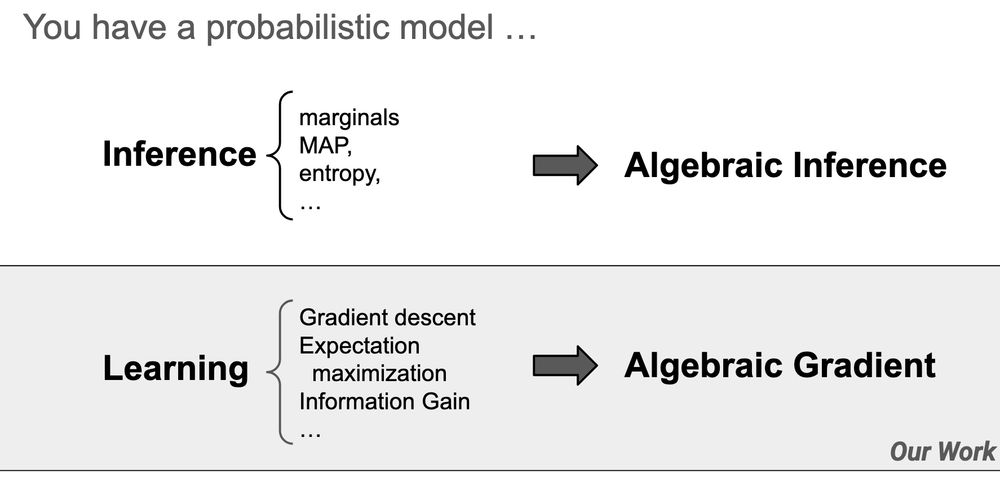

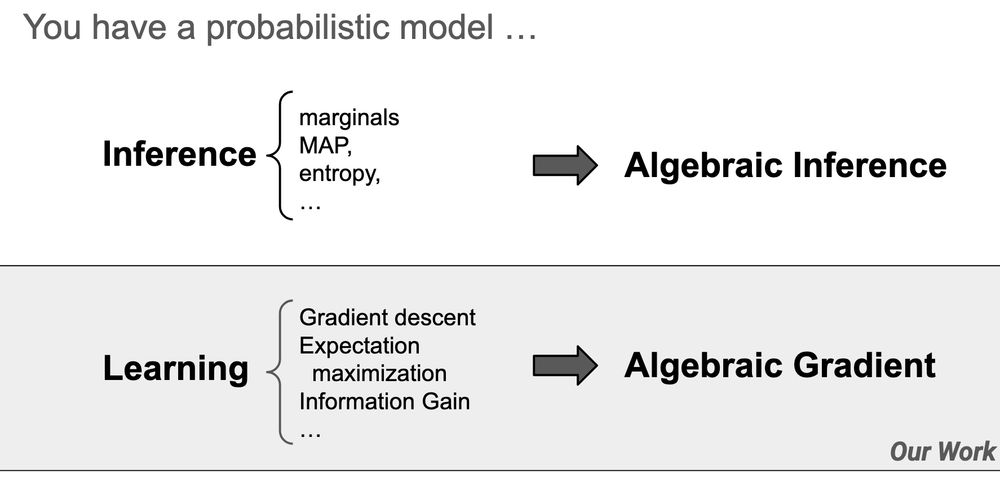

We all know backpropagation can calculate gradients, but it can do much more than that!

Come to my #AAAI2025 oral tomorrow (11:45, Room 119B) to learn more.

27.02.2025 23:45 — 👍 27 🔁 10 💬 1 📌 0

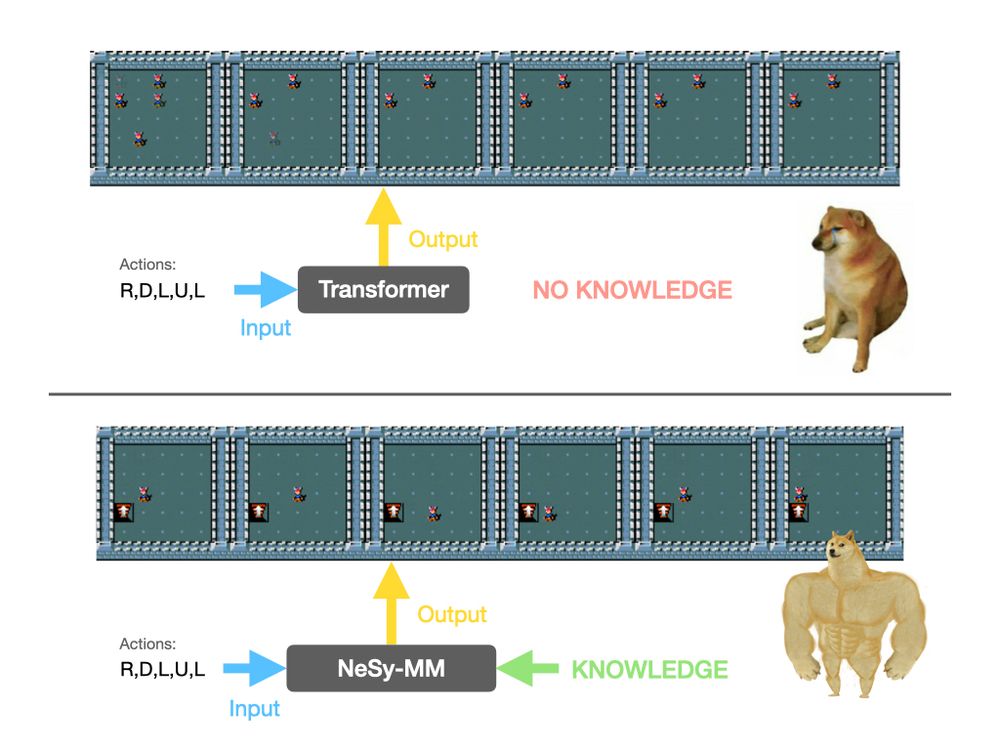

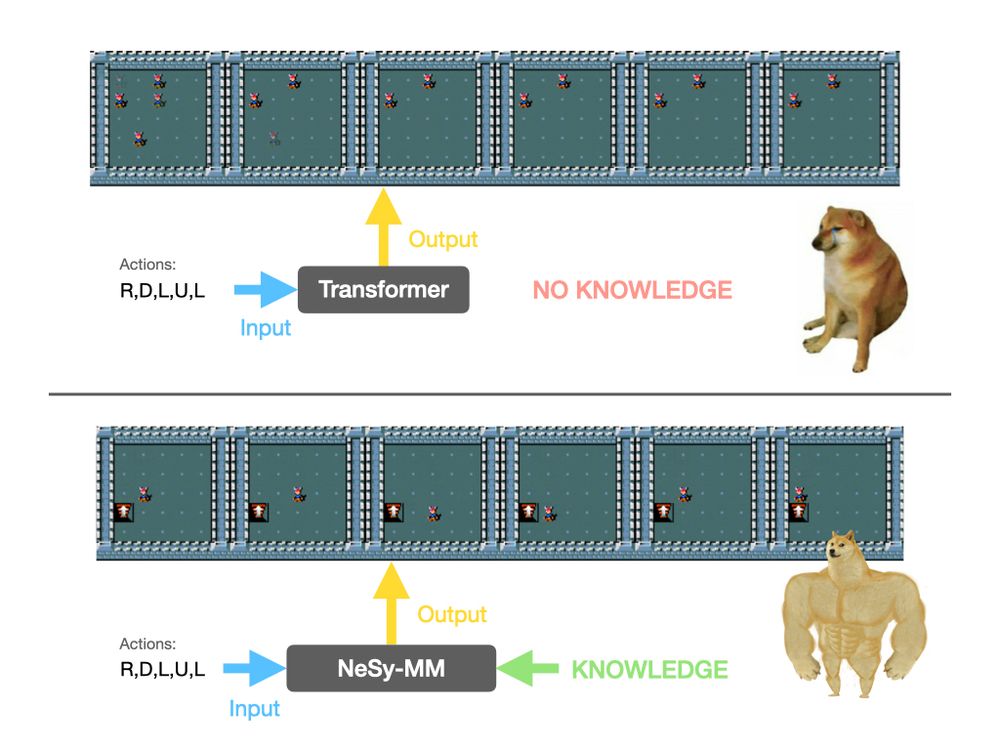

🔥 Can AI reason over time while following logical rules in relational domains? We will present Relational Neurosymbolic Markov Models (NeSy-MMs) next week at #AAAI2025! 🎉

📜 Paper: arxiv.org/pdf/2412.13023

💻 Code: github.com/ML-KULeuven/...

🧵⬇️

25.02.2025 11:01 — 👍 24 🔁 11 💬 1 📌 1

See you at #AAAI2025!

Site: dtai.cs.kuleuven.be/projects/nes...

Video: youtu.be/3uLVxwlcSQc?...

@daviddebot.bsky.social, @gabventurato.bsky.social, @giuseppemarra.bsky.social, @lucderaedt.bsky.social

#ReinforcementLearning #AI #MachineLearning #NeurosymbolicAI

(8/8)

24.02.2025 12:29 — 👍 0 🔁 0 💬 0 📌 0

Open-source & easy to use!

🔷 Code: github.com/ML-KULeuven/...

🔷 Based on MiniHack & Stable Baselines3

🔷 Define new shields in just a few lines of code!

🚀 Let’s make RL safer & smarter, together!

(7/8)

24.02.2025 12:28 — 👍 0 🔁 0 💬 1 📌 0

Want to try it yourself? 🎮

Use our interactive web demo!

🔷 Modify environments (add lava, monsters!)

🔷 Test shielded vs. non-shielded agents

🖥️ Play with it here: dtai.cs.kuleuven.be/projects/nes...

(6/8)

24.02.2025 12:28 — 👍 0 🔁 0 💬 1 📌 0

Why does this matter?

🔷 Faster training ⌛

🔷 Safer exploration 🔒

🔷 Better generalization 🌍

(5/8)

24.02.2025 12:27 — 👍 0 🔁 0 💬 1 📌 0

How does it work? 🤔🛡️

The shield:

✅ Exploits symbolic data from sensors 🌍

✅ Uses logical rules 📜

✅ Prevents unsafe actions 🚫

✅ Still allows flexible learning 🤖

A perfect blend of symbolic reasoning & deep learning!
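A minimal sketch of how such a shield can reweight a policy. It assumes the formulation we understand from Yang et al.: the shielded policy is π⁺(a|s) = P(safe|s,a)·π(a|s) / Σ_a' P(safe|s,a')·π(a'|s). Here P(safe|s,a) comes from a hand-written, hypothetical rule (a move is safe iff the target cell has neither lava nor a monster, with independent sensor readings) rather than from a full probabilistic logic program. The action names, sensor values and the helpers `p_safe` and `shield` are made up for illustration.

```python
import numpy as np

ACTIONS = ["north", "east", "south", "west"]  # hypothetical action set


def p_safe(p_lava, p_monster):
    """Hypothetical rule: a move is safe iff the target cell has no lava and no monster.
    Assuming independent sensor readings: P(safe|s,a) = (1 - P(lava)) * (1 - P(monster))."""
    return (1.0 - p_lava) * (1.0 - p_monster)


def shield(policy, safe_probs):
    """Reweight pi(a|s) by P(safe|s,a) and renormalise by P(safe|s)."""
    weighted = policy * safe_probs
    p_safe_state = weighted.sum()  # P(safe | s) under the unshielded policy
    if p_safe_state == 0.0:
        raise ValueError("no action has a non-zero probability of being safe")
    return weighted / p_safe_state


policy = np.array([0.4, 0.3, 0.2, 0.1])    # base policy, e.g. from a neural network
p_lava = np.array([0.9, 0.0, 0.0, 0.5])    # hypothetical symbolic sensor readings
p_monster = np.array([0.0, 0.0, 0.5, 0.0])
safe = p_safe(p_lava, p_monster)
shielded = shield(policy, safe)
for name, p in zip(ACTIONS, shielded):
    print(f"{name}: {p:.3f}")
```

In this toy state the lava-heavy `north` action drops from 0.4 to roughly 0.08, while the certainly safe `east` action rises to about 0.61. Because the shielded policy is a smooth function of π, gradients can still flow through it during training, which is one way to read "still allows flexible learning".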

(4/8)

24.02.2025 12:27 — 👍 1 🔁 0 💬 1 📌 0

Enter MiniHack, our demo's testing ground! 🏰🗡️

There, RL agents face:

✅ Lava cliffs & slippery floors

✅ Chasing monsters

✅ Locked doors needing keys

Findings:

🔷 Standard RL struggles to find an optimal, safe policy.

🔷 Shielded RL agents stay safe & learn faster!

(3/8)

24.02.2025 12:27 — 👍 0 🔁 0 💬 1 📌 0

Deep RL is powerful, but...

⚠️ It can take dangerous actions

⚠️ It lacks safety guarantees

⚠️ It struggles with real-world constraints

Yang et al.'s probabilistic logic shields fix this, enforcing safety without sacrificing learning efficiency! 🚀

(2/8)

24.02.2025 12:26 — 👍 0 🔁 0 💬 1 📌 0

🚀 Do you care about safe AI? Do you want RL agents that are both smart & trustworthy?

At #AAAI2025, we present our demo for neurosymbolic RL—combining deep learning with probabilistic logic shields for safer, interpretable AI in complex environments. 🏰🔥

🧵👇

(1/8)

24.02.2025 12:26 — 👍 6 🔁 4 💬 1 📌 1

YouTube video by David Debot

Interpretable Concept-Based Memory Reasoning - NeurIPS 2024

A short overview video can be found on YouTube: youtu.be/CgSDhQKESD0?...

#NeurIPS2024

23.12.2024 10:23 — 👍 0 🔁 0 💬 0 📌 0

Or check out our Medium post: 👉 medium.com/@pyc.devteam... (7/7)

04.12.2024 08:50 — 👍 2 🔁 0 💬 0 📌 0

NeurIPS Poster: Interpretable Concept-Based Memory Reasoning (NeurIPS 2024)

With CMR, we’re reaching the sweet spot of accuracy and interpretability. Check it out at our poster at #NeurIPS2024! 👉 neurips.cc/virtual/2024... (6/7)

04.12.2024 08:49 — 👍 3 🔁 0 💬 1 📌 0

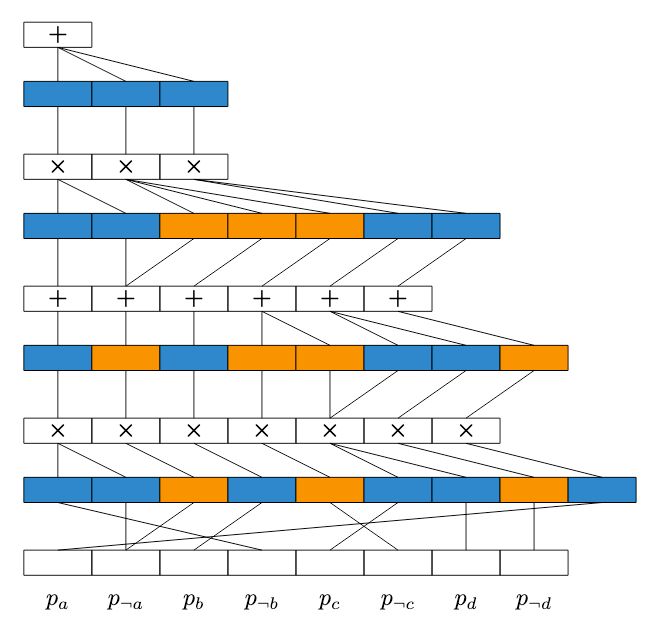

During training, CMR learns embeddings as latent representations of logic rules, and a neural rule selector identifies the most relevant rule for each instance. Thanks to a clever factorization and the rule selector, inference is linear in the number of concepts and in the number of rules. (5/7)
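The linear-time claim can be illustrated with a small sketch. It rests on assumptions (our reading, not a specification of the CMR code): concepts are independent given the input, the rule selector outputs a distribution p(r|x) over the rule memory, and each rule is a conjunction in which every concept appears as a positive literal, a negated literal, or not at all. Under those assumptions P(y=1|x) = Σ_r p(r|x) Π_i ℓ_ri, where ℓ_ri is P(c_i), 1 − P(c_i) or 1, so exact inference costs O(R·n) instead of enumerating all 2^n concept assignments. `exact_prob_y` and the role encoding are hypothetical names.

```python
import numpy as np

POS, NEG, IRR = 0, 1, 2  # hypothetical role of a concept in a conjunctive rule


def exact_prob_y(concept_probs, rule_probs, roles):
    """Exact P(y=1|x) = sum_r p(r|x) * P(rule r holds | x).

    concept_probs: shape (n,), P(c_i = 1 | x), assumed independent given x
    rule_probs:    shape (R,), rule-selector distribution p(r | x)
    roles:         shape (R, n), role of concept i in rule r
    """
    c = concept_probs[None, :]  # broadcast concept probabilities over all rules
    # Probability that each literal is satisfied; unused concepts contribute 1.
    literal = np.where(roles == POS, c, np.where(roles == NEG, 1.0 - c, 1.0))
    rule_holds = literal.prod(axis=1)  # product over concepts: O(R * n) work
    return float(rule_probs @ rule_holds)


concept_probs = np.array([0.9, 0.2, 0.7])
rule_probs = np.array([0.8, 0.2])
roles = np.array([[POS, NEG, IRR],   # c1 AND NOT c2
                  [IRR, POS, POS]])  # c2 AND c3
print(exact_prob_y(concept_probs, rule_probs, roles))
```

For these numbers, rule 0 holds with probability 0.9 · 0.8 = 0.72 and rule 1 with 0.2 · 0.7 = 0.14, so P(y=1|x) = 0.8 · 0.72 + 0.2 · 0.14 = 0.604; brute-force enumeration of all 8 concept assignments gives the same value.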

04.12.2024 08:49 — 👍 1 🔁 0 💬 1 📌 0

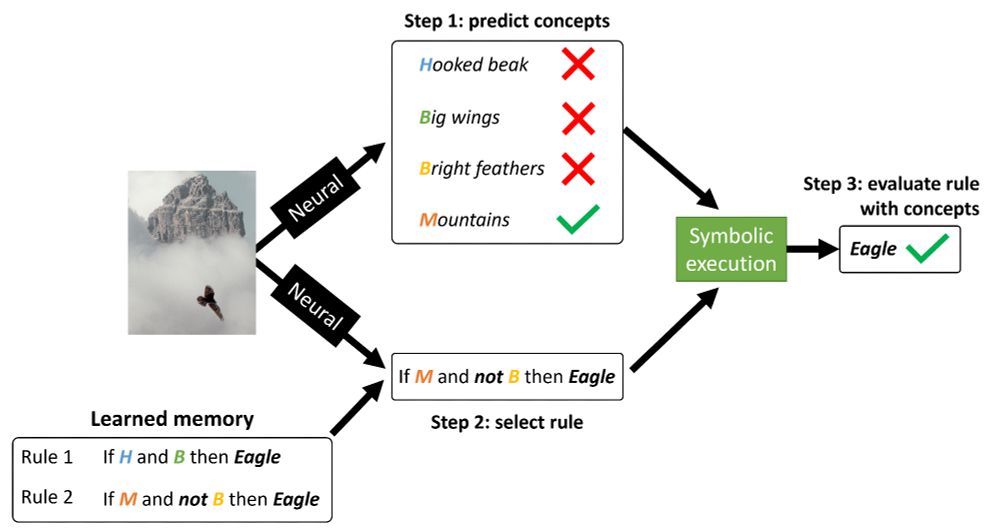

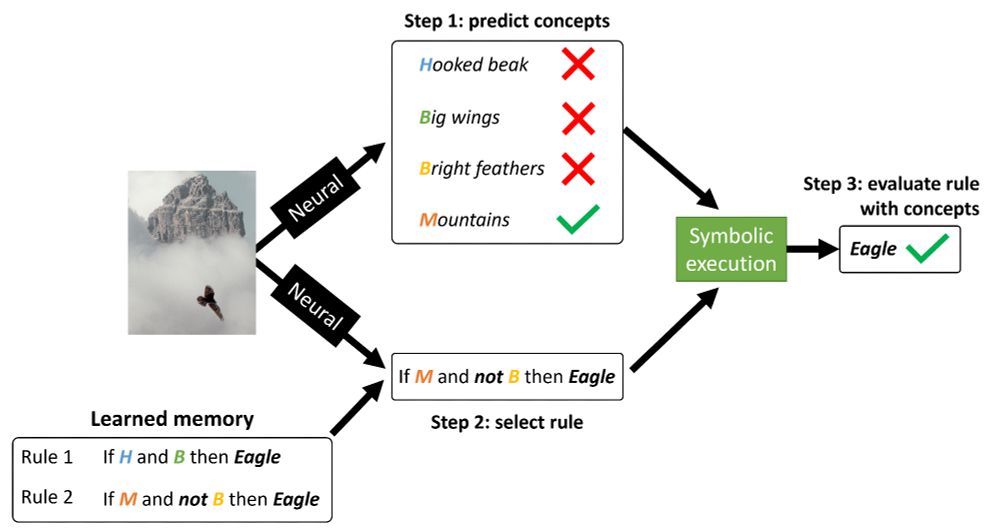

CMR makes a prediction in 3 steps:

1) Predict concepts from the input

2) Neurally select a rule from a memory of learned logic rules ➨ Accuracy

3) Evaluate the selected rule with the concepts to make a final prediction ➨ Interpretability (4/7)
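The three steps can be sketched with hard decisions at prediction time. This is an illustrative toy, not the CMR implementation: the concept names, the 0.5 threshold, the argmax rule selection, the helpers `predict` and `rule_to_str`, and the role encoding (positive, negated or unused literal in a conjunctive rule) are all assumptions. The point it shows is that the selected rule doubles as the explanation for the prediction.

```python
import numpy as np

# Hypothetical concept vocabulary and role encoding for a conjunctive rule.
CONCEPTS = ["has_wings", "has_beak", "is_red"]
POS, NEG, IRR = 0, 1, 2  # positive literal, negated literal, concept not used


def rule_to_str(role_row):
    """Render one rule from the memory as a readable conjunction."""
    literals = [name if role == POS else f"NOT {name}"
                for name, role in zip(CONCEPTS, role_row) if role != IRR]
    return " AND ".join(literals) if literals else "TRUE"


def predict(concept_probs, rule_logits, roles):
    # 1) Concepts: threshold the concept probabilities predicted from the input.
    concepts = concept_probs >= 0.5
    # 2) Rule selection: pick the most likely rule from the memory.
    r = int(np.argmax(rule_logits))
    # 3) Evaluation: the rule holds iff every used literal agrees with the concepts.
    holds = all(role == IRR or (role == POS) == bool(c)
                for role, c in zip(roles[r], concepts))
    return holds, r, rule_to_str(roles[r])


roles = np.array([[POS, IRR, NEG],   # rule 0: has_wings AND NOT is_red
                  [IRR, POS, POS]])  # rule 1: has_beak AND is_red
rule_logits = np.array([2.0, -1.0])  # hypothetical rule-selector scores
y, r, why = predict(np.array([0.95, 0.8, 0.1]), rule_logits, roles)
print(y, r, why)  # True 0 has_wings AND NOT is_red
```

With `is_red` predicted absent, rule 0 fires and the prediction comes with the explanation `has_wings AND NOT is_red`; raising the `is_red` probability above 0.5 makes the same rule fail.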

04.12.2024 08:48 — 👍 1 🔁 0 💬 1 📌 0

CMR has:

⚡ State-of-the-art accuracy that rivals black-box models

🚀 Pure probabilistic semantics with linear-time exact inference

👁️ Transparent decision-making so human users can interpret model behavior

🛡️ Pre-deployment verifiability of model properties (3/7)

04.12.2024 08:47 — 👍 1 🔁 0 💬 1 📌 0

CMR is our latest neurosymbolic concept-based model. A proven 𝘶𝘯𝘪𝘷𝘦𝘳𝘴𝘢𝘭 𝘣𝘪𝘯𝘢𝘳𝘺 𝘤𝘭𝘢𝘴𝘴𝘪𝘧𝘪𝘦𝘳 irrespective of the concept set, CMR achieves near-black-box accuracy by combining 𝗿𝘂𝗹𝗲 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴 and 𝗻𝗲𝘂𝗿𝗮𝗹 𝗿𝘂𝗹𝗲 𝘀𝗲𝗹𝗲𝗰𝘁𝗶𝗼𝗻! (2/7)

04.12.2024 08:47 — 👍 1 🔁 0 💬 1 📌 0

🚨 Interpretable AI often means sacrificing accuracy—but what if we could have both? Most interpretable AI models, like Concept Bottleneck Models, force us to trade accuracy for interpretability.

But not anymore, thanks to the Concept-Based Memory Reasoner (CMR)! #NeurIPS2024 (1/7)

04.12.2024 08:45 — 👍 24 🔁 7 💬 2 📌 0
