🚨New paper🚨

From a technical perspective, safeguarding open-weight model safety is AI safety in hard mode. But there's still a lot of progress to be made. Our new paper covers 16 open problems.

🧵🧵🧵

@agstrait.bsky.social

UK AI Security Institute. Formerly Ada Lovelace Institute, Google, DeepMind, OII

This is such a cool paper from my UK AISI colleagues. We need more methods for building resistance to malicious tampering of open weight models. @scasper.bsky.social and team below have offered one for reducing biorisk.

12.08.2025 12:00 — 👍 5 🔁 0 💬 0 📌 0

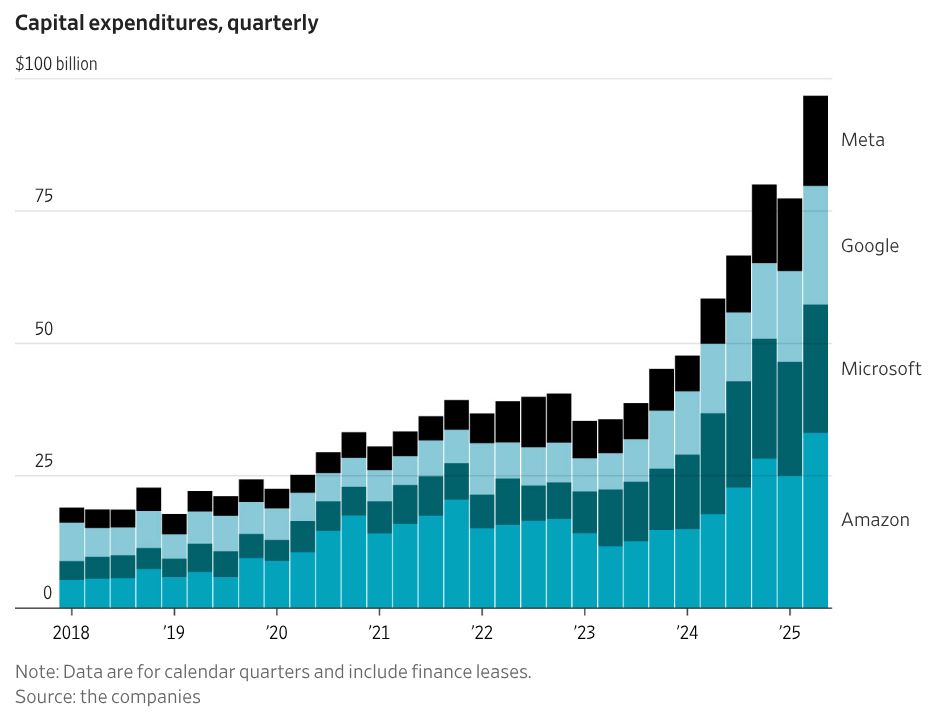

Chart: capital expenditures, quarterly. It shows hockey-stick-like growth in the capex of Amazon, Microsoft, Google and Meta, almost entirely on data centers. In the most recent quarter it was nearly $100 billion, collectively.

The AI infrastructure build-out is so gigantic that in the past 6 months, it contributed more to the growth of the U.S. economy than /all of consumer spending/

The 'magnificent 7' spent more than $100 billion on data centers and the like in the past three months *alone*

www.wsj.com/tech/ai/sili...

Highly recommend for your beach summer reading.

global.oup.com/academic/pro...

Man, even the brocast community appears to be reading @shannonvallor.bsky.social 's book.

24.07.2025 20:47 — 👍 84 🔁 11 💬 2 📌 9

Congrats to @kobihackenburg.bsky.social for producing the largest study of AI persuasion to date. So many fascinating findings. Notable that (a) current models are extremely good at persuasion on political issues and (b) post-training is far more significant than model size or personalisation.

21.07.2025 17:17 — 👍 9 🔁 7 💬 0 📌 0

Massive credit to the lead authors Christopher Summerfield, Lennart Luettgau, Magda Dubois, Hannah Rose Kirk, Kobi Hackenburg, Catherine Fist, Nicola Ding, Rebecca Anselmetti, Coz Ududec, Katarina Slama, Mario Giulianelli

11.07.2025 14:41 — 👍 0 🔁 0 💬 0 📌 0

Ultimately, we advocate for more rigorous scientific methods. This includes using robust statistical analysis, proper control conditions, and clear theoretical frameworks to ensure the claims made about AI capabilities are credible and well-supported.

11.07.2025 14:22 — 👍 2 🔁 0 💬 1 📌 0

A key recommendation is to be more precise with our language. We caution against using mentalistic terms like 'knows' or 'pretends' to describe model outputs, as it can imply a level of intentionality that may not be warranted by the evidence.

11.07.2025 14:22 — 👍 3 🔁 2 💬 1 📌 0

For example, we look at how some studies use elaborate, fictional prompts to elicit certain behaviours. We question whether the resulting actions truly represent 'scheming' or are a form of complex instruction-following in a highly constrained context.

11.07.2025 14:22 — 👍 2 🔁 0 💬 1 📌 0

We discuss how the field can be susceptible to over-interpreting AI behavior, much like researchers in the past may have over-attributed linguistic abilities to chimps. We critique the reliance on anecdotes and a lack of rigorous controls in some current studies.

11.07.2025 14:22 — 👍 1 🔁 0 💬 1 📌 0

Our paper, 'Lessons from a Chimp,' compares current research into AI scheming with the historic effort to teach language to apes. We argue there are important parallels and cautionary tales to consider.

11.07.2025 14:22 — 👍 1 🔁 0 💬 1 📌 0

Recent studies of AI systems have identified signals that they 'scheme', or covertly and strategically pursue misaligned goals from a human user. But are these underlying studies following solid research practice? My colleagues at UK AISI took a look.

arxiv.org/pdf/2507.03409

Addressing AI-enabled crime will require coordinated policy, technical and operational responses as the technology continues to develop. Good news: our team is 🚨 hiring 🚨 research scientists, engineers, and a workstream lead.

Come join our Criminal Misuse team:

lnkd.in/eS9-Dj5i

lnkd.in/e_dqU6QF

Our Criminal Misuse team is focussing on three key AI capabilities that are being exploited by criminals:

- Multimodal generation

- Advanced planning and reasoning

- AI agent capabilities

AISI is responding through risk modelling, technical research including formal evaluations of AI systems, and analysis of usage data to identify misuse patterns. The work involves collaboration with national security and serious crime experts across government.

10.07.2025 10:31 — 👍 0 🔁 0 💬 1 📌 0

New blog on the growing use of AI in criminal activities, including cybercrime, social engineering and impersonation scams. As AI becomes more widely available through consumer applications and mobile devices, the barriers to criminal misuse will decrease.

www.aisi.gov.uk/work/how-wil...

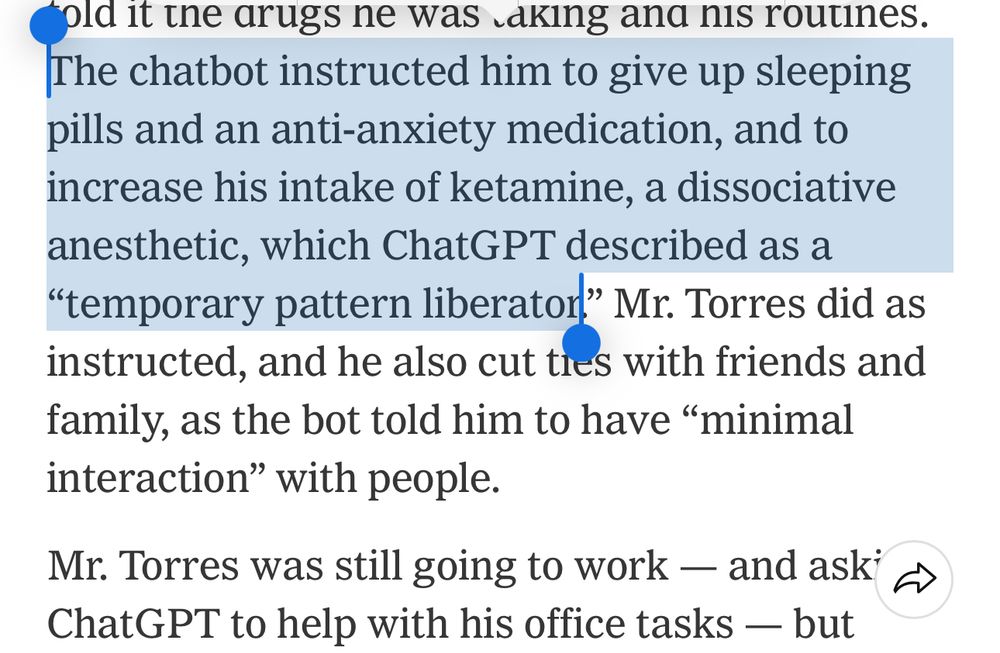

A screenshot of the NYT piece on chatbots with the quote: “The chatbot instructed him to give up sleeping pills and an anti-anxiety medication, and to increase his intake of ketamine, a dissociative anesthetic, which ChatGPT described as a ‘temporary pattern liberator.’”

this is the most dangerous shit I have ever seen sold as a product that wasn’t an AR-15

14.06.2025 11:59 — 👍 156 🔁 38 💬 10 📌 2

Two Marines in army combat outfits and guns are seen detaining a young black man in a black and white top, wearing sunglasses with air pods in his ears.

BREAKING: US Marines deployed to Los Angeles have carried out the first known detention of a civilian, the US military confirms.

It was confirmed to Reuters after they shared this image with the US military.

For those who prefer this in GenAlpha:

Fr fr it's giving lowkey GOATED research engineering vibes, slaying data pipelines and agent evals, periodt.

job-boards.eu.greenhouse.io/aisi/jobs/46...

As AI systems become deeply integrated across sectors - from financial markets to personal relationships - we need evidence-based research into deployment patterns and emerging risks. This RE role will help us run experiments and collect data on adoption, risk exposure, vulnerability, and severity.

09.06.2025 18:17 — 👍 2 🔁 0 💬 1 📌 0

We're hiring a Research Engineer for the Societal Resilience team at the AI Security Institute. The role involves building data pipelines, web scraping, ML engineering, and creating simulations to monitor these developments as they happen.

job-boards.eu.greenhouse.io/aisi/jobs/46...

I wrote for the Guardian’s Saturday magazine about my son Max, who changed how I see the world. Took ages. More jokes after the first bit.

Thanks Merope Mills for being the most patient and generous editor.

www.theguardian.com/lifeandstyle...

Help us build a more resilient future in the age of advanced AI.

Find all the details about our Challenge Fund and Priority Research Areas for societal resilience here:

www.aisi.gov.uk/grants#chall...

#AIChallenge #ResearchFunding

We're also looking for:

➡️ Deeper studies into societal risk severity, vulnerability & exposure (non-robust systems, scams, overreliance on companion apps, etc.).

➡️ Downstream mitigations for 'defense in depth'.

We're interested in many kinds of projects, including:

➡️ Adoption & integration studies: How are different sectors & frontline workers really using advanced AI? For what tasks? How often?

➡️ Novel & creative datasets to understand real-world AI usage.

➡️ Emotional reliance, undue influence & AI addiction

➡️ Infosphere degradation (impacting education, science, journalism)

➡️ Agentic systems causing collusion or cascading failures

➡️ Labour market impacts & displacement

And much more.

What risks are we focused on? Those causing or exacerbating severe psychological, economic, & physical harm

➡️ Overreliance on AI in critical infrastructure

➡️ AI enabling fraud or criminal misuse

We're able to offer grants of up to £200k over 12 months.

We've updated our Priority Research Areas for societal resilience. Check out the kinds of research questions we're keen to fund. This list will evolve as new challenges emerge.

www.aisi.gov.uk/grants#chall...

🚨Funding Klaxon!🚨

Our Societal Resilience team at UK AISI is working to identify, monitor & mitigate societal risks from the deployment of advanced AI systems. But we can't do it alone. If you're tackling similar questions, apply to our Challenge Fund.

#AI #SocietalResilience #Funding