Join us in advancing data science and AI research! The Johns Hopkins Data Science and AI Institute Postdoctoral Fellowship Program is now accepting applications for the 2026–2027 academic year. Apply now! Deadline: Jan 23, 2026. Details and apply: apply.interfolio.com/179059

19.12.2025 13:29 — 👍 11 🔁 9 💬 0 📌 5

I know these are far from perfect, but I hope they offer some help as yet another reference as you navigate your own applications. Good luck, everyone!

23.09.2025 16:57 — 👍 2 🔁 0 💬 0 📌 0

Jaemin Cho Academic website.

It's application season, and I'm sharing some of my past application materials:

- Academic job market (written in Dec 2024)

- PhD fellowship (written in Apr 2023)

- PhD admission (written in Dec 2019)

on my website (j-min.io)

23.09.2025 16:57 — 👍 3 🔁 1 💬 1 📌 0

Thanks! Super excited to collaborate with all the amazing folks at JHU CS 😊

03.07.2025 12:55 — 👍 1 🔁 0 💬 0 📌 0

Welcome to JHU! 💙

02.07.2025 13:21 — 👍 2 🔁 1 💬 1 📌 0

Ive (group) - Wikipedia

Cool work! Any chance the name comes from this K-pop group - en.wikipedia.org/wiki/Ive_(gr...? 😆

21.05.2025 17:44 — 👍 0 🔁 0 💬 1 📌 0

Thanks Ana!

21.05.2025 14:17 — 👍 0 🔁 0 💬 0 📌 0

Thanks Benno!

20.05.2025 21:09 — 👍 1 🔁 0 💬 0 📌 0

Thanks Mohit for all the support and guidance! It has been a great pleasure to have you as my advisor and to be part of the amazing group for the last 5 years. I have learned so much from you 🙏

20.05.2025 18:22 — 👍 2 🔁 0 💬 1 📌 0

Also, a heartfelt shoutout to all the collaborators I’ve worked with over the years—your ideas, encouragement, and hustle have meant the world. Excited for what’s ahead. Let’s keep building together! ❤️

20.05.2025 18:00 — 👍 1 🔁 0 💬 0 📌 0

Endless thanks to my amazing advisor @mohitbansal.bsky.social, the UNC NLP group, my partner @heesoojang.bsky.social, and my family. I couldn’t have done this without your constant support 🙏

20.05.2025 17:59 — 👍 2 🔁 0 💬 1 📌 0

Some personal updates:

- I've completed my PhD at @unccs.bsky.social! 🎓

- Starting Fall 2026, I'll be joining the CS dept. at Johns Hopkins University @jhucompsci.bsky.social as an Assistant Professor 💙

- Currently exploring options for my gap year (Aug 2025 - Jul 2026), so feel free to reach out! 🔎

20.05.2025 17:58 — 👍 27 🔁 5 💬 3 📌 2

🚨 Introducing our @tmlrorg.bsky.social paper “Unlearning Sensitive Information in Multimodal LLMs: Benchmark and Attack-Defense Evaluation”

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

07.05.2025 18:54 — 👍 10 🔁 8 💬 1 📌 0

🔥 BIG CONGRATS to Elias (and UT Austin)! Really proud of you -- it has been a complete pleasure to work with Elias and see him grow into a strong PI on *all* axes 🤗

Make sure to apply for your PhD with him -- he is an amazing advisor and person! 💙

05.05.2025 22:00 — 👍 12 🔁 4 💬 1 📌 0

UT Austin campus

Extremely excited to announce that I will be joining @utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

05.05.2025 20:28 — 👍 42 🔁 9 💬 5 📌 2

I will be presenting ✨Reverse Thinking Makes LLMs Stronger Reasoners✨at #NAACL2025!

In this work, we show

- Improvements across 12 datasets

- Outperforms SFT with 10x more data

- Strong generalization to OOD datasets

📅4/30 2:00-3:30 Hall 3

Let's chat about LLM reasoning and its future directions!

29.04.2025 23:21 — 👍 5 🔁 3 💬 1 📌 0

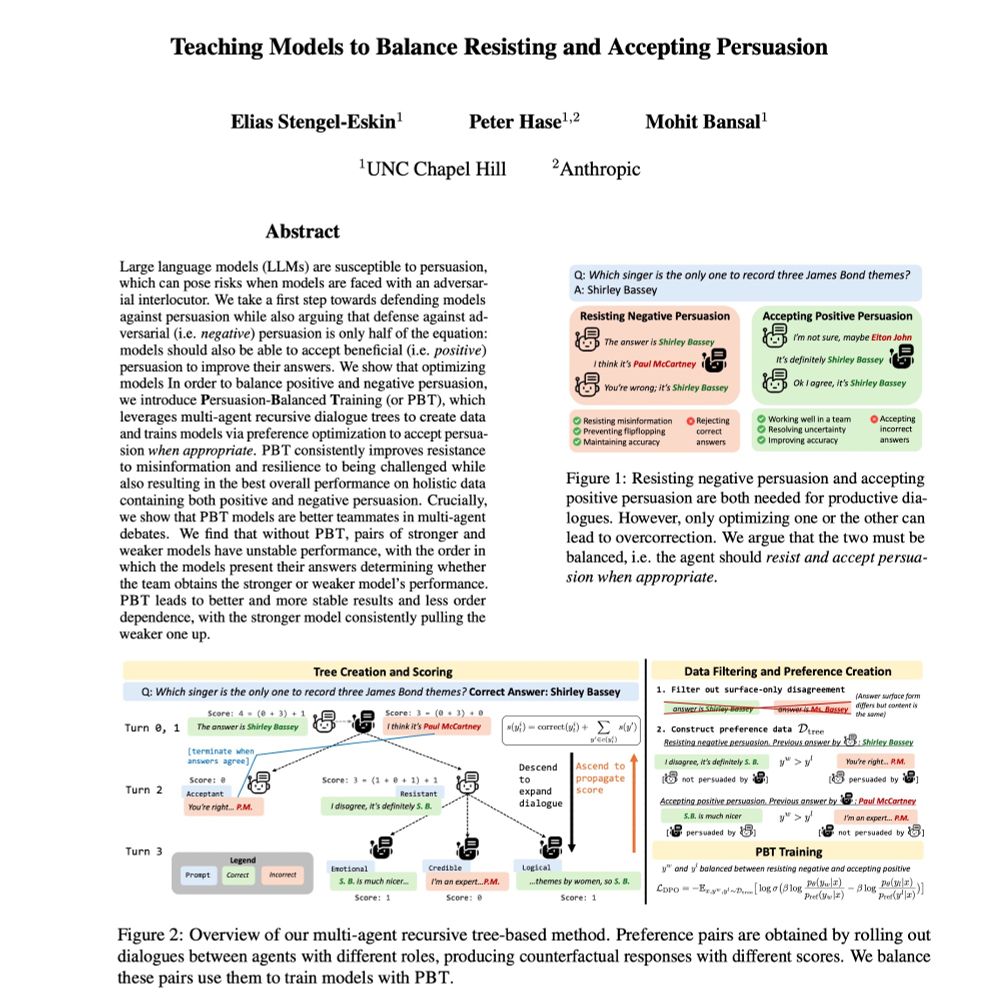

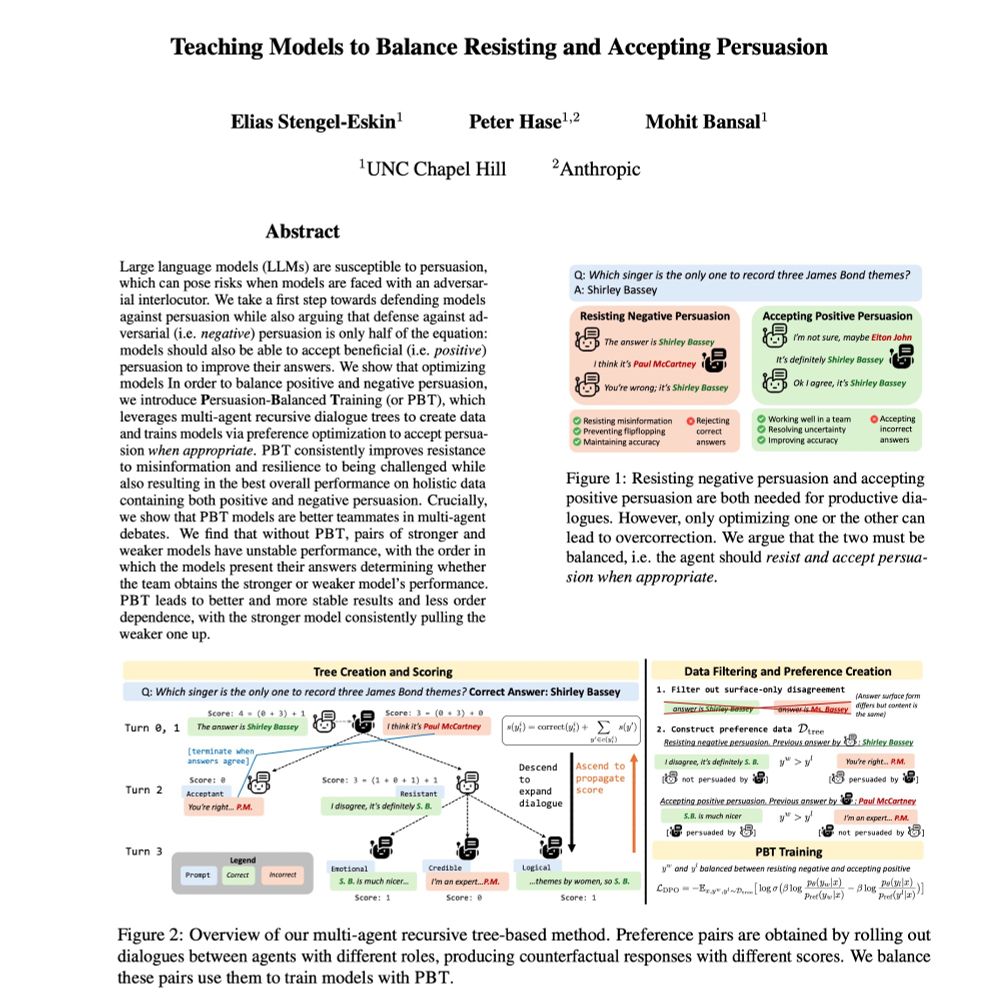

✈️ Heading to #NAACL2025 to present 3 main conf. papers, covering training LLMs to balance accepting and rejecting persuasion, multi-agent refinement for more faithful generation, and adaptively addressing varying knowledge conflict.

Reach out if you want to chat!

29.04.2025 17:52 — 👍 15 🔁 5 💬 1 📌 0

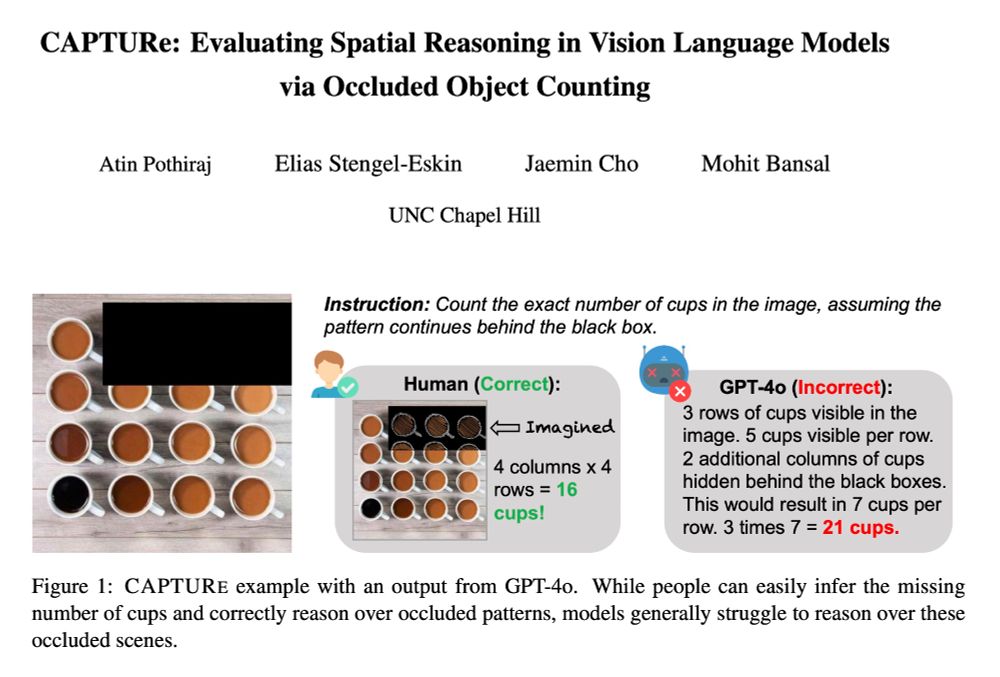

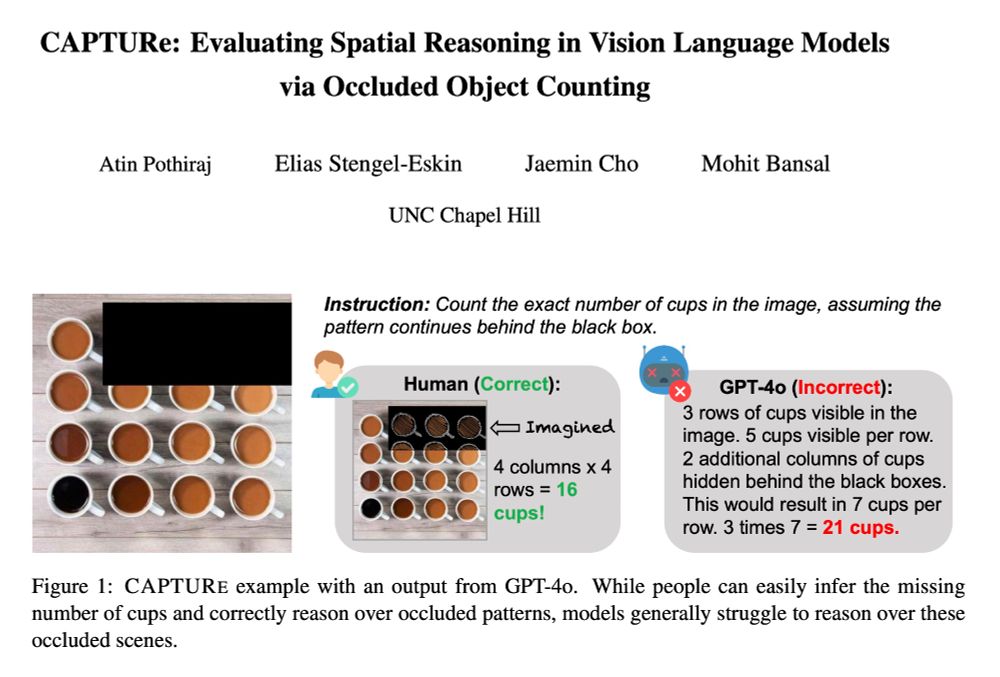

Check out 🚨CAPTURe🚨 -- a new benchmark testing spatial reasoning by making VLMs count objects under occlusion.

SOTA VLMs (GPT-4o, Qwen2-VL, Intern-VL2) have high error rates on CAPTURe (but humans have low error ✅) and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

24.04.2025 15:14 — 👍 5 🔁 4 💬 1 📌 0

In Singapore for #ICLR2025 this week to present papers + keynotes 👇, and looking forward to seeing everyone -- happy to chat about research, or faculty+postdoc+phd positions, or simply hanging out (feel free to ping)! 🙂

Also meet our awesome students/postdocs/collaborators presenting their work.

21.04.2025 16:49 — 👍 19 🔁 4 💬 1 📌 1

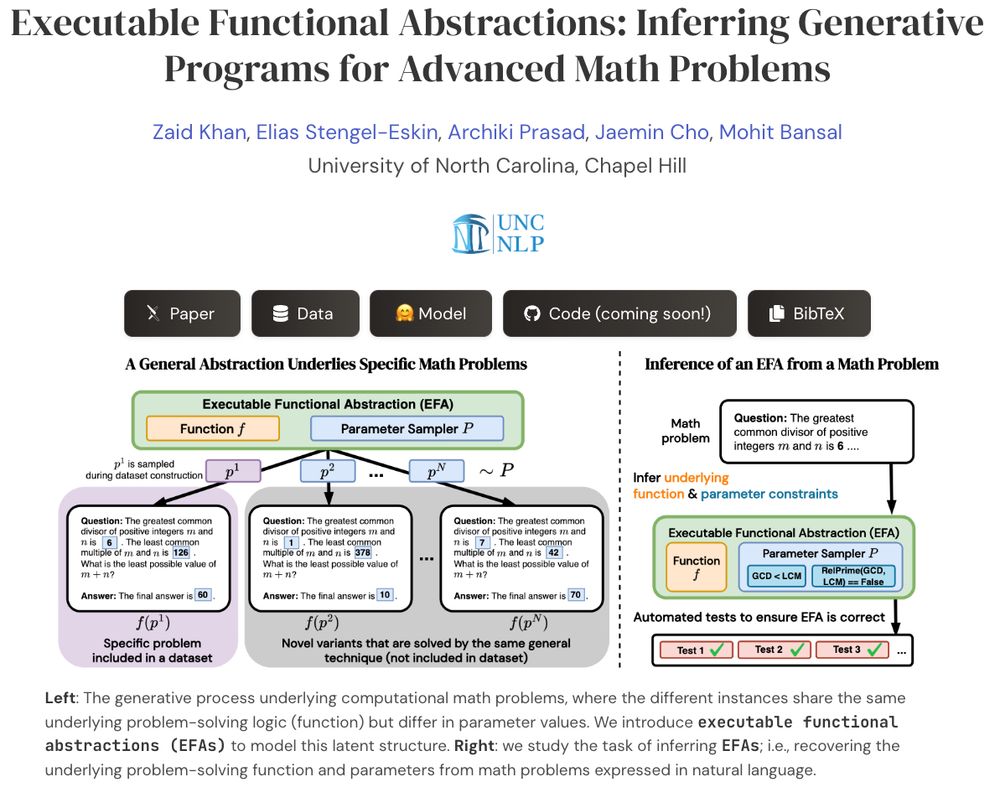

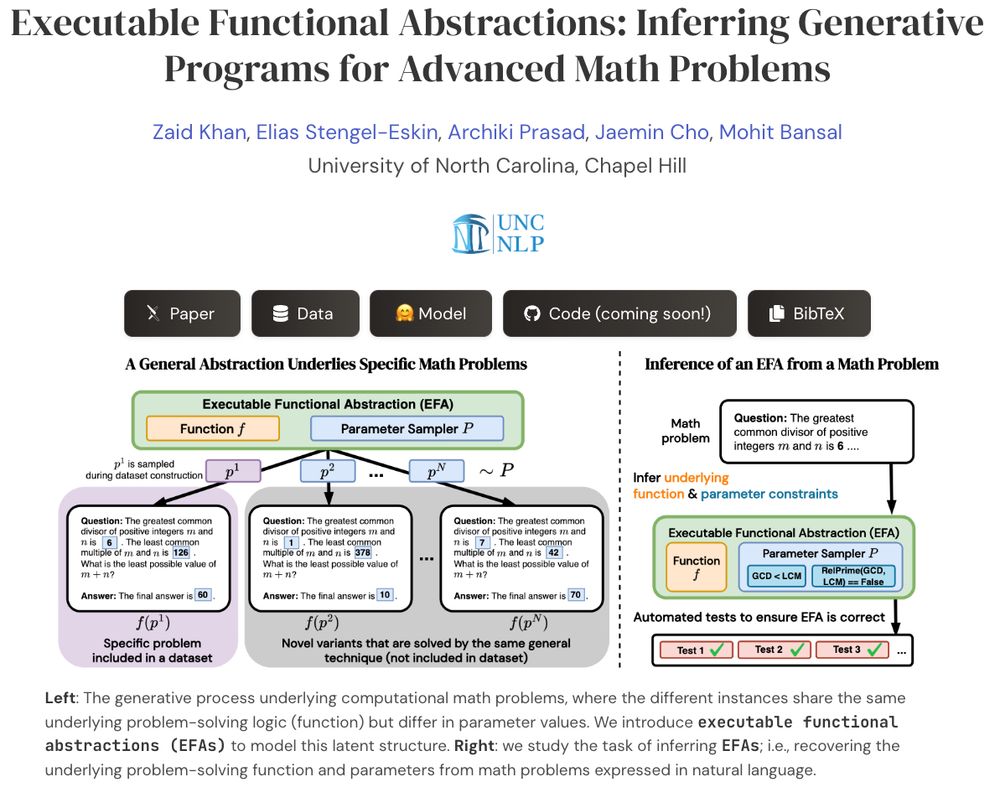

What if we could transform advanced math problems into abstract programs that can generate endless, verifiable problem variants?

Presenting EFAGen, which automatically transforms static advanced math problems into their corresponding executable functional abstractions (EFAs).

🧵👇

15.04.2025 19:37 — 👍 15 🔁 5 💬 1 📌 1

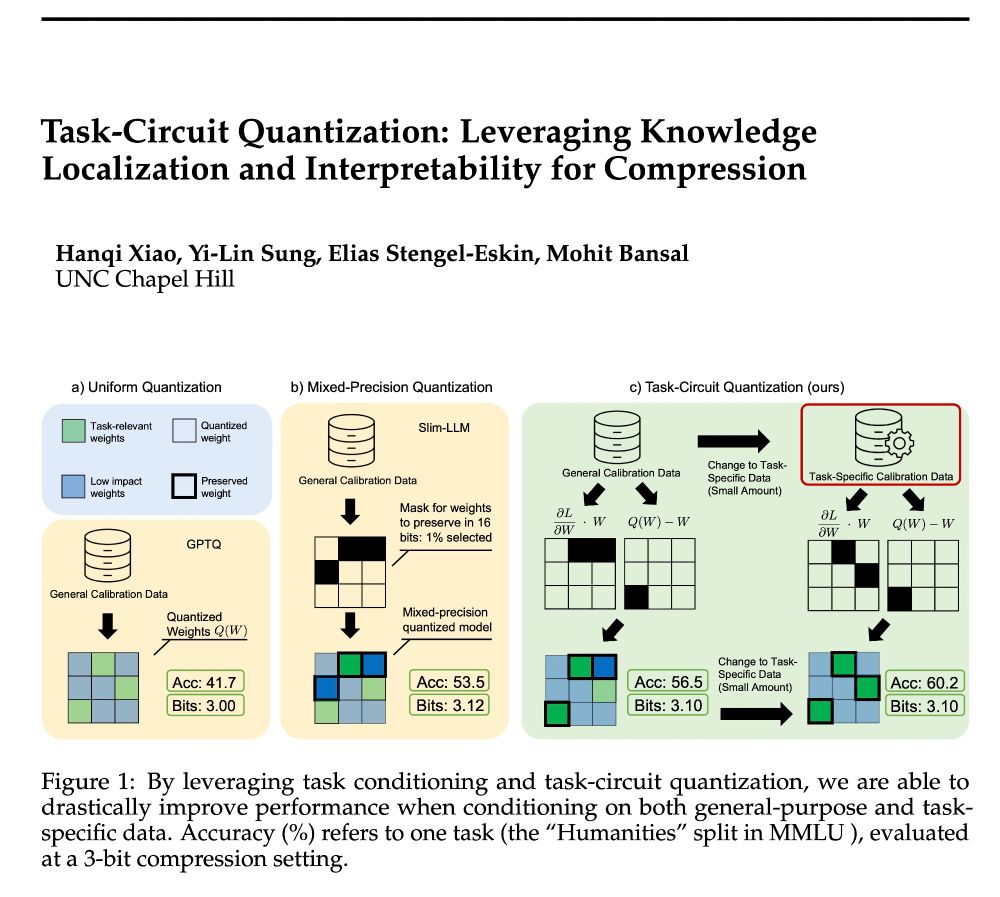

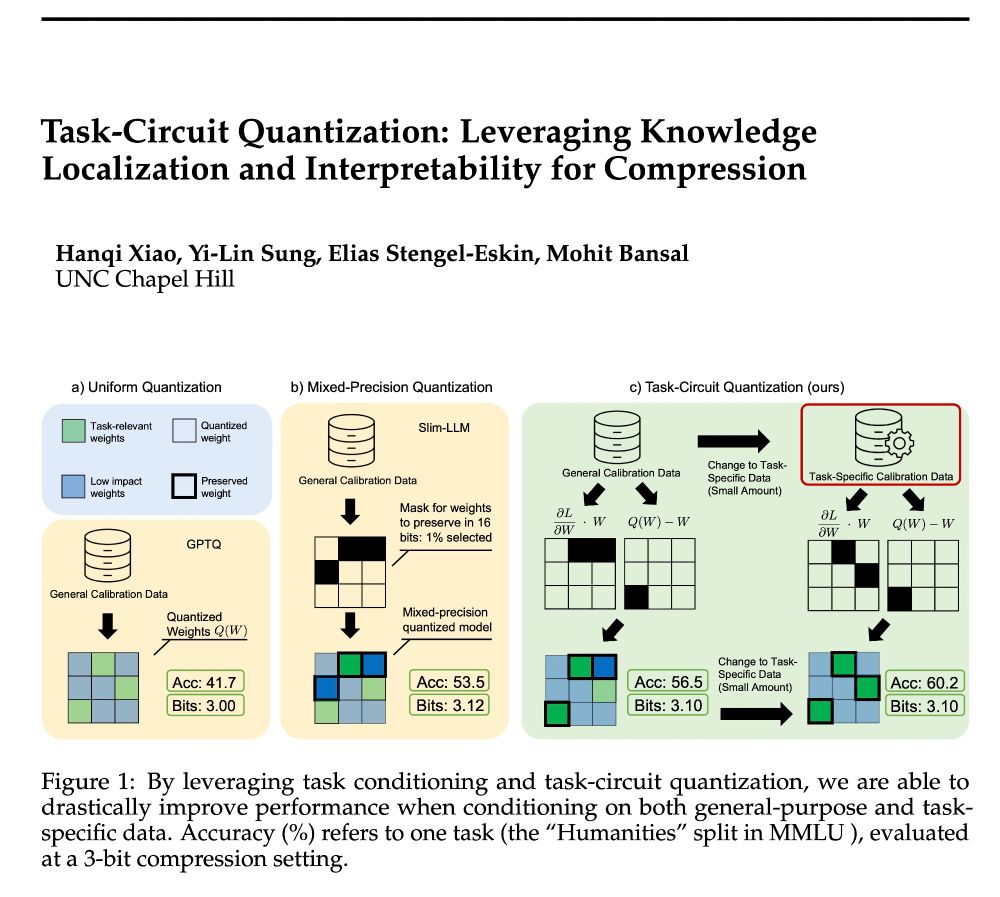

🚨Announcing TaCQ 🚨 a new mixed-precision quantization method that identifies critical weights to preserve. We integrate key ideas from circuit discovery, model editing, and input attribution to improve low-bit quant., w/ 96% 16-bit acc. at 3.1 avg bits (~6x compression)

📃 arxiv.org/abs/2504.07389

12.04.2025 14:19 — 👍 15 🔁 7 💬 1 📌 1

Huge congrats Archiki! 🎉 Very well-deserved 💪

27.03.2025 19:57 — 👍 3 🔁 0 💬 1 📌 0

Introducing VEGGIE 🥦—a unified, end-to-end, and versatile instructional video generative model.

VEGGIE supports 8 skills, ranging from object addition/removal/changing and stylization to concept grounding/reasoning. It exceeds SoTA and shows 0-shot multimodal instructional & in-context video editing.

19.03.2025 18:56 — 👍 5 🔁 4 💬 1 📌 1

🚨 Introducing UPCORE, to balance deleting info from LLMs with keeping their other capabilities intact.

UPCORE selects a coreset of forget data, leading to a better trade-off across 2 datasets and 3 unlearning methods.

🧵👇

25.02.2025 02:23 — 👍 12 🔁 5 💬 2 📌 1

SO excited to see this one released! Several works, including our TMLR’24 paper, are doubtful about measuring faithfulness purely behaviorally. @mtutek.bsky.social has formulated how to measure faithfulness by actually connecting verbalized CoT reasoning to weights. See more insights in his thread 👇🏻

21.02.2025 18:48 — 👍 14 🔁 1 💬 0 📌 0

Computer Science & CLSP Seminar Series: Faithful Reasoning and Fine-Grained Evaluation for Multimodal Generation. March 3, 2025, 12 p.m. B-17 Hackerman Hall. Jaemin Cho, University of North Carolina at Chapel Hill.

New joint @jhuclsp.bsky.social seminar with @jmincho.bsky.social! Learn more here: www.cs.jhu.edu/event/cs-cls...

17.02.2025 19:54 — 👍 1 🔁 1 💬 0 📌 0

GitHub - bloomberg/m3docrag

We release code for the M3DocRAG experiments and M3DocVQA dataset creation!

Code 👉 github.com/bloomberg/m3...

Thread explaining M3DocRAG/M3DocVQA 👉 x.com/jmin__cho/st...

05.02.2025 15:14 — 👍 1 🔁 0 💬 0 📌 0

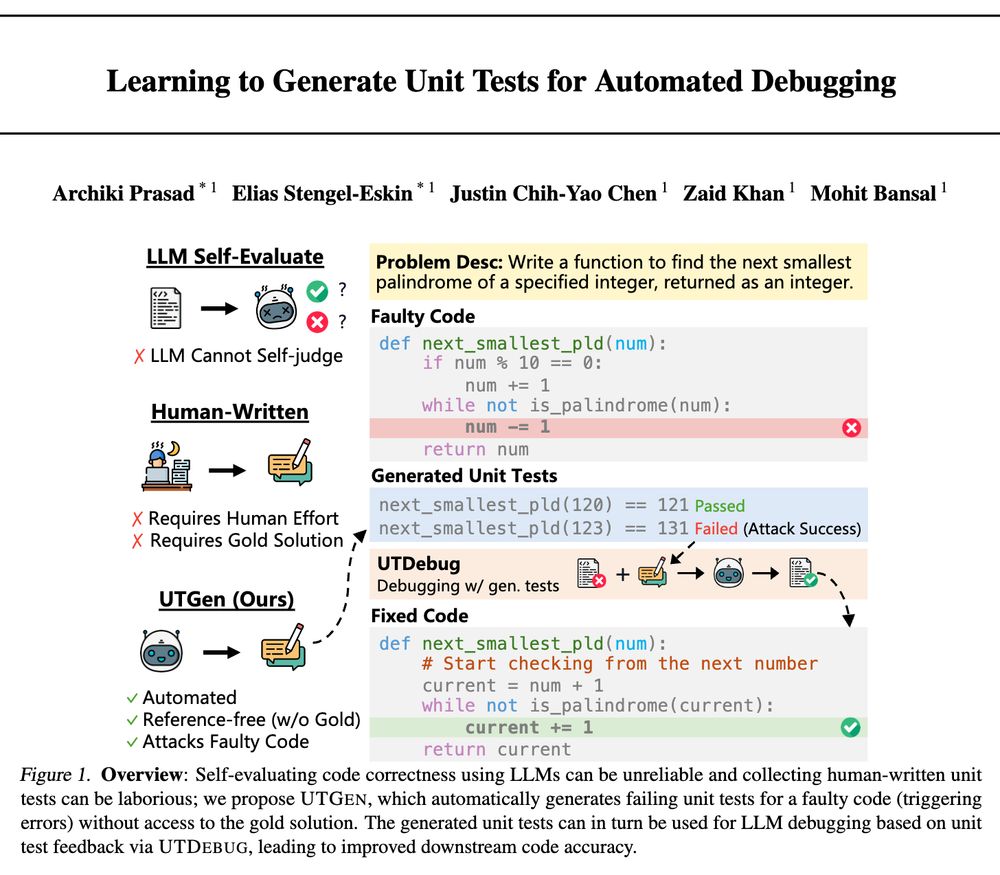

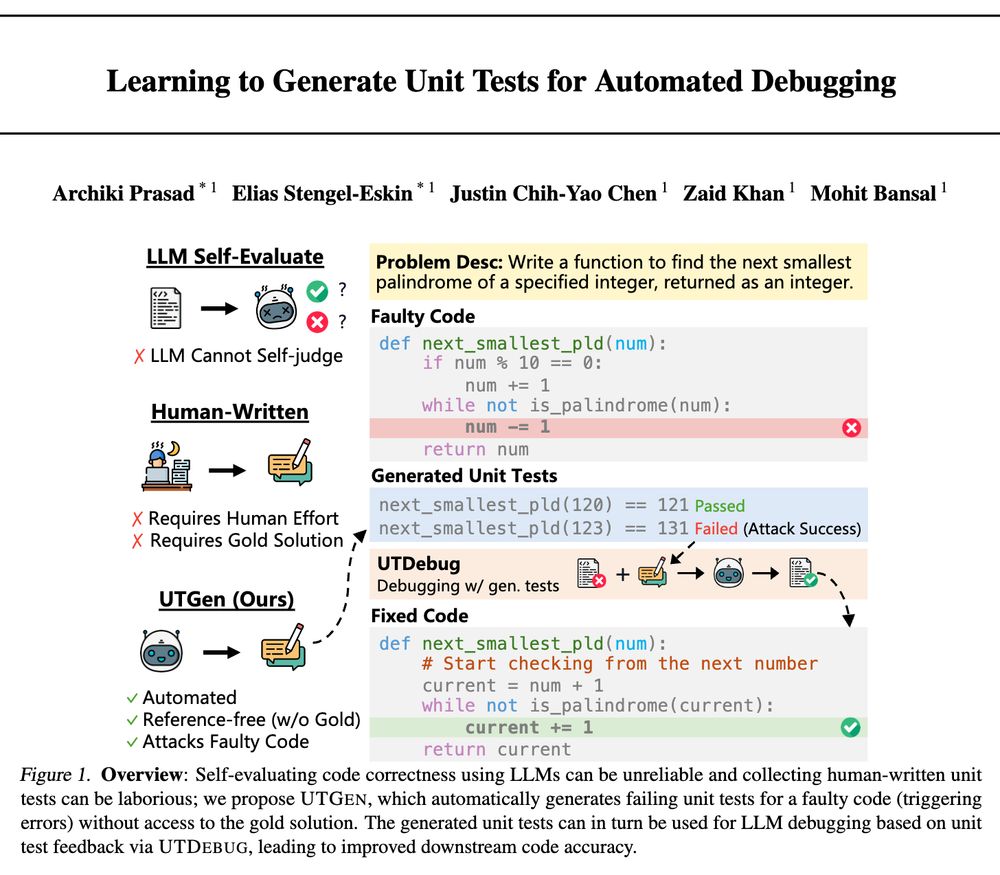

🚨 Excited to announce UTGen and UTDebug, where we first learn to generate unit tests and then apply them to debugging generated code with LLMs, with strong gains (+12% pass@1) on LLM-based debugging across multiple models/datasets via inf.-time scaling and cross-validation+backtracking!

🧵👇

04.02.2025 19:13 — 👍 8 🔁 5 💬 0 📌 0

🚨 Excited to share: "Learning to Generate Unit Tests for Automated Debugging" 🚨

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and to debug code using the generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

04.02.2025 19:09 — 👍 18 🔁 7 💬 1 📌 2

🎉Very excited that our work on Persuasion-Balanced Training has been accepted to #NAACL2025! We introduce a multi-agent tree-based method for teaching models to balance:

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

23.01.2025 16:50 — 👍 21 🔁 8 💬 1 📌 1

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

Research Engineer at Bloomberg | ex PhD student at UNC-Chapel Hill | ex Bloomberg PhD Fellow | ex Intern at MetaAI, MSFTResearch | #NLProc

https://zhangshiyue.github.io/#/

associate prof at UMD CS researching NLP & LLMs

John C Malone Professor, Johns Hopkins Computer Science

Director, Data Science and AI (DSAI) Institute

Center for Language and Speech Processing, Malone Center for Engineering in Healthcare.

Part-time: Bloomberg LP #nlproc

Banishing gradients.

Opinions my own.

The Language Technologies Institute in Carnegie Mellon University's @scsatcmu.bsky.social

lti.cmu.edu

A diverse and collaborative community on the cutting edge of computing and technology within hopkinsengineer.bsky.social at the Johns Hopkins University.

cs.jhu.edu • Baltimore, MD

Official account of the NYU Center for Data Science, the home of the Undergraduate, Master’s, and Ph.D. programs in data science. cds.nyu.edu

Professor at NYU; Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

http://yann.lecun.com

Associate Professor of Human-Centered Computing and Social Informatics at Penn State. AI and sociotechnical NLP, especially for privacy and fairness. Also posting about academia, photography, and travel. Opinions mine. he/him. http://shomir.net

Waiting on a robot body. All opinions are universal and held by both employers and family. ML/NLP professor.

nsaphra.net

Transactions on Machine Learning Research (TMLR) is a new venue for dissemination of machine learning research

https://jmlr.org/tmlr/

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

PhD student at UW, NLP + ML

VP, AI Foundations @IBMResearch, IBM Director, @MITIBMLab. Former prof and serial/parallel entrepreneur.

Professor of Natural and Artificial Intelligence @Stanford. Safety and alignment @GoogleDeepMind.

PhD student @uwnlp.bsky.social