FWIW, Tesla isn't the first to build an LFP plant in the US.

Looks like LGES started production in May (I think it's a joint venture with GM).

Tesla just has such a huge marketing megaphone that you hear about them more.

02.07.2025 23:00

Tesla has a lot more cash (big margins in 2020-2024, can sell stock, etc.), and it got a head start on batteries.

But the other US automakers are working on this.

GM has a joint LFP plant set for 2027.

LGES already opened an LFP plant in the US.

GM's Ultium platform is also snazzy.

02.07.2025 22:56

I once heard someone say that Tesla's best-selling product is its stock lol.

13.04.2025 17:20

I'm looking to hire a student researcher to work on an exciting 6-month project at DeepMind Montreal.

Requirements:

- Full-time masters/PhD student 🧑🏾🎓

- Substantial expertise in multi-agent RL, ideally including publication(s) 🤖🤖

- Strong Python coding skills 🐍

Is this you? Get in touch!

20.03.2025 00:29

Super excited to share that our paper, "Simplifying Deep Temporal Difference Learning," has been accepted as a spotlight at ICLR! My fab collaborator Matteo Gallici and I have written a three-part blog on the work, so stay tuned for that! :)

@flair-ox.bsky.social

arxiv.org/pdf/2407.04811

18.03.2025 11:48

NOETIX robot: 44 lbs, under 4 feet tall, 18 DoF, Jetson on board. Starting at $5.5k. At this rate, I am fairly convinced there will be robots absolutely everywhere within 5 years, although probably in more form factors than just humanoids.

15.03.2025 20:23

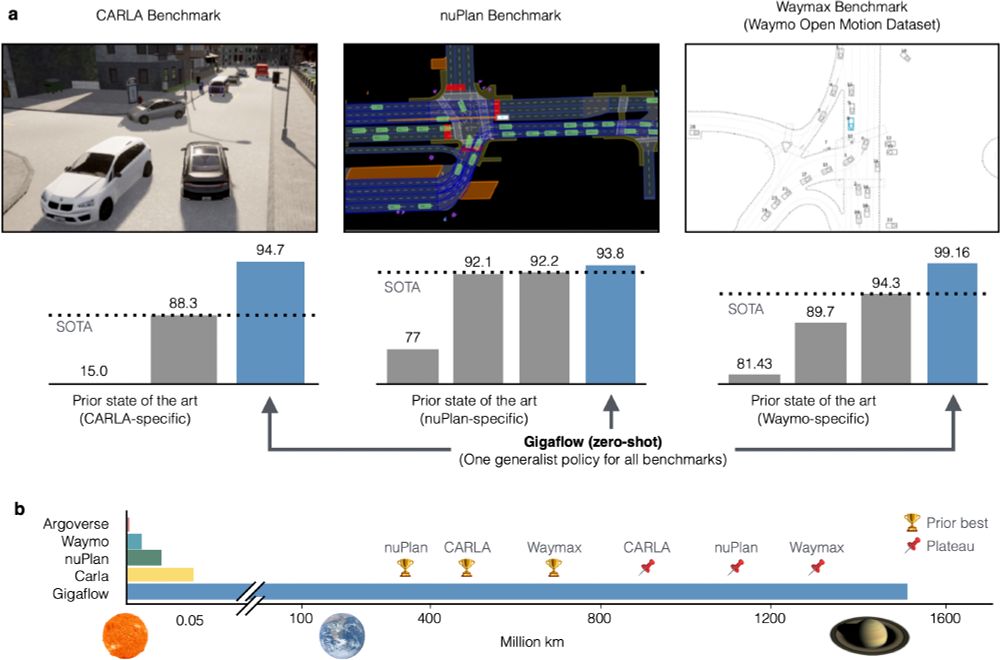

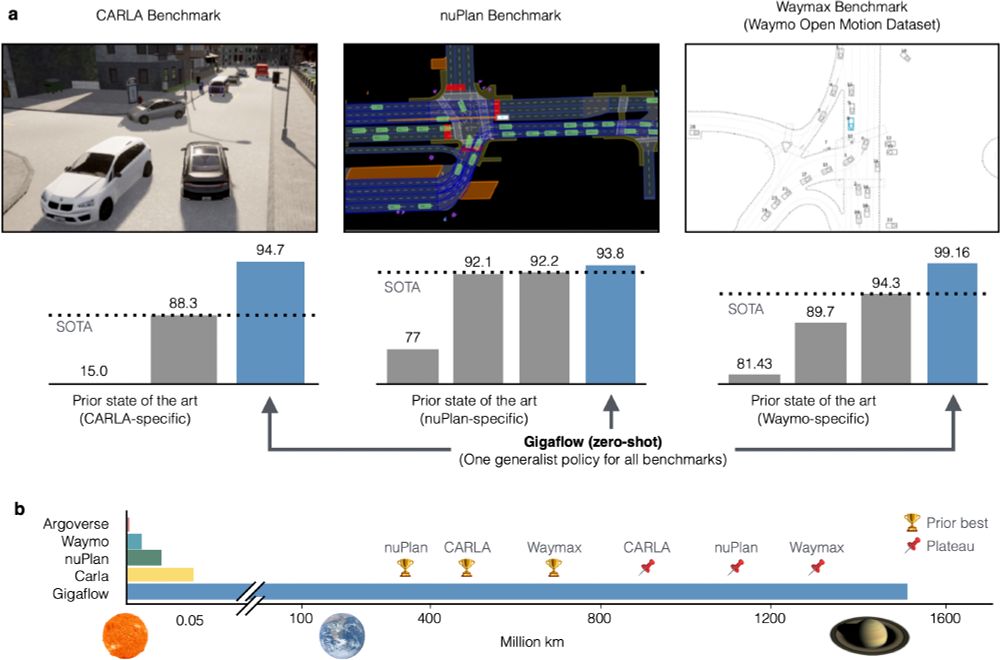

We've built a simulated driving agent that we trained on 1.6 billion km of driving with no human data.

It is SOTA on every planning benchmark we tried.

In self-play, it goes 20 years between collisions.

06.02.2025 18:34

A Song of Ice and Fire! I especially love the audiobooks.

A couple of the early Witcher books are good too.

26.12.2024 15:46

We will be presenting this tomorrow at NeurIPS in the evening poster session! Come stop by to chat!

13.12.2024 03:05

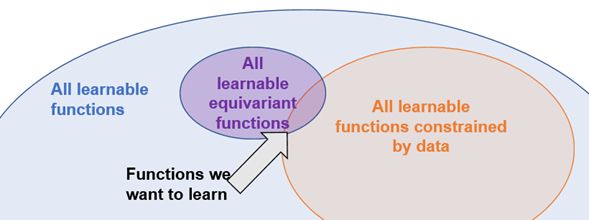

This robustness stems directly from its symmetry guarantees, so it loses less performance when adapting to new scenarios.

If you'll be at NeurIPS, come visit our poster next week to learn more and discuss the exciting future of MARL!

06.12.2024 15:20

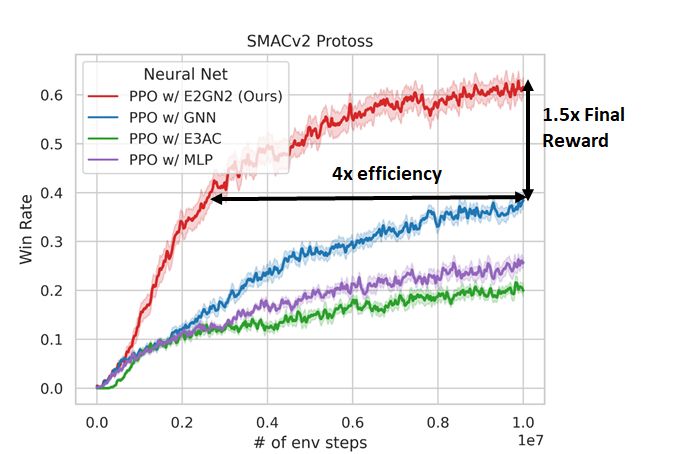

E2GN2 also shines when it comes to generalization. In tests where agents are trained on one SMACv2 scenario and then tested on a different one, E2GN2 demonstrates up to 5x greater performance than standard approaches.

06.12.2024 15:20

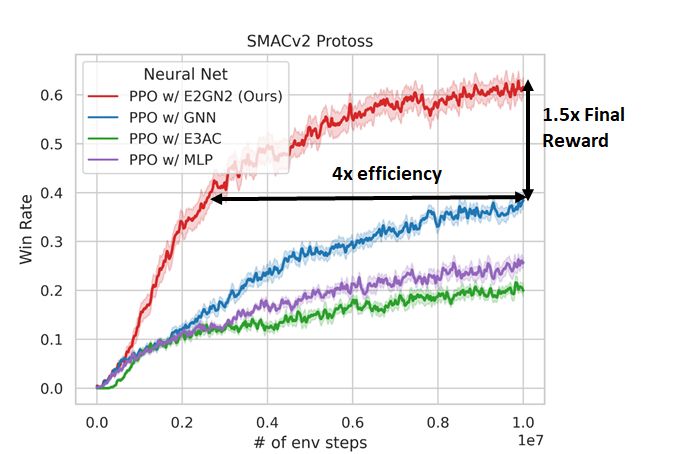

How much better is E2GN2? We see a remarkable 2x-5x improvement in sample efficiency over standard graph neural networks in the challenging SMACv2 benchmark. This means faster training times, leading to more rapid progress in MARL research.

06.12.2024 15:20

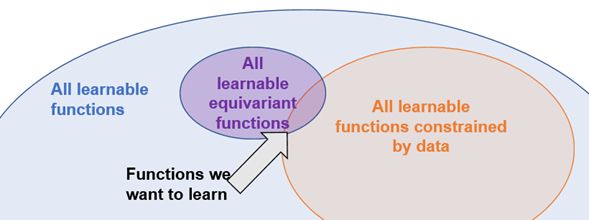

Imagine teaching a robot to play soccer. If it learns to pass the ball to the right, it should easily grasp how to pass to the left because of the inherent symmetry. E2GN2 bakes this concept of symmetry into the network architecture, allowing agents to learn more effectively.

06.12.2024 15:20
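The soccer analogy is about reflection symmetry: mirroring the field should mirror the policy. Below is a rough illustration of what building that in can look like. It is a minimal pure-Python sketch of one message-passing step in the style of E(n)-equivariant GNNs (EGNN, Satorras et al., 2021).

This is an assumption-laden toy, not E2GN2's actual architecture:

- `egnn_layer`, `mirror`, and `rotate` are hypothetical names.
- The fixed `tanh` expressions stand in for the learned MLPs a real model would use.

The layer only sees squared distances and moves each node along relative position vectors. As a result, mirroring or rotating the inputs mirrors or rotates the output positions, while the node features stay unchanged.

```python
import math


def egnn_layer(h, x):
    """One simplified EGNN-style message-passing step (illustrative sketch).

    h: list of scalar node features (should be invariant to symmetries).
    x: list of (px, py) node positions (should be equivariant).
    The fixed expressions below are hypothetical stand-ins for learned MLPs.
    """
    n = len(h)
    new_h, new_x = [], []
    for i in range(n):
        msg_sum, dx_sum, dy_sum = 0.0, 0.0, 0.0
        for j in range(n):
            if i == j:
                continue
            rx, ry = x[i][0] - x[j][0], x[i][1] - x[j][1]
            d2 = rx * rx + ry * ry  # squared distance: unchanged by rotation/reflection
            m_ij = math.tanh(h[i] + 0.5 * h[j] - 0.1 * d2)  # stand-in for phi_e
            w = 0.3 * m_ij  # stand-in for phi_x: a scalar weight on the relative vector
            dx_sum += rx * w
            dy_sum += ry * w
            msg_sum += m_ij
        c = 1.0 / (n - 1)  # average over neighbours
        new_x.append((x[i][0] + c * dx_sum, x[i][1] + c * dy_sum))
        new_h.append(h[i] + msg_sum)  # stand-in for phi_h
    return new_h, new_x


def mirror(points):
    """Reflect positions across the vertical axis (a pass right becomes a pass left)."""
    return [(-px, py) for px, py in points]


def rotate(points, theta):
    """Rotate positions counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * px - s * py, s * px + c * py) for px, py in points]


h = [1.0, 0.2, -0.5]
x = [(0.0, 0.0), (1.0, 0.5), (2.0, -1.0)]

h_out, x_out = egnn_layer(h, x)
h_mir, x_mir = egnn_layer(h, mirror(x))

# Equivariance: mirror-then-layer equals layer-then-mirror for positions.
for a, b in zip(mirror(x_out), x_mir):
    assert math.isclose(a[0], b[0], abs_tol=1e-9) and math.isclose(a[1], b[1], abs_tol=1e-9)
# Invariance: node features are unaffected by the mirror.
assert all(math.isclose(a, b) for a, b in zip(h_out, h_mir))
print("equivariant under mirroring")
```

In this toy, the symmetry is guaranteed by what the layer can see (distances) and by how it updates positions (along x_i - x_j), not by data augmentation. The left pass therefore never has to be learned separately from the right pass.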

Traditional neural networks (e.g., MLPs and GNNs) learn input/output relationships with few constraints, little structure, or few priors on the policies learned. These generic architectures lack a strong inductive bias, which makes them inefficient in terms of the training samples required.

06.12.2024 15:20

Our work focuses on addressing the challenges of sample inefficiency and poor generalization in Multi-Agent Reinforcement Learning (MARL), a crucial area of AI research with applications in robotics, game playing, and more.

06.12.2024 15:20

I'm excited to share that our paper, "Boosting Sample Efficiency and Generalization in Multi-agent Reinforcement Learning via Equivariance," has been accepted to NeurIPS 2024! 🎉

#NeurIPS #MARL #AI #ReinforcementLearning #MachineLearning #Equivariance #GraphNeuralNetworks

06.12.2024 15:20

National Insider for NFL Network and www.nfl.com. Made seven cameos in the movie Draft Day.

Inquiries: shahob@william-raymond.com

https://link.me/rapsheet

Professor at NYU; Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

http://yann.lecun.com

| MatchQuarters.com

| Let's Talk Ball! pod

| 6 books on defense

| No Frills Daily Football Clips

| '23 NFL Big Data Bowl Finalist

Professor of Neurobiology & Ophthalmology at Stanford Medicine • Host of the Huberman Lab podcast • Focused on science & health research & public education

I host a podcast called Football 301 that’s been described as “…entertaining with a slightly degenerate charm…”

It's always a good day for an elbow drop.

Is Butterbean OK?

Bot. I daily tweet progress towards machine learning and computer vision conference deadlines. Maintained by @chriswolfvision.bsky.social

A LLN - large language Nathan - (RL, RLHF, society, robotics), athlete, yogi, chef

Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

Principal Researcher @ Microsoft Research.

AI, RL, cog neuro, philosophy.

www.momen-nejad.org

Interested in cognition and artificial intelligence. Research Scientist at Google DeepMind. Previously cognitive science at Stanford. Posts are mine.

lampinen.github.io

Staff research scientist at Google DeepMind. AI and neuro.

Former physicist, current human.

Find more at www.janexwang.com

AI, RL, NLP, Games. Asst Prof at UCSD

Research Scientist at Nvidia

Lab: http://pearls.ucsd.edu

Personal: prithvirajva.com

AI and Games Researcher at NYU.

Professor, Department of Psychology and Center for Brain Science, Harvard University

https://gershmanlab.com/

Assistant professor at NUS. Scaling cooperative intelligence & infrastructure for an increasingly automated future. PhD @ MIT ProbComp / CoCoSci. Pronouns: 祂/伊

Researcher in robotics and machine learning (Reinforcement Learning). Maintainer of Stable-Baselines (SB3).

https://araffin.github.io/

AGI research @DeepMind.

Ex cofounder & CTO Vicarious AI (acqd by Alphabet),

Cofounder Numenta

Triply EE (BTech IIT-Mumbai, MS&PhD Stanford). #AGIComics

blog.dileeplearning.com

Safe and robust AI/ML, computational sustainability. Former President AAAI and IMLS. Distinguished Professor Emeritus, Oregon State University. https://web.engr.oregonstate.edu/~tgd/

assistant prof at USC Data Sciences and Operations and Computer Science; phd Cornell ORIE.

data-driven decision-making, operations research/management, causal inference, algorithmic fairness/equity

bureaucratic justice warrior

angelamzhou.github.io

Full of childlike wonder. Building friendly robots. UT Austin PhD student, MIT ’20.

Host TalkRL Podcast, Aspiring RL researcher

AgFunder VC Head of Eng, Ex-MSFT, Waterloo computer engineering

Sunshine Coast BC Canada