Dr. Arvind Narayanan (@randomwalker.bsky.social) will be joining us as a Keynote Speaker at the Conference on Society-Centered AI 2026! Join us Feb 12-14 at Duke for industry and academic keynotes, research spotlight talks, and poster sessions. Learn more and register here: sites.duke.edu/scai/

07.01.2026 15:00 — 👍 6 🔁 2 💬 0 📌 1

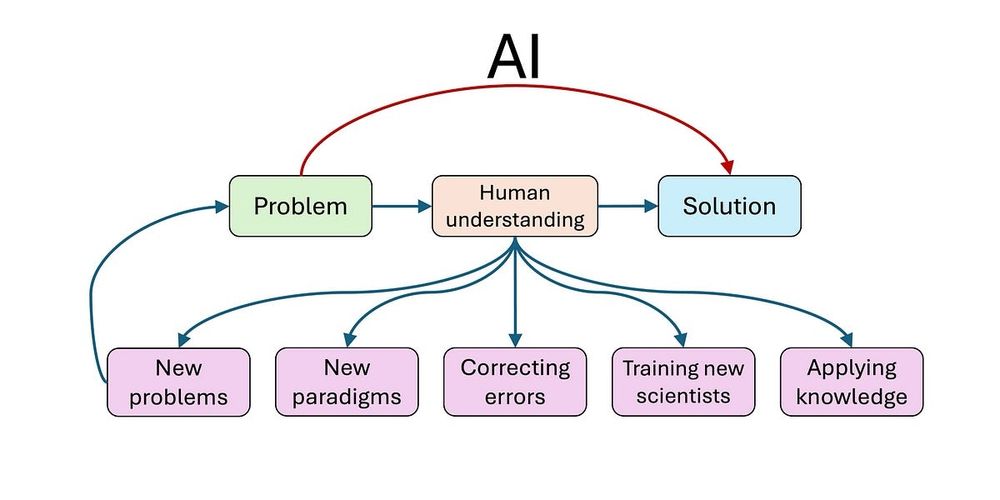

After science

Twenty-five years ago, Ted Chiang wrote a prescient science fiction short that began: “It has been 25 years since a report of original research was last submitted to our editors for publication, makin...

New piece w/ James Evans in Science explores what we call 'science after science', an era where our ability to control nature may exceed our ability to understand it; a new struggle to sustain curiosity & understanding under AI's predictive dominance. #ai #science

www.science.org/doi/10.1126/...

14.11.2025 18:23 — 👍 25 🔁 10 💬 0 📌 0

Three schematic diagrams. The first illustrates selective publishing of internal research, the second selective causal focus, and the third selective access and funding for researchers.

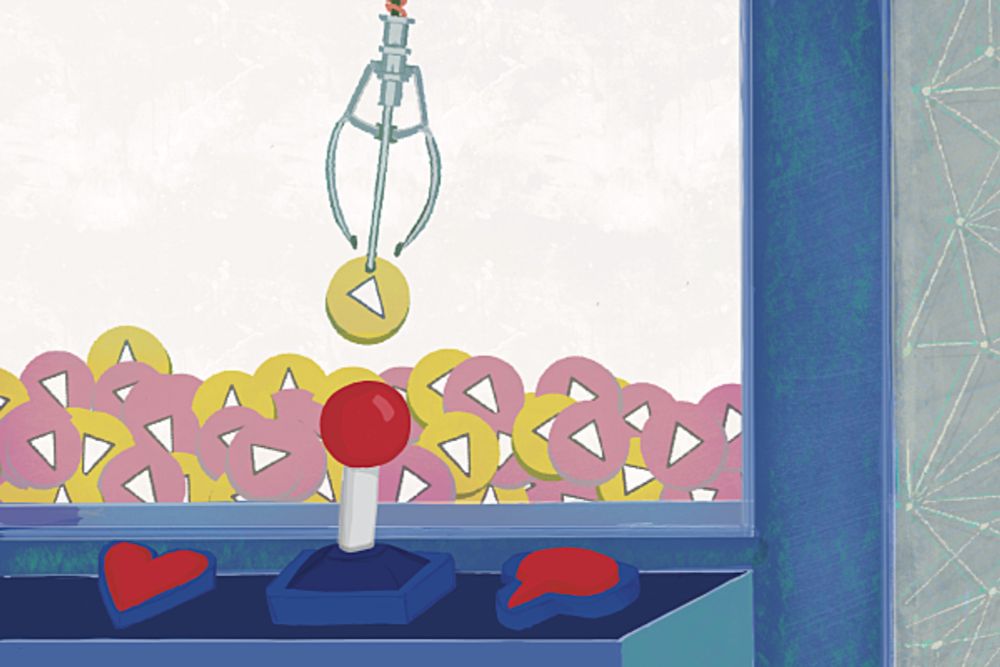

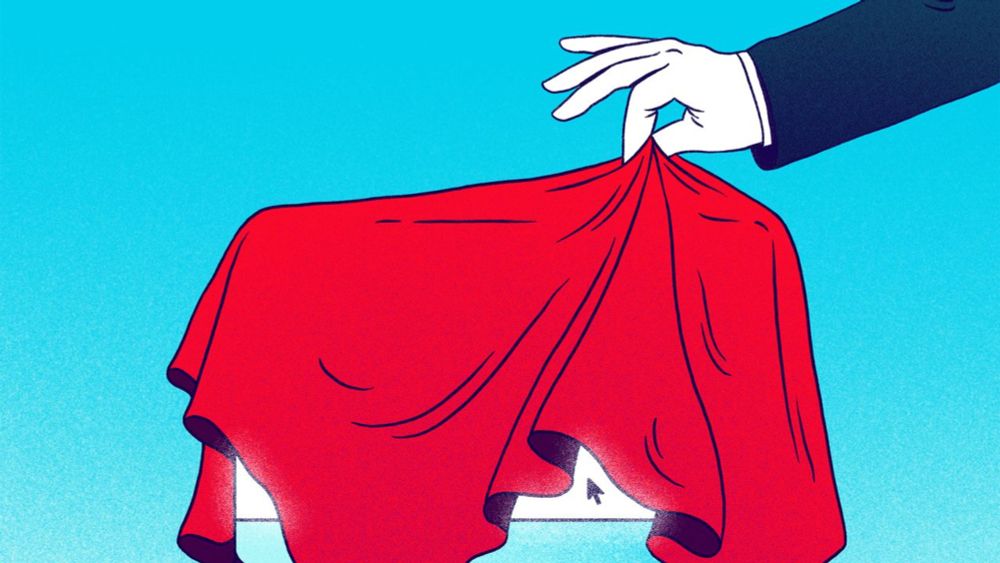

1. We (@jbakcoleman.bsky.social, @cailinmeister.bsky.social, @jevinwest.bsky.social, and I) have a new preprint up on the arXiv.

There we explore how social media companies and other online information technology firms are able to manipulate scientific research about the effects of their products.

24.10.2025 00:47 — 👍 759 🔁 356 💬 16 📌 21

AI as Normal Technology

A new paper that we will expand into our next book

The "normal" framing is so key — @randomwalker.bsky.social gave us all such a useful way of articulating a valuable idea in this discussion. www.normaltech.ai/p/ai-as-norm...

17.10.2025 05:00 — 👍 200 🔁 25 💬 5 📌 2

Invitation to Apply: Princeton AI Policy Precepts in Washington, DC

📢📢 Call for federal employees for the AI Precepts in Washington, DC. Learn from experts Arvind Narayanan (@randomwalker.bsky.social), Mihir Kshirsagar, Peter Henderson (@peterhenderson.bsky.social), & Sayash Kapoor (@sayash.bsky.social). Deadline to apply: Fri, Oct. 3

mailchi.mp/princeton.ed...

26.09.2025 15:42 — 👍 1 🔁 1 💬 1 📌 0

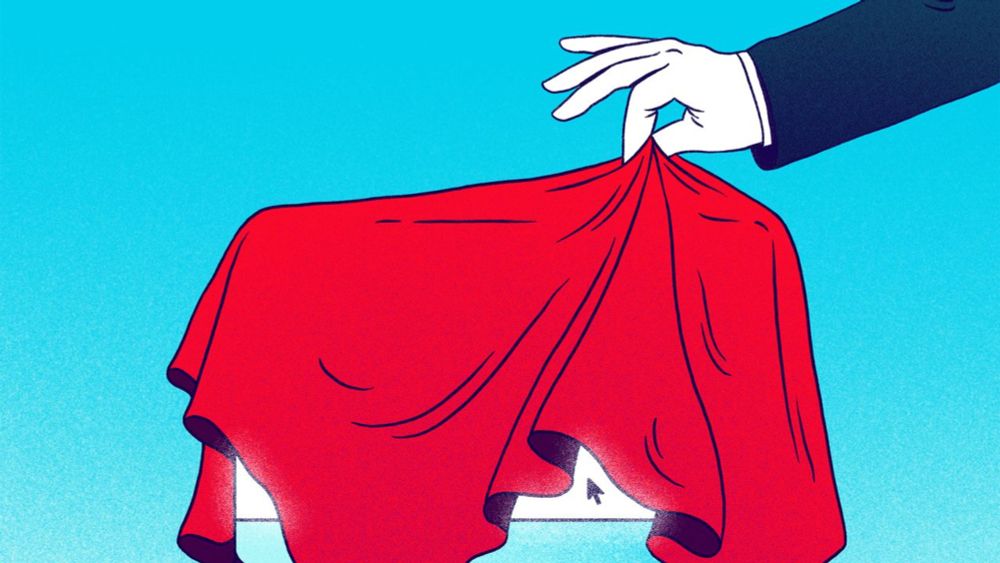

Paperback cover of AI Snake Oil: What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference by Arvind Narayanan and Sayash Kapoor

From two of TIME’s 100 Most Influential People in AI, what you need to know about #AI—and how to defend yourself against bogus AI claims and products.

AI Snake Oil by @randomwalker.bsky.social and @sayash.bsky.social is now available in #paperback: press.princeton.edu/books/paperb...

23.09.2025 15:07 — 👍 10 🔁 3 💬 1 📌 0

Our #podcast series on Harry Frankfurt’s seminal work, On Bullshit, continues with Arvind Narayanan, who explores the subject of bullshit in #AI.

press.princeton.edu/ideas/the-tr...

@randomwalker.bsky.social @newbooksnetwork.bsky.social @calebzakarin.bsky.social

08.09.2025 23:09 — 👍 13 🔁 4 💬 0 📌 0

📣 Prof Arvind Narayanan (@randomwalker.bsky.social) is hiring a Princeton University undergrad as a Video Editor & Production Assistant this semester to help with his brand-new YouTube channel @ArvindOnAI

Getting started right away, so feel free to comment + share with students! Link to apply 👇

03.09.2025 15:11 — 👍 1 🔁 3 💬 1 📌 0

Podcast Episode: Separating AI Hope from AI Hype

If you believe the hype, artificial intelligence will soon take all our jobs, or solve all our problems, or destroy all boundaries between reality and lies, or help us live forever, or take over the w...

Even superintelligent AI cannot simply replace humans for most of what we do, nor can it perfect or ruin our world unless we let it, AI Snake Oil’s @randomwalker.bsky.social tells EFF’s Cindy Cohn and @thejasonkelley.com on the new “How to Fix the Internet.”

19.08.2025 17:17 — 👍 31 🔁 14 💬 1 📌 0

Great @eff.org podcast with @randomwalker.bsky.social, touching on his AI as Normal Technology paper w/ @sayash.bsky.social for our @knightcolumbia.org AI & Democratic Freedoms project. Short 🧵 of a few other papers related to this podcast discussion:

www.eff.org/deeplinks/20...

15.08.2025 17:30 — 👍 17 🔁 7 💬 1 📌 0

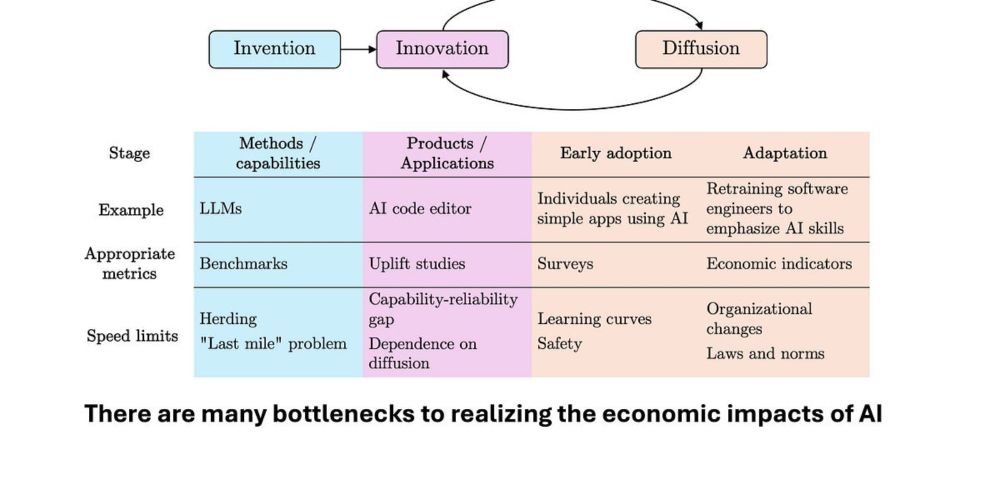

Advancing science- and evidence-based AI policy

Policy must be informed by, but also facilitate the generation of, scientific evidence

What kind of AI governance do we need? Our new piece in @science.org answers this: we need policy grounded in evidence and built to generate more of it. Evidence-based policymaking is not a slogan—it’s a design challenge for democratic governance in the age of AI www.science.org/doi/10.1126/... 🧵

31.07.2025 23:27 — 👍 129 🔁 55 💬 7 📌 3

Note that the data collection ended right before ChatGPT was released, so my guess is that the percentages are no longer small.

18.07.2025 01:00 — 👍 3 🔁 0 💬 0 📌 0

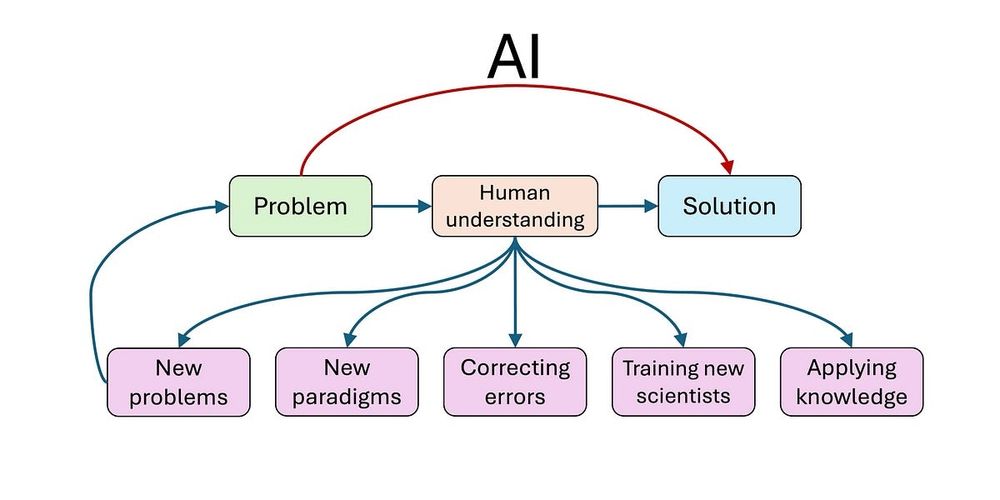

Could AI slow science?

Confronting the production-progress paradox

Fabulous post by @randomwalker.bsky.social & Sayash raising the same concern many of us have about whether we're on the right track with how we're using AI for science. Everyone should read it, take a deep breath & think through the implications.

www.aisnakeoil.com/p/could-ai-s...

17.07.2025 16:05 — 👍 158 🔁 69 💬 7 📌 11

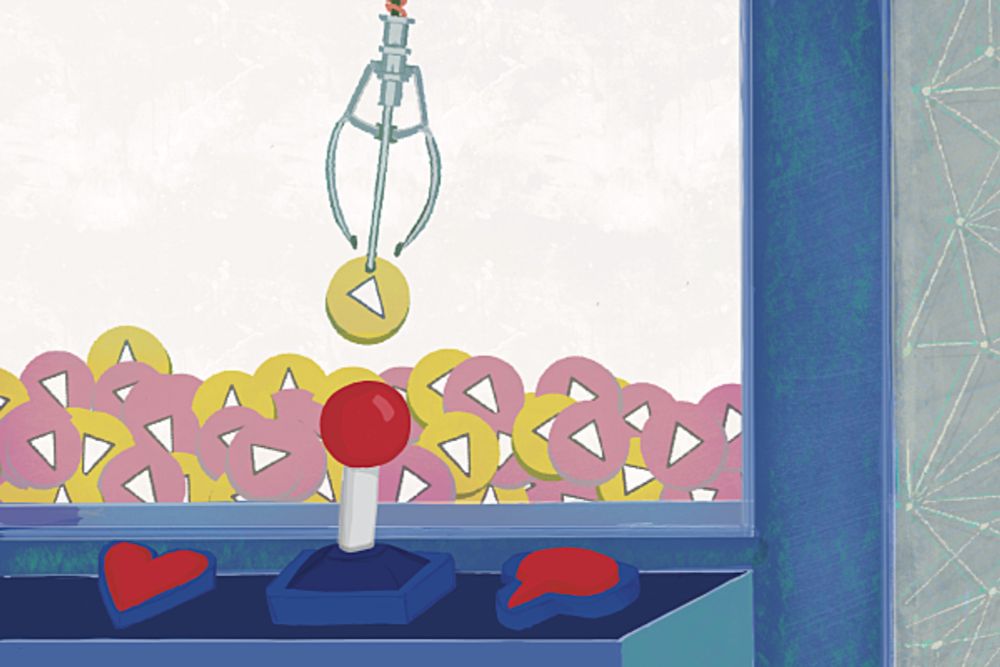

Understanding Social Media Recommendation Algorithms

I’m reading a very well-written 2023 paper on social media recommender systems from @randomwalker.bsky.social. I had completely forgotten that in the 2000s, “neither Facebook nor Twitter had the ability to reshare or retweet posts in your feed.” What a huge shift!

knightcolumbia.org/content/unde...

10.07.2025 15:51 — 👍 2 🔁 3 💬 0 📌 0

We’re hiring at Princeton on AI and society, working with Arvind Narayanan or me depending on fit.

I think current AI developments are all a huge deal but am very unexcited by the current state of the AGI and/or AI safety discourse.

Please share as you see fit.

puwebp.princeton.edu/AcadHire/app...

20.06.2025 12:36 — 👍 83 🔁 51 💬 2 📌 1

After consideration, I will post occasionally, but heavily censor what I share compared to other sites.

I tried making the transition, but talking about AI here is just really fraught in ways that are tough to mitigate & make it hard to have good discussions (the point of social!). Maybe it changes.

26.05.2025 04:25 — 👍 423 🔁 25 💬 75 📌 33

Two Paths for A.I.

The technology is complicated, but our choices are simple: we can remain passive, or assert control.

For @newyorker.com, Joshua Rothman spoke with @randomwalker.bsky.social and @sayash.bsky.social, authors of AI Snake Oil and a recently published paper “AI as Normal Technology”, which argues that practical obstacles will slow AI’s uses and potential: www.newyorker.com/culture/open...

28.05.2025 18:06 — 👍 17 🔁 3 💬 1 📌 3

"A hypothesis on the accelerating decline of reading:

* Broadly speaking, people read for pleasure/entertainment and for learning/obtaining information.

* Reading for pleasure has been declining for a while and is being replaced by videos (very sharply among young people). This trend will surely continue.

* Reading for obtaining information is getting intermediated by chatbots. We are in the very early stages of this shift, so I think people underappreciate the magnitude of what's coming. It's not just that AI is replacing traditional web search. Even when it comes to reading news articles, business documents, or scientific papers, the vision that tech companies are pushing on us is AI summarization + synthesis + Q&A.

* We don't have to accept this, but I predict that most people will. It's a tradeoff between speed/convenience and accuracy/depth of understanding — the same tradeoff that was once offered to us when it became possible to search the web to look up a quick fact as opposed to reading about the topic in depth in an encyclopedia.

* Just as most people in most cases prefer a shallow web search over deeper reading, most people in most cases will prefer AI-intermediated access to knowledge. Traditional reading won't disappear, but people will do it vastly less often, except in hobbyist reading communities and professions where traditional reading is needed.

* The decline of reading-for-pleasure (due to video) and reading-for-information (due to AI) will accelerate each other, as reading text without an intermediary will come to be seen as a chore.

* Personally, I find this sad. But while it's tempting to moralize all this, I think that's unproductive. Yelling at individuals to resist new media has been done for centuries and has never worked.

* Even if people individually rationally choose these tradeoffs, I think we collectively lose something; critical reading skills are arguably essential for a democracy. We need to figure out what to do about that."

Clear, depressing set of observations from @randomwalker.bsky.social: "The decline of reading-for-pleasure (due to video) and reading-for-information (due to AI) will accelerate each other, as reading text without an intermediary will come to be seen as a chore."

22.05.2025 14:31 — 👍 333 🔁 109 💬 14 📌 18

Moving towards informative and actionable social media research

Social media is nearly ubiquitous in modern life, and concerns have been raised about its putative societal impacts, ranging from undermining mental health and exacerbating polarization to fomenting v...

New preprint with @jbakcoleman.bsky.social @lewan.bsky.social @randomwalker.bsky.social @orbenamy.bsky.social @lfoswaldo.bsky.social where we argue for a complex-system perspective to understand the causal effects of social media on society and for a triangulation of methods

arxiv.org/abs/2505.09254

15.05.2025 06:31 — 👍 76 🔁 28 💬 2 📌 3

I'm excited that I can finally share what I've been working on for the past 9 months:

The United Nations 2025 Human Development Report: "A matter of choice: People and possibilities in the age of AI" 🧵

hdr.undp.org/content/huma...

06.05.2025 09:03 — 👍 109 🔁 28 💬 6 📌 5

AGI is not a milestone

There is no capability threshold that will lead to sudden impacts

“AGI is not a milestone because it is not actionable. A company declaring it has achieved, or is about to achieve, AGI has no implications for how businesses should plan, what safety interventions we need, or how policymakers should react.”

@randomwalker.bsky.social

open.substack.com/pub/aisnakeo...

01.05.2025 11:59 — 👍 6 🔁 1 💬 1 📌 0

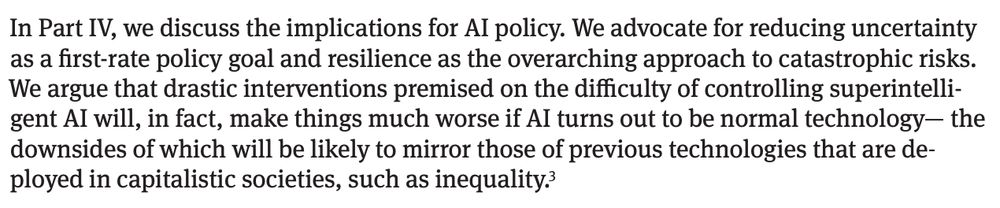

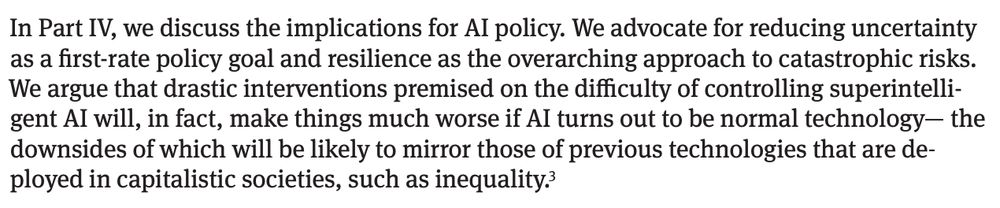

Okay, just started @randomwalker.bsky.social and @sayash.bsky.social's new essay and this is 🔥🔥🔥.

"Resilience as the overarching approach to catastrophic risk" -- yes thank you exactly this.

kfai-documents.s3.amazonaws.com/documents/c3...

24.04.2025 20:41 — 👍 13 🔁 2 💬 1 📌 0

text says "ML Reproducibility Challenge Princeton University, New Jersey, USA, August 21 2025"

We are hosting @reproml.org 2025 on Aug. 21. There will be invited talks, oral presentations, and poster sessions. Keynote speakers include @randomwalker.bsky.social, @soumithchintala.bsky.social, @jfrankle.com, @jessedodge.bsky.social, @stellaathena.bsky.social

Register now: bit.ly/4cP8vIq

24.04.2025 18:57 — 👍 1 🔁 1 💬 0 📌 0

In this clip from our event last week, @randomwalker.bsky.social describes how we can map out the landscape of AI along two dimensions: how well the AI tool works, and how harmful (or benign) it is.

Watch a full recording of the event: youtu.be/C3TqcUEFR58

24.04.2025 15:21 — 👍 12 🔁 4 💬 1 📌 1

IMO, the most important piece on AI of the last 6 months, and I recommend it to everyone. A genuinely careful consideration of the technology and its intersections with culture and labor from @randomwalker.bsky.social and @sayash.bsky.social, authors of AI Snake Oil: substack.com/home/post/p-...

19.04.2025 12:37 — 👍 102 🔁 26 💬 4 📌 3

AI as Normal Technology

Truly thoughtful and essential analysis of the AI field from @randomwalker.bsky.social and @sayash.bsky.social. States what many have felt but haven't articulated. Pairs well with Shazeda Ahmed's "epistemic culture of AI safety" and others' work on risk and antitrust.

knightcolumbia.org/content/ai-a...

17.04.2025 16:25 — 👍 6 🔁 1 💬 1 📌 2

AI as Normal Technology

In a new essay from our "Artificial Intelligence and Democratic Freedoms" series, @randomwalker.bsky.social & @sayash.bsky.social make the case for thinking of #AI as normal technology, instead of superintelligence. Read here: knightcolumbia.org/content/ai-a...

15.04.2025 14:34 — 👍 38 🔁 17 💬 1 📌 6

epistemology of science and artificial intelligence // asst prof purdue university // argonne national lab // phd, university of chicago

www.eamonduede.com

Director, Princeton Language and Intelligence. Professor of CS.

I study algorithms/learning/data applied to democracy/markets/society. Asst. professor at Cornell Tech. https://gargnikhil.com/. Helping build a personalized Bluesky research feed: https://bsky.app/profile/paper-feed.bsky.social/feed/preprintdigest

Lawyer, coder, baker.

Govt (Deputy US CTO for Obama and Biden), non-profit (Trust & Safety Professional Assn & Foundation, Data & Society, Public.resource), startup person (Google, Twitter).

Curious tinkerer.

@amac on the other thing.

Now in London.

Author: Recoding America, Founder: Code for America, Co-founder: USDS and USDR.

The Center for Information Technology Policy (CITP) is a nexus of expertise in technology, engineering, public policy, & the social sciences. Our researchers work to better understand and improve the relationship between technology & society. Princeton U.

Safe and robust AI/ML, computational sustainability. Former President AAAI and IMLS. Distinguished Professor Emeritus, Oregon State University. https://web.engr.oregonstate.edu/~tgd/

Professor of Psychology & Human Values at Princeton | Cognitive scientist curious about technology, narratives, & epistemic (in)justice | They/She 🏳️‍🌈

www.crockettlab.org

It is said that there may be seeming disorder and yet no real disorder at all

I lead Cohere For AI. Formerly Research, Google Brain. ML Efficiency, LLMs, @trustworthy_ml.

Professor and Head of Machine Learning Department at Carnegie Mellon. Board member OpenAI. Chief Technical Advisor Gray Swan AI. Chief Expert Bosch Research.

Research Scientist @DeepMind | Previously @OSFellows & @hrdag. RT != endorsements. Opinions Mine. Pronouns: he/him

A LLN - large language Nathan - (RL, RLHF, society, robotics), athlete, yogi, chef

Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

Professor at Wharton, studying AI and its implications for education, entrepreneurship, and work. Author of Co-Intelligence.

Book: https://a.co/d/bC2kSj1

Substack: https://www.oneusefulthing.org/

Web: https://mgmt.wharton.upenn.edu/profile/emollick

Political philosopher of AI. Assistant Prof @ UW-Madison. Previous: Harvard Tech & Human Rights Fellow, Princeton postdoc, Oxford DPhil 🤖 In Berlin this academic year 📍