Check out our website for more data on AI company finances!

epoch.ai/data/ai-com...

OpenAI’s recent funding round nearly triples the amount they have raised so far. The Information has reported that OpenAI projects a $157B cash burn through 2028. This round, combined with $40B cash on hand, essentially matches that projection.

27.02.2026 21:37 — 👍 18 🔁 1 💬 2 📌 1

These roles are fully remote and we can hire in many countries, although we prefer significant overlap with North American timezones. Applications are rolling, so apply soon! epoch.ai/careers

27.02.2026 16:57 — 👍 3 🔁 1 💬 0 📌 0

We’re looking for candidates for both roles who are up-to-date with AI trends, adept at managing multiple detailed workstreams, and excited about contributing to our mission: improving our understanding of the future of AI.

27.02.2026 16:57 — 👍 3 🔁 1 💬 1 📌 0

Our team is expanding! We’re hiring for a Researcher to work with our Senior Researcher, JS Denain, on pitching and developing new projects, and a Special Projects Associate to provide operational support for making great new benchmarks.

27.02.2026 16:57 — 👍 7 🔁 1 💬 1 📌 0

For more details on this analysis, see our website: epoch.ai/data-insigh...

26.02.2026 19:19 — 👍 0 🔁 0 💬 0 📌 0

Each company defines "capex" differently on earnings calls. Some include finance leases; some don't. So to build a consistent measure, we went directly to companies' financial filings and identified cash spending and new finance leases using standardized regulatory tags.

26.02.2026 19:19 — 👍 0 🔁 0 💬 1 📌 0

Company statements and analyst projections also anticipate continued rapid growth in capital expenditures in 2026, though slower than this trend extrapolation.

26.02.2026 19:19 — 👍 0 🔁 0 💬 1 📌 0

Driven by investments in AI, hyperscaler capital expenditures have grown 70% per year since the release of GPT-4, nearing half a trillion dollars in total during 2025.

If this trend continues, Alphabet, Amazon, Meta, Microsoft and Oracle will spend $770 billion on capex in 2026.
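The extrapolation above is simple compounding. Here is a minimal sketch, assuming a constant 70% annual growth rate. The 2025 base is backed out from the $770 billion projection for illustration and is not a figure taken from the filings:

```python
GROWTH_RATE = 0.70          # 70% per year, from the post
PROJECTED_2026_BN = 770.0   # $ billions, from the post


def extrapolate(base_bn: float, growth_rate: float, years: int = 1) -> float:
    """Compound base_bn forward by growth_rate for the given number of years."""
    return base_bn * (1.0 + growth_rate) ** years


# Back out the 2025 base implied by the 2026 projection (illustrative only).
implied_2025_bn = PROJECTED_2026_BN / (1.0 + GROWTH_RATE)

print(f"Implied 2025 capex: ${implied_2025_bn:.0f}B")
print(f"Extrapolated 2026 capex: ${extrapolate(implied_2025_bn, GROWTH_RATE):.0f}B")
```

The implied base lands a little under half a trillion dollars, consistent with the "nearing half a trillion" description for 2025.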

This week's Gradient Update was written by Anson Ho.

All Gradient Updates are informal and opinionated analyses that represent the views of individual authors, not Epoch AI as a whole.

You can read the full post here: epoch.ai/gradient-up...

The implications could be bigger still. In scenarios like AI 2027 and Situational Awareness, automating AI R&D hugely accelerates software progress and hence capability growth.

The big open question is whether this acceleration can actually happen, which we discuss here:

bsky.app/profile/epo...

This has huge implications. For instance, it explains how DeepSeek caught up with o1 with less compute in a matter of months. The specific rate of AI software progress might even shift your AGI timelines by over a decade!

www.astralcodexten.com/p/what-happ...

In fact, AI software progress seems to be extremely fast. Each year, you need several times less training compute to reach the same level of capabilities:

26.02.2026 17:01 — 👍 1 🔁 0 💬 1 📌 0

This improvement also lets researchers push AI capabilities with the same training compute. So if software progress is fast enough, we could reach much greater capabilities without scaling training.

That’s why GPT-5 outperformed GPT-4.5 with less training compute.

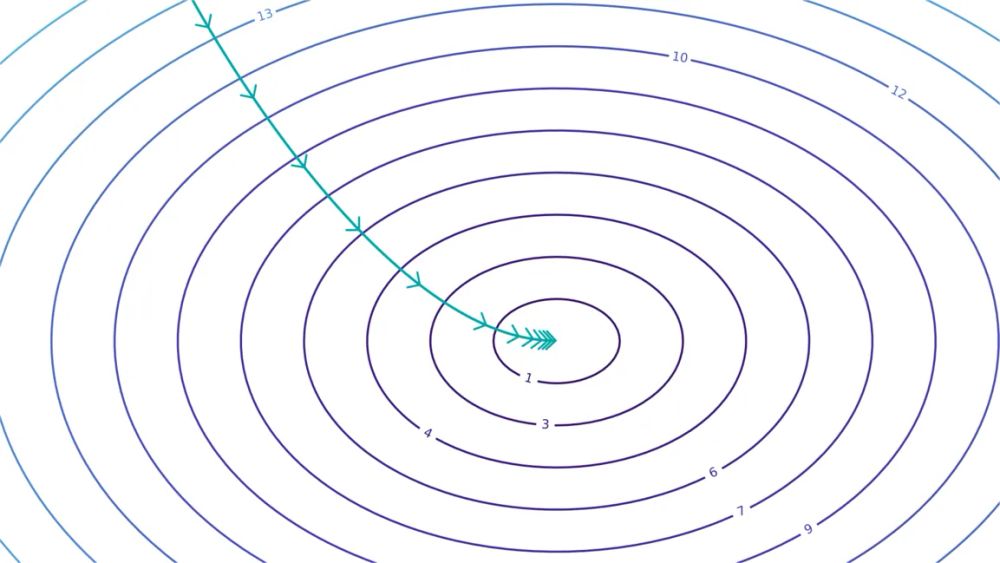

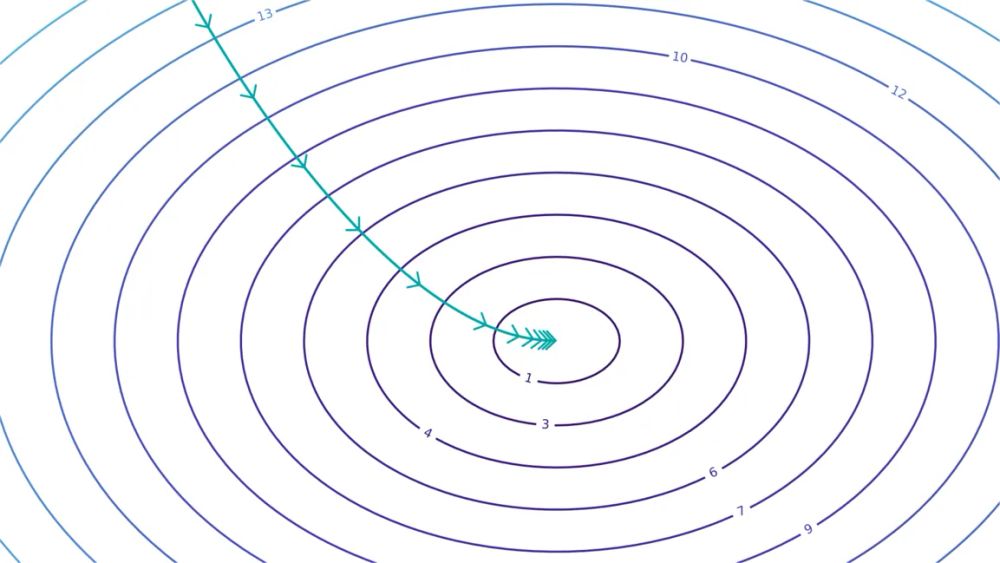

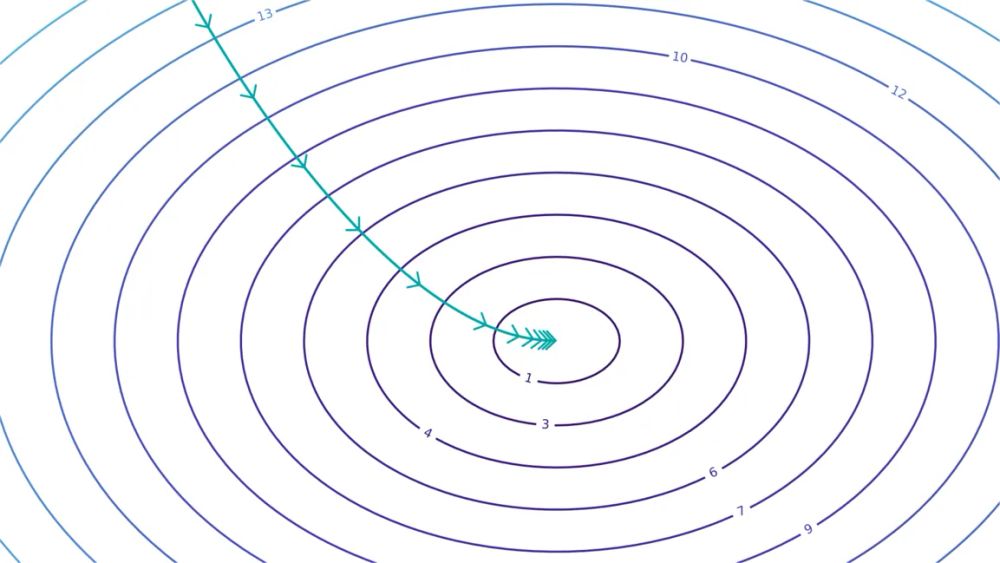

One way is to say that better AI software reduces the compute needed to reach the same capability.

For example, imagine a curve relating a measure of capabilities to log(training compute). After making an algorithmic innovation, the curve shifts to the left, saving compute:
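To make the leftward shift concrete, here is a minimal sketch. The linear-in-log-compute curve, its coefficients, and the `efficiency` multiplier are hypothetical, chosen only for illustration and not fitted to any real models:

```python
import math


def capability(compute_flop: float, efficiency: float = 1.0,
               intercept: float = -100.0, slope: float = 5.0) -> float:
    """Toy capability score, linear in log10 of effective compute.

    efficiency > 1 models an algorithmic innovation that makes
    each FLOP of training compute count for more.
    """
    return intercept + slope * math.log10(compute_flop * efficiency)


def compute_needed(target: float, efficiency: float = 1.0,
                   intercept: float = -100.0, slope: float = 5.0) -> float:
    """Invert the toy curve: physical compute needed to reach a target score."""
    return 10 ** ((target - intercept) / slope) / efficiency


baseline_compute = 1e25                      # hypothetical training run, in FLOP
target = capability(baseline_compute)        # score reached before the innovation
after = compute_needed(target, efficiency=3.0)

print(f"Compute saving at the same capability: {baseline_compute / after:.1f}x")
```

In this toy model a 3× efficiency gain moves the whole curve left by log10(3) on the compute axis, so every capability level becomes reachable with a third of the compute.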

There are many ways to improve algorithms and data. For example, you could change model architectures, build better RL environments, and improve training recipes.

But how do you concretize what makes some AI software better than others?

Developing more powerful AI isn’t just about scaling compute. It’s also about improving algorithms and data quality, which let you build better models with the same compute.

We call this “AI software progress” — here’s everything you need to know about it: 🧵

But what’s driving these massive efficiency improvements? We discuss this in another thread:

bsky.app/profile/epo...

Despite the myriad uncertainties, Anson argues that software progress probably still amounts to a several-fold improvement per year. That’s because independent approaches yield this result, it’s backed up by statements from frontier labs, and it’s consistent with individual case studies.

x.com/EpochAIRese...

Moreover, existing estimates don’t fully capture software progress in post-training, and rely on metrics which could be heavily overoptimized for. For instance, if capabilities are measured using heavily “bench maxxed” benchmarks, that could overstate progress.

26.02.2026 16:32 — 👍 1 🔁 0 💬 1 📌 0

2. Estimates are very sensitive to statistical assumptions. For example, they often falsely assume that “software quality” is the same across all labs at any time.

Parker Whitfill argues this could bias estimates to be much faster or slower than the true rate, e.g. 9× slower! arxiv.org/abs/2508.11033

The numbers are very uncertain for two reasons.

1. They’re based on limited data, because we lack long-run time series with both model performance and training compute, which we need to derive estimates of software progress.

Almost all existing estimates suggest very fast progress, on the order of several times per year, though the uncertainty intervals are really wide.

Still, it’s very possible that training efficiency improves much faster than 3× per year. Even 10× per year seems possible!

In 2024, Epoch AI estimated the rate of software progress in language models. We found that training compute efficiency was improving at ~3× per year.

But this estimate was for pre-training, and is now outdated — so Epoch AI researcher Anson Ho took a new look at the numbers. 🧵
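To put the 3× and 10× per year rates from this thread in perspective, here is a minimal sketch that converts an annual efficiency multiplier into a cumulative compute reduction and an equivalent halving time. It assumes the rate stays constant over time, which is a simplification and not a claim from the analysis:

```python
import math


def cumulative_reduction(rate_per_year: float, years: float) -> float:
    """Factor by which required training compute falls after `years`."""
    return rate_per_year ** years


def halving_time_months(rate_per_year: float) -> float:
    """Months for the compute needed at a fixed capability level to halve."""
    return 12.0 * math.log(2.0) / math.log(rate_per_year)


for rate in (3.0, 10.0):
    print(
        f"{rate:.0f}x per year: "
        f"{cumulative_reduction(rate, 3):.0f}x less compute after 3 years, "
        f"halving every {halving_time_months(rate):.1f} months"
    )
```

The gap between the two rates compounds quickly: over three years, 3× per year gives a 27× reduction, while 10× per year gives 1000×.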

Some prior analyses of the software intelligence explosion also use overly conservative estimates of software progress. Adjusting for this would counteract the force of the training compute bottleneck argument.

26.02.2026 16:24 — 👍 1 🔁 0 💬 1 📌 0

But this isn’t a slam-dunk argument, because software progress could continue through better data, and much smarter AIs could in principle find innovations that tremendously increase capabilities at the same training compute.

26.02.2026 16:24 — 👍 1 🔁 0 💬 1 📌 0

This means key analyses in the literature overrate research effort's role in software progress. Even if AIs automate AI R&D, you're still bottlenecked on scaling training compute, which makes a software intelligence explosion less likely.

26.02.2026 16:24 — 👍 1 🔁 0 💬 1 📌 0

This could be a big deal for the debate about the software intelligence explosion. Previous models of it assumed that compute efficiency gains came from a combination of research effort and experiment compute, but they neglected that gains could also come from scaling training compute.

26.02.2026 16:24 — 👍 1 🔁 0 💬 1 📌 0