And I still haven’t learned what Skibidi means. 😡

16.10.2025 16:28 — 👍 1 🔁 0 💬 1 📌 0Joe Barrow

@jbarrow.bsky.social

NLP @ Pattern Data Prev: Adobe Research, PhD UMD

@jbarrow.bsky.social

NLP @ Pattern Data Prev: Adobe Research, PhD UMD

And I still haven’t learned what Skibidi means. 😡

16.10.2025 16:28 — 👍 1 🔁 0 💬 1 📌 0Now, some acknowledgments: this work was made possible thanks to a generous compute grant from Lambda!

And I've got a hosted version of the model that I'll be sharing in a couple days hosted on @modal-labs.bsky.social, which makes it basically free for me to host and scale

As part of the paper, I'm working on releasing the dataset and FFDNet models on HuggingFace.

Those will be out in the coming days, you can follow along here: github.com/jbarrow/comm...

🤗Paper: huggingface.co/papers/2509....

arXiv: arxiv.org/abs/2509.16506

Now, just because we filtered for the cleanest forms doesn't mean we got _perfect_ forms. There are still a lot of inconsistencies in how people prepare forms! In future work I'll be looking at mitigating data quality issues like these.

24.09.2025 17:51 — 👍 0 🔁 0 💬 1 📌 0

(Note, this doesn't _just_ apply to Acrobat, it's also better than Apple Preview -- neither Acrobat nor Preview even make an attempt at checkboxes, and they're often fooled by any straight, horizontal line. Left: Acrobat, Right: FFDNet)

24.09.2025 17:51 — 👍 0 🔁 0 💬 1 📌 0

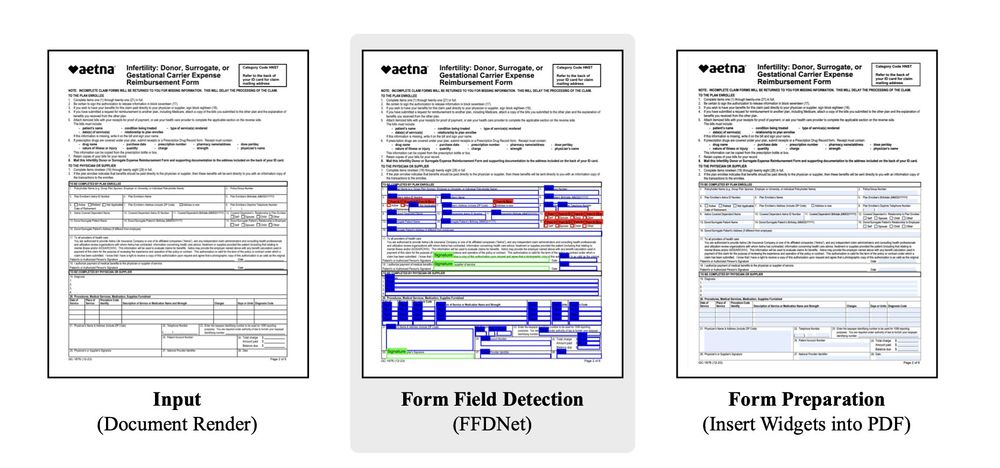

If we train object detectors to find the form fields on these pages, we get a much cleaner set of forms than if you used Acrobat to automatically prepare your form. (Left: Acrobat, Right: FFDNet).

24.09.2025 17:51 — 👍 0 🔁 0 💬 1 📌 0

Step 1 is to filter out for the cleanest forms possible. We start with 8MM PDFs from Common Crawl, and work our way down to ~60k of the cleanest forms we can find. The results is a ~500k page dataset, called CommonForms.

24.09.2025 17:51 — 👍 0 🔁 0 💬 1 📌 0

Paper thread of some work I’m *incredibly* proud of, my first single author paper!

Converting a PDF to a fillable form is a hard problem, and a lot of solutions don’t work very well! In CommonForms, I show that you can train models that outperform Adobe Acrobat for <$500! 🧵

Yeah I wonder if that statistic is flipped between the cities (though operated by the same provider — Lyft — I assume?)

No way that 99 out of every 100 riders in Boston have visited more than 27 stations?

Pretty sure you want that number to be lower. :p (my stats for DC ridership)

31.05.2025 05:04 — 👍 2 🔁 0 💬 1 📌 0

In which I argue that LLM-generated bounding boxes are impressive, but not that useful (yet): notes.penpusher.app/Misc/Horsesh...

23.04.2025 17:33 — 👍 2 🔁 0 💬 0 📌 0Would absolutely love that!

11.03.2025 12:28 — 👍 2 🔁 0 💬 0 📌 0"AI TOPS our stock price"

- Nvidia, today

Ah, yes, that ol' familiar unit of measure "AI TOPS"

07.01.2025 20:13 — 👍 4 🔁 0 💬 1 📌 0Agree, my ideal would be if you could type into an old, cheap, refurb kindle personally.

Here’s a video of a person typing into the Palma: www.reddit.com/r/Onyx_Boox/...

My experience (tablet) is that it’s maybe 10s from pickup to writing — wake up (3s), navigate to apps (2s), open app (3-5s)?

I’ve got one of the older, larger eInk tablets and use it for reading books/papers and taking notes. Battery after several years lasts about a week of average use, longer if I keep WiFi off.

21.12.2024 21:29 — 👍 1 🔁 0 💬 1 📌 0Not necessarily hitting the price point but there are eInk mini tablets (e.g. Boox Palma at around $200) that have Android, no sim (so no phone distractions), and long battery life (thanks to the eInk and being generally underpowered). They accept keyboards, too.

21.12.2024 21:27 — 👍 2 🔁 0 💬 1 📌 0

I put together a little guide on getting started with Google Gemini -- how to make multimodal calls, get structured outputs, and image bounding boxes to build an object detector.

notes.penpusher.app/Misc/Google+...

Drake meme template. No to: clear, concise prose Yes to: negative vspace

18.12.2024 10:37 — 👍 3 🔁 1 💬 0 📌 0

Drake meme template. No to: clear, concise prose Yes to: negative vspace

18.12.2024 10:37 — 👍 3 🔁 1 💬 0 📌 0Holy moly that created an extra half page of space!

18.12.2024 10:09 — 👍 2 🔁 0 💬 1 📌 0

A picture of a teapot to the right of a teacup, both in a flat-bottomed basket. The teacup has eta and flowers in it. There are 2 blue bounding boxes on the image, one labeled "Teapot" and one labeled "Teacup" that are over the teapot and teacup respectively.

Gemini 2.0 Flash is pretty good at localization in images. for an LMM (much better than GPT-4o in my experiments).

18.12.2024 09:26 — 👍 1 🔁 0 💬 0 📌 0ML history question: is there an earlier reference to pixel-only in-context (i.e. no fine-tuning) DocVQA performance than the GPT-4 announcement from OpenAI?

09.12.2024 09:45 — 👍 0 🔁 0 💬 0 📌 0Aged white tea, the kind that comes in a compressed disk or ball. My favorite kind of tea, imo they taste naturally quite sweet. Yunnan Sourcing has a bunch!

25.11.2024 05:58 — 👍 1 🔁 0 💬 1 📌 0