index the codebase first. Then ask o1 in "codebase chat" the same questions you would like to ask the author/owner of the codebase.

Mostly useful when digging into unknown/new codebases and trying to understand them. Or asking about possible bugs :)

26.01.2025 21:25 — 👍 4 🔁 0 💬 0 📌 0

yes, I noticed that over Christmas break and ever since, it's just... a lot more boring here.

My first reason to open social media is to be entertained. Second reason is to entertain. A distant third, maybe, is to learn something new.

Not much of any of these here. I know I know, be the change and all that.

26.01.2025 21:23 — 👍 0 🔁 0 💬 1 📌 0

I noticed that I'm not using bsky much anymore. Not sure why, vibes.

Anyways, someone noticing that DeepSeek refuses to answer *anything* about Xi Jinping, even the question whether he exists at all, triggered me writing a short snippet on safety fine-tuning: lb.eyer.be/s/safety-sft...

26.01.2025 21:21 — 👍 87 🔁 7 💬 6 📌 0

First candidate for banger of the year appeared, only 2 days in:

02.01.2025 22:26 — 👍 26 🔁 0 💬 0 📌 1

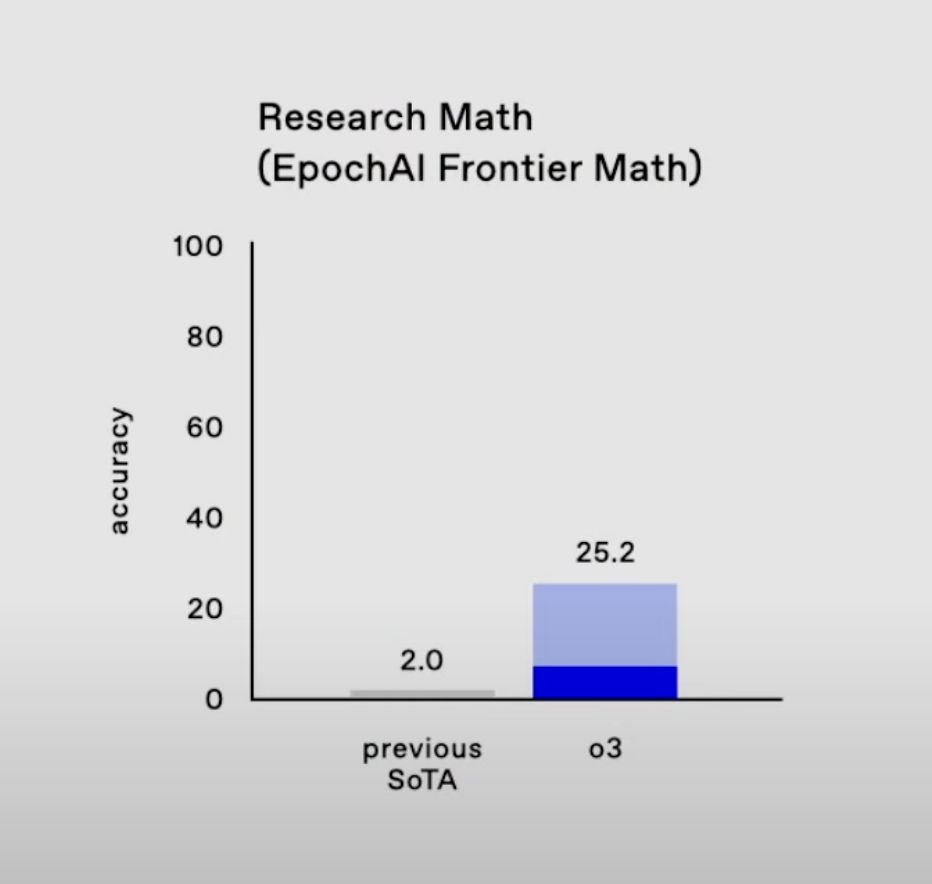

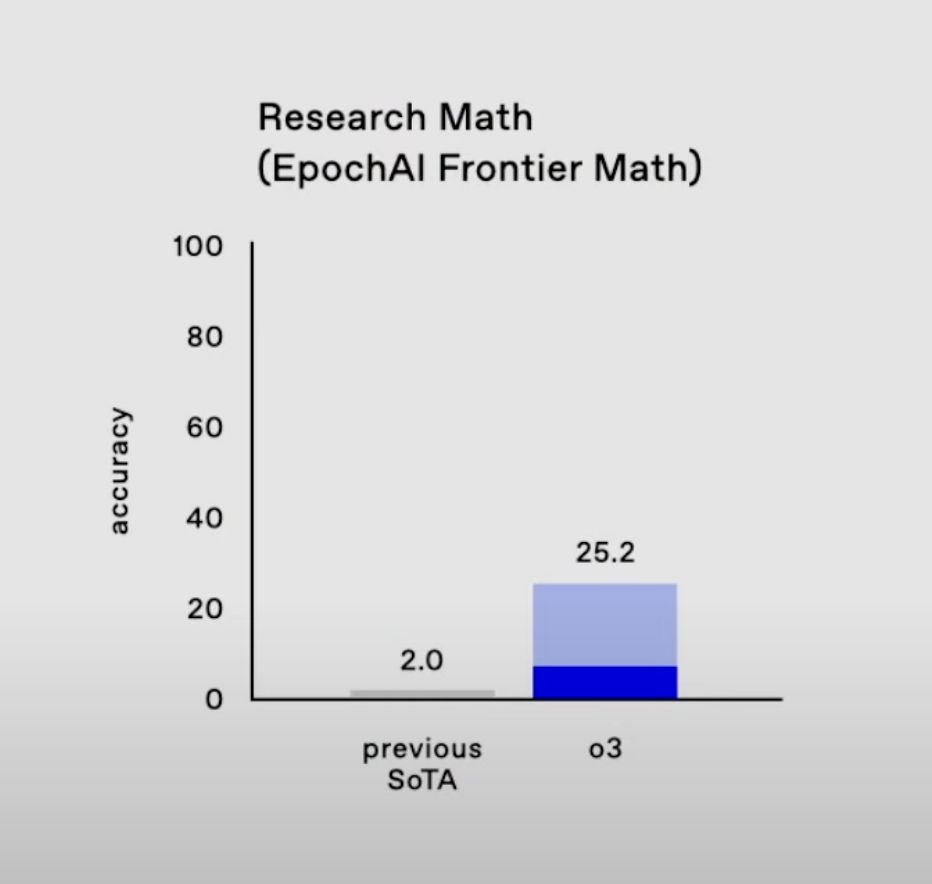

OpenAI skips o2, previews o3 scores, and they're truly crazy. Huge progress on the few benchmarks we think are truly hard today. Including ARC AGI.

Rip to people who say any of "progress is done," "scale is done," or "llms cant reason"

2024 was awesome. I love my job.

20.12.2024 18:08 — 👍 113 🔁 14 💬 11 📌 5

It reeeeally depends on what loss1 and loss2 are, both regarding what’s standard and what’s wasted.

I honestly think you are confused, the three codes in your three different posts mean three different things. I don’t mean it in a negative way, but clearing it up would take more time than I want :/

19.12.2024 20:16 — 👍 1 🔁 0 💬 2 📌 0

Yeah it seems either he had a mistake in the OP, or the subject of the discussion has drifted :)

19.12.2024 20:11 — 👍 0 🔁 0 💬 0 📌 0

Until we get good enough AI-supported search, no, you can’t realistically expect them to find anything and everything from the past 30 years when the vocab and everything else changes.

19.12.2024 19:12 — 👍 10 🔁 0 💬 1 📌 0

Well, no, two things:

1. In the OP indeed *both* formulations waste compute, so yeah :)

2. In your 2nd post, you are not doing the same thing as in your OP! In your 2nd, you are doing good old micro batching, for which the second way is indeed the standard way.

So what you say keeps changing O.o
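For readers who don't know the term: micro batching (gradient accumulation) splits one batch into smaller pieces, runs each piece through the model once, and averages the gradients. The sketch below is only an illustration on a toy 1-D linear model. It is not the code from the thread, and every name in it (`grad_mse`, `accumulated_grad`, `micro_batch_size`) is made up for the example.

```python
# Illustrative sketch only: a 1-D linear model y = w * x with squared-error loss.
# None of these names come from the thread; they are assumptions for the example.

def grad_mse(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w, xs, ys, micro_batch_size):
    """Process the batch in equal-sized micro batches and average their gradients."""
    assert len(xs) % micro_batch_size == 0, "sketch assumes equal-sized micro batches"
    num_micro = len(xs) // micro_batch_size
    total = 0.0
    for i in range(num_micro):
        start, stop = i * micro_batch_size, (i + 1) * micro_batch_size
        # Each micro batch gets exactly one gradient computation; nothing is recomputed.
        total += grad_mse(w, xs[start:stop], ys[start:stop])
    return total / num_micro


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = 0.5

full = grad_mse(w, xs, ys)                               # one pass over the full batch
accum = accumulated_grad(w, xs, ys, micro_batch_size=2)  # two micro batches of size 2
print(full, accum)
```

With equal-sized micro batches the averaged gradient matches the full-batch gradient, which is why this pattern costs no extra compute beyond the single pass per example.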

19.12.2024 19:10 — 👍 0 🔁 0 💬 1 📌 0

I would enjoy that meeting ;)

19.12.2024 16:19 — 👍 3 🔁 0 💬 0 📌 0

Yeah it’s silly to expect the new generation to know everything the old generation did, doing so shows complete lack of empathy.

19.12.2024 16:19 — 👍 7 🔁 0 💬 1 📌 0

If the two graphs are completely disjoint, then there is no point in this. If they have some commonality (like model) then this does the common part twice.

19.12.2024 16:17 — 👍 1 🔁 0 💬 3 📌 0

I’m somewhat confident both of these are sins lol, the second one wastes a ton of compute!

19.12.2024 08:28 — 👍 6 🔁 0 💬 2 📌 0

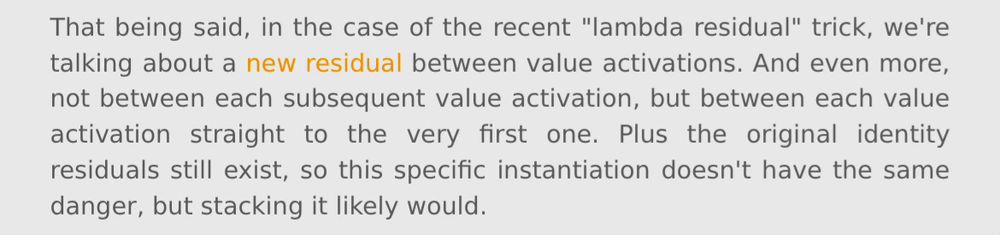

Not quite, because this one is not stacked, so I give it a better chance to scale:

19.12.2024 08:24 — 👍 1 🔁 0 💬 0 📌 0

A post by @cloneofsimo on Twitter made me write up some lore about residuals, ResNets, and Transformers. And I couldn't resist sliding in the usual cautionary tale about small/mid-scale != large-scale.

Blogpost: lb.eyer.be/s/residuals....

18.12.2024 23:14 — 👍 81 🔁 9 💬 3 📌 2

That’s what the globe was for!

14.12.2024 16:35 — 👍 2 🔁 0 💬 1 📌 0

One of the physics of llm papers studied that and found you need a certain amount of repetitions of a factoid before it’s memorized. Repetition can be either multiple epochs or just the same fact in another document. The number of needed repeats is also related to model size.

13.12.2024 16:27 — 👍 11 🔁 2 💬 2 📌 0

OK OK I’ll admit it, I’m feeding off your fomo! There can’t be enough fomo! Mmmm fomo!

12.12.2024 16:45 — 👍 2 🔁 0 💬 0 📌 0

Yeah they compress videos to shit here, and are considering making good quality videos a paying feature.

12.12.2024 16:43 — 👍 3 🔁 0 💬 1 📌 0

No talk but two posters, I’m just a middle author but will try to be there (locca and NoFilter). That being said my main occupation here will be meeting many of my new colleagues.

10.12.2024 18:15 — 👍 1 🔁 0 💬 0 📌 0

Good morning Vancouver!

Things are different here: this guy is alone, chonky, and not scared at all, I was more scared of him towards the end lol.

Also look at that … industrialization

10.12.2024 18:09 — 👍 31 🔁 0 💬 3 📌 0

lol exactly. I said cdg whenever possible.

That being said, our flight is operated by Air Canada, including a layover in Canada, and that is a lot worse.

09.12.2024 10:07 — 👍 5 🔁 0 💬 2 📌 0

Oops! Thanks

09.12.2024 10:06 — 👍 0 🔁 0 💬 0 📌 0

Good morning! On my way to NeurIPS, slightly sad to leave this beautiful place and my family for the week, but also excited to meet many new and old friends at NeurIPS!

09.12.2024 08:40 — 👍 66 🔁 0 💬 3 📌 1

This afternoon flight? I’m taking that too

09.12.2024 08:35 — 👍 2 🔁 0 💬 2 📌 0

Aye, finally I can without it being weird lol

08.12.2024 18:15 — 👍 5 🔁 0 💬 0 📌 0

08.12.2024 18:07 — 👍 3 🔁 0 💬 1 📌 0

YouTube video by Mediterranean Machine Learning (M2L) summer school

[M2L 2024] Transformers - Lucas Beyer

One of the best tutorials for understanding Transformers!

📽️ Watch here: www.youtube.com/watch?v=bMXq...

Big thanks to @giffmana.ai for this excellent content! 🙌

08.12.2024 09:58 — 👍 55 🔁 8 💬 0 📌 0

Yep, and related to this: bsky.app/profile/giff...

08.12.2024 08:46 — 👍 2 🔁 0 💬 0 📌 0

I’m confused, why not nbviewer.com, which has existed and been working for a decade?

08.12.2024 08:44 — 👍 4 🔁 0 💬 2 📌 0
