“People who can suffer are ultimately the ones who are the most successful.” Jensen Huang's (NVIDIA's CEO) advice to students: youtu.be/zqI-EWQG8ZI?...

24.01.2025 13:58

@dadashkarimi.bsky.social

Postdoc at the University of Pennsylvania, former developer at the Martinos Center at MGH/Harvard, Yale '23, medical image analysis 🧠, deep learning, connectomics (he/him/his)

I love this quote from Benjamin Franklin: ‘Either write things worth reading, or do things worth writing.’ @upenn.edu

27.12.2024 03:16

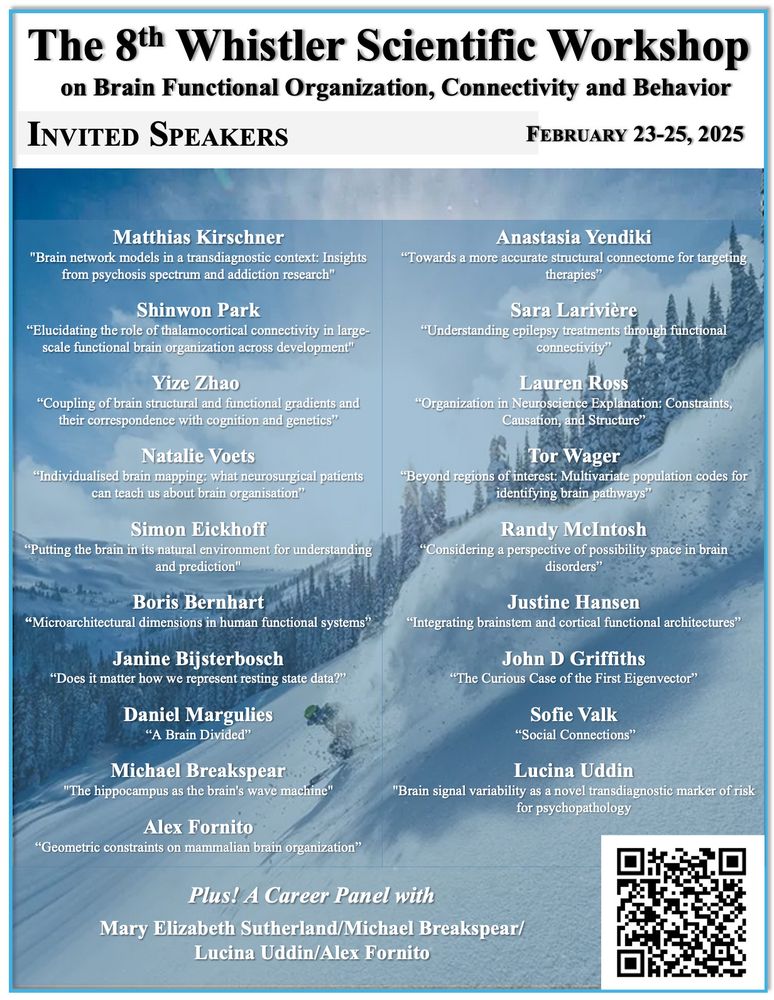

Now that those #OHBM abstracts are done, think about submitting to this connectivity workshop. A stellar lineup of speakers is set! Register (and submit abstracts) here:

medicine.yale.edu/mrrc/about/s...

The deadline is Jan 10, 2025.

7/

We tested our method on two datasets:

- HASTE images.

- EPI scans.

We showed that it achieves state-of-the-art performance, especially in younger fetuses. Our model is also contrast-agnostic: it generalizes to various modalities. You can find our preprint at arxiv.org/pdf/2410.20532

6/

Testing: Step 2 (Fine-Level)

- Model B handles mid-sized patches (96³) on the cropped volume; Model C does the same with 64³ windows.

- Majority voting across A, B, and C identifies consistent regions that likely contain the brain.

- Model D refines the final binary mask to avoid edge effects.
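The voting step can be sketched in a few lines of NumPy. This is a minimal illustration, not the preprint's code: it assumes Models A, B, and C have each already produced a binary mask of the same shape, and the function name `majority_vote` is hypothetical. Model D's final refinement is not shown.

```python
import numpy as np


def majority_vote(masks: list[np.ndarray]) -> np.ndarray:
    """Return a boolean mask that is True where more than half of the input masks are True.

    Hypothetical helper: assumes all masks share one shape (e.g. the cropped volume).
    """
    stacked = np.stack([m.astype(bool) for m in masks], axis=0)
    votes = stacked.sum(axis=0)  # number of models voting "brain" at each voxel
    return votes * 2 > len(masks)  # strict majority


if __name__ == "__main__":
    a = np.array([1, 1, 0, 0], dtype=bool)
    b = np.array([1, 0, 1, 0], dtype=bool)
    c = np.array([1, 1, 1, 0], dtype=bool)
    print(majority_vote([a, b, c]).astype(int))  # [1 1 1 0]
```

With three models, a voxel survives when at least two of them agree, which suppresses isolated false positives that only one patch scale produces.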

5/

Testing: Step 1 (Breadth-Level)

Model A scans large patches (128³) for the brain.

Model D tests tiny patches (32³) to ensure fine-grained accuracy.

The combined masks crop the image to regions of interest for progressively finer refinement.
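The thread does not say whether the masks from Models A and D are combined by union or intersection. This sketch assumes a union (logical OR) and crops the volume to the bounding box of that union, with an optional margin; `crop_to_mask` and `margin` are hypothetical names, not taken from the preprint.

```python
import numpy as np


def crop_to_mask(volume: np.ndarray, masks: list[np.ndarray], margin: int = 0):
    """Union the coarse masks and crop `volume` to their bounding box plus `margin` voxels.

    Returns the cropped volume and the slices used, so later stages can paste results back.
    """
    combined = np.logical_or.reduce([m.astype(bool) for m in masks])
    if not combined.any():
        # Nothing detected: fall back to the full volume.
        return volume, tuple(slice(0, s) for s in volume.shape)
    coords = np.argwhere(combined)
    lo = np.maximum(coords.min(axis=0) - margin, 0)
    hi = np.minimum(coords.max(axis=0) + 1 + margin, volume.shape)
    slices = tuple(slice(int(l), int(h)) for l, h in zip(lo, hi))
    return volume[slices], slices


if __name__ == "__main__":
    vol = np.arange(1000).reshape(10, 10, 10)
    mask_a = np.zeros(vol.shape, dtype=bool)
    mask_a[2:4, 3:5, 4:6] = True  # stand-in for a coarse 128³ detection
    mask_d = np.zeros(vol.shape, dtype=bool)
    mask_d[5, 5, 5] = True  # stand-in for a fine 32³ detection
    cropped, sl = crop_to_mask(vol, [mask_a, mask_d], margin=1)
    print(cropped.shape)  # (6, 5, 4)
```

Returning the slices alongside the crop keeps the mapping back to the original volume explicit, which the later fine-level stages would need.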

4/

To handle maternal tissues, which often confuse U-Nets, we train four U-Nets:

- Each is optimized for different patch sizes.

- Synthetic images include full, partial, and absent brains.

This multi-scale approach prepares us to handle complex scenarios during testing.
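One plausible reading of "full, partial, and absent brains" is that random patches cropped from a synthetic label map naturally fall into these three cases, and smaller patch sizes produce more partial and absent cases. The sketch below assumes that reading; `random_patch`, `brain_category`, and the scaled-down patch sizes are hypothetical, and brain labels 1 to 7 come from the synthesizer post.

```python
from collections import Counter

import numpy as np

BRAIN_LABELS = np.arange(1, 8)  # labels 1-7 are brain tissue, per the synthesizer post


def random_patch(label_map: np.ndarray, patch_size: int, rng: np.random.Generator) -> np.ndarray:
    """Crop a random cube of side `patch_size`; assumes every axis is at least `patch_size` long."""
    starts = [int(rng.integers(0, s - patch_size + 1)) for s in label_map.shape]
    return label_map[tuple(slice(st, st + patch_size) for st in starts)]


def brain_category(patch: np.ndarray, total_brain_voxels: int) -> str:
    """Classify a patch as containing the full brain, part of it, or none of it."""
    n = int(np.isin(patch, BRAIN_LABELS).sum())
    if n == 0:
        return "absent"
    return "full" if n == total_brain_voxels else "partial"


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    label_map = np.zeros((64, 64, 64), dtype=np.int32)
    label_map[24:40, 24:40, 24:40] = 3  # toy 16³ cube standing in for the brain
    total = int(np.isin(label_map, BRAIN_LABELS).sum())
    for size in (48, 32, 16):  # scaled-down stand-ins for the 128³/96³/64³/32³ models
        counts = Counter(brain_category(random_patch(label_map, size, rng), total) for _ in range(200))
        print(size, dict(counts))
```

The printed counts depend on the random seed, so no expected output is given; the point is that each patch size sees its own mix of the three cases.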

3/

Our synthesizer has two components:

- One controls the shape of the brain (applied to labels 1 to 7).

- One manages the background (label 0 and labels 8 to 24).

Separate parameters for each category give us fine control over shape variability (e.g., warping, scaling, noise).
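The thread lists warping, scaling, and noise as per-component parameters. The sketch below illustrates only the scaling part, as a minimal NumPy example under stated assumptions: `ComponentParams`, `scale_nearest`, `synthesize_shapes`, and the jitter values are hypothetical, not the preprint's implementation. Each component is isolated by its label set, rescaled about the volume center with its own random factor, and the brain is painted back over the background.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class ComponentParams:
    labels: tuple[int, ...]
    max_scale_jitter: float  # scale factor is drawn uniformly from [1 - j, 1 + j]


# Hypothetical settings: the background is allowed to vary more than the brain.
BRAIN = ComponentParams(labels=tuple(range(1, 8)), max_scale_jitter=0.1)
BACKGROUND = ComponentParams(labels=(0,) + tuple(range(8, 25)), max_scale_jitter=0.3)


def scale_nearest(vol: np.ndarray, scale: float) -> np.ndarray:
    """Isotropically rescale a 3D label volume about its center (nearest neighbour, same shape)."""
    center = (np.array(vol.shape) - 1) / 2.0
    grid = np.indices(vol.shape).reshape(3, -1).T  # (N, 3) destination voxel coordinates
    src = np.rint(center + (grid - center) / scale).astype(int)  # inverse mapping to source voxels
    inside = np.all((src >= 0) & (src < np.array(vol.shape)), axis=1)
    out = np.zeros(vol.size, dtype=vol.dtype)
    s = src[inside]
    out[inside] = vol[s[:, 0], s[:, 1], s[:, 2]]
    return out.reshape(vol.shape)


def synthesize_shapes(label_map: np.ndarray, rng: np.random.Generator,
                      brain: ComponentParams = BRAIN,
                      background: ComponentParams = BACKGROUND) -> np.ndarray:
    """Rescale brain and background labels independently, then composite brain over background."""
    layers = []
    for comp in (background, brain):
        layer = np.where(np.isin(label_map, comp.labels), label_map, 0)
        scale = rng.uniform(1 - comp.max_scale_jitter, 1 + comp.max_scale_jitter)
        layers.append(scale_nearest(layer, scale))
    bg, br = layers
    # Brain labels win wherever the two transformed components overlap.
    return np.where(br > 0, br, bg)
```

Keeping one parameter object per component makes it easy to, for example, let maternal tissue deform strongly while keeping brain shapes close to the annotated seeds.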

2/

During training, we augment label maps with random background shapes:

- A big ellipse (womb-like).

- Contours inside/outside the ellipse.

- Synthetic “sticks” and “bones” mimicking maternal anatomy.

This creates diverse and realistic label maps.
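A minimal NumPy sketch of two of the background shapes listed above: a random axis-aligned ellipsoid for the womb and a thick line segment for a "stick". The contours and "bones" are not shown. The functions, the label values (8 and 9, picked from the 8 to 24 background range in the synthesizer post), and the size ranges are hypothetical.

```python
import numpy as np


def add_womb_ellipse(shape, label, rng, min_frac=0.6, max_frac=0.9):
    """Return a new label volume with one random axis-aligned ellipsoid ('womb-like') set to `label`."""
    center = np.array(shape) / 2.0
    radii = rng.uniform(min_frac, max_frac, size=3) * np.array(shape) / 2.0
    coords = np.stack(np.indices(shape), axis=-1)  # (..., 3) voxel coordinates
    inside = (((coords - center) / radii) ** 2).sum(axis=-1) <= 1.0
    out = np.zeros(shape, dtype=np.int32)
    out[inside] = label
    return out


def add_stick(label_map, label, rng, length, radius=1.0):
    """Paint, in place, a thick segment with random start and direction; returns `label_map`."""
    shape = np.array(label_map.shape)
    start = rng.uniform(0, shape - 1)  # keep the start inside the voxel grid
    direction = rng.normal(size=3)
    direction /= np.linalg.norm(direction)
    coords = np.indices(label_map.shape).reshape(3, -1).T.astype(float)
    # Project every voxel onto the segment [start, start + length * direction].
    t = np.clip((coords - start) @ direction, 0.0, length)
    nearest = start + t[:, None] * direction
    dist = np.linalg.norm(coords - nearest, axis=1)
    label_map[(dist <= radius).reshape(label_map.shape)] = label
    return label_map


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shape = (48, 48, 48)
    bg = add_womb_ellipse(shape, label=8, rng=rng)
    bg = add_stick(bg, label=9, rng=rng, length=30.0)
    brain = np.zeros(shape, dtype=np.int32)
    brain[20:28, 20:28, 20:28] = 1  # toy brain label map
    augmented = np.where(brain > 0, brain, bg)  # brain labels stay on top
    print(np.unique(augmented))
```

Because every call draws new radii, start points, and directions, repeatedly augmenting the same seed label map yields a different background each time.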

🧵 1/ Do you have limited annotations and need a robust fetal brain extraction model with endless training data?

We introduce Breadth-Fine Search (BFS) and Deep Focused Sliding Window (DFS): a framework trained on infinite synthetic images derived from a small set of annotated seeds (label maps).

Nice work from Anja Samardzija and team. Instead of using CPM (connectome-based predictive modeling) to identify networks, they predefine the networks and use them to evaluate external measures. This provides a framework for developing improved tests that assess specific brain networks.

www.biorxiv.org/content/10.1...