RLJ | RLC Call for Papers

Hi RL Enthusiasts!

RLC is coming to Montreal, Quebec, in the summer: Aug 16–19, 2026!

Call for Papers is up now:

Abstract: Mar 1 (AOE)

Submission: Mar 5 (AOE)

Excited to see what you’ve been up to - Submit your best work!

rl-conference.cc/callforpaper...

Please share widely!

23.12.2025 22:16 — 👍 61 🔁 27 💬 0 📌 5

Could we meta-learn which data to train on? Yes!

Does this make LLM training more efficient? Yes!

Would you like to know exactly how? arxiv.org/pdf/2505.17895

(come see us at NeurIPS too!)

06.11.2025 17:24 — 👍 8 🔁 0 💬 0 📌 0

@rl-conference.bsky.social

27.08.2025 12:48 — 👍 1 🔁 0 💬 0 📌 0

Where do some of Reinforcement Learning's great thinkers stand today?

Find out! Keynotes of the RL Conference are online:

www.youtube.com/playlist?lis...

Wanting vs liking, Agent factories, Theoretical limit of LLMs, Pluralist value, RL teachers, Knowledge flywheels

(guess who talked about which!)

27.08.2025 12:46 — 👍 76 🔁 24 💬 1 📌 1

On my way to #ICML2025 to present our algorithm that strongly scales with inference compute, in both performance and sample diversity! 🚀

Reach out if you’d like to chat more!

13.07.2025 12:26 — 👍 8 🔁 2 💬 0 📌 0

Deadline to apply is this Wednesday!

02.06.2025 09:40 — 👍 4 🔁 1 💬 0 📌 0

The RL team is a small team led by David Silver. We build RL algorithms and solve ambitious research challenges. As one of DeepMind's oldest teams, it has been instrumental in building DQN, AlphaGo, Rainbow, AlphaZero, MuZero, AlphaStar, AlphaProof, Gemini, etc. Help us build the next big thing!

24.05.2025 10:08 — 👍 2 🔁 0 💬 1 📌 0

Research Engineer, Reinforcement Learning

London, UK

Ever thought of joining DeepMind's RL team? We're recruiting for a research engineering role in London:

job-boards.greenhouse.io/deepmind/job...

Please spread the word!

22.05.2025 15:11 — 👍 28 🔁 8 💬 1 📌 1

When faced with a challenge (like debugging) it helps to think back to examples of how you've overcome challenges in the past. Same for LLMs!

The method we introduce in this paper is efficient because examples are chosen for their complementarity, leading to much steeper inference-time scaling! 🧪

20.03.2025 10:23 — 👍 19 🔁 5 💬 0 📌 0

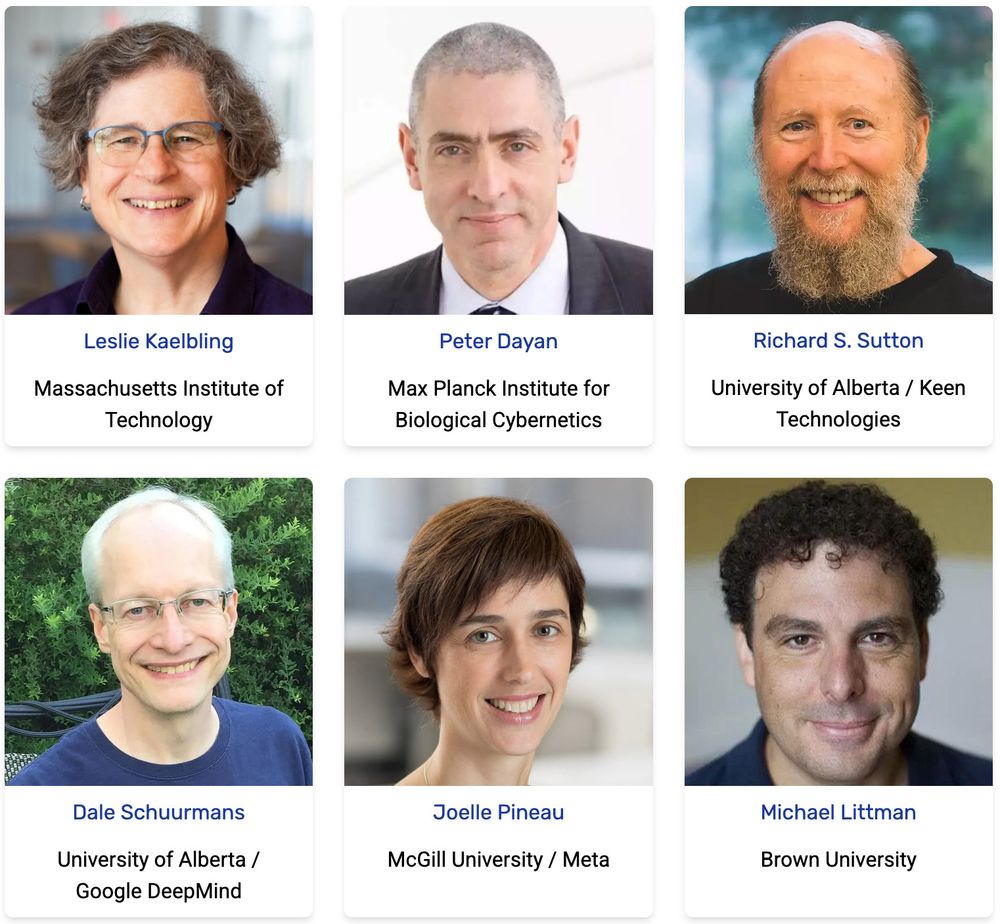

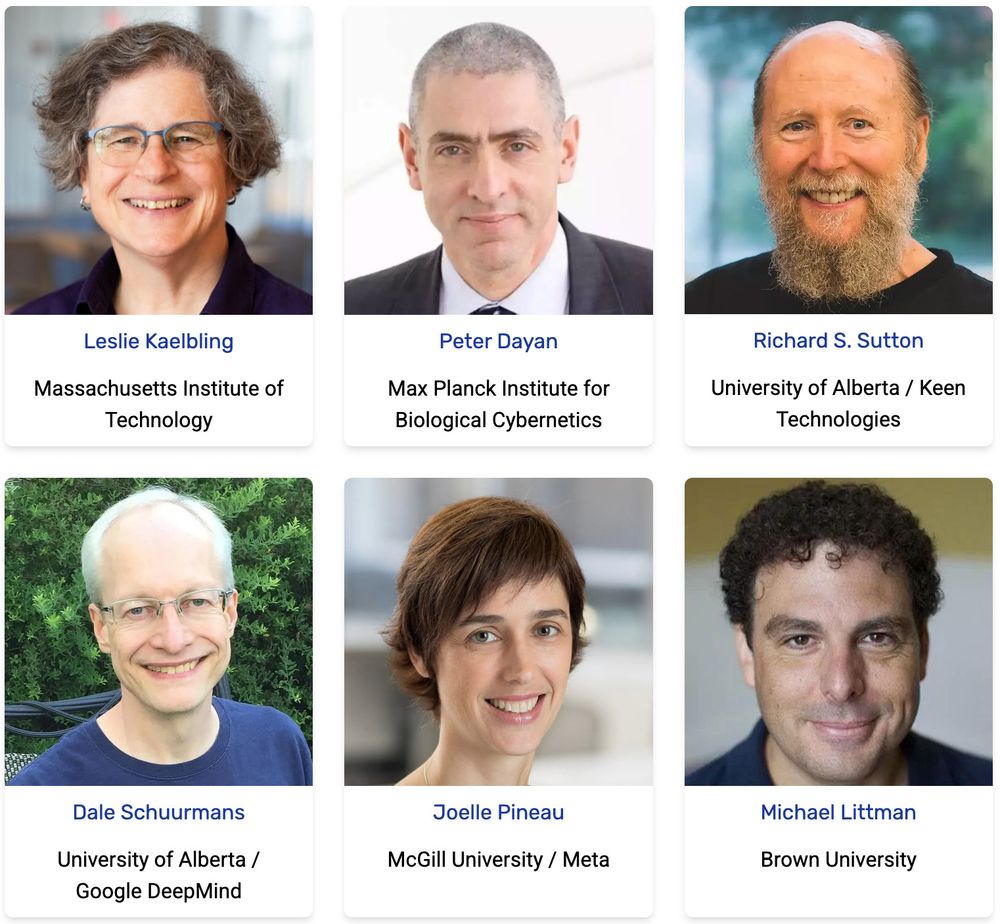

RLC Keynote speakers: Leslie Kaelbling, Peter Dayan, Rich Sutton, Dale Schuurmans, Joelle Pineau, Michael Littman

Some extra motivation for those of you in RLC deadline mode: our line-up of keynote speakers -- as all accepted papers get a talk, they may attend yours!

@rl-conference.bsky.social

24.02.2025 11:16 — 👍 37 🔁 10 💬 0 📌 1

200 great visualisations: 200 facets and nuances of 1 planetary story.

31.01.2025 13:41 — 👍 5 🔁 0 💬 0 📌 0

The sound of two users joining per second: "tik", "tok"...

30.01.2025 11:39 — 👍 4 🔁 0 💬 0 📌 0

YouTube video by Reinforcement Learning Conference

David Silver - Towards Superhuman Intelligence - RLC 2024

Reposting David Silver's talk about how RL is the way to intelligence. No particular reason

www.youtube.com/watch?v=pkpJ...

27.01.2025 23:32 — 👍 71 🔁 7 💬 0 📌 0

Announcement of Richard S. Sutton as RLC 2025 keynote speaker

Excited to announce the first RLC 2025 keynote speaker, a researcher who needs little introduction, whose textbook we've all read, and who keeps pushing the frontier on RL with human-level sample efficiency

08.01.2025 15:03 — 👍 51 🔁 4 💬 0 📌 0

Could language games (and playing many of them) be the renewable energy that Ilya was hinting at yesterday? They do address two core challenges of self-improvement -- let's discuss!

My talk is today at 11:40am, West Meeting Room 220-222, #NeurIPS2024

language-gamification.github.io/schedule/

14.12.2024 16:30 — 👍 27 🔁 1 💬 0 📌 0

Don't get to talk enough about RL during #neurips2024? Then join us for more, tomorrow night at The Pearl!

10.12.2024 22:42 — 👍 14 🔁 0 💬 0 📌 0

Dynamic programming has a fun origin story. In 1950, Bellman wanted to coin a term that "was something not even a Congressman could object to".

See here:

pubsonline.informs.org/doi/pdf/10.1...

09.12.2024 16:50 — 👍 5 🔁 0 💬 1 📌 0

This year's (first-ever) RL conference was a breath of fresh air! And now that it's established, the next edition is likely to be even better: Consider sending your best and most original RL work there, and then join us in Edmonton next summer!

02.12.2024 19:37 — 👍 19 🔁 3 💬 0 📌 0

Ohh... good morning to you too!

Clearly this got off on the wrong foot: do you want to try again, maybe more constructively (in the spirit of bluesky not being the other place)? This is a preprint, so I'd be happy to hear your suggestions for making it less "ignorant"...

02.12.2024 10:36 — 👍 4 🔁 0 💬 1 📌 0

Either one or many players. For "improvement" to be well-defined, one agent must be special (see footnote 6), but the multi-agent setting has many benefits.

30.11.2024 16:54 — 👍 1 🔁 0 💬 1 📌 0

Open-Endedness is Essential for Artificial Superhuman Intelligence

In recent years there has been a tremendous surge in the general capabilities of AI systems, mainly fuelled by training foundation models on internetscale data. Nevertheless, the creation of openended...

1: open-ended means that it will keep producing novel and learnable artifacts (see the definition here: arxiv.org/abs/2406.04268), on the timescale of interest for the observer.

2: I think as a thought experiment it is valid, as it could work in principle, but of course it hasn't been built?

29.11.2024 12:32 — 👍 1 🔁 0 💬 1 📌 0

In section 5 (second paragraph), there's about a dozen references to language games people are already using (one per paper), some with ingenious ways to provide feedback.

Also, I suspect the workshop will ultimately have the poster abstracts online with plenty of additional material!

28.11.2024 19:08 — 👍 1 🔁 0 💬 1 📌 0

@colah.bsky.social: with a few years' hindsight, how do you see the Distill space now? Is there a chance for a reboot or a rebirth in another form?

28.11.2024 11:23 — 👍 2 🔁 0 💬 0 📌 0

Distill — Latest articles about machine learning

Articles about Machine Learning

I think the Distill journal was really valuable in this space, but unfortunately is no longer around to help...

distill.pub

27.11.2024 13:42 — 👍 12 🔁 0 💬 2 📌 0

Oh, this is my tribe!

Some other people here that I appreciate for their infectious positivity:

@akoopa.bsky.social

@jhamrick.bsky.social

@rockt.ai

@pcastr.bsky.social

@luisazintgraf.bsky.social

@dabelcs.bsky.social

@aditimavalankar.bsky.social

23.11.2024 10:35 — 👍 12 🔁 0 💬 4 📌 0

RLC will be held at the Univ. of Alberta, Edmonton, in 2025. I'm happy to say that we now have the conference's website out: rl-conference.cc/index.html

Looking forward to seeing you all there!

@rl-conference.bsky.social

#reinforcementlearning

22.11.2024 22:46 — 👍 60 🔁 19 💬 2 📌 2

Ok, we'll have to make sure a restricted the closed system generates an open-ended set of ideas then! 😉

20.11.2024 10:10 — 👍 2 🔁 0 💬 0 📌 0

Research Strategy + Science at Google DeepMind. http://arkitus.com

Computer Scientist, Leading Science and Strategic Initiatives @ Google DeepMind.

Research Scientist at Google DeepMind interested in science.

Views here are my own.

https://www.nowozin.net/sebastian/

A summer school on the uses of AI in and for games.

Industry lectures, key techniques in AI and games for playing, generating the content, and modelling players.

Works at Google DeepMind on Safe+Ethical AI

Research scientist @ Google DeepMind. AI to help humans.

Institute Professor, MIT Economics. Co-Director of @mitshapingwork.bsky.social. Author of Why Nations Fail, The Narrow Corridor, and Power & Progress.

Researcher in machine learning

CTO of Technology & Society at Google, working on fundamental AI research and exploring the nature and origins of intelligence.

AI researcher at Google DeepMind -- views on here are my own.

Interested in cognition & AI, consciousness, ethics, figuring out the future.

UBC Computer Science; physics-based models of human movement; deep reinforcement learning; animation; robotics

Kempner Institute research fellow @Harvard interested in scaling up (deep) reinforcement learning theories of human cognition

prev: deepmind, umich, msr

https://cogscikid.com/

Researching learning, decision-making & habitual behavior 🧠 / ECN & BCCN PhD Fellow

milenamusial.com

Postdoc in Cognitive and Computational Neuroscience at the University of Leiden, former postdoc at Ecole Normale Supérieure (Paris, France) and PhD student at Université Paris Saclay, interested in how we make decisions.

Ask me about Reinforcement Learning

Research @ Sony AI

AI should learn from its experiences, not copy your data.

My website for answering RL questions: https://www.decisionsanddragons.com/

Views and posts are my own.

she/her pronounced Ya'elle, not like the university. Comp cog neuro, feminist, activist, mom. Stop the war NOW. End the occupation. Equality will bring security.