🧵 7/7

Given our results, these tasks will hopefully also serve as proper benchmarks for the TDL and GeomDL communities.

💻 Code, data, and a tutorial will be available soon!

@francescadomin8

🧵 6/7

🧠 Based on these theoretical insights, we test SSNs on brain dynamics classification tasks, where they improve on existing methods by up to 50% over vanilla message-passing GNNs and by up to 27% over the second-best models.

🧵 5/7

✅ We use SSNs to bridge the gap between neurotopology and deep learning, connecting the two for the first time. In particular, we prove that SSNs can recover several key topological invariants that are critical for characterizing brain activity.

🧵 4/7

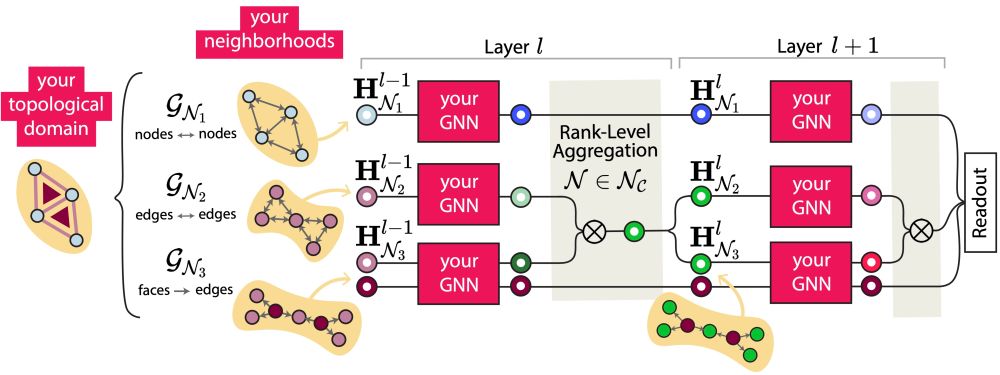

✅ SSNs can be implemented following any differentiable approach, not only message-passing.

✅ We also introduce Routing-SSNs (R-SSNs), lightweight scalable variants that dynamically select the most relevant interactions in a learnable way.

🧵 3/7

🌟 Contribution:

✅ We present Semi-Simplicial Neural Networks (SSNs), models that operate on semi-simplicial sets and are currently the most comprehensive deep learning framework for capturing higher-order directed topological interactions in data.

🧵 2/7

Thank you all, especially @manuel_lecha, who has been the incredibly talented driving force behind this work. I will not forget our endless conversations at all hours of the day and night! 😅 🤣🫂

🧵 1/7

Our new preprint “Directed Semi-Simplicial Learning with Applications to Brain Activity Decoding” is online at arxiv.org/abs/2505.17939

An amazing project with a dream team, which led to a principled Topological Deep Learning architecture for a unique real-world use case!

Thank you Guillermo Bernárdez, @clabat9.bsky.social, and @ninamiolane.bsky.social for making this work possible!

🏠 @geometric-intel.bsky.social @ucsb.bsky.social

🍩TopoTune takes any neural network as input and builds the most general TDL model to date, complete with permutation equivariance and unparalleled expressivity.

⚙️ Thanks to its implementation in TopoBench, defining and training these models only requires a few lines of code.

TopoTune is going to ICML 2025!🎉🇨🇦

Curious to try topological deep learning with your custom GNN or your specific dataset? We built this for you! Find out how to get started at geometric-intelligence.github.io/topotune/

📢📢📢"The Relativity of Causal Knowledge"

THANK YOU to @clabat9.bsky.social for working side by side with me on this exciting project and making this article possible. Big thanks also to @hansmriess.bsky.social and Fabio Massimo Zennaro for their valuable feedback on an earlier version of the paper.

When we view causality subjectively, it turns into relative causal knowledge—much like a fact can seem like an opinion when seen subjectively. Still, causality is causality and a fact remains a fact, but it becomes understandable only when viewed within the entire network.🧵 9/9

18.03.2025 13:34

Each subject in a network of relations then has its own subjective Causal Knowledge, BUT it can be accessed by the other subjects of the network only through their own perspective. Imagine how important this is in a network of AI agents (or in any human network).

🧵 8/9

Overall, we used these tools to go beyond Structural Causal Models as they are usually understood and to define a broad notion of Causal Knowledge.

🧵 7/9

Although they are sophisticated mathematical frameworks, we invite any ML practitioner to read this work: it is self-contained and has immediate methodological and practical implications.

🧵 6/9

We believe this was a necessary step toward a better understanding of causality and will have significant implications on AI.

The moment our conceptual goal was clear, we found the technical tools needed to implement it: Network sheaves and category theory.

🧵 5/9

By stripping causality of its oracular and absolute meaning, the relativity of causal knowledge situates it within a different ontological setting, where truth is not monolithic but emerges inevitably and relatively from a set of relationships.

🧵 4/9

We ended up converging on a simple but technically unexplored concept: any causal model is an imperfect and subjective representation of the world, and it cannot be severed from the network of relations the subject is immersed in.

🧵 3/9

One day, Gabriele and I were talking about Grothendieck and his relativism, and we asked ourselves how his approach could be philosophically framed and how it could be used for causal theory.

🧵 2/9

📜“The Relativity of Causal Knowledge”

arxiv.org/abs/2503.11718

Bluntly, this was the most fun paper I have ever worked on. A thank you to @officiallydac, who was amazing at making our vision a technically sound reality.

(Spoiler: more categories and sheaves for you 😇)

🧵 1/9

🔥🌏 According to the @IPCC_CH, over 40% of the global population (about 3.5 billion people) live in contexts of extreme climate vulnerability.

The IPCC also identified 127 risks that affect every aspect of the private, social, and economic life of everyone.

Everyone.

🧵 10/10

These numbers help dismantle the false narrative of a Western World under siege by migrants and highlight how most migration occurs within or around the regions most affected.

🧵 9/10

🙅 Contrary to the rhetoric of an “invasion,” the Report points out that:

• 76 million people—the majority of those 120 million—are internally displaced in their own countries;

• 69% of recognized refugees are hosted in countries neighboring those in crisis.

🧵 8/10

🌍🚶‍♀️ It is no coincidence that @Refugees estimates that, in 2024, around 120 million people worldwide are forcibly displaced, and 75% of them come from countries with high exposure to climate risks.

🧵 7/10

This finding underscores how the effects of global warming—droughts, desertification, extreme weather events, and the depletion of agricultural land—are directly linked to individuals’ migratory choices.

🧵 6/10

🏛️🔍 A survey of 348 migrants interviewed in various reception centers in Italy found that 69% of those classified as “economic migrants” still identify climate change as one of the factors contributing to their decision to move.

🧵 5/10