😀😀😀

02.07.2025 15:18 — 👍 0 🔁 0 💬 0 📌 0

Alfredo Canziani

@alfcnz.bsky.social

Musician, math lover, cook, dancer, 🏳️🌈, and an ass prof of Computer Science at New York University

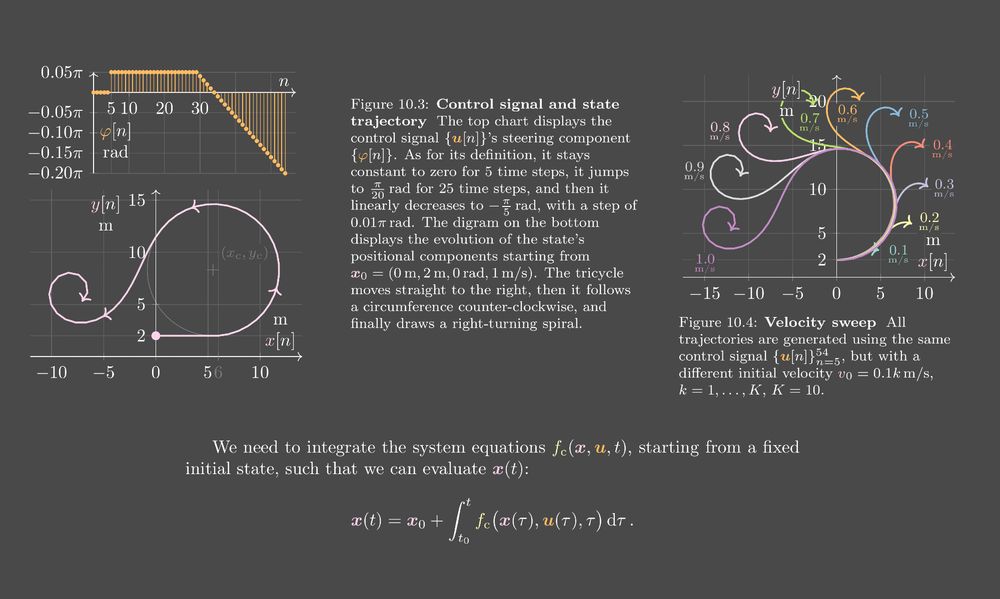

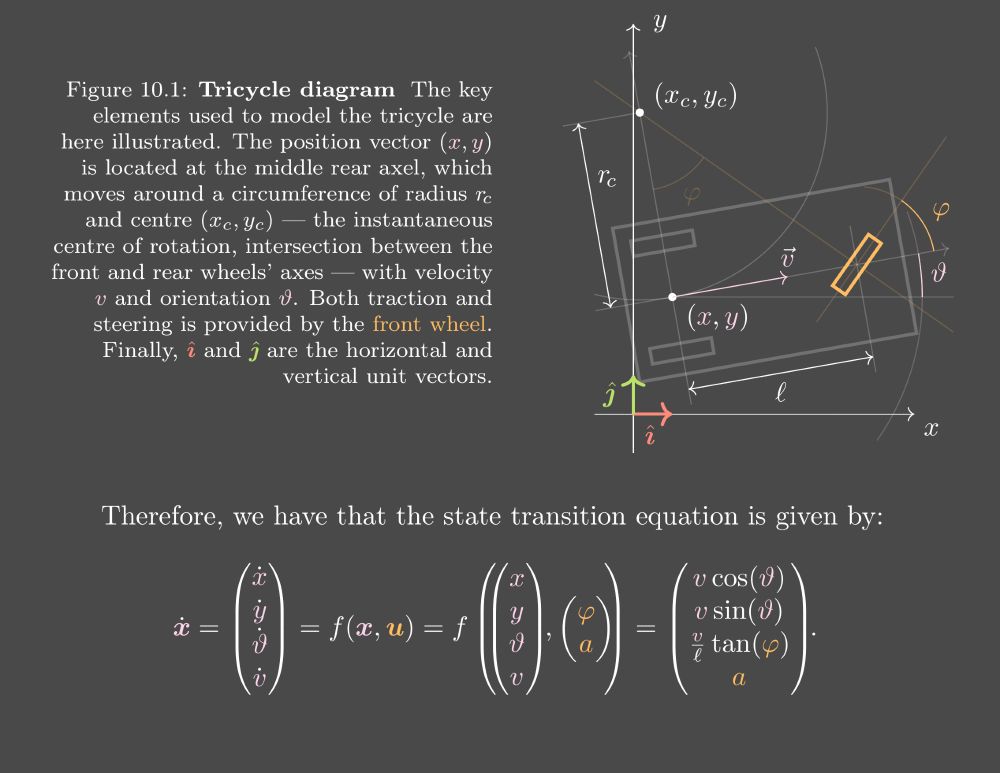

To compute the movement of the state x(t), we need to temporally integrate its velocity field ẋ(t). 🤓

The control signal (the steering angle) stays at 0, then steps to 0.05π, then ramps linearly to −0.20π. While the steering angle is held constant, the vehicle moves along circular arcs.

Finally, a sweep of initial velocity is performed.
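A minimal sketch of what that temporal integration could look like, using forward Euler. The post does not spell out the vehicle model, so the kinematic bicycle model, the state layout (x, y, θ, speed), the `wheelbase`, the time horizon, and the exact switching times of the steering schedule are all assumptions made for illustration.

```python
import math

def velocity(state, control, wheelbase=1.0):
    """Velocity field dx/dt = f(x, u) for an ASSUMED kinematic bicycle model."""
    x, y, theta, speed = state
    steering, accel = control
    return (
        speed * math.cos(theta),                  # dx/dt
        speed * math.sin(theta),                  # dy/dt
        speed / wheelbase * math.tan(steering),   # dtheta/dt
        accel,                                    # dspeed/dt
    )

def steering_schedule(t, t_end):
    """Hypothetical timing: 0, then 0.05π, then a linear ramp down to −0.20π."""
    if t < t_end / 3:
        return 0.0
    if t < 2 * t_end / 3:
        return 0.05 * math.pi
    frac = (t - 2 * t_end / 3) / (t_end / 3)      # goes from 0 to 1 over the ramp
    return (0.05 - 0.25 * frac) * math.pi

def integrate(state, t_end=6.0, dt=0.01):
    """Forward-Euler integration: x(t + dt) ≈ x(t) + dt · f(x(t), u(t))."""
    trajectory = [state]
    for k in range(round(t_end / dt)):
        control = (steering_schedule(k * dt, t_end), 0.0)  # no acceleration
        dstate = velocity(state, control)
        state = tuple(s + dt * ds for s, ds in zip(state, dstate))
        trajectory.append(state)
    return trajectory

# Sweep of the initial speed, loosely mirroring the last step of the post
for s0 in (0.5, 1.0, 2.0):
    x, y, theta, speed = integrate((0.0, 0.0, 0.0, s0))[-1]
    print(f"s0={s0}: final position=({x:.2f}, {y:.2f}), heading={theta:.2f} rad")
```

Forward Euler is the simplest choice here; a smaller `dt` or a higher-order scheme would track the circular arcs more accurately.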

No, it’s just a non-native speaker making silly mistakes. 😅😅😅

Thanks for catching that! 😀

Currently, writing chapter 10, «Planning and control».

Physical constraints on the evolution of the state (e.g. pure rotation of the wheels) are encoded through the velocity of the state ẋ = dx(t)/dt, a function of the state x(t) and the control u(t).

🥳🥳🥳

10.06.2025 20:36 — 👍 1 🔁 0 💬 0 📌 0

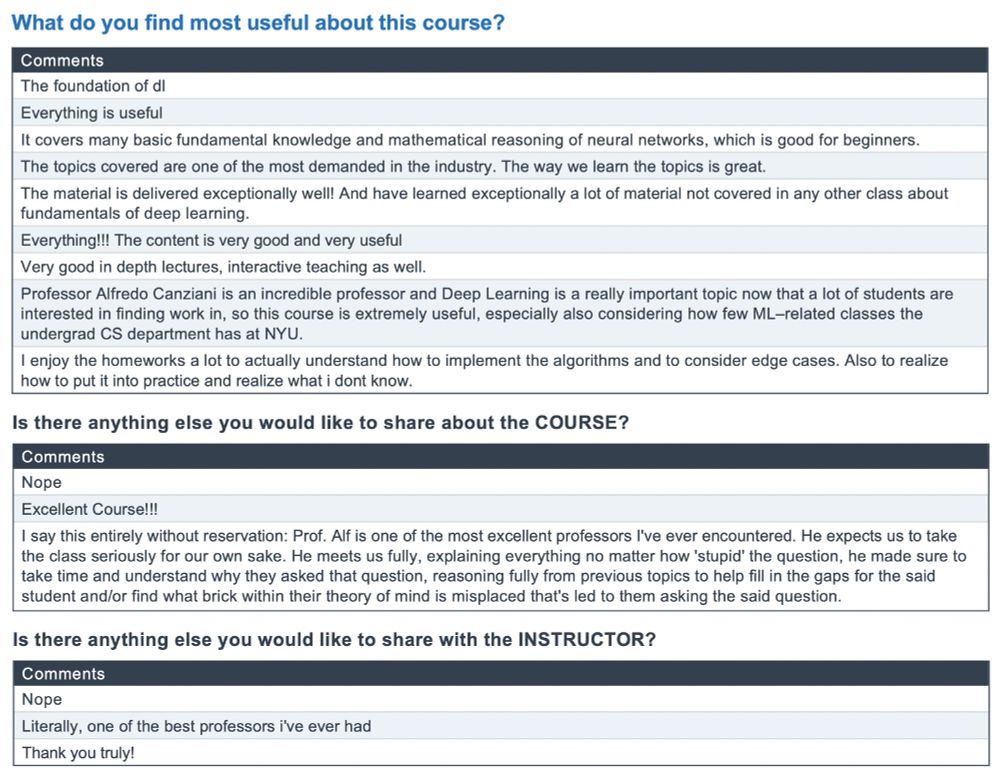

Oh! The undergrad feedback came in! 🥹🥹🥹

10.06.2025 20:03 — 👍 10 🔁 1 💬 1 📌 0

Releasing the Energy-Book 🔋, starting from the first chapter of its appendix, where I explain how I create my figures. 🎨

Feel free to report errors via the issue tracker, contribute to the exercises, and show me what you can draw in the discussion section. 🥳

github.com/Atcold/Energ...

On a summer Friday night,

the first chapter sees the light.

🥹🥹🥹

Yeah, it took me 20 days to get back 🥹🥹🥹

I swear I respond to instant messages as they get through! 🥲🥲🥲

Anyhow, one more successful semester completed. 🥳🥳🥳

This is the first semester I'm teaching this course.

I think I want to wait until version 2 (coming out this fall) before deciding to push the entire course online.

Think of this lesson as a preview of what's coming next.

I'll be using it to advertise my course to the upcoming students.

You've been asking what I've been up to and how the book 📖 was coming along… well, since this new course is under construction, all my energy has been diverted to this project.

It's been exhausting 🥵 but rewarding 😌. It forced me to cover the history and the basics of my field.

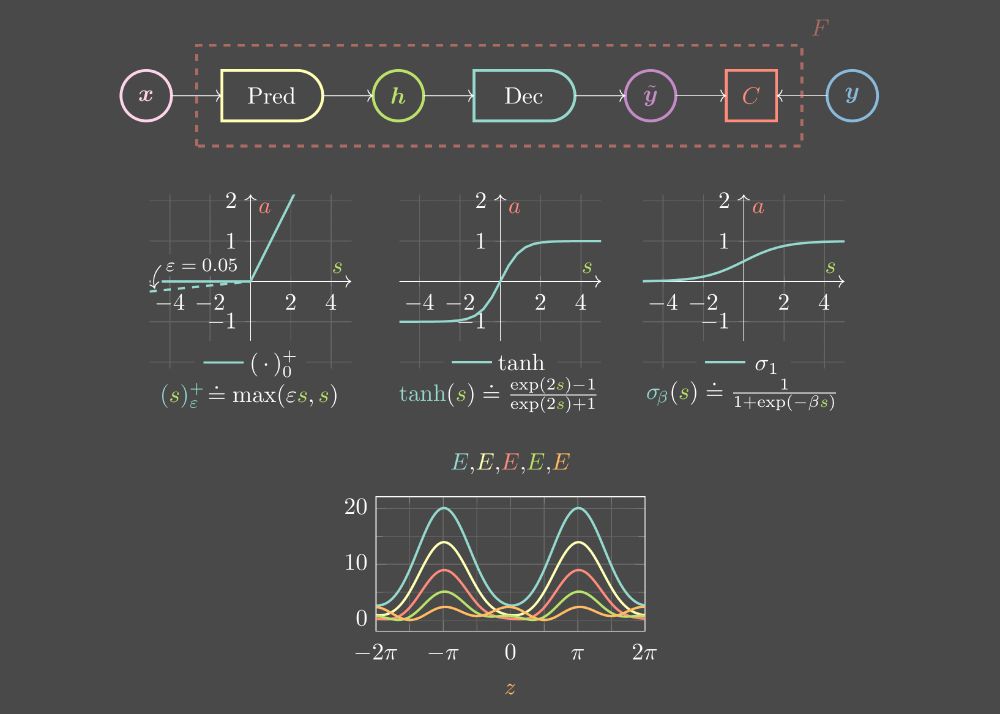

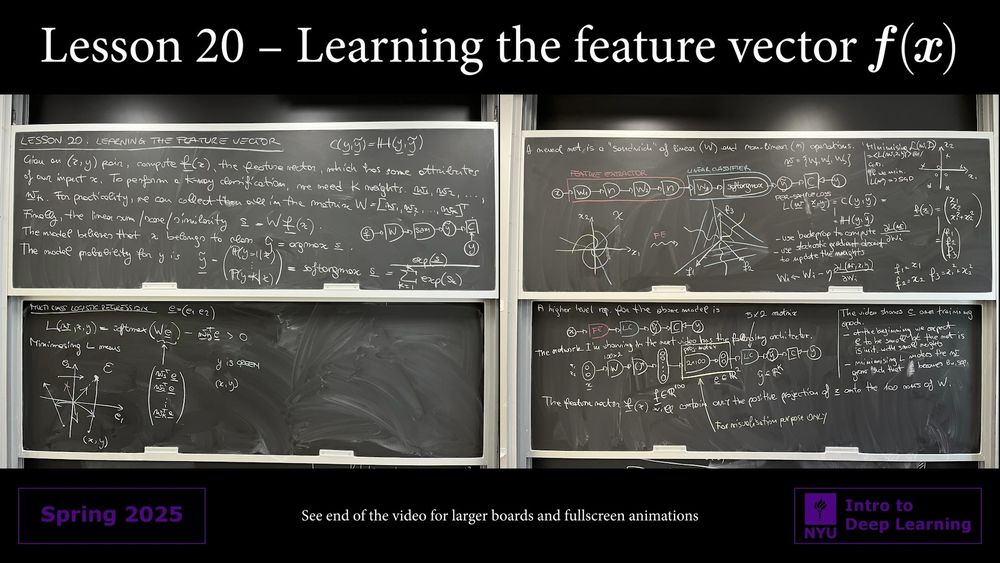

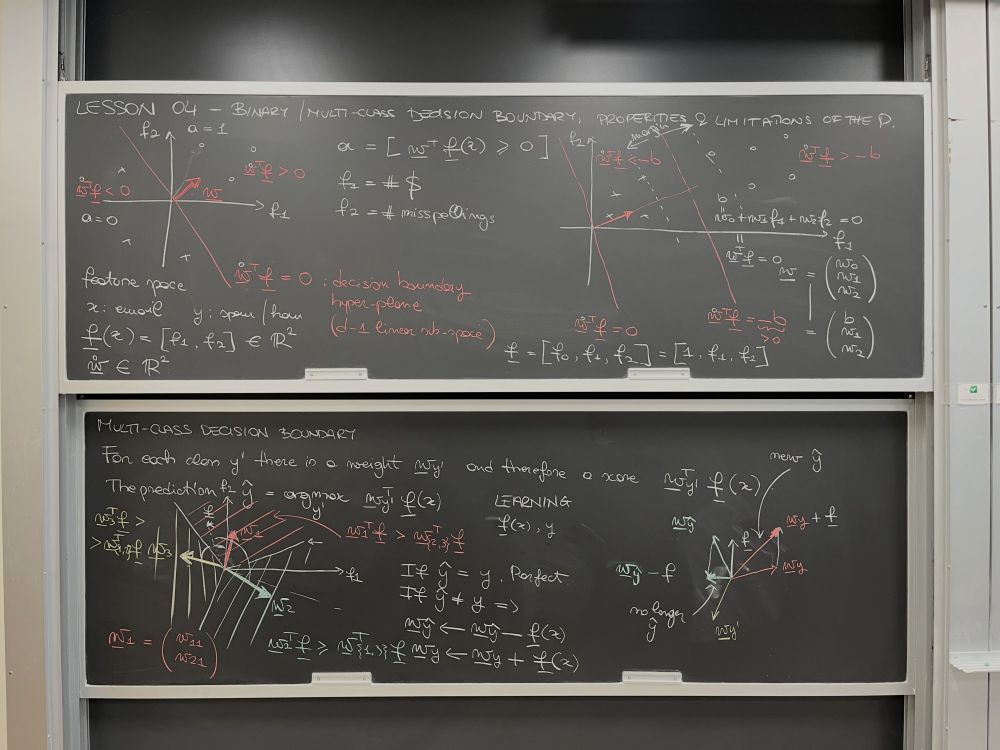

In this lecture from my new undergrad course, we review linear multiclass classification, leverage backprop and gradient descent to learn a linearly separable feature vector for the input, and observe the training dynamics in a 2D embedding space. 🤓

youtu.be/saskQ-EjCLQ

This is different from the video I made 5 years ago, where the input-output linear interpolation of an already trained network shows what a neural net does to its input. Namely, it follows a piece-wise linear mapping defined by the hidden layer.

08.04.2025 04:19 — 👍 2 🔁 0 💬 0 📌 0

Training of a 2 → 100 → 2 → 5 fully connected ReLU neural net via cross-entropy minimisation.

• it starts outputting small embeddings

• around epoch 300 learns an identity function

• takes 1700 epochs more to unwind the data manifold
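For readers who want to see the shape of such a network, here is a rough, untrained sketch of a 2 → 100 → 2 → 5 forward pass and its cross-entropy loss. The initialisation, the sample input, the label, and the choice to apply the ReLU only after the 100-unit hidden layer (leaving the 2D embedding linear) are assumptions; the post does not specify them.

```python
import math
import random

random.seed(0)  # reproducible toy weights

def init_layer(n_in, n_out):
    """Hypothetical init: Gaussian weights scaled by 1/sqrt(n_in), zero biases."""
    scale = 1 / math.sqrt(n_in)
    weights = [[random.gauss(0, scale) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def affine(layer, x):
    """Compute W x + b for one fully connected layer."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def relu(v):
    return [max(0.0, vi) for vi in v]

def forward(layers, x):
    """Return the 2D embedding and the 5 class scores (logits)."""
    hidden = relu(affine(layers[0], x))     # 2 → 100, ReLU
    embedding = affine(layers[1], hidden)   # 100 → 2 (assumed linear)
    logits = affine(layers[2], embedding)   # 2 → 5 linear classifier
    return embedding, logits

def cross_entropy(logits, label):
    """Softmax cross-entropy, computed with a stable log-sum-exp."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum_exp - logits[label]

sizes = [2, 100, 2, 5]
layers = [init_layer(n_in, n_out) for n_in, n_out in zip(sizes, sizes[1:])]

embedding, logits = forward(layers, [0.3, -0.7])  # arbitrary 2D input
loss = cross_entropy(logits, label=3)             # arbitrary class label
print(len(embedding), len(logits), round(loss, 3))
```

Training would add backprop and gradient descent on top of this; plotting `embedding` for every input over the epochs is one way to watch the dynamics listed above.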

Did you enjoy Alfredo Canziani's lecture as much as we did?! If so, check out his website to find out more about his educational offerings: atcold.github.io

You can also find really cool material on Alfredo's YouTube channel! @alfcnz.bsky.social

📣 With just a few days to go before Khipu 2025 begins, we are pleased to announce that both the main-hall activities and Friday's closing ceremony will be streamed live on this channel: khipu.ai/live/. We look forward to seeing you!

07.03.2025 11:30 — 👍 7 🔁 2 💬 0 📌 0

I *really* had a blast giving this improvised lecture on a topic requested on the spot without any sleep! 🤪

The audience seemed to enjoy the show. 😄

To find out more about my educational offerings, check out my website! atcold.github.io

Follow here and subscribe on YouTube! 😀

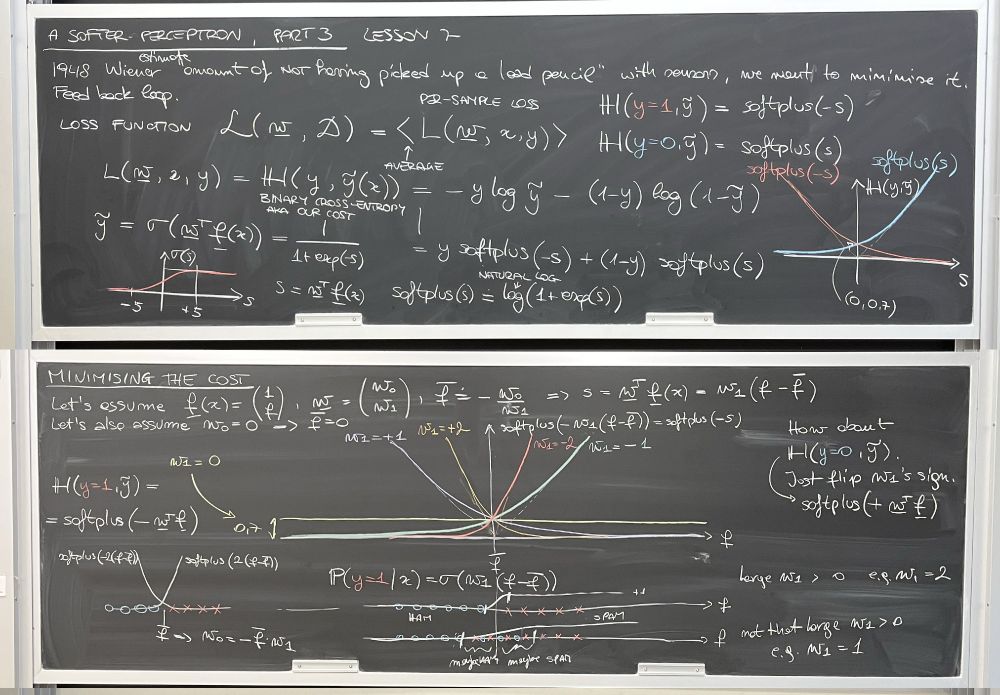

In today's episode, we review the concepts of loss ℒ(𝘄, 𝒟), per-sample loss L(𝘄, x, y), binary cross-entropy cost ℍ(y, ỹ) = y softplus(−s) + (1−y) softplus(s), ỹ = σ(𝘄ᵀ𝗳(x)).

Then, we minimise the loss by choosing convenient values for our weight vector 𝘄.

@nyucourant.bsky.social
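A small numerical check of the binary cross-entropy expression from the episode, assuming that s denotes the logit 𝘄ᵀ𝗳(x), so that ỹ = σ(s). Under that assumption, the softplus form should match the textbook form −y log ỹ − (1−y) log(1−ỹ). The function names are illustrative only.

```python
import math

def softplus(s):
    """Numerically stable log(1 + exp(s))."""
    return max(s, 0.0) + math.log1p(math.exp(-abs(s)))

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def bce_from_logit(y, s):
    """ℍ(y, ỹ) written with softplus, taking the logit s as input."""
    return y * softplus(-s) + (1 - y) * softplus(s)

def bce_from_probability(y, y_tilde):
    """Textbook binary cross-entropy, taking the probability ỹ as input."""
    return -(y * math.log(y_tilde) + (1 - y) * math.log(1 - y_tilde))

# Both forms agree for either label and a handful of logits
for y in (0, 1):
    for s in (-3.0, -0.5, 0.0, 2.0):
        a = bce_from_logit(y, s)
        b = bce_from_probability(y, sigmoid(s))
        assert math.isclose(a, b, rel_tol=1e-9)
```

The softplus form avoids taking the log of a probability that has saturated to 0 or 1, which is why it tends to be preferred in practice.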

Yay! 🥳🥳🥳

01.02.2025 01:28 — 👍 0 🔁 0 💬 0 📌 0

😅😅😅

30.01.2025 20:26 — 👍 0 🔁 0 💬 0 📌 0

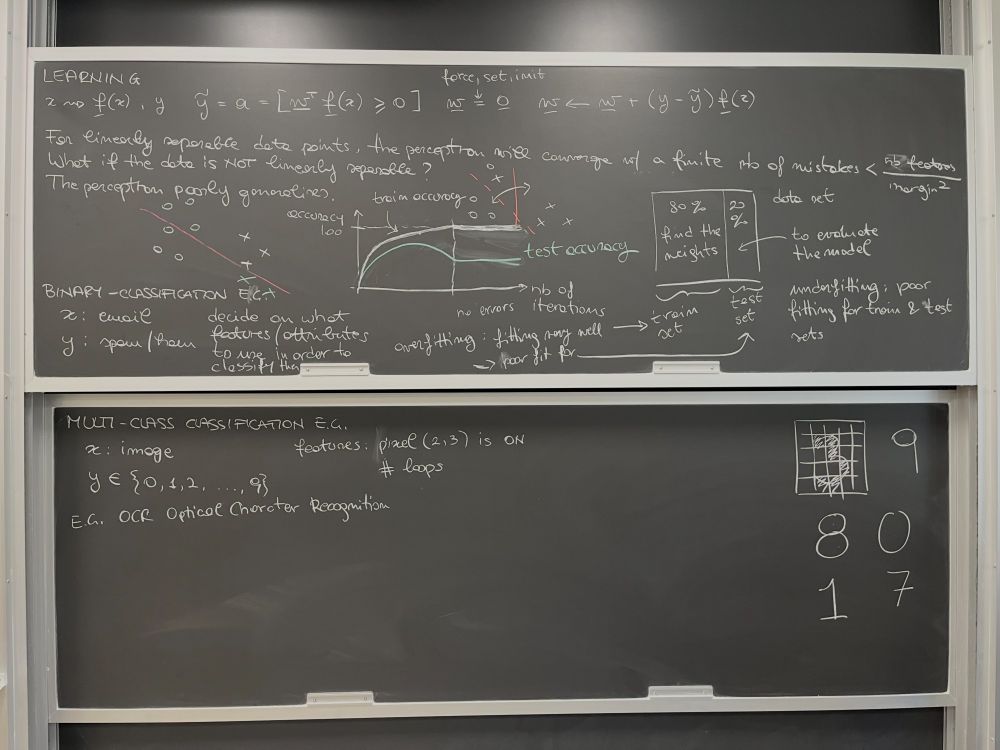

Tue morning: *prepares slides*

Tue class: *improv blackboard lecture*

Outcome: unexpectedly great lecture.

Thu morning: *prep handwritten notes*

Thu class: *executes blackboard lecture*

Students: 🤩🤩🤩🤩🤩🤩🤩🤩🤩

@nyucourant.bsky.social @nyudatascience.bsky.social

🥹🥹🥹

26.01.2025 05:01 — 👍 0 🔁 0 💬 0 📌 0

I had linear algebra, calculus, and machine learning. I just removed the latter. 😅😅😅

26.01.2025 02:55 — 👍 1 🔁 0 💬 0 📌 0

🥹🥹🥹

26.01.2025 02:54 — 👍 0 🔁 0 💬 0 📌 0

What’s going on? 😮😮😮

25.01.2025 23:53 — 👍 0 🔁 0 💬 1 📌 0

🤗🤗🤗

25.01.2025 19:39 — 👍 0 🔁 0 💬 0 📌 0

🥰🥰🥰

24.01.2025 05:14 — 👍 1 🔁 0 💬 0 📌 0

I think the new undergrad course is going well. At least we're having fun! 😁😁😁

23.01.2025 21:19 — 👍 16 🔁 1 💬 1 📌 0

I am! 🥲🥲🥲

23.01.2025 05:17 — 👍 0 🔁 0 💬 0 📌 0