Final week to apply for the 2026 SFI Complexity Postdoctoral Fellowships

If you're an early-career scholar and passionate about collaborative, transdisciplinary research beyond traditional departments, this is the postdoc fellowship for you.

Deadline: Oct 1, 2025

Apply: santafe.edu/sfifellowship

22.09.2025 18:59 — 👍 16 🔁 20 💬 0 📌 1

Have a striking image of your biophysics research? 🐭🐒🐛🪰🦠 Submit it to the DBIO Image Contest!

The winning images will be advertised on shirts and other media at the 2026 Global Physics Summit.

Submit here:

05.09.2025 14:29 — 👍 1 🔁 1 💬 0 📌 0

Republicans want states to cut food aid errors. Their bill could do the opposite: www.nytimes.com/2025/07/02/u...

02.07.2025 22:16 — 👍 95 🔁 20 💬 8 📌 2

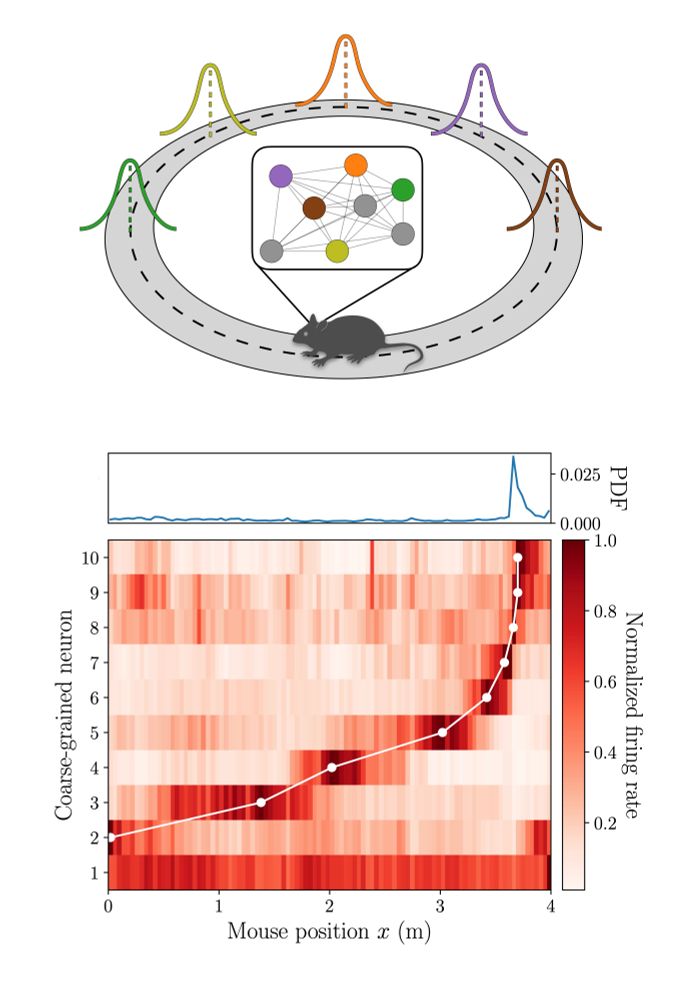

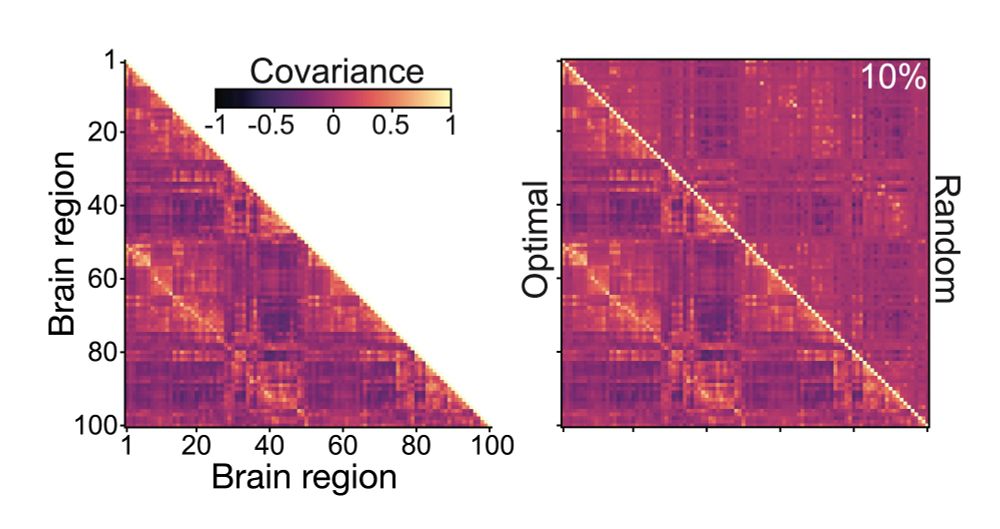

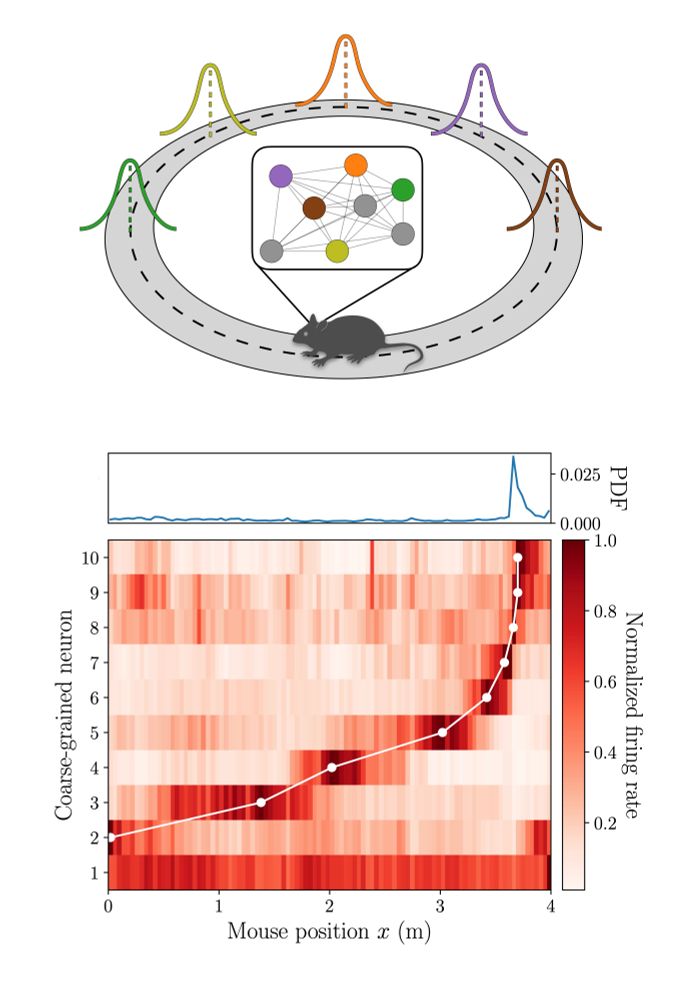

Interested in coarse-graining, irreversibility, or neural activity in the hippocampus?

If so, check out our new preprint exploring how maximizing the irreversibility preserved from microscopic dynamics leads to interpretable coarse-grained descriptions of biological systems!

05.06.2025 18:23 — 👍 5 🔁 1 💬 0 📌 0

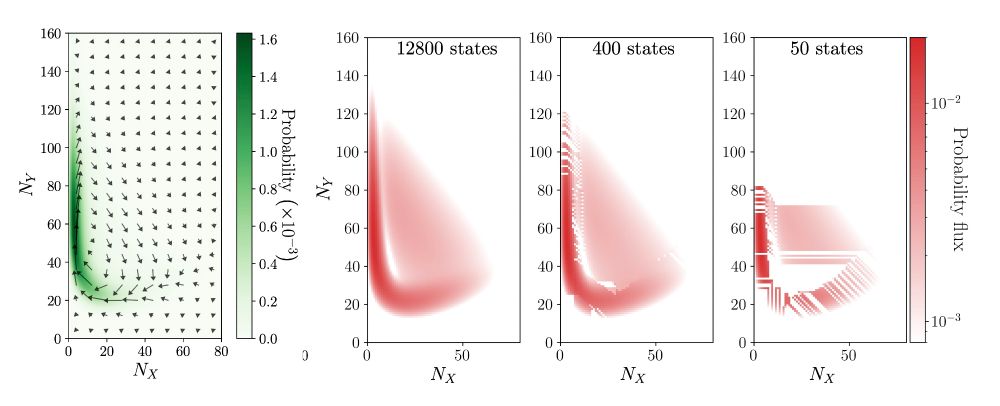

Living systems operate nonequilibrium processes across many scales in space and time. Is there a model-free way to bridge the descriptions at different levels of coarse-graining? Here we find that preserving the evidence of time-reversal symmetry breaking works remarkably well!

05.06.2025 19:26 — 👍 8 🔁 1 💬 0 📌 0

Check out the preprint for much more: "Coarse-graining dynamics to maximize irreversibility"

And a massive shout out to the leaders of the project: Qiwei Yu (@qiweiyu.bsky.social) and Matt Leighton (@mleighton.bsky.social)

05.06.2025 18:16 — 👍 1 🔁 0 💬 0 📌 0

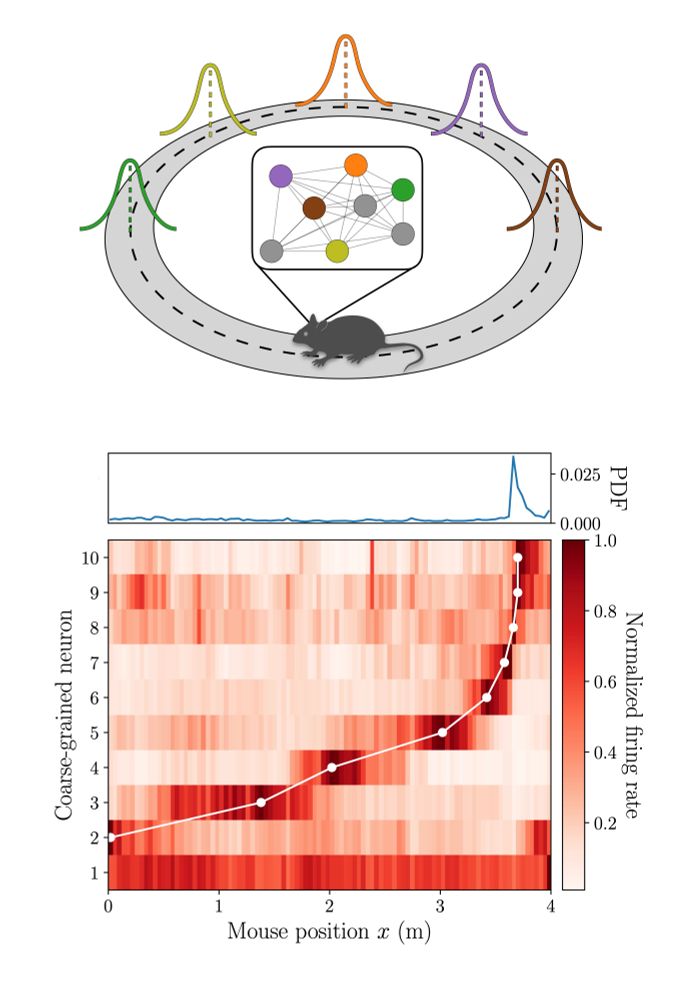

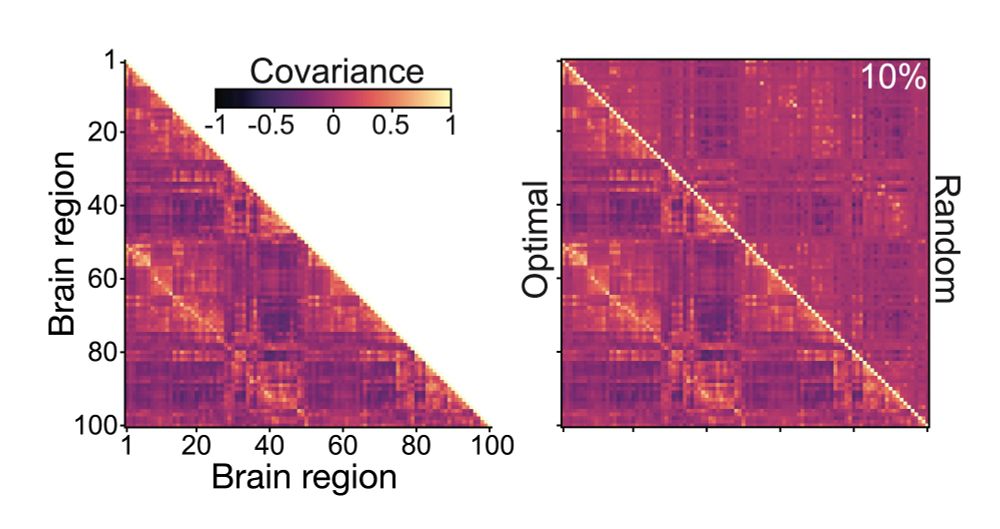

In neural dynamics in the hippocampus, the maximum irreversibility coarse-graining uncovers a large-scale loop of flux in neural space that is directly driven by the animal's movement in physical space.

05.06.2025 18:16 — 👍 1 🔁 0 💬 1 📌 1

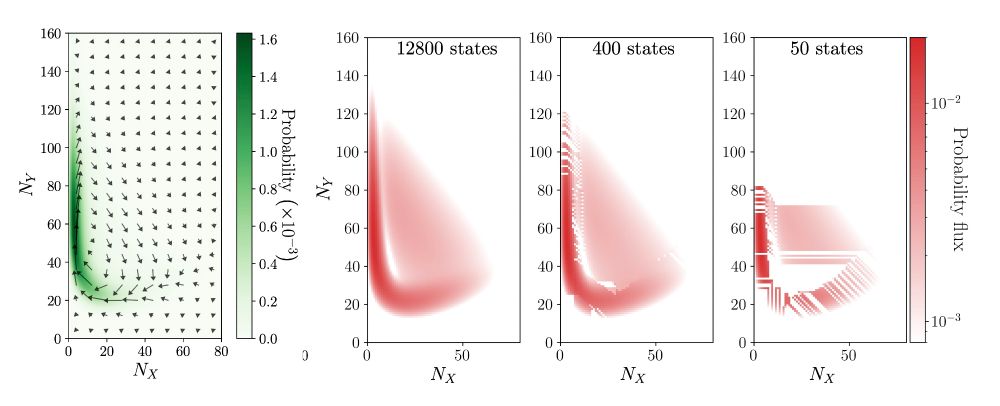

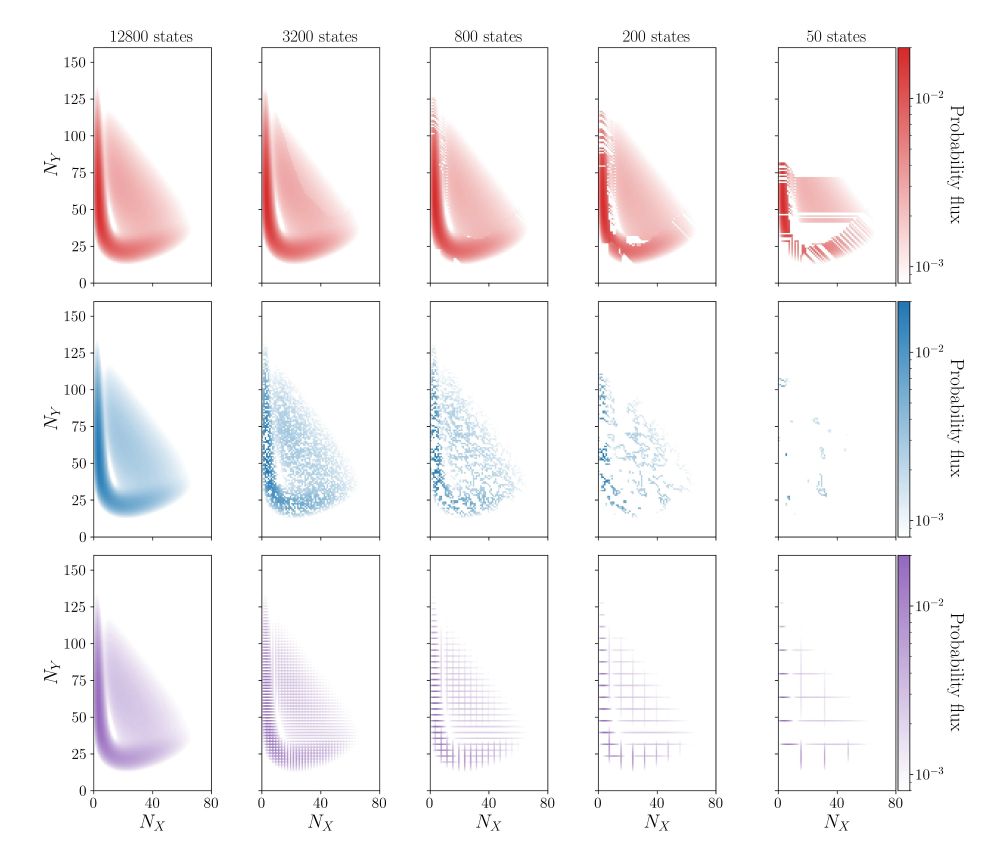

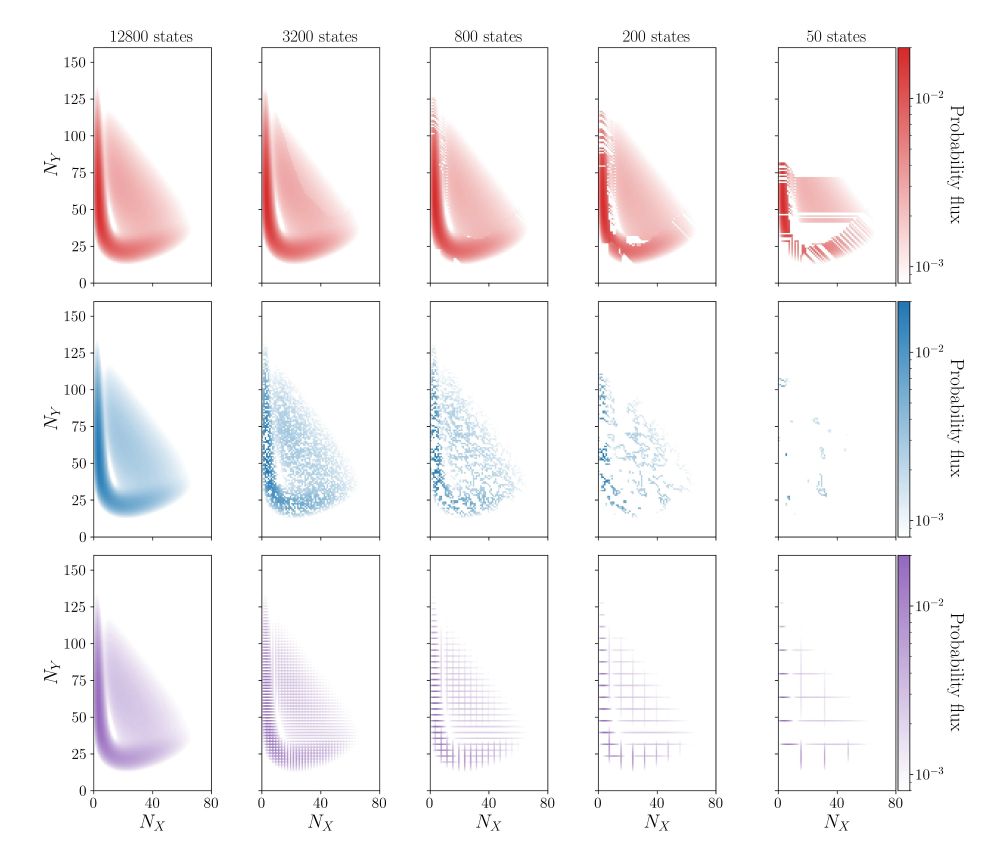

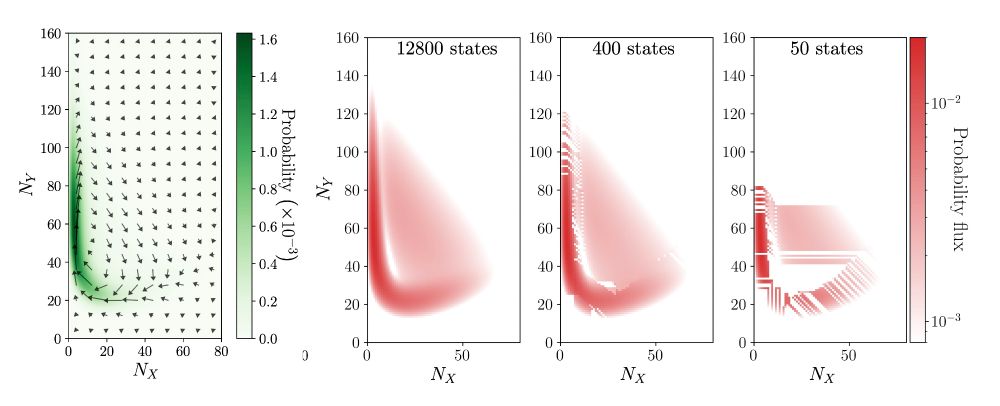

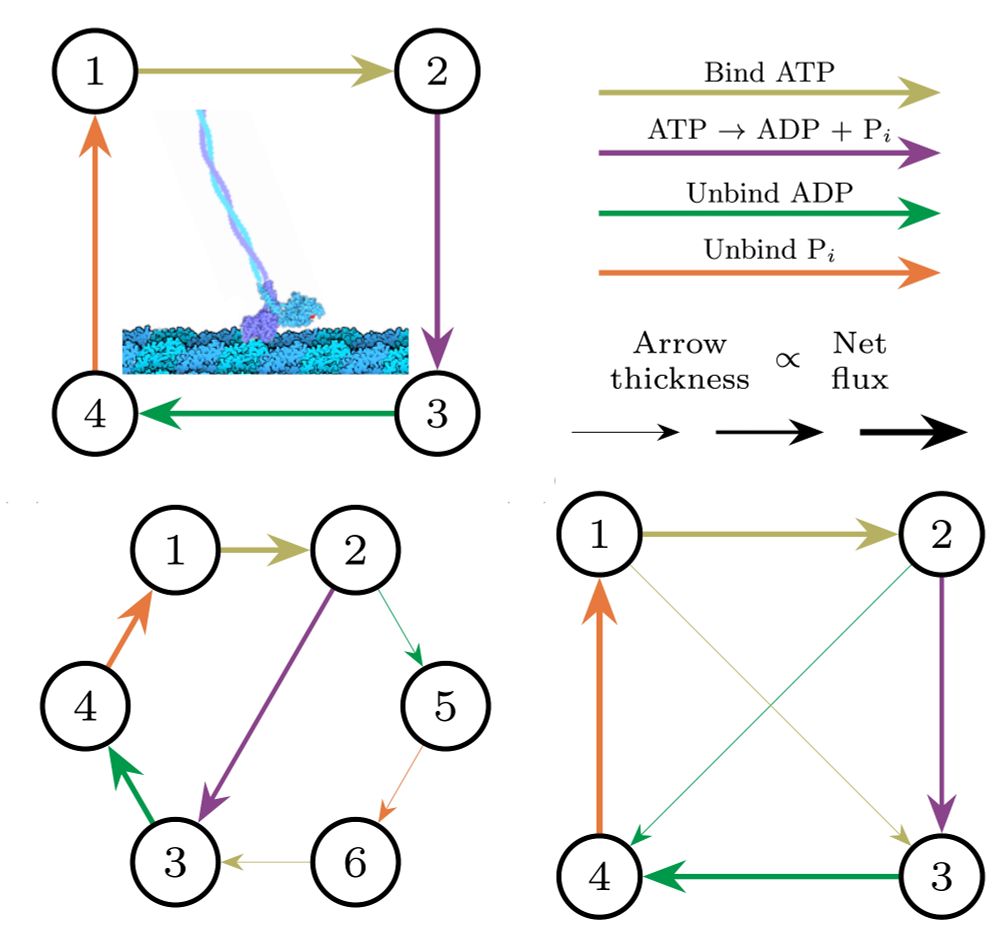

In chemical oscillators, the maximum irreversibility coarse-graining picks out macroscopic loops of flux that dominate the dynamics.

05.06.2025 18:16 — 👍 1 🔁 0 💬 1 📌 0

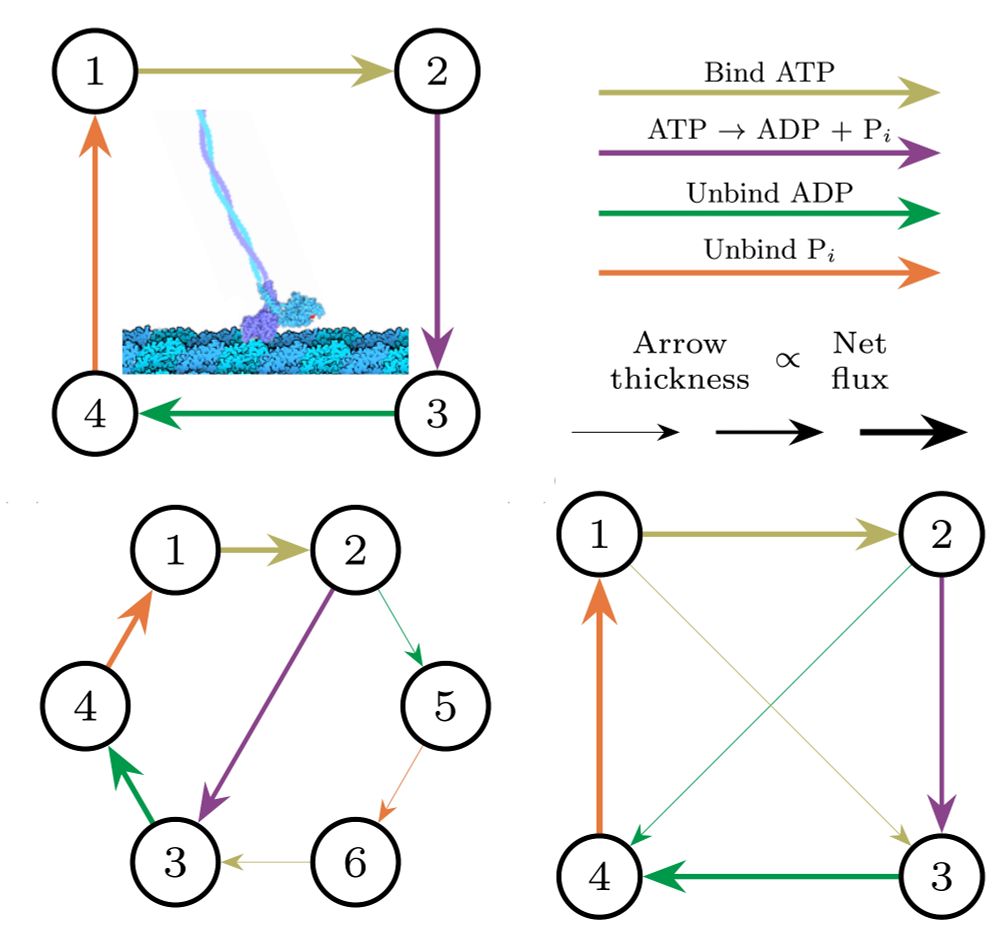

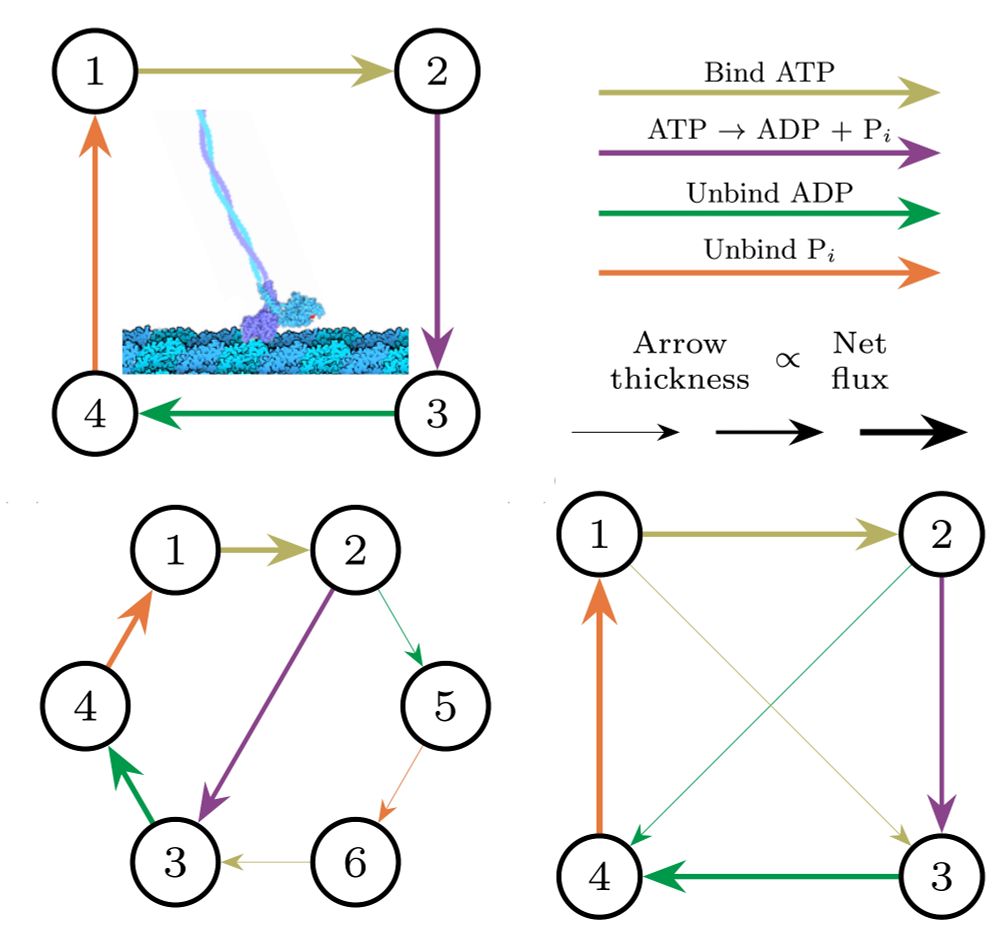

Across a range of living systems, this maximum irreversibility coarse-graining uncovers key biological functions.

For example, in models of kinesin (a motor protein that ships cargo inside your cells), we can derive simplified dynamics without losing any irreversibility.

05.06.2025 18:16 — 👍 1 🔁 0 💬 1 📌 0

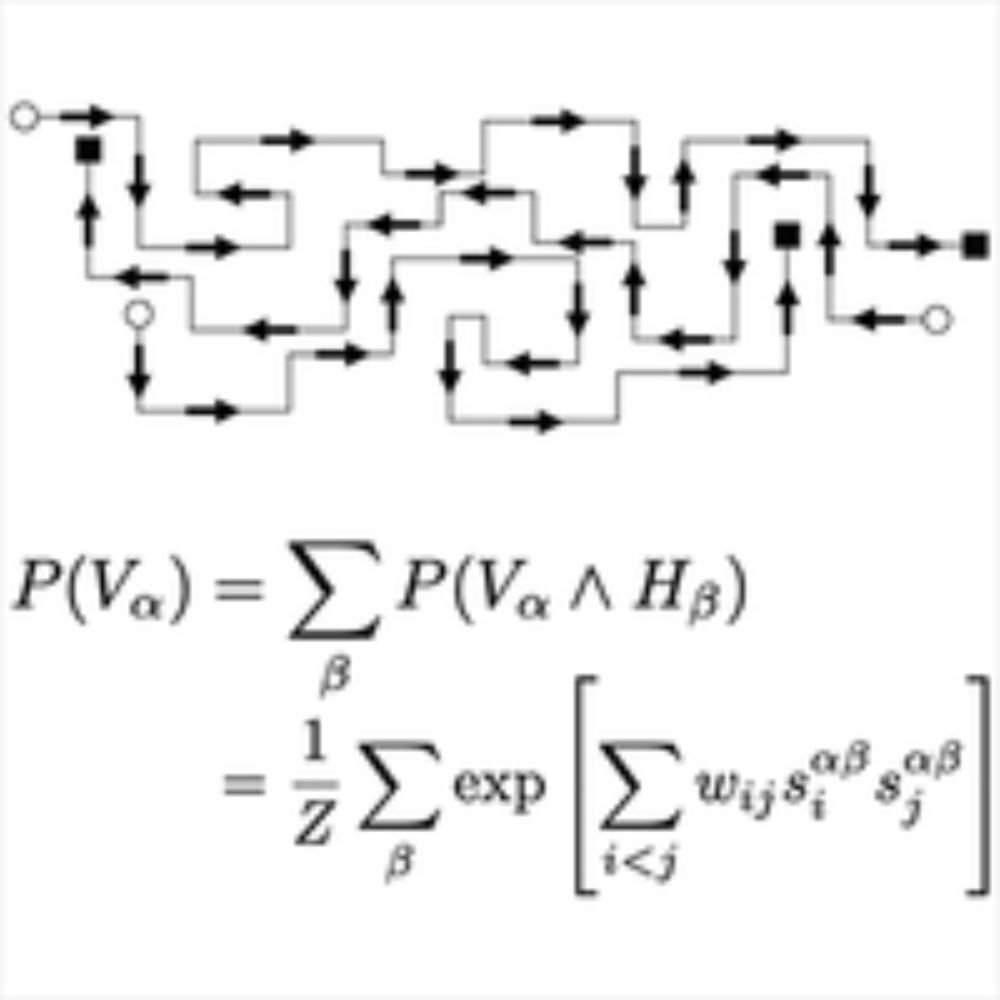

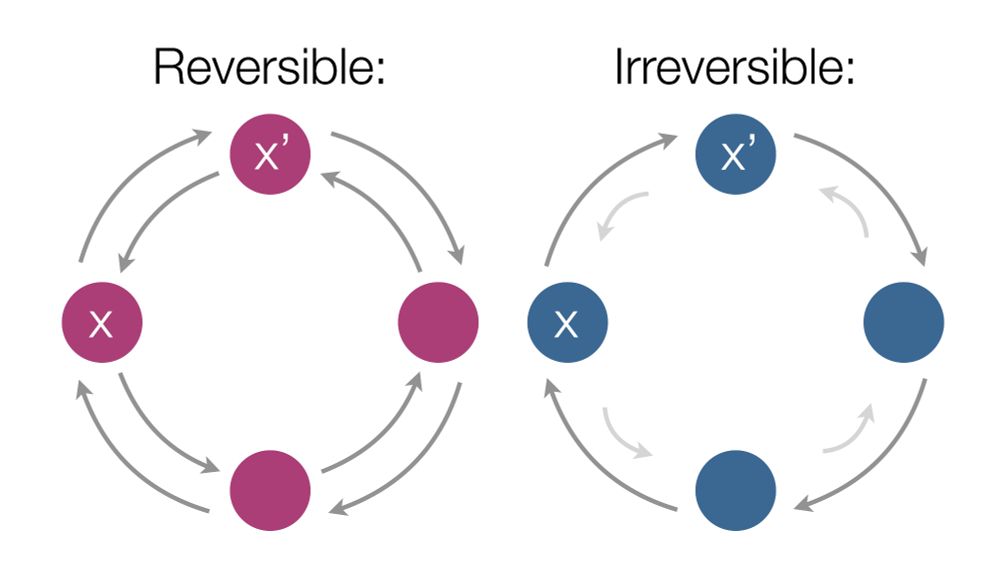

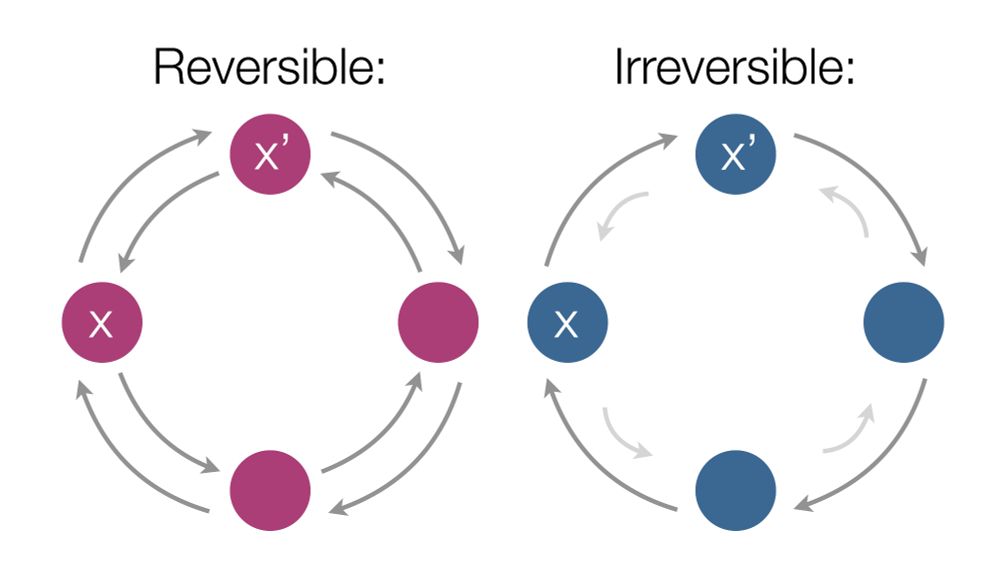

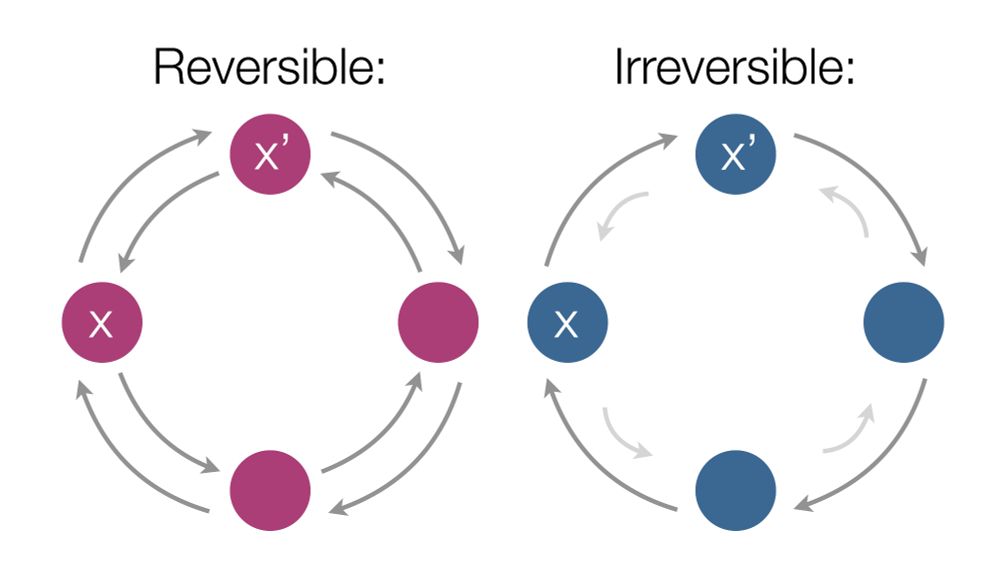

When living systems burn energy, they drive irreversible dynamics and produce entropy.

Under coarse-graining, the apparent irreversibility can only decrease.

This means that -- at every level of description -- there's a unique coarse-graining with maximum irreversibility.

05.06.2025 18:16 — 👍 1 🔁 0 💬 1 📌 0
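The claim that apparent irreversibility can only decrease under coarse-graining can be checked on a toy example. The sketch below is an illustration only, not the method from the preprint: it assumes a hypothetical 4-state biased ring, and it measures irreversibility as the KL divergence between the stationary two-time joint distribution and its time reverse. Lumping states sums entries of that joint distribution, so by the data processing inequality the divergence cannot increase. All function names and the transition probabilities are assumptions made for this example.

```python
import numpy as np

def stationary_distribution(P):
    """Stationary distribution of a row-stochastic matrix P (left eigenvector for eigenvalue 1)."""
    eigvals, eigvecs = np.linalg.eig(P.T)
    pi = np.real(eigvecs[:, np.argmax(np.real(eigvals))])
    return pi / pi.sum()

def pair_irreversibility(J):
    """KL divergence (in nats) between J[i, j] = P(x_t = i, x_{t+1} = j) and its time reverse J[j, i]."""
    mask = J > 0
    return float(np.sum(J[mask] * np.log(J[mask] / J.T[mask])))

def coarse_grain(J, labels, n_clusters):
    """Lump microstates into clusters by summing the joint probabilities within each cluster pair."""
    M = np.zeros((J.shape[0], n_clusters))
    M[np.arange(J.shape[0]), labels] = 1.0
    return M.T @ J @ M

# Hypothetical toy model: a 4-state ring biased clockwise (0.6) over counterclockwise (0.3).
n = 4
P = np.zeros((n, n))
for i in range(n):
    P[i, (i + 1) % n] = 0.6
    P[i, (i - 1) % n] = 0.3
    P[i, i] = 0.1

pi = stationary_distribution(P)
J = pi[:, None] * P  # stationary joint distribution over consecutive states

fine = pair_irreversibility(J)
three = pair_irreversibility(coarse_grain(J, np.array([0, 1, 2, 2]), 3))
two = pair_irreversibility(coarse_grain(J, np.array([0, 0, 1, 1]), 2))

print(f"4 states: {fine:.4f}  3 clusters: {three:.4f}  2 clusters: {two:.4f}")
```

With three clusters the loop of flux survives and some irreversibility is retained. With two clusters the stationary fluxes between the clusters must balance, so this pairwise measure drops to zero. Which partition retains the most irreversibility is the question the thread addresses; this sketch only shows the monotonic loss.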

Biology consumes energy at the microscale to power functions across all scales, from proteins and cells to entire populations of animals.

Led by @qiweiyu.bsky.social and @mleighton.bsky.social, we study how coarse-graining can help to bridge this gap 👇🧵

arxiv.org/abs/2506.01909

05.06.2025 18:16 — 👍 6 🔁 2 💬 1 📌 2

Science is built on public trust, federal funding, and academic freedom. All of these are currently being eroded, but what can we do?

Join us for an online panel on "Being a Voice for Science" (June 11, 2-3pm ET).

Info and registration: engage.aps.org/dbio/resourc...

02.06.2025 17:43 — 👍 1 🔁 2 💬 1 📌 1

Apply or nominate for DBIO awards by June 2! Awards include:

APS Fellowship

Max Delbrück Prize in Biological Physics

Award for Outstanding Doctoral Thesis Research in Biological Physics

16.05.2025 19:24 — 👍 2 🔁 1 💬 1 📌 0

Huge shoutout to my student David Carcamo for leading this paper, and Nick Weaver and Purushottam Dixit for invaluable contributions! ❤️

16.05.2025 17:14 — 👍 0 🔁 0 💬 0 📌 0

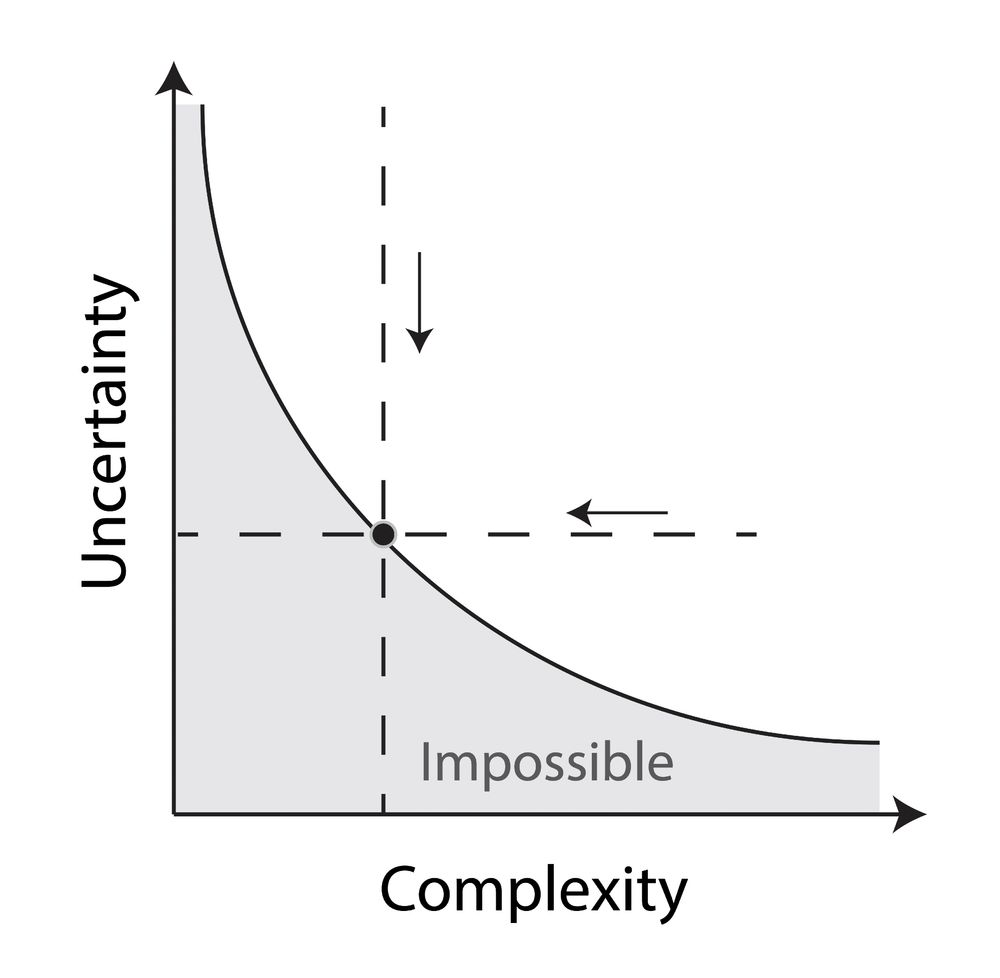

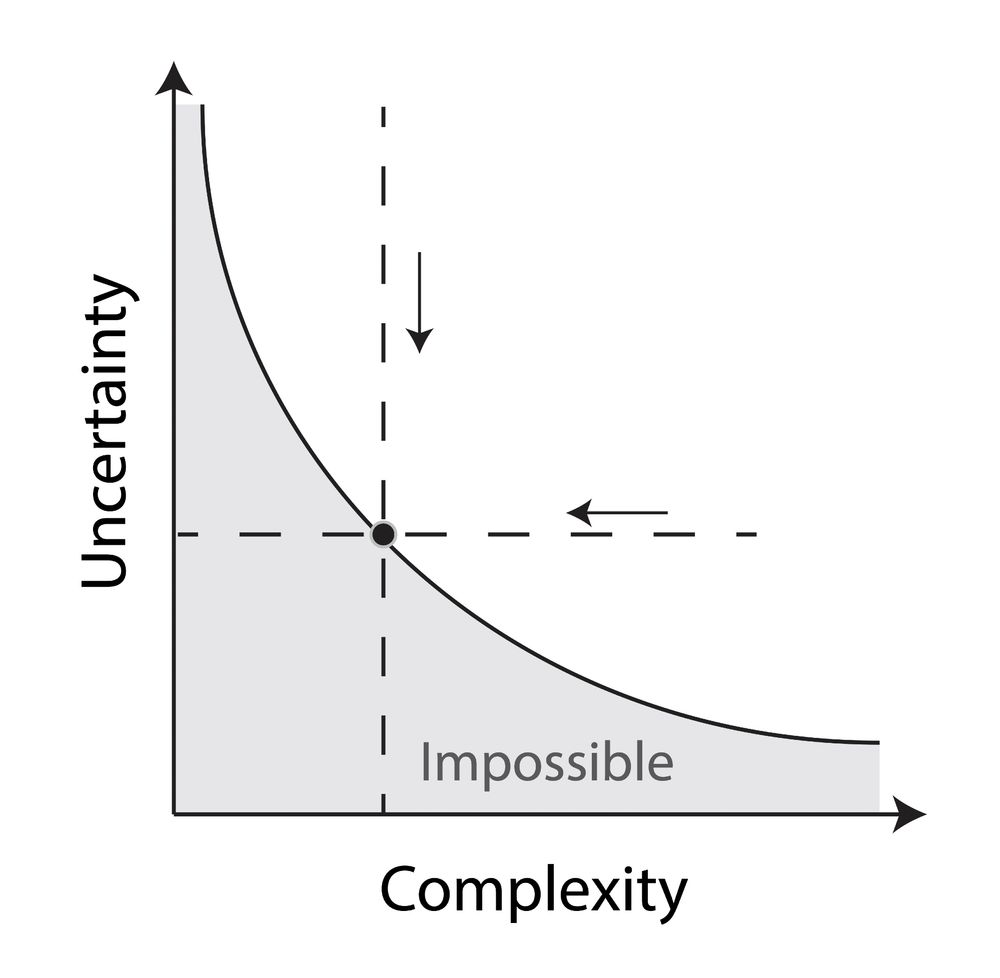

...but these applications are limited by our ability to solve difficult statistical physics problems! We thus need new creative methods in order to construct optimal compressions of complex systems.

16.05.2025 17:14 — 👍 0 🔁 0 💬 1 📌 0

We review emerging applications, which range from neuroscience and biology to machine learning and engineering...

16.05.2025 17:14 — 👍 0 🔁 0 💬 1 📌 0

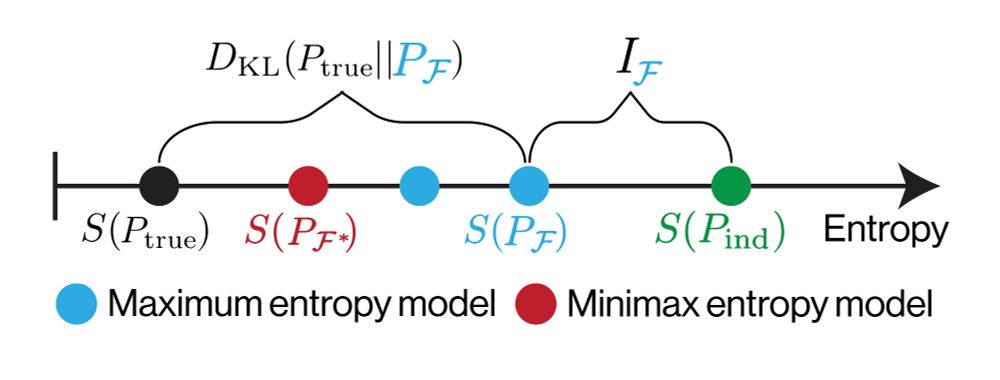

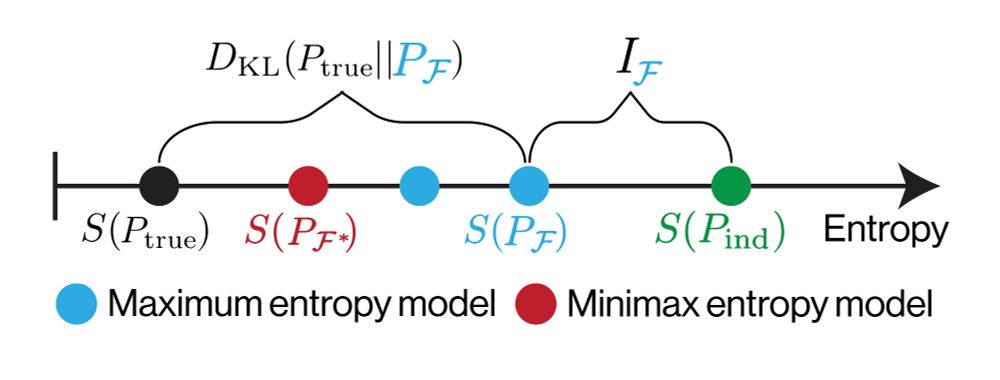

Starting only with MDL, we show that the optimal details provide as much information about the data as possible while remaining maximally random with regard to all unobserved details.

This "minimax entropy" principle was proposed 25 years ago but remains largely unexplored.

16.05.2025 17:14 — 👍 0 🔁 0 💬 1 📌 0

Information theory makes these intuitions concrete: each model gives us an encoding of the data, and the shortest code provides the best compression of the data. This is the minimum description length (MDL) principle.

16.05.2025 17:14 — 👍 1 🔁 0 💬 1 📌 0
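As a rough illustration of the MDL idea in the post above, the sketch below compares two models of a binary sequence by total code length. It is a minimal two-part code under stated assumptions, not the construction from the paper: the data cost is the negative log-likelihood in bits, and the cost of one fitted parameter is taken as the common asymptotic approximation of (1/2) log2(n) bits. The function name and the example data are hypothetical.

```python
import math

def code_length_bits(data, p, param_bits=0.0):
    """Two-part code length: bits to state the model plus bits to encode the data under it."""
    ones = sum(data)
    zeros = len(data) - ones
    return param_bits - ones * math.log2(p) - zeros * math.log2(1.0 - p)

# Hypothetical data: 80 ones and 20 zeros.
data = [1] * 80 + [0] * 20
n = len(data)

# Model A: fair coin, no parameters to transmit.
fair_bits = code_length_bits(data, 0.5)

# Model B: biased coin with one fitted parameter, charged (1/2) * log2(n) bits (an assumption).
p_hat = sum(data) / n
biased_bits = code_length_bits(data, p_hat, param_bits=0.5 * math.log2(n))

best = "biased" if biased_bits < fair_bits else "fair"
print(f"fair: {fair_bits:.1f} bits, biased: {biased_bits:.1f} bits, MDL picks: {best}")
```

For this skewed sequence the extra parameter pays for itself, so the biased model gives the shorter code. For a balanced sequence the fitted parameter would add cost without shortening the data code, and the simpler model would win.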

When constructing models of the world, we aim for good compressions: models that are as accurate as possible with as few details as possible. But which details should we include in a model?

An answer lies in the "minimax entropy" principle 👇

arxiv.org/abs/2505.01607

16.05.2025 17:14 — 👍 1 🔁 0 💬 1 📌 0

What can physics tell us about the brain? - Part 2

Podcast Episode · Simplifying Complexity · 05/12/2025 · 31m

What is the connection between information theory and statistical physics? And how can this connection help us understand the brain?

Final installment with the Simplifying Complexity podcast (@bhcomplexity.bsky.social)

podcasts.apple.com/us/podcast/w...

16.05.2025 14:23 — 👍 2 🔁 0 💬 0 📌 0

What can physics tell us about the brain? - Part 1

Podcast Episode · Simplifying Complexity · 04/28/2025 · 35m

Statistical physics and information theory may seem daunting, but with a little insight they can become intuitive and are actually deeply related.

A fun chat on the Simplifying Complexity podcast (@bhcomplexity.bsky.social)

podcasts.apple.com/us/podcast/w...

28.04.2025 16:54 — 👍 3 🔁 1 💬 0 📌 0
