This, combined with most fields outside of computer science being overly concerned with maintaining cultural and social solidarity (especially against encroaching technology brothers), seems like the most likely explanation.

01.11.2025 20:47 — 👍 1 🔁 0 💬 0 📌 0

Is basic image understanding solved in today’s SOTA VLMs? Not quite.

We present VisualOverload, a VQA benchmark testing simple vision skills (like counting & OCR) in dense scenes. Even the best model (o3) only scores 19.8% on our hardest split.

08.09.2025 15:27 — 👍 16 🔁 5 💬 2 📌 0

Here’s what I’ve been working on for the past year. This is SkyTour, a 3D exterior tour utilizing Gaussian Splat. The UX is in the modeling of the “flight path.” I led the prototyping team that built the first POC. I was the sole designer and researcher on the project, one of the 1st inventors.

16.07.2025 03:43 — 👍 66 🔁 5 💬 5 📌 0

Ah cool, then why is that last bit true?

03.07.2025 23:59 — 👍 0 🔁 0 💬 0 📌 0

I don't see how the last sentence follows logically from the two prior sentences.

03.07.2025 22:52 — 👍 0 🔁 0 💬 1 📌 0

Be sure to do a dedication where you thank a ton of people, it's kind plus it feels good.

Besides that I'd just do a staple job of your papers. Doing new stuff in a thesis is usually a mistake, unless you later submit it as a paper or post it online somewhere. Nobody reads past the dedication.

03.07.2025 22:50 — 👍 3 🔁 0 💬 1 📌 0

This thread rules

29.06.2025 00:44 — 👍 3 🔁 0 💬 0 📌 0

YouTube video by SpAItial AI

SpAItial AI: Building Spatial Foundation Models

🚀🚀🚀Announcing our $13M funding round to build the next generation of AI: 𝐒𝐩𝐚𝐭𝐢𝐚𝐥 𝐅𝐨𝐮𝐧𝐝𝐚𝐭𝐢𝐨𝐧 𝐌𝐨𝐝𝐞𝐥𝐬 that can generate entire 3D environments anchored in space & time. 🚀🚀🚀

Interested? Join our world-class team:

🌍 spaitial.ai

youtu.be/FiGX82RUz8U

27.05.2025 09:26 — 👍 53 🔁 9 💬 4 📌 0

📺 Now available: Watch the recording of Aaron Hertzmann's talk, "Can Computers Create Art?" www.youtube.com/watch?v=40CB...

@uoftartsci.bsky.social

30.04.2025 20:23 — 👍 10 🔁 4 💬 0 📌 0

YouTube video by Jon Barron

Radiance Fields and the Future of Generative Media

Here's a recording of my 3DV keynote from a couple weeks ago. If you're already familiar with my research, I recommend skipping to ~22 minutes in where I get to the fun stuff (whether or not 3D has been bitter-lesson'ed by video generation models)

www.youtube.com/watch?v=hFlF...

28.04.2025 20:52 — 👍 61 🔁 12 💬 2 📌 1

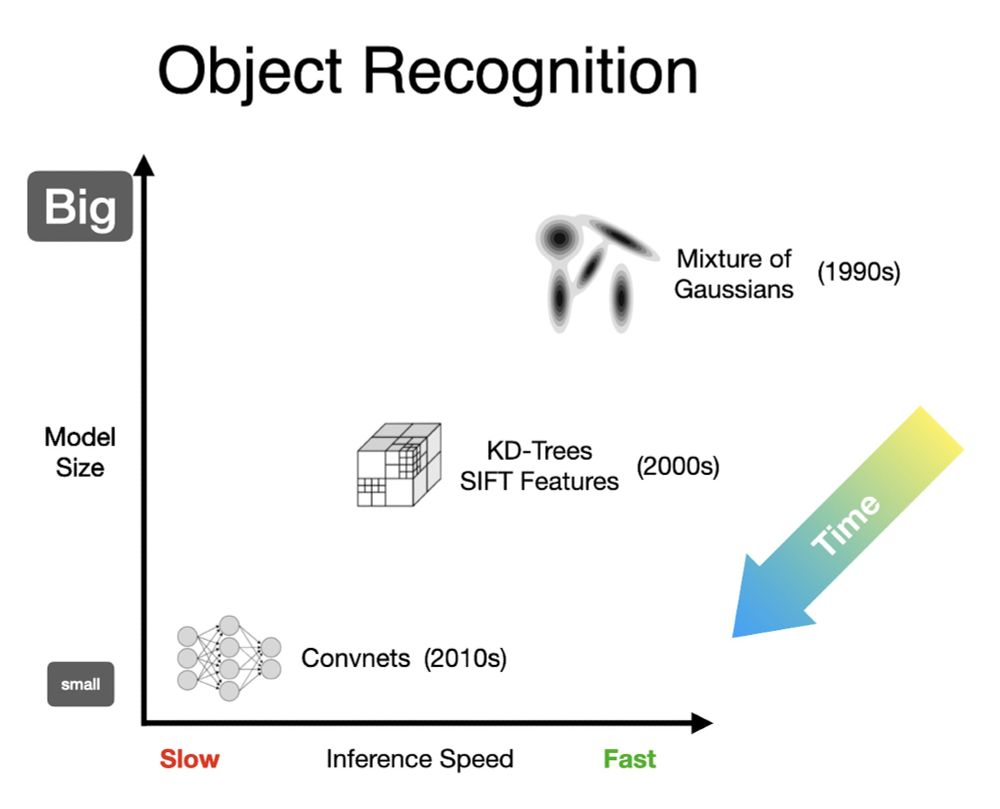

yeah those fisher kernel models were surprisingly gnarly towards the end of their run.

09.04.2025 19:42 — 👍 1 🔁 0 💬 0 📌 0

yep absolutely. Super hard to do, but absolutely the best approach if it works.

08.04.2025 17:50 — 👍 4 🔁 0 💬 0 📌 0

If you want, you can see the models that AlexNet beat in the 2012 ImageNet competition. They were quite huge; here's one: www.image-net.org/static_files.... But I think the better thought experiment is to imagine how large a shallow model would have to be to match AlexNet's capacity (very very huge).

08.04.2025 17:48 — 👍 2 🔁 0 💬 1 📌 0

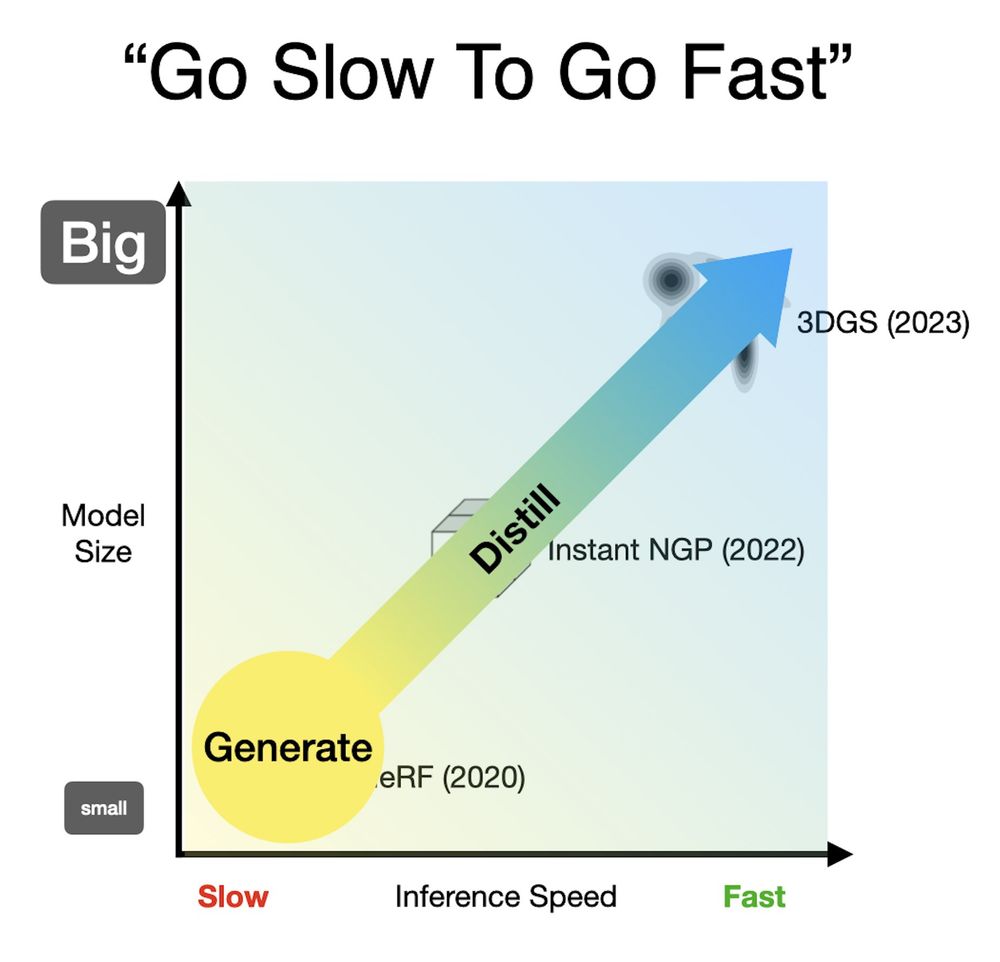

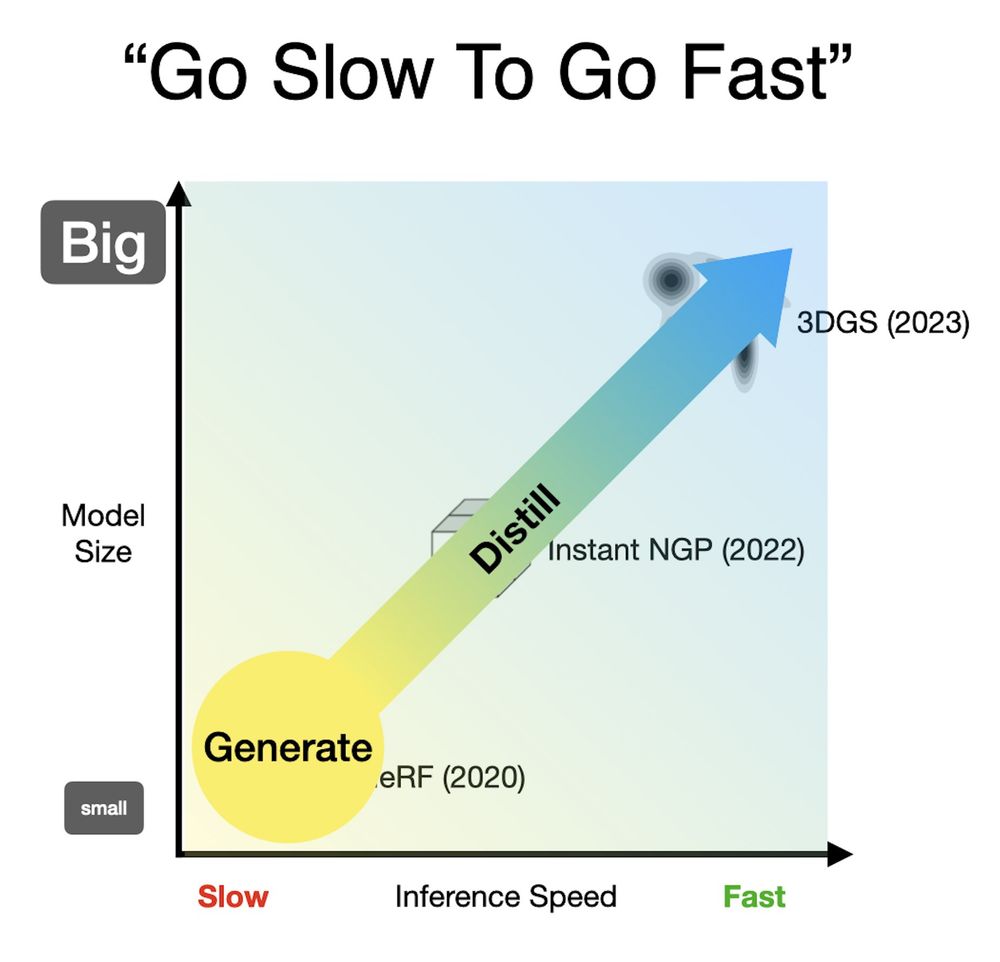

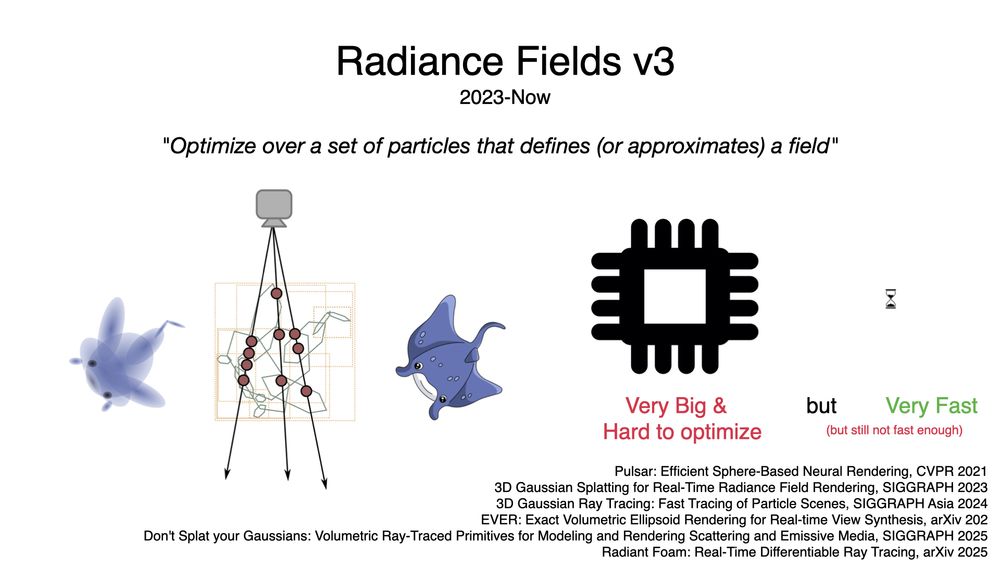

One pattern I like (used in DreamFusion and CAT3D) is to "go slow to go fast" --- generate something small and slow to harness all that AI goodness, and then bake that 3D generation into something that renders fast. Moving along this speed/size continuum is a powerful tool.

08.04.2025 17:25 — 👍 7 🔁 0 💬 1 📌 0

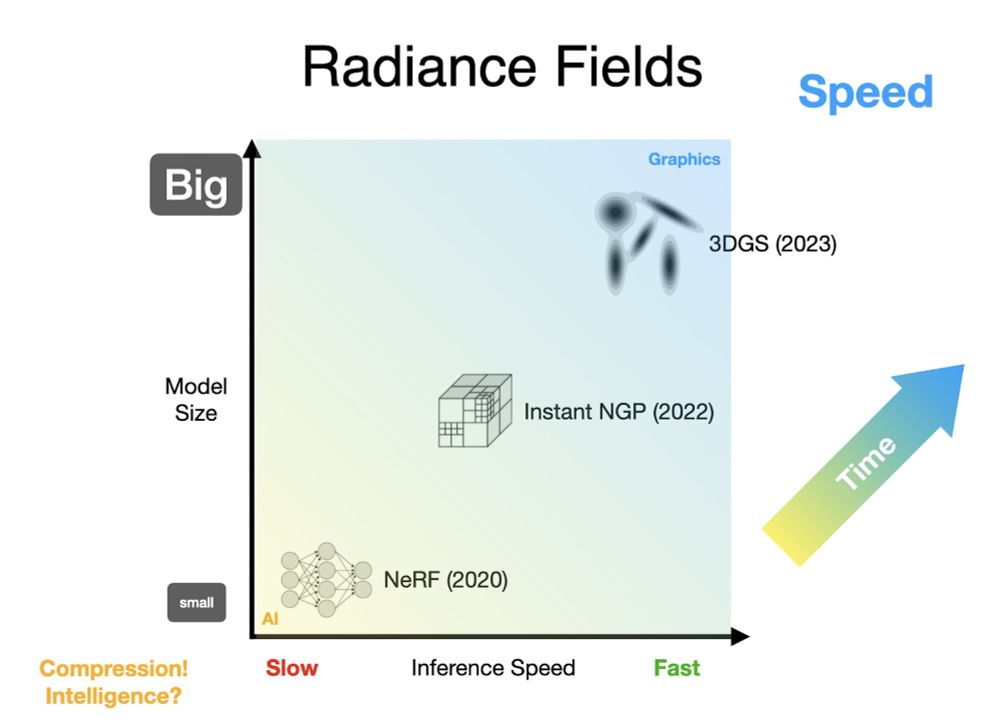

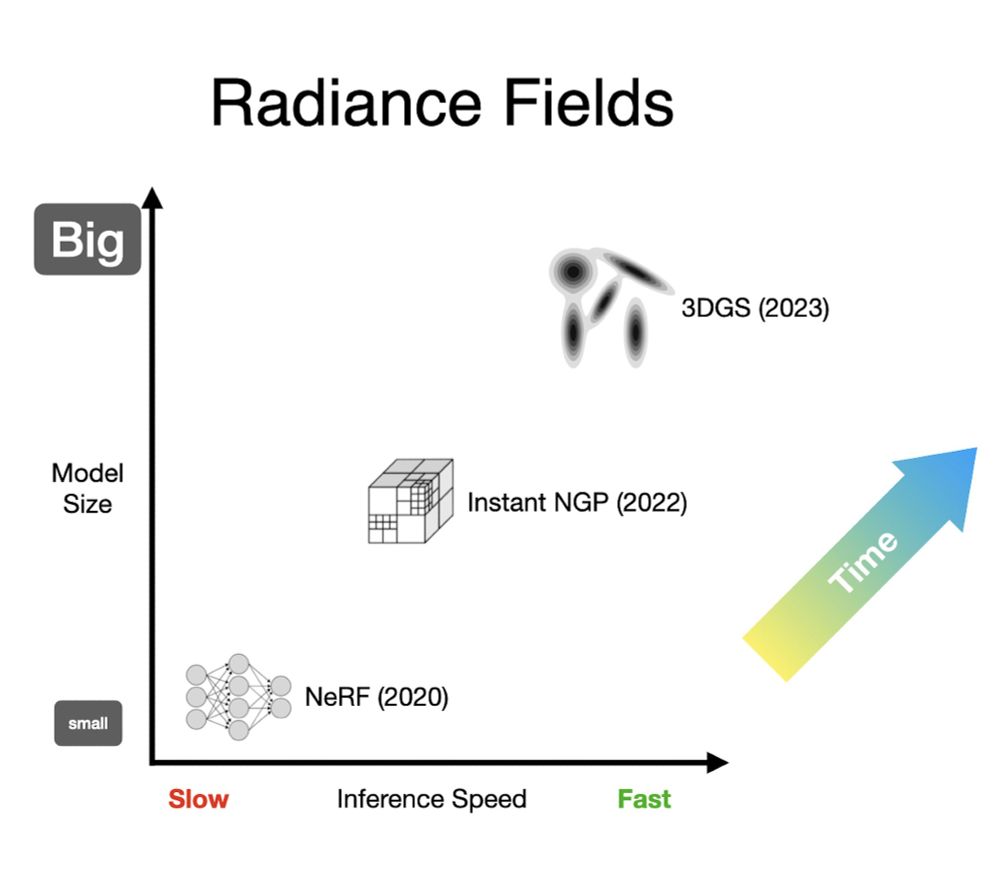

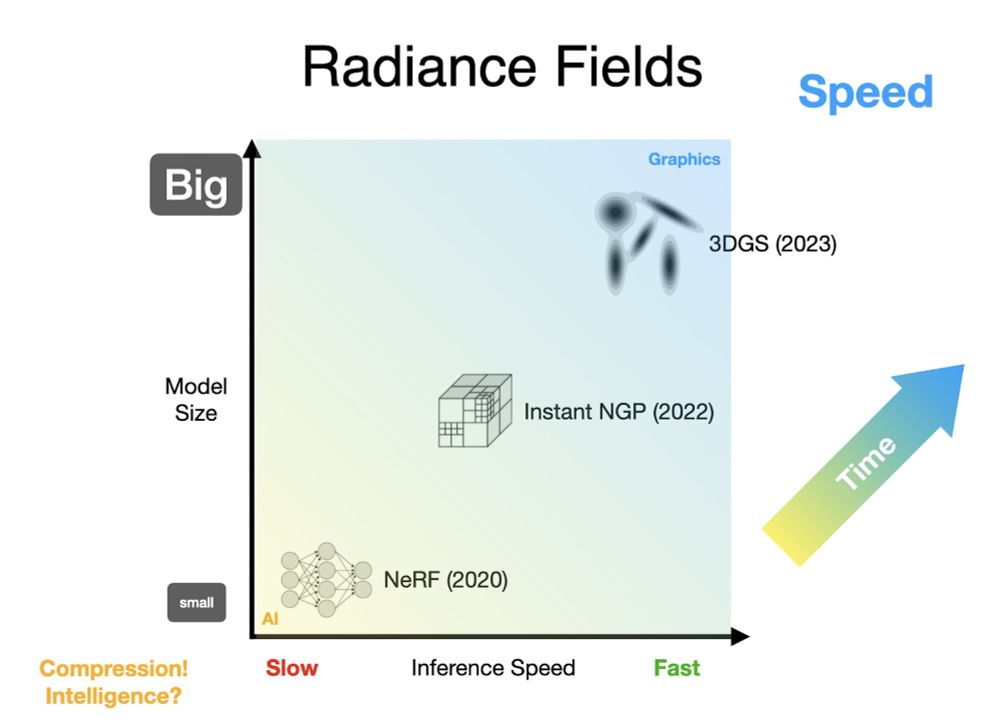

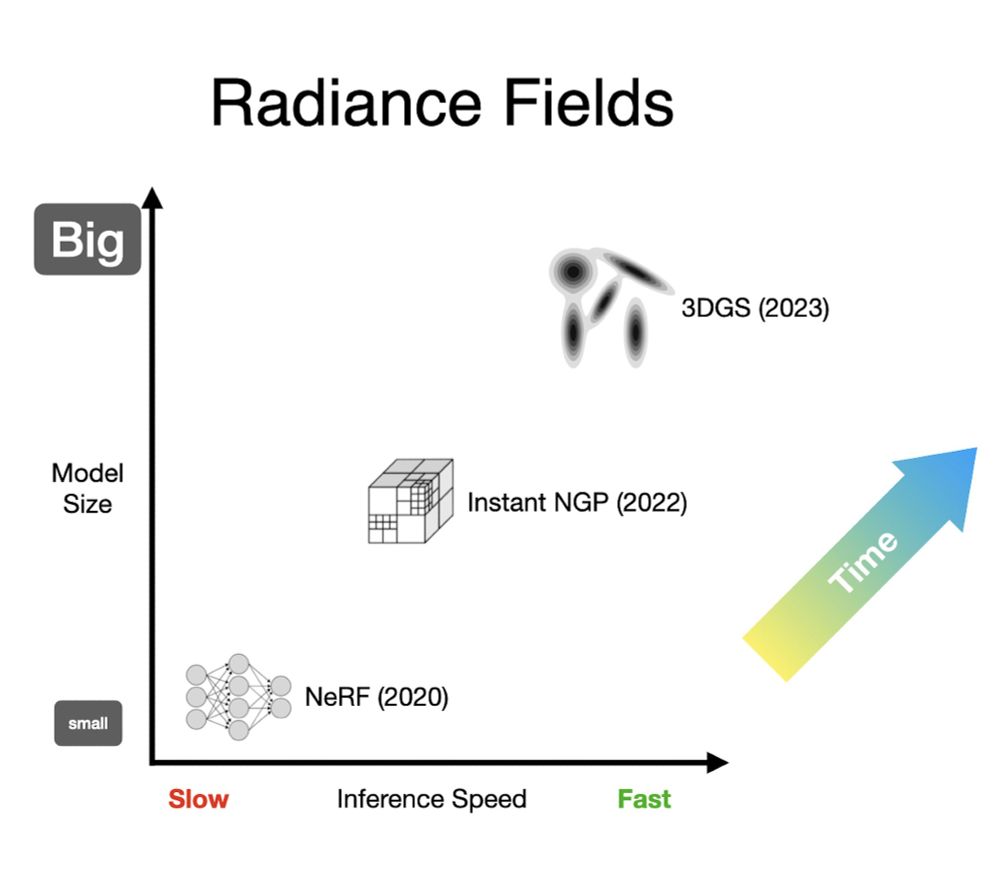

It makes sense that radiance fields trended towards speed --- real-time performance is paramount in 3D graphics. But what we've seen in AI suggests that magical things can happen if you forgo speed and embrace compression. What else is in that lower left corner of this graph?

08.04.2025 17:25 — 👍 4 🔁 0 💬 2 📌 0

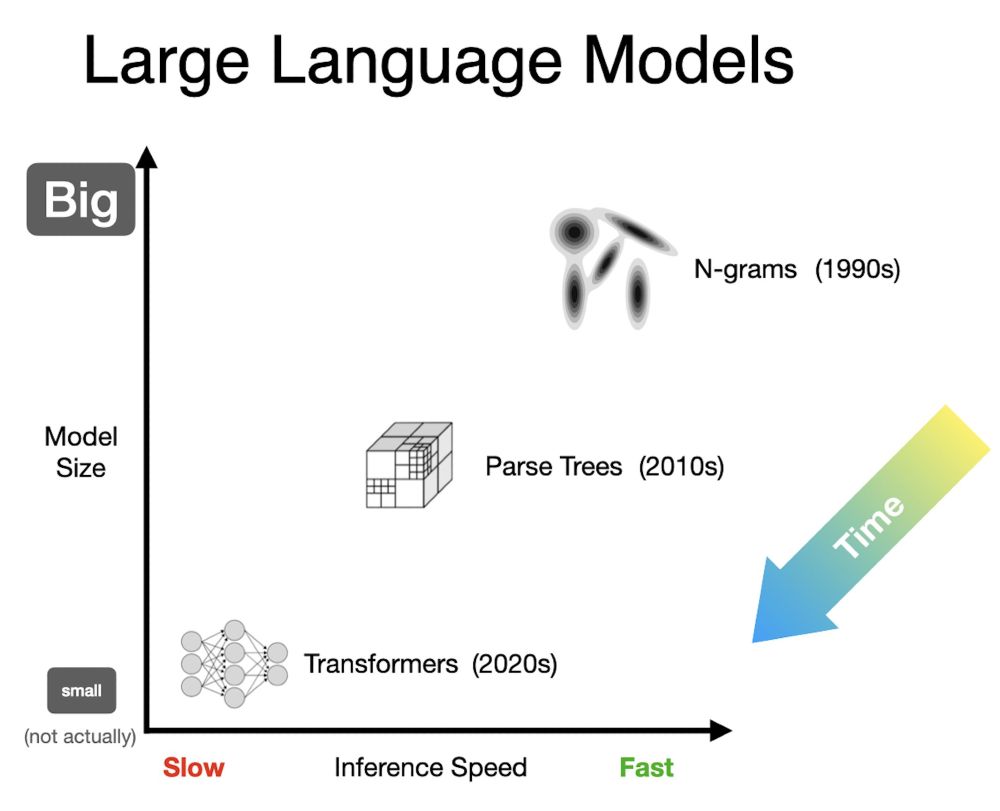

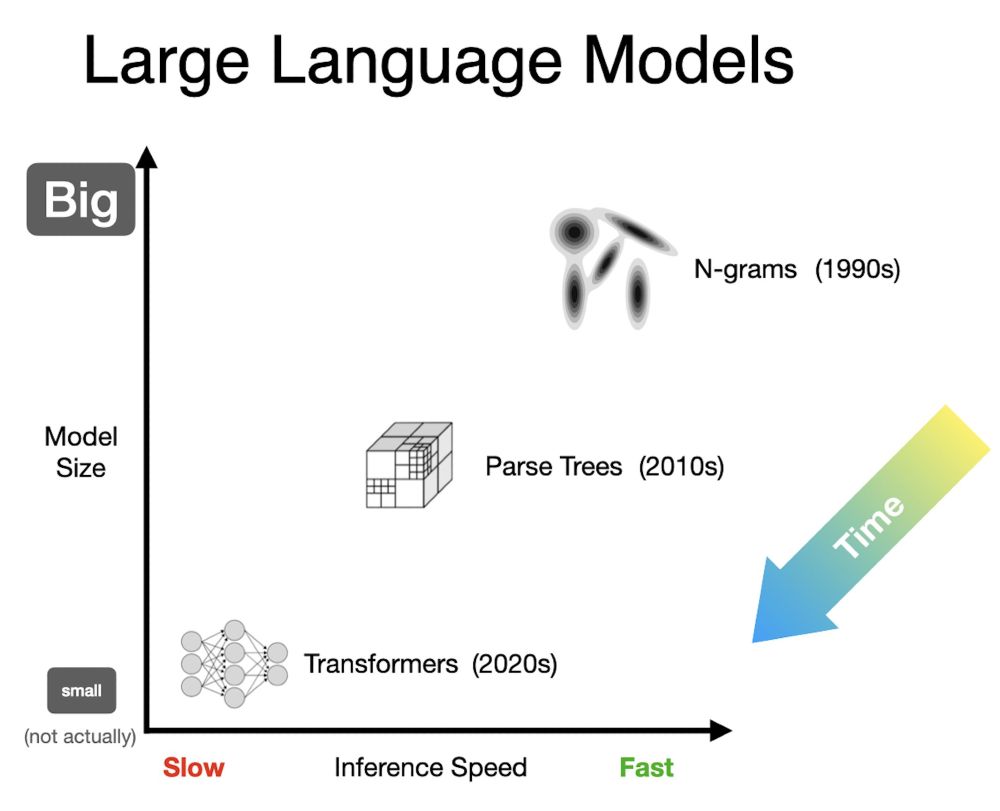

And this gets a bit hand-wavy, but NLP also started with shallow+fast+big n-gram models, then moved to parse trees etc, and then on to transformers. And yes, I know, transformers aren't actually small, but they are insanely compressed! "Compression is intelligence", as they say.

08.04.2025 17:25 — 👍 3 🔁 0 💬 1 📌 0

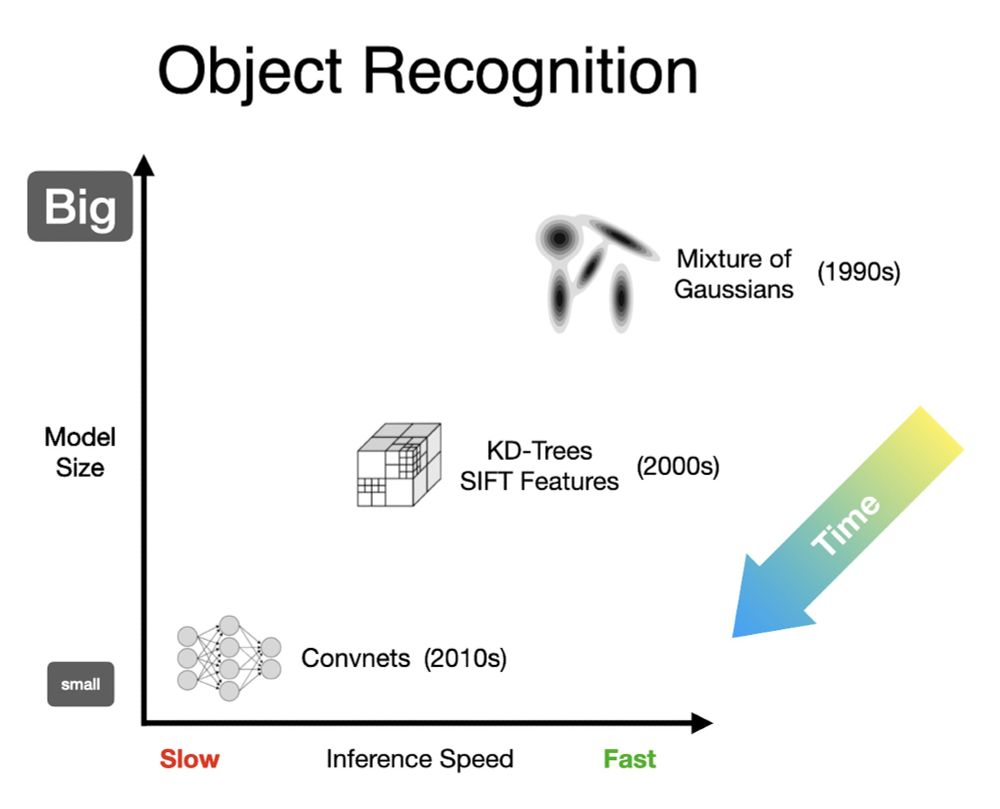

In fact, it's the *opposite* of what we saw in object recognition. There we started with shallow+fast+big models like mixtures of Gaussians on color, then moved to more compact and hierarchical models using trees and features, and finally to highly compressed CNNs and ViTs.

08.04.2025 17:25 — 👍 4 🔁 0 💬 2 📌 0

Let's plot the trajectory of these three generations, with speed on the x-axis and model size on the y-axis. Over time, we've been steadily moving to bigger and faster models, up and to the right. This is sensible, but it's not the trend that other AI fields have been on...

08.04.2025 17:25 — 👍 5 🔁 0 💬 1 📌 0

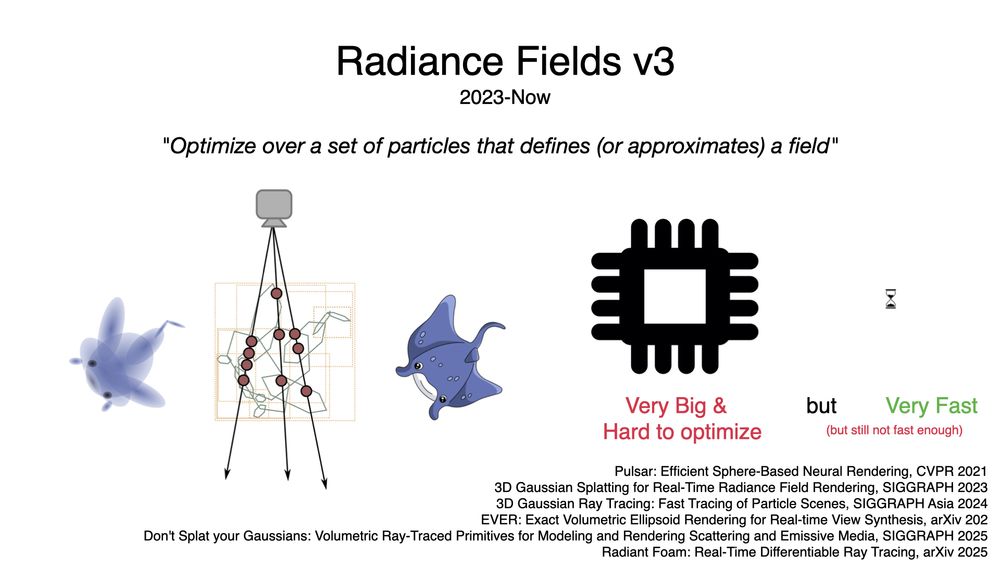

Generation three swapped out those voxel grids for a bag of particles, with 3DGS getting the most adoption (shout out to 2021's pulsar though). These models are larger than grids, and can be tricky to optimize, but the upside for rendering speed is so huge that it's worth it.

08.04.2025 17:25 — 👍 7 🔁 0 💬 1 📌 0

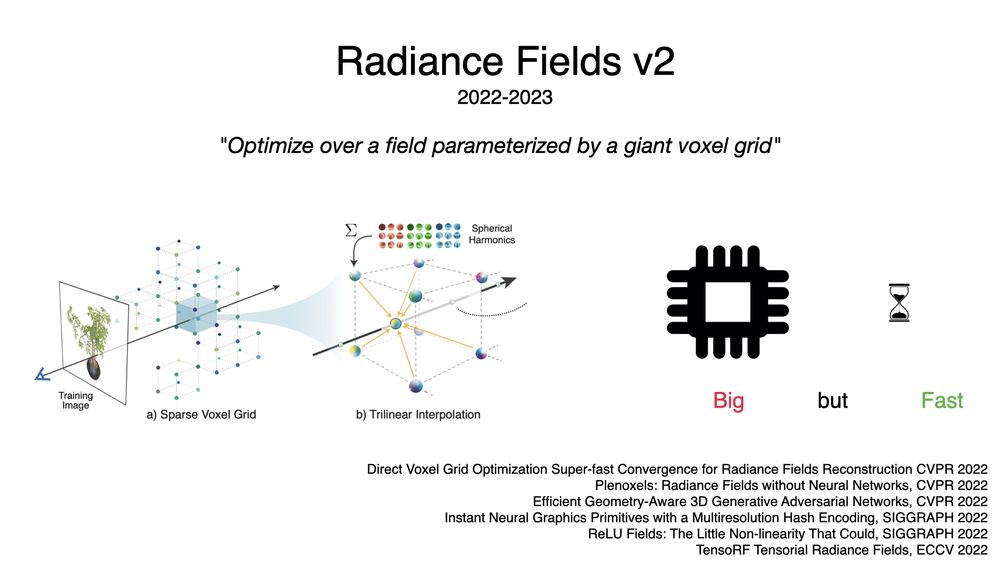

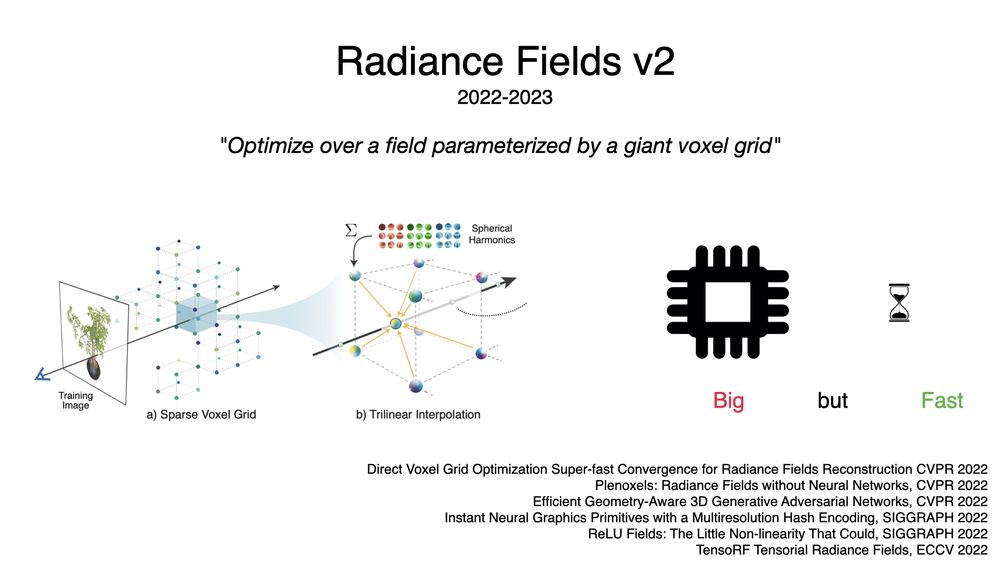

The second generation was all about swapping out MLPs for a giant voxel grid of some kind, usually with some hierarchy/aliasing (NGP) or low-rank (TensoRF) trick for dealing with OOMs. These grids are much bigger than MLPs, but they're easy to train and fast to render.

08.04.2025 17:25 — 👍 4 🔁 0 💬 1 📌 0
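
To make the size argument in the post above concrete, here is a rough parameter-count sketch. It compares a dense voxel grid against a generic CP-style low-rank factorization (one 1D factor per axis per rank component, plus a small channel matrix). This is an illustrative assumption, not the exact decomposition TensoRF uses, and the function names and resolutions are hypothetical.

```python
def dense_grid_params(n, channels):
    """Parameters in a dense n x n x n voxel grid with `channels` values per voxel."""
    return n ** 3 * channels


def cp_lowrank_params(n, rank, channels):
    """Parameters in a CP-style factorization of the same grid (illustrative only).

    Each of the `rank` components stores three length-n vectors (one per axis)
    and one length-`channels` vector that maps the component to output channels.
    """
    return rank * (3 * n + channels)


if __name__ == "__main__":
    n, channels, rank = 256, 4, 16  # hypothetical sizes chosen for illustration
    print("dense grid:", dense_grid_params(n, channels))
    print("low-rank  :", cp_lowrank_params(n, rank, channels))
```

The dense count grows cubically with resolution while the factorized count grows linearly, which is why a low-rank trick can help when a grid does not fit in memory.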

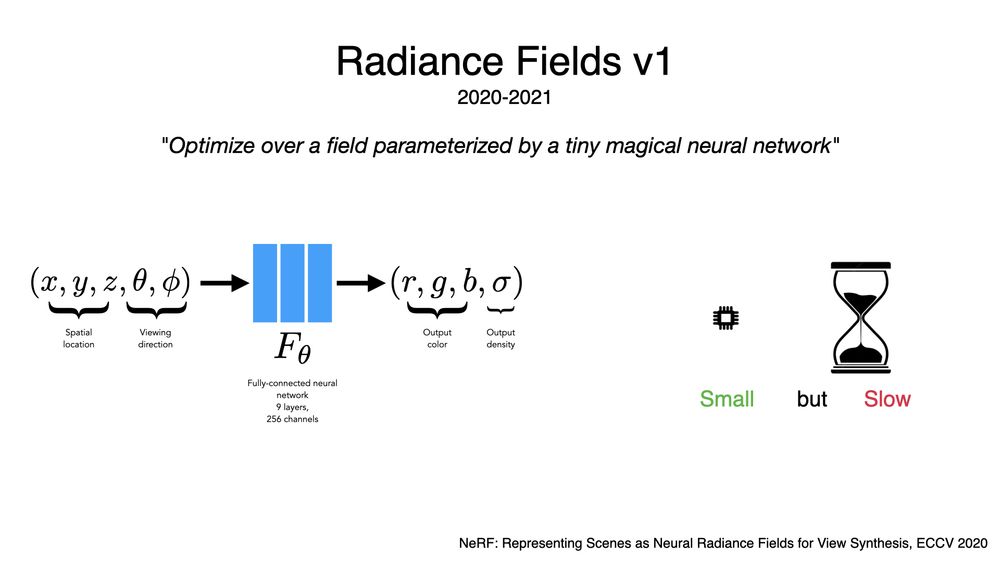

A thread of thoughts on radiance fields, from my keynote at 3DV:

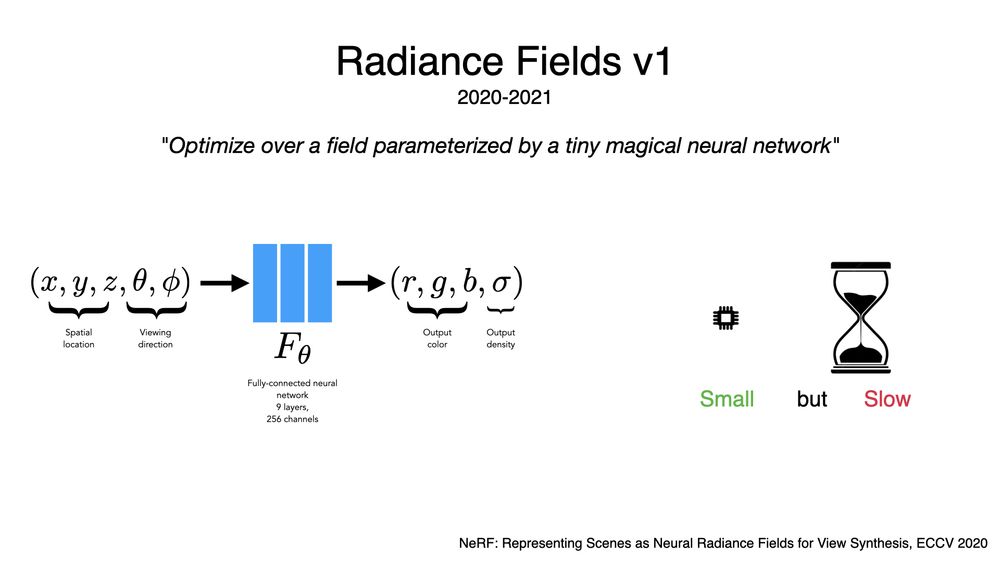

Radiance fields have had 3 distinct generations. First was NeRF: just posenc and a tiny MLP. This was slow to train but worked really well, and it was unusually compressed --- the NeRF was smaller than the images.

08.04.2025 17:25 — 👍 93 🔁 21 💬 2 📌 1
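
For readers unfamiliar with "posenc" in the post above, here is a minimal pure-Python sketch of the sinusoidal positional encoding described in the original NeRF paper: each input coordinate is mapped to sines and cosines at frequencies 2^k · π. The function name, the output ordering, and the choice of 10 frequencies in the usage example are assumptions made for illustration, and real implementations are vectorized.

```python
import math


def posenc(x, num_freqs):
    """Sinusoidal positional encoding of a list of coordinates.

    For each frequency index k in [0, num_freqs), appends sin(2^k * pi * v)
    for every coordinate v, then cos(2^k * pi * v) for every coordinate v.
    Output length is 2 * num_freqs * len(x).
    """
    out = []
    for k in range(num_freqs):
        freq = (2.0 ** k) * math.pi
        out.extend(math.sin(freq * v) for v in x)
        out.extend(math.cos(freq * v) for v in x)
    return out


if __name__ == "__main__":
    point = [0.1, 0.2, 0.3]          # a hypothetical 3D position
    features = posenc(point, 10)     # 10 frequencies is an illustrative choice
    print(len(features))
```

The encoded vector is what would be fed to the small MLP, which lets a tiny network represent high-frequency detail.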

Here's Bolt3D: fast feed-forward 3D generation from one or many input images. Diffusion means that generated scenes contain lots of interesting structure in unobserved regions. ~6 seconds to generate, renders in real time.

Project page: szymanowiczs.github.io/bolt3d

Arxiv: arxiv.org/abs/2503.14445

19.03.2025 18:37 — 👍 35 🔁 6 💬 1 📌 2

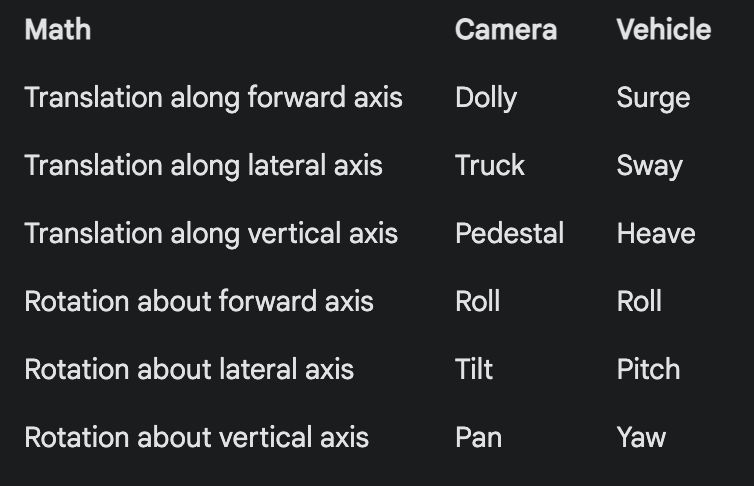

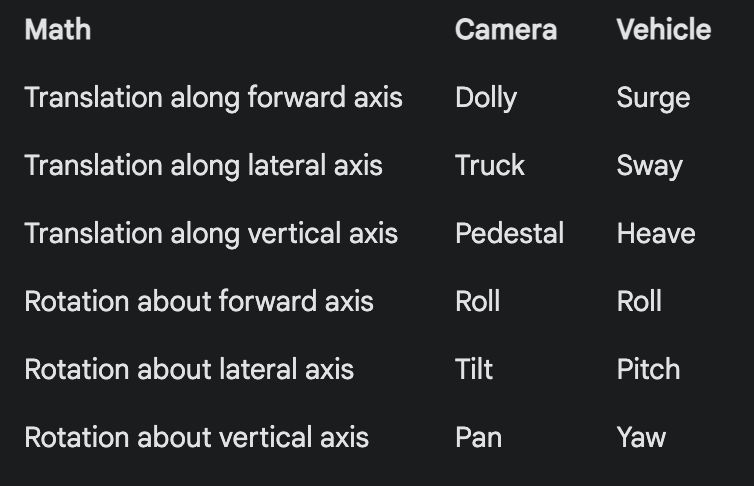

I made this handy cheat sheet for the jargon that 6DOF math maps to for cameras and vehicles. Worth learning if you, like me, are worried about embarrassing yourself in front of a cinematographer or naval admiral.

19.03.2025 16:50 — 👍 33 🔁 5 💬 0 📌 0

It's certainly a shocking result, but I think concluding that "Sora learned 3D consistency" isn't a totally well-founded claim. We have no real idea what any models learn under the hood, and it should be possible for models to produce plausible videos without actually doing anything "in 3D".

11.03.2025 16:41 — 👍 4 🔁 0 💬 0 📌 0

But if there's an actual physical conference with talks and posters, there would need to be an actual cutoff date for submissions to be presented at this year's conference. Wouldn't that become the de facto deadline, even in a theoretically rolling system?

03.03.2025 14:49 — 👍 2 🔁 0 💬 1 📌 0

Renegade was so underrated

28.02.2025 16:39 — 👍 0 🔁 0 💬 1 📌 0

Good tweet! Hadn't seen it, wasn't subtweeting it

27.02.2025 06:57 — 👍 4 🔁 0 💬 0 📌 0

(no, sorry, what post? Can you link me?)

27.02.2025 06:38 — 👍 0 🔁 0 💬 1 📌 0

Fighting every day to deliver a city that working New Yorkers can actually afford. Mayor of New York City.

Staff writer for the Atlantic. Messages with links are intended as prompts to read the linked story, not self-contained arguments substituting for the linked story.

Anti-cynic. Towards a weirder future. Reinforcement Learning, Autonomous Vehicles, transportation systems, the works. Asst. Prof at NYU

https://emerge-lab.github.io

https://www.admonymous.co/eugenevinitsky

Punk news coming your way

Go read the full articles: www.thehardtimes.net

We celebrate retro gaming culture. 🕹️ Retro gaming news, reviews, features and guides. 🦤 100% Independent.

//30s

// 🏳️⚧️ she/they

//Emmy Nominated Editor: Insert Credit, Polygon, The Games Press, DC Action News, Topic Lords

// ourbroadcastday.com

// full-cyborg catgirl witch

CEO of Bluesky, steward of AT Protocol.

dec/acc 🌱 🪴 🌳

Senior Correspondent at Vox covering the crisis of global democracy. Author of The Reactionary Spirit, a book on that topic, and a '25-'26 distinguished visiting fellow at the University of Pennsylvania's Perry World House.

San Diego Dec 2-7, 25 and Mexico City Nov 30-Dec 5, 25. Comments to this account are not monitored. Please send feedback to townhall@neurips.cc.

host and founder, Post Games

co-host, The Besties

https://www.patreon.com/c/PostGames

https://postgame.substack.com/

https://podcasts.apple.com/us/podcast/post-games/id1815131711

Signal: plante.01

Simulation and rendering nerd. Co-founder and CTO @JangaFX

Working on EmberGen and more.

Discord: vassvik @vassvik@mastodon.gamedev.place

Christian, husband, dad. Vice President of the United States.

jdvance.com

it’s me

https://youtube.com/@any_austin

New Book out this summer: Sawyer Lee and the Quest to Just Stay Home.

Other things of mine: Bea Wolf, A City on Mars, and SMBC

Website: www.smbc-comics.com

Patreon: https://www.patreon.com/ZachWeinersmith?ty=h

Basketball (and podcasts and games) are my favorite sport, I like the way they dribble up and down the court. Best GM in Podcasting

Research Scientist@Google DeepMind

Assoc Prof@York University, Toronto

mbrubake.github.io

Research: Computer Vision and Machine Learning, esp generative models.

Applications: CryoEM (cryoSPARC), Statistics (Stan), Forensics, and more

Official account for the IEEE/CVF International Conference on Computer Vision. #ICCV2025 Honolulu 🇺🇸 Co-hosted by @natanielruiz @antoninofurnari @yaelvinker @CSProfKGD

ROMchip: A Journal of Game Histories // www.romchip.org

GET OUR NEWSLETTER: http://eepurl.com/crCul1

Editors:

@lainenooney.bsky.social

@daveparisi.bsky.social

@liebenwalde.bsky.social

Soraya Murray

star of snl / grimlin 3: dawn of desmond / shrek 5 / rightful owner of muppet ip / singer as well

Lecturer in Maths & Stats at Bristol. Interested in probabilistic + numerical computation, statistical modelling + inference. (he / him).

Homepage: https://sites.google.com/view/sp-monte-carlo

Seminar: https://sites.google.com/view/monte-carlo-semina