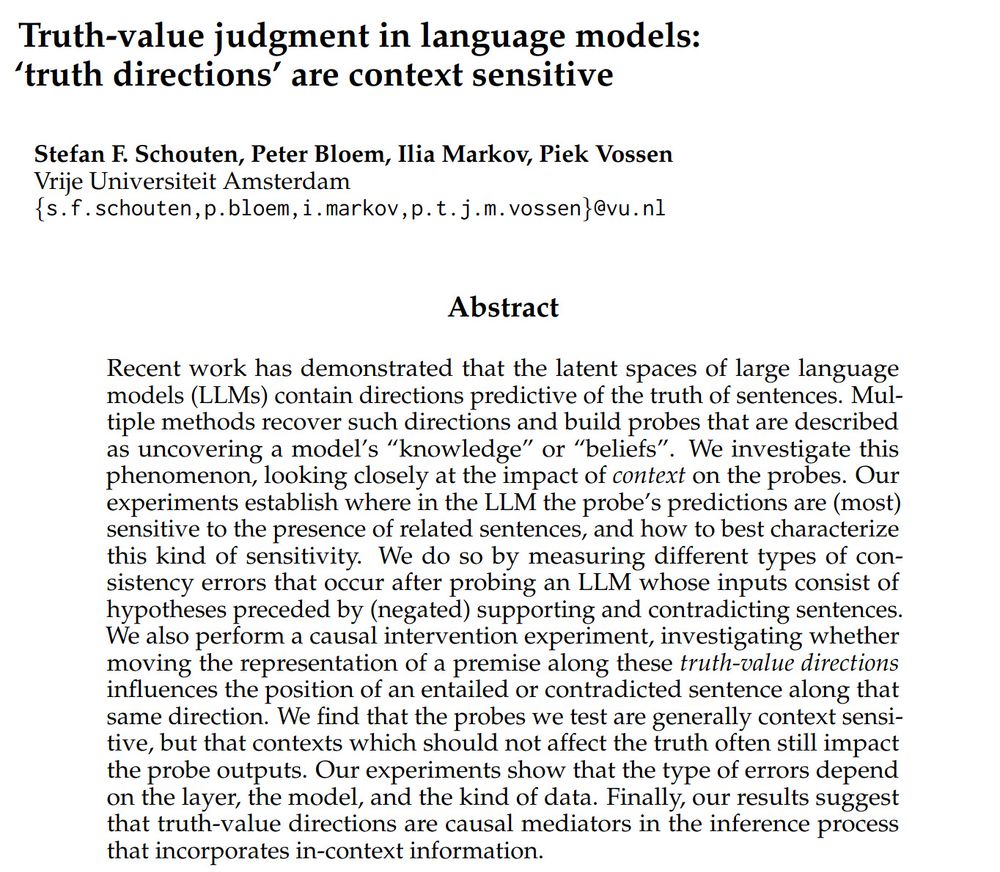

📢 Our paper on 'Truth-value Judgment in LLMs' was accepted to @colmweb.org #COLM2025!

In this paper, we investigate how LLMs keep track of the truth of sentences when reasoning.

@stefanfs.me.bsky.social

PhD candidate, CLTL, VU Amsterdam. Prev. Research Intern, Huawei. stefanfs.me

📄 Paper: arxiv.org/abs/2404.18865

Thanks to my coauthors @pbloem.sigmoid.social.ap.brid.gy, @ilia-markov.bsky.social and Piek Vossen

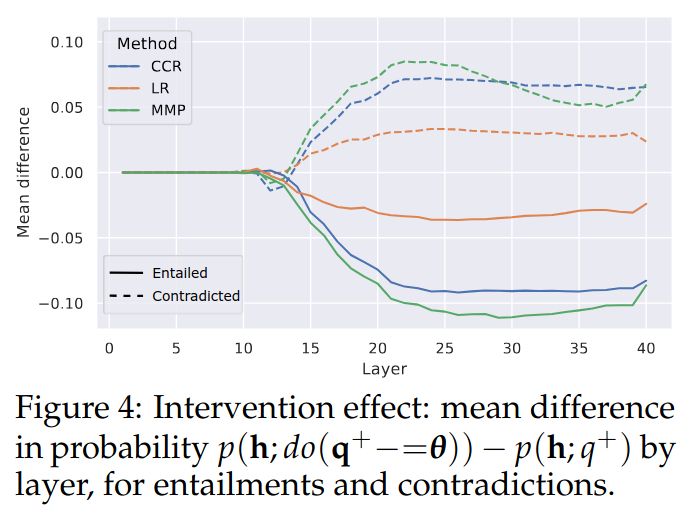

Finally, when intervening on hidden states, we find that the truth-value directions identified are causal mediators in the inference process.

14.07.2025 14:54 — 👍 0 🔁 0 💬 1 📌 0
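
To illustrate what intervening on a hidden state along a truth-value direction can look like, here is a minimal NumPy sketch. It is an assumption for illustration only: the post does not describe the intervention procedure, and `set_projection`, the vector size, and the random data are all hypothetical. In a real model the edited state would be written back into the forward pass and the downstream outputs compared, which is not shown here.

```python
import numpy as np


def set_projection(hidden, direction, target):
    """Shift `hidden` along `direction` so that its projection equals `target`.

    Components orthogonal to `direction` are left unchanged.
    Hypothetical helper, not taken from the paper.
    """
    hidden = np.asarray(hidden, dtype=float)
    unit = np.asarray(direction, dtype=float)
    unit = unit / np.linalg.norm(unit)
    current = hidden @ unit                       # projection before the edit
    shift = np.asarray(target - current)[..., None]
    return hidden + shift * unit


# Synthetic stand-ins for a probe direction and one hidden state (assumptions).
rng = np.random.default_rng(1)
direction = rng.normal(size=8)
direction /= np.linalg.norm(direction)
hidden = rng.normal(size=8)

# Push the state towards the "true" side of the direction.
edited = set_projection(hidden, direction, target=3.0)
print("projection before:", float(hidden @ direction))
print("projection after: ", float(edited @ direction))
```

If the direction is a causal mediator, an edit like this should change the model's downstream inferences and not only the probe's reading.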

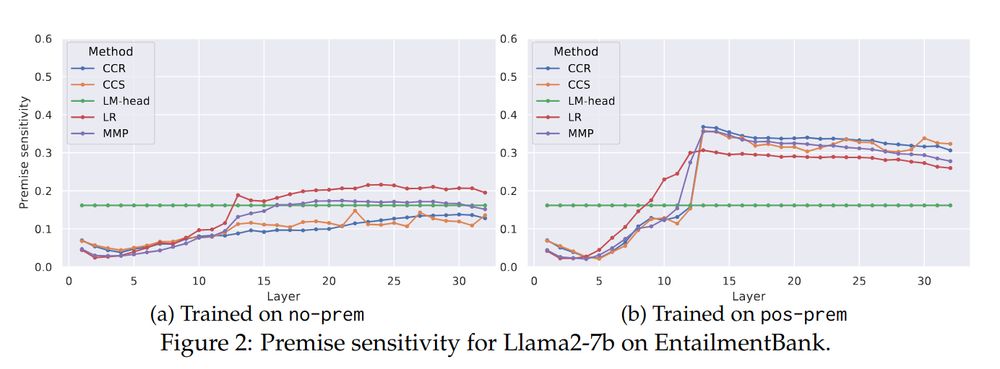

Even directions identified from single sentences show some sensitivity to the context, but sensitivity increases when probes are based on examples where sentences appear in inferential contexts.

14.07.2025 14:54 — 👍 0 🔁 0 💬 1 📌 0

Regardless of probing method and dataset, truth-value directions are found to be sensitive to context. However, we also find they are sensitive to the presence of irrelevant information.

14.07.2025 14:54 — 👍 0 🔁 0 💬 1 📌 0

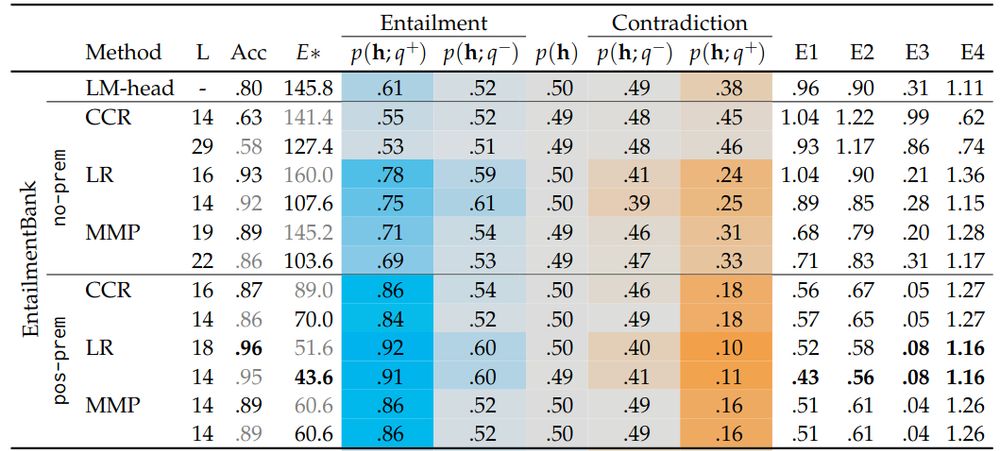

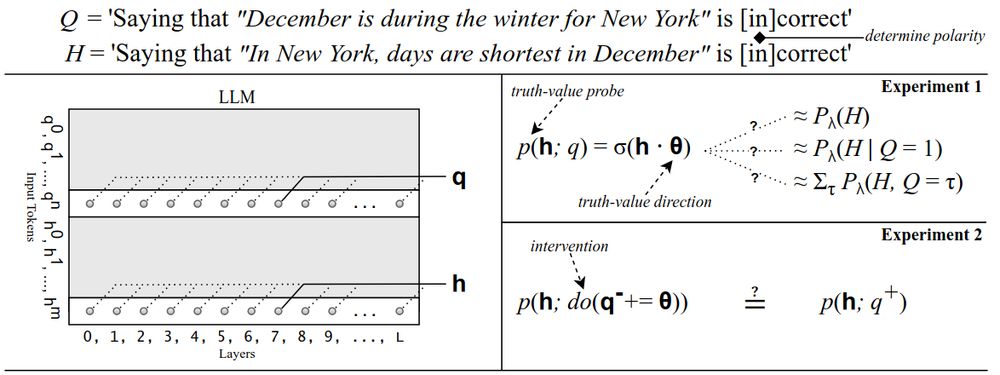

We use probing techniques that identify directions in the model's latent space which encode whether sentences are more or less likely to be true. By manipulating inputs and hidden states, we evaluate whether probabilities update in appropriate ways.

14.07.2025 14:54 — 👍 0 🔁 0 💬 1 📌 0
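
As a rough illustration of what a "direction that encodes truth" can mean, the sketch below fits a difference-of-means probe on synthetic vectors. This is an assumed, simplified stand-in: the post does not name the probing methods used, and the data, dimensions, and function names here are hypothetical.

```python
import numpy as np


def mean_difference_direction(hidden, labels):
    """Unit vector from the mean 'false' state to the mean 'true' state."""
    hidden = np.asarray(hidden, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    diff = hidden[labels].mean(axis=0) - hidden[~labels].mean(axis=0)
    return diff / np.linalg.norm(diff)


def truth_probability(hidden, direction, midpoint):
    """Logistic score of the projection onto `direction`, centred at `midpoint`."""
    projection = np.asarray(hidden, dtype=float) @ direction
    return 1.0 / (1.0 + np.exp(-(projection - midpoint)))


# Synthetic "hidden states": true and false sentences differ along axis 0 (assumption).
rng = np.random.default_rng(0)
dim = 16
labels = np.array([True, False] * 100)
offset = np.where(labels, 2.0, -2.0)[:, None] * np.eye(dim)[0]
hidden = rng.normal(size=(200, dim)) + offset

direction = mean_difference_direction(hidden, labels)
midpoint = float((hidden @ direction).mean())
probs = truth_probability(hidden, direction, midpoint)
accuracy = float(((probs > 0.5) == labels).mean())
print("probe accuracy on synthetic data:", accuracy)
```

With real models, `hidden` would be activations extracted at a chosen layer and token position, and the probe's output could be compared before and after changing the context a sentence appears in.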

Even truth-value directions identified from individual sentences still show some sensitivity to context, although the sensitivity increases when probes are based on sentences appearing in an inferential context.

14.07.2025 14:39 — 👍 0 🔁 0 💬 0 📌 0

Regardless of probing method and dataset, we find that models incorporate in-context information when assigning truth-values to sentences. However, we also find they are sensitive to irrelevant information.

14.07.2025 14:39 — 👍 0 🔁 0 💬 1 📌 0

We use probing techniques that identify directions in the model's latent space used to represent sentences as more or less likely to be true. By manipulating both inputs and hidden states, we test whether probabilities update as expected.

14.07.2025 14:39 — 👍 0 🔁 0 💬 1 📌 0